ORB-SLAM2 Source Code Reading (1): Overall System Construction

Back to work after the Spring Festival. Let's go! ヾ(◍°∇°◍)ノ゙

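ORB-SLAM2 is hugely popular in the SLAM community, and countless modified versions have been published on GitHub. Code this influential deserves a careful read. My brainpower is limited, so I can't get through the whole codebase in one go, and there will certainly be parts I don't understand or get wrong along the way. If I misread something, corrections are very welcome.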

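ORB-SLAM2 grew out of ORB-SLAM. Why is it so well known? Because it is complete: it supports monocular, stereo and RGB-D cameras. Even more importantly, it is open source and easy to install, and it ships demos for the major public datasets. As long as it compiles, you can quickly run it on TUM, KITTI, EuRoC and others, and your first reaction is: wow, SLAM is cool!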

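That said, fully digesting something this cool is usually not so cool, and can even be a bit painful. Reading code is not like reading a novel. You need clear logical thinking, and you need to understand what each line actually means. In short, it takes patience.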

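ORB-SLAM2 has a sizeable codebase, so it is best to grasp the overall structure first and then move on to the details. The code below comes from ORB-SLAM2's `System.cc`. It essentially builds the skeleton of the whole SLAM system.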

```cpp
// src/System.cc (excerpt)
#include "System.h"
#include "Converter.h"
#include <thread>
#include <pangolin/pangolin.h>
#include <iomanip>

namespace ORB_SLAM2
{

System::System(const string &strVocFile, const string &strSettingsFile, const eSensor sensor,
               const bool bUseViewer):mSensor(sensor), mpViewer(static_cast<Viewer*>(NULL)), mbReset(false),mbActivateLocalizationMode(false),
        mbDeactivateLocalizationMode(false)
{
    // Output welcome message
    cout << endl <<
    "ORB-SLAM2 Copyright (C) 2014-2016 Raul Mur-Artal, University of Zaragoza." << endl <<
    "This program comes with ABSOLUTELY NO WARRANTY;" << endl <<
    "This is free software, and you are welcome to redistribute it" << endl <<
    "under certain conditions. See LICENSE.txt." << endl << endl;

    cout << "Input sensor was set to: ";

    // This is where the system shows its completeness: monocular, stereo and RGB-D are all supported
    if(mSensor==MONOCULAR)
        cout << "Monocular" << endl;
    else if(mSensor==STEREO)
        cout << "Stereo" << endl;
    else if(mSensor==RGBD)
        cout << "RGB-D" << endl;

    //Check settings file
    // Read the settings file (an XXX.yaml file). If you have run ORB-SLAM2 you know it holds the
    // camera intrinsics, frame rate, baseline (stereo), depth threshold, the ORB extractor
    // parameters and the Viewer thread parameters
    cv::FileStorage fsSettings(strSettingsFile.c_str(), cv::FileStorage::READ);
    if(!fsSettings.isOpened())
    {
        cerr << "Failed to open settings file at: " << strSettingsFile << endl;
        exit(-1);
    }

    //Load ORB Vocabulary
    // Load the bag-of-words vocabulary (a .txt file)
    cout << endl << "Loading ORB Vocabulary. This could take a while..." << endl;

    mpVocabulary = new ORBVocabulary();
    bool bVocLoad = mpVocabulary->loadFromTextFile(strVocFile);
    if(!bVocLoad)
    {
        cerr << "Wrong path to vocabulary. " << endl;
        cerr << "Failed to open at: " << strVocFile << endl;
        exit(-1);
    }
    cout << "Vocabulary loaded!" << endl << endl;

    //Create KeyFrame Database
    mpKeyFrameDatabase = new KeyFrameDatabase(*mpVocabulary);

    //Create the Map
    mpMap = new Map();

    //Create Drawers. These are used by the Viewer
    mpFrameDrawer = new FrameDrawer(mpMap);
    mpMapDrawer = new MapDrawer(mpMap, strSettingsFile);

    //Initialize the Tracking thread
    //(it will live in the main thread of execution, the one that called this constructor)
    mpTracker = new Tracking(this, mpVocabulary, mpFrameDrawer, mpMapDrawer,
                             mpMap, mpKeyFrameDatabase, strSettingsFile, mSensor);

    //Initialize the Local Mapping thread and launch
    mpLocalMapper = new LocalMapping(mpMap, mSensor==MONOCULAR);
    mptLocalMapping = new thread(&ORB_SLAM2::LocalMapping::Run,mpLocalMapper);

    //Initialize the Loop Closing thread and launch
    mpLoopCloser = new LoopClosing(mpMap, mpKeyFrameDatabase, mpVocabulary, mSensor!=MONOCULAR);
    mptLoopClosing = new thread(&ORB_SLAM2::LoopClosing::Run, mpLoopCloser);

    //Initialize the Viewer thread and launch
    if(bUseViewer)
    {
        mpViewer = new Viewer(this, mpFrameDrawer,mpMapDrawer,mpTracker,strSettingsFile);
        mptViewer = new thread(&Viewer::Run, mpViewer);
        mpTracker->SetViewer(mpViewer);
    }

    //Set pointers between threads
    mpTracker->SetLocalMapper(mpLocalMapper);
    mpTracker->SetLoopClosing(mpLoopCloser);

    mpLocalMapper->SetTracker(mpTracker);
    mpLocalMapper->SetLoopCloser(mpLoopCloser);

    mpLoopCloser->SetTracker(mpTracker);
    mpLoopCloser->SetLocalMapper(mpLocalMapper);
}
```
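The constructor follows a clear pattern. Tracking gets no thread of its own and runs in the caller's thread. Local Mapping and Loop Closing are each started on their `Run` member function. After launch, every module is handed pointers to the others. The standalone sketch below models only that launch-and-wire step with the standard library. `MiniTracker`, `MiniMapper`, `MiniLoopCloser` and `MiniSystem` are hypothetical stand-ins, not ORB-SLAM2 types. The `std::atomic` finish flag exists only so the sketch can stop and join its threads.

```cpp
#include <atomic>
#include <chrono>
#include <thread>

struct MiniTracker;
struct MiniLoopCloser;

// Hypothetical stand-in for LocalMapping; Run() is the thread body.
struct MiniMapper {
    MiniTracker* tracker = nullptr;
    MiniLoopCloser* loopCloser = nullptr;
    std::atomic<bool> finishRequested{false};
    std::atomic<int> loops{0};
    void Run() {
        // Placeholder work loop; it never reads the pointers, which are set after launch.
        while (!finishRequested) {
            ++loops;
            std::this_thread::sleep_for(std::chrono::milliseconds(1));
        }
    }
};

// Hypothetical stand-in for LoopClosing.
struct MiniLoopCloser {
    MiniTracker* tracker = nullptr;
    MiniMapper* mapper = nullptr;
    std::atomic<bool> finishRequested{false};
    void Run() {
        while (!finishRequested)
            std::this_thread::sleep_for(std::chrono::milliseconds(1));
    }
};

// Hypothetical stand-in for Tracking: no thread of its own.
struct MiniTracker {
    MiniMapper* mapper = nullptr;
    MiniLoopCloser* loopCloser = nullptr;
};

struct MiniSystem {
    MiniTracker tracker;  // lives in the thread that constructs the system
    MiniMapper mapper;
    MiniLoopCloser loopCloser;
    std::thread mapperThread, loopThread;

    MiniSystem() {
        // Launch workers on member functions, like new thread(&LocalMapping::Run, mpLocalMapper)
        mapperThread = std::thread(&MiniMapper::Run, &mapper);
        loopThread = std::thread(&MiniLoopCloser::Run, &loopCloser);

        // "Set pointers between threads": every module can reach the others
        tracker.mapper = &mapper;
        tracker.loopCloser = &loopCloser;
        mapper.tracker = &tracker;
        mapper.loopCloser = &loopCloser;
        loopCloser.tracker = &tracker;
        loopCloser.mapper = &mapper;
    }

    ~MiniSystem() {
        mapper.finishRequested = true;
        loopCloser.finishRequested = true;
        mapperThread.join();
        loopThread.join();
    }
};
```

The pointers are wired only after the threads are already running. Any worker body that used them immediately would race with the constructor. In this sketch the `Run` loops never touch them.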

```cpp
// Entry point when the system is configured for a stereo camera
cv::Mat System::TrackStereo(const cv::Mat &imLeft, const cv::Mat &imRight, const double &timestamp)
{
    if(mSensor!=STEREO)
    {
        cerr << "ERROR: you called TrackStereo but input sensor was not set to STEREO." << endl;
        exit(-1);
    }

    // Check mode change
    {
        unique_lock<mutex> lock(mMutexMode);
        if(mbActivateLocalizationMode)
        {
            mpLocalMapper->RequestStop();

            // Wait until Local Mapping has effectively stopped
            while(!mpLocalMapper->isStopped())
            {
                usleep(1000);
            }

            mpTracker->InformOnlyTracking(true);
            mbActivateLocalizationMode = false;
        }
        if(mbDeactivateLocalizationMode)
        {
            mpTracker->InformOnlyTracking(false);
            mpLocalMapper->Release();
            mbDeactivateLocalizationMode = false;
        }
    }

    // Check reset
    {
        unique_lock<mutex> lock(mMutexReset);
        if(mbReset)
        {
            mpTracker->Reset();
            mbReset = false;
        }
    }

    cv::Mat Tcw = mpTracker->GrabImageStereo(imLeft,imRight,timestamp);

    unique_lock<mutex> lock2(mMutexState);
    mTrackingState = mpTracker->mState;
    mTrackedMapPoints = mpTracker->mCurrentFrame.mvpMapPoints;
    mTrackedKeyPointsUn = mpTracker->mCurrentFrame.mvKeysUn;
    return Tcw;
}
```

```cpp
// Entry point when the system is configured for an RGB-D (depth) camera
cv::Mat System::TrackRGBD(const cv::Mat &im, const cv::Mat &depthmap, const double &timestamp)
{
    if(mSensor!=RGBD)
    {
        cerr << "ERROR: you called TrackRGBD but input sensor was not set to RGBD." << endl;
        exit(-1);
    }

    // Check mode change
    {
        unique_lock<mutex> lock(mMutexMode);
        if(mbActivateLocalizationMode)
        {
            mpLocalMapper->RequestStop();

            // Wait until Local Mapping has effectively stopped
            while(!mpLocalMapper->isStopped())
            {
                usleep(1000);
            }

            mpTracker->InformOnlyTracking(true);
            mbActivateLocalizationMode = false;
        }
        if(mbDeactivateLocalizationMode)
        {
            mpTracker->InformOnlyTracking(false);
            mpLocalMapper->Release();
            mbDeactivateLocalizationMode = false;
        }
    }

    // Check reset
    {
        unique_lock<mutex> lock(mMutexReset);
        if(mbReset)
        {
            mpTracker->Reset();
            mbReset = false;
        }
    }

    cv::Mat Tcw = mpTracker->GrabImageRGBD(im,depthmap,timestamp);

    unique_lock<mutex> lock2(mMutexState);
    mTrackingState = mpTracker->mState;
    mTrackedMapPoints = mpTracker->mCurrentFrame.mvpMapPoints;
    mTrackedKeyPointsUn = mpTracker->mCurrentFrame.mvKeysUn;
    return Tcw;
}
```

```cpp
// Entry point when the system is configured for a monocular camera
cv::Mat System::TrackMonocular(const cv::Mat &im, const double &timestamp)
{
    if(mSensor!=MONOCULAR)
    {
        cerr << "ERROR: you called TrackMonocular but input sensor was not set to Monocular." << endl;
        exit(-1);
    }

    // Check mode change
    {
        unique_lock<mutex> lock(mMutexMode);
        if(mbActivateLocalizationMode)
        {
            mpLocalMapper->RequestStop();

            // Wait until Local Mapping has effectively stopped
            while(!mpLocalMapper->isStopped())
            {
                usleep(1000);
            }

            mpTracker->InformOnlyTracking(true);
            mbActivateLocalizationMode = false;
        }
        if(mbDeactivateLocalizationMode)
        {
            mpTracker->InformOnlyTracking(false);
            mpLocalMapper->Release();
            mbDeactivateLocalizationMode = false;
        }
    }

    // Check reset
    {
        unique_lock<mutex> lock(mMutexReset);
        if(mbReset)
        {
            mpTracker->Reset();
            mbReset = false;
        }
    }

    cv::Mat Tcw = mpTracker->GrabImageMonocular(im,timestamp);

    unique_lock<mutex> lock2(mMutexState);
    mTrackingState = mpTracker->mState;
    mTrackedMapPoints = mpTracker->mCurrentFrame.mvpMapPoints;
    mTrackedKeyPointsUn = mpTracker->mCurrentFrame.mvKeysUn;
    return Tcw;
}
```

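```cpp
// Activate localization-only mode (the next Track* call pauses Local Mapping)
void System::ActivateLocalizationMode()
{
    unique_lock<mutex> lock(mMutexMode);
    mbActivateLocalizationMode = true;
}

// Deactivate localization-only mode (the next Track* call releases Local Mapping)
void System::DeactivateLocalizationMode()
{
    unique_lock<mutex> lock(mMutexMode);
    mbDeactivateLocalizationMode = true;
}
```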
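Switching to localization mode is a two-step handshake. `ActivateLocalizationMode` only raises a flag under `mMutexMode`. The next `Track*` call does the real work: it asks Local Mapping to stop, polls `isStopped()` every 1 ms, and then tells Tracking to stop mapping. Below is a minimal standalone sketch of that handshake. `FakeLocalMapper` and `ModeSwitcher` are hypothetical. The method names mirror the excerpt, but their bodies are assumptions made for illustration.

```cpp
#include <atomic>
#include <chrono>
#include <mutex>
#include <thread>

// Hypothetical Local Mapping stand-in: pauses on RequestStop(), resumes on Release().
class FakeLocalMapper {
public:
    void Run() {
        while (!finish_) {
            if (stopRequested_) {
                stopped_ = true;              // acknowledge the stop request
                while (stopped_ && !finish_)  // stay paused until Release()
                    std::this_thread::sleep_for(std::chrono::milliseconds(1));
            }
            std::this_thread::sleep_for(std::chrono::milliseconds(1));
        }
    }
    void RequestStop() { stopRequested_ = true; }
    bool isStopped() const { return stopped_; }
    void Release() { stopRequested_ = false; stopped_ = false; }
    void RequestFinish() { finish_ = true; }
private:
    std::atomic<bool> stopRequested_{false}, stopped_{false}, finish_{false};
};

class ModeSwitcher {
public:
    explicit ModeSwitcher(FakeLocalMapper* mapper) : mapper_(mapper) {}

    // May be called from any thread (e.g. a GUI button): only raises a flag.
    void ActivateLocalizationMode() { std::lock_guard<std::mutex> lk(mutexMode_); activate_ = true; }
    void DeactivateLocalizationMode() { std::lock_guard<std::mutex> lk(mutexMode_); deactivate_ = true; }

    // Called at the start of each Track* call: applies any pending mode change.
    void CheckModeChange() {
        std::lock_guard<std::mutex> lk(mutexMode_);
        if (activate_) {
            mapper_->RequestStop();
            while (!mapper_->isStopped())  // wait until mapping has effectively stopped
                std::this_thread::sleep_for(std::chrono::milliseconds(1));
            onlyTracking_ = true;          // stands in for InformOnlyTracking(true)
            activate_ = false;
        }
        if (deactivate_) {
            onlyTracking_ = false;         // stands in for InformOnlyTracking(false)
            mapper_->Release();
            deactivate_ = false;
        }
    }
    bool onlyTracking() const { return onlyTracking_; }

private:
    FakeLocalMapper* mapper_;
    std::mutex mutexMode_;
    bool activate_ = false, deactivate_ = false, onlyTracking_ = false;
};
```

Deferring the switch to the tracking thread means the mode never changes in the middle of processing a frame. The cost is that the caller of `ActivateLocalizationMode` gets no confirmation until the next frame arrives.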

```cpp
// Has the map undergone a big change since the last call?
bool System::MapChanged()
{
    static int n=0;
    int curn = mpMap->GetLastBigChangeIdx();
    if(n<curn)
    {
        n=curn;
        return true;
    }
    else
        return false;
}
```
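`MapChanged` compares the map's big-change counter (`GetLastBigChangeIdx`) with the last value it saw. A change is therefore reported once, and later calls return false until the counter moves again. Because `n` is a function-local `static`, that "already seen" state is shared by every caller and every `System` instance. The sketch below keeps the same comparison but stores the last seen index as a member instead. `FakeMap` and its `InformNewBigChange` method are hypothetical stand-ins for the map side.

```cpp
#include <mutex>

// Hypothetical map stand-in: a mutex-protected counter of "big changes".
class FakeMap {
public:
    void InformNewBigChange() { std::lock_guard<std::mutex> lk(m_); ++bigChangeIdx_; }
    int GetLastBigChangeIdx() { std::lock_guard<std::mutex> lk(m_); return bigChangeIdx_; }
private:
    std::mutex m_;
    int bigChangeIdx_ = 0;
};

// Same comparison as System::MapChanged, but each watcher keeps its own "last seen"
// index, so two callers do not consume each other's notifications.
class MapChangeWatcher {
public:
    explicit MapChangeWatcher(FakeMap& map) : map_(map) {}
    bool MapChanged() {
        int curn = map_.GetLastBigChangeIdx();
        if (lastSeen_ < curn) {
            lastSeen_ = curn;
            return true;
        }
        return false;
    }
private:
    FakeMap& map_;
    int lastSeen_ = 0;
};
```

With the original `static`, only the first caller would see `true` after a change. With a member, each watcher (`a` and `b` in the test) gets its own notification.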

```cpp
// Reset the system: only sets a flag; the next Track* call performs the reset
void System::Reset()
{
    unique_lock<mutex> lock(mMutexReset);
    mbReset = true;
}
```

```cpp
// Shut down the whole system
void System::Shutdown()
{
    mpLocalMapper->RequestFinish();
    mpLoopCloser->RequestFinish();
    if(mpViewer)
    {
        mpViewer->RequestFinish();
        while(!mpViewer->isFinished())
            usleep(5000);
    }

    // Wait until all threads have effectively stopped
    while(!mpLocalMapper->isFinished() || !mpLoopCloser->isFinished() || mpLoopCloser->isRunningGBA())
    {
        usleep(5000);
    }

    if(mpViewer)
        pangolin::BindToContext("ORB-SLAM2: Map Viewer");
}
```
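`Shutdown` does not join any thread. It asks every module to finish, then polls every 5 ms until all of them report `isFinished()`. It also keeps waiting while Loop Closing still reports a running global BA (`isRunningGBA()`). A minimal sketch of that request-then-poll protocol follows. `Worker` is a hypothetical module. The `gbaRunning` flag, cleared by a separate thread in the test, is an assumed model of a global BA that outlives the finish request.

```cpp
#include <atomic>
#include <chrono>
#include <thread>

// Hypothetical module: loops until RequestFinish(), then reports that it has finished.
class Worker {
public:
    void Run() {
        while (!finishRequested_)
            std::this_thread::sleep_for(std::chrono::milliseconds(1));
        finished_ = true;  // acknowledge: the thread body is about to return
    }
    void RequestFinish() { finishRequested_ = true; }
    bool isFinished() const { return finished_; }
private:
    std::atomic<bool> finishRequested_{false}, finished_{false};
};

// Mirrors the order in System::Shutdown: request finish from every module first,
// then poll every 5 ms until all have finished and no global BA is still running.
void Shutdown(Worker& mapper, Worker& loopCloser, const std::atomic<bool>& gbaRunning) {
    mapper.RequestFinish();
    loopCloser.RequestFinish();
    while (!mapper.isFinished() || !loopCloser.isFinished() || gbaRunning)
        std::this_thread::sleep_for(std::chrono::milliseconds(5));
}
```

All finish requests go out before any waiting starts. This lets the modules wind down in parallel instead of one after another.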

```cpp
// When running on a TUM dataset, save the trajectory
void System::SaveTrajectoryTUM(const string &filename)
{
    cout << endl << "Saving camera trajectory to " << filename << " ..." << endl;
    if(mSensor==MONOCULAR)
    {
        cerr << "ERROR: SaveTrajectoryTUM cannot be used for monocular." << endl;
        return;
    }

    vector<KeyFrame*> vpKFs = mpMap->GetAllKeyFrames();
    sort(vpKFs.begin(),vpKFs.end(),KeyFrame::lId);

    ofstream f;
    f.open(filename.c_str());
    f << fixed;

    for(size_t i=0; i<vpKFs.size(); i++)
    {
        KeyFrame* pKF = vpKFs[i];

        // pKF->SetPose(pKF->GetPose()*Two);

        if(pKF->isBad())
            continue;

        cv::Mat R = pKF->GetRotation().t();
        vector<float> q = Converter::toQuaternion(R);
        cv::Mat t = pKF->GetCameraCenter();
        f << setprecision(6) << pKF->mTimeStamp << setprecision(7) << " " << t.at<float>(0) << " " << t.at<float>(1) << " " << t.at<float>(2)
          << " " << q[0] << " " << q[1] << " " << q[2] << " " << q[3] << endl;
    }

    f.close();
    cout << endl << "trajectory saved!" << endl;
}
```
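Each line written above follows the TUM RGB-D trajectory format `timestamp tx ty tz qx qy qz qw`. Here `t` is the camera center in world coordinates, `Ow = -Rcw^T * tcw`, and `q` encodes the camera-to-world rotation `R = Rcw^T`.

The sketch below recomputes one such line from a hypothetical world-to-camera pose `(Rcw, tcw)`, using plain arrays instead of `cv::Mat`. `CameraCenter`, `ToQuaternion` and `TumLine` are illustrative helpers, not ORB-SLAM2 functions. The quaternion routine is a standard trace-based conversion, not `Converter::toQuaternion` (which goes through Eigen). It uses the same x, y, z, w output order. `%.6f`/`%.7f` match `setprecision(6)`/`setprecision(7)` on a stream in `std::fixed` mode.

```cpp
#include <array>
#include <cmath>
#include <cstdio>
#include <string>

using Mat3 = std::array<std::array<double, 3>, 3>;
using Vec3 = std::array<double, 3>;

Mat3 Transpose(const Mat3& m) {
    Mat3 t{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) t[i][j] = m[j][i];
    return t;
}

// Camera center in world coordinates: Ow = -Rcw^T * tcw
Vec3 CameraCenter(const Mat3& Rcw, const Vec3& tcw) {
    Mat3 Rwc = Transpose(Rcw);
    Vec3 c{};
    for (int i = 0; i < 3; ++i)
        c[i] = -(Rwc[i][0] * tcw[0] + Rwc[i][1] * tcw[1] + Rwc[i][2] * tcw[2]);
    return c;
}

// Rotation matrix -> unit quaternion {x, y, z, w}, branching on the largest diagonal term.
std::array<double, 4> ToQuaternion(const Mat3& R) {
    double x, y, z, w;
    double tr = R[0][0] + R[1][1] + R[2][2];
    if (tr > 0) {
        double s = 2.0 * std::sqrt(tr + 1.0);  // s = 4w
        w = 0.25 * s;
        x = (R[2][1] - R[1][2]) / s;
        y = (R[0][2] - R[2][0]) / s;
        z = (R[1][0] - R[0][1]) / s;
    } else if (R[0][0] > R[1][1] && R[0][0] > R[2][2]) {
        double s = 2.0 * std::sqrt(1.0 + R[0][0] - R[1][1] - R[2][2]);  // s = 4x
        w = (R[2][1] - R[1][2]) / s;
        x = 0.25 * s;
        y = (R[0][1] + R[1][0]) / s;
        z = (R[0][2] + R[2][0]) / s;
    } else if (R[1][1] > R[2][2]) {
        double s = 2.0 * std::sqrt(1.0 + R[1][1] - R[0][0] - R[2][2]);  // s = 4y
        w = (R[0][2] - R[2][0]) / s;
        x = (R[0][1] + R[1][0]) / s;
        y = 0.25 * s;
        z = (R[1][2] + R[2][1]) / s;
    } else {
        double s = 2.0 * std::sqrt(1.0 + R[2][2] - R[0][0] - R[1][1]);  // s = 4z
        w = (R[1][0] - R[0][1]) / s;
        x = (R[0][2] + R[2][0]) / s;
        y = (R[1][2] + R[2][1]) / s;
        z = 0.25 * s;
    }
    return {x, y, z, w};
}

// One TUM line: "timestamp tx ty tz qx qy qz qw"
std::string TumLine(double timestamp, const Mat3& Rcw, const Vec3& tcw) {
    Vec3 t = CameraCenter(Rcw, tcw);
    std::array<double, 4> q = ToQuaternion(Transpose(Rcw));  // R = Rcw^T (camera-to-world)
    char buf[256];
    std::snprintf(buf, sizeof(buf), "%.6f %.7f %.7f %.7f %.7f %.7f %.7f %.7f",
                  timestamp, t[0], t[1], t[2], q[0], q[1], q[2], q[3]);
    return buf;
}
```

For example, an identity rotation with `tcw = (1, 2, 3)` places the camera center at `(-1, -2, -3)`, with quaternion `(0, 0, 0, 1)`.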

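```cpp
// When running on a KITTI dataset, save the trajectory
void System::SaveTrajectoryKITTI(const string &filename)
{
    cout << endl << "Saving camera trajectory to " << filename << " ..." << endl;
    if(mSensor==MONOCULAR)
    {
        cerr << "ERROR: SaveTrajectoryKITTI cannot be used for monocular." << endl;
        return;
    }

    // ...
}

int System::GetTrackingState()
{
    unique_lock<mutex> lock(mMutexState);
    return mTrackingState;
}

vector<MapPoint*> System::GetTrackedMapPoints()
{
    unique_lock<mutex> lock(mMutexState);
    return mTrackedMapPoints;
}

vector<cv::KeyPoint> System::GetTrackedKeyPointsUn()
{
    unique_lock<mutex> lock(mMutexState);
    return mTrackedKeyPointsUn;
}

} //namespace ORB_SLAM
```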

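The code above shows that each sensor type has its own entry point (`TrackStereo`, `TrackRGBD`, `TrackMonocular`). Each one exits with an error if the system was configured for a different sensor. The code also shows how the system is split into threads: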

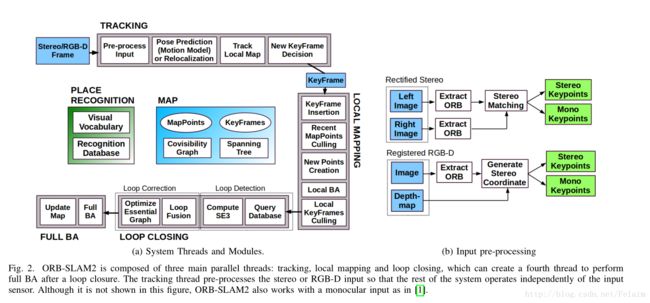

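(1) Main thread: the Tracking thread runs in the main thread, the one that called the `System` constructor. It extracts ORB features from each image and estimates the camera pose from the previous frame, or initializes the pose through global relocalization. It then tracks the already reconstructed local map to refine the pose. Finally, it decides according to a set of rules whether a new keyframe should be created.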

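(2) Local Mapping thread: builds the local map. It inserts keyframes, checks the recently created map points and culls the bad ones, and creates new map points. It then runs local bundle adjustment (Local BA). Finally, it checks the inserted keyframes and removes redundant ones.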

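(3) Loop Closing thread: works in two stages, loop detection and loop correction. Loop detection first finds candidates with the bag-of-words (BoW) model and then computes a similarity transform with the Sim3 algorithm. Loop correction consists of loop fusion followed by graph optimization over the Essential Graph.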

(4) Viewer thread: visualizes the estimated poses and feature points.

References:

http://blog.csdn.net/u010128736/article/details/53157605