hive_取两次记录的时间差lead/lag/first_value/last_value函数使用 及用户在各个页面停留时间计算示例

这几个函数不支持WINDOW子句

1) LEAD(col,n,DEFAULT) 用于统计窗口内往下第n行值

-- 第一个参数为列名,第二个参数为往下第n行(可选,默认为1),第三个参数为默认值(当往下第n行为NULL时候,取默认值,如不指定,则为NULL)

2)LAG(col,n,DEFAULT) 用于统计窗口内往上第n行值

-- 第一个参数为列名,第二个参数为往上第n行(可选,默认为1),第三个参数为默认值(当往上第n行为NULL时候,取默认值,如不指定,则为NULL)

3)FIRST_VALUE 取分组内排序后,截止到当前行,第一个值

4)LAST_VALUE 取分组内排序后,截止到当前行,最后一个值

--使用denmo1

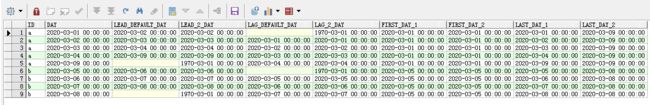

select

t2.id

,t2.day

,t2.lead_default_day

,t2.lead_2_day

,t2.lag_default_day

,t2.lag_2_day

,t2.first_day_1

,t2.first_day_2

,t2.last_day_1

,t2.last_day_2

,(unix_timestamp(t2.lead_default_day)-unix_timestamp(t2.day))/3600 as diff_hour

from (

select

t1.id

,t1.day

,lead(t1.day) over(partition by t1.id order by t1.day) as lead_default_day

,lead(t1.day,1,'1970-03-01 00:00:00') over(partition by t1.id order by t1.day) as lead_2_day

,lag(t1.day) over(partition by t1.id order by t1.day) as lag_default_day

,lag(t1.day,1,'1970-03-01 00:00:00') over(partition by t1.id order by t1.day) as lag_2_day

,first_value(t1.day) over(partition by t1.id order by t1.day) as first_day_1

,first_value(t1.day) over(partition by t1.id) as first_day_2

,last_value(t1.day) over(partition by t1.id order by t1.day) as last_day_1

,last_value(t1.day) over(partition by t1.id) as last_day_2

from (

select 'a' as id, '2020-03-01 00:00:00' as day union all

select 'a' as id, '2020-03-09 00:00:00' as day union all

select 'a' as id, '2020-03-02 00:00:00' as day union all

select 'a' as id, '2020-03-03 00:00:00' as day union all

select 'a' as id, '2020-03-04 00:00:00' as day union all

select 'b' as id, '2020-03-08 00:00:00' as day union all

select 'b' as id, '2020-03-05 00:00:00' as day union all

select 'b' as id, '2020-03-06 00:00:00' as day union all

select 'b' as id, '2020-03-07 00:00:00' as day

) t1

) t2

;

场景 问题 示例

用户Peter在浏览网页,在某个时刻,Peter点进了某个页面,过一段时间后,Peter又进入了另外一个页面,如此反复,那怎么去统计Peter在某个特定网页的停留时间呢,又或是怎么统计某个网页用户停留的总时间呢?

现在用户的行为都被采集了,处理转换到hive数据表,表结构如下:

create table felix.user_log(

userid string,

time string,

url string

) row format delimited fields terminated by ',';

vi /home/hadoop/user_log.log

Peter,2015-10-12 01:10:00,url1

Peter,2015-10-12 01:15:10,url2

Peter,2015-10-12 01:16:40,url3

Peter,2015-10-12 02:13:00,url4

Peter,2015-10-12 03:14:30,url5

Marry,2015-11-12 01:10:00,url1

Marry,2015-11-12 01:15:10,url2

Marry,2015-11-12 01:16:40,url3

Marry,2015-11-12 02:13:00,url4

Marry,2015-11-12 03:14:30,url5

load data local inpath '/home/hadoop/user_log.log' overwrite into table felix.user_log;

hive (felix)> select * from user_log;

OK

Peter 2015-10-12 01:10:00 url1

Peter 2015-10-12 01:15:10 url2

Peter 2015-10-12 01:16:40 url3

Peter 2015-10-12 02:13:00 url4

Peter 2015-10-12 03:14:30 url5

Marry 2015-11-12 01:10:00 url1

Marry 2015-11-12 01:15:10 url2

Marry 2015-11-12 01:16:40 url3

Marry 2015-11-12 02:13:00 url4

Marry 2015-11-12 03:14:30 url5

Time taken: 0.141 seconds, Fetched: 10 row(s)

处理步骤:

1)获取用户在某个页面停留的起始与结束时间

select userid,

time stime,

lead(time) over(partition by userid order by time) etime,

url

from felix.user_log;

OK

userid stime etime url

Marry 2015-11-12 01:10:00 2015-11-12 01:15:10 url1

Marry 2015-11-12 01:15:10 2015-11-12 01:16:40 url2

Marry 2015-11-12 01:16:40 2015-11-12 02:13:00 url3

Marry 2015-11-12 02:13:00 2015-11-12 03:14:30 url4

Marry 2015-11-12 03:14:30 NULL url5

Peter 2015-10-12 01:10:00 2015-10-12 01:15:10 url1

Peter 2015-10-12 01:15:10 2015-10-12 01:16:40 url2

Peter 2015-10-12 01:16:40 2015-10-12 02:13:00 url3

Peter 2015-10-12 02:13:00 2015-10-12 03:14:30 url4

Peter 2015-10-12 03:14:30 NULL url5

Time taken: 22.907 seconds, Fetched: 10 row(s)

hive (felix)>

计算用户在页面停留的时间间隔(实际分析当中,这里要做数据清洗工作,如果一个用户停留了4、5个小时,那这条记录肯定是不可取的。)

select userid,

time stime,

lead(time) over(partition by userid order by time) etime,

UNIX_TIMESTAMP(lead(time) over(partition by userid order by time),'yyyy-MM-dd HH:mm:ss')- UNIX_TIMESTAMP(time,'yyyy-MM-dd HH:mm:ss') period,

url

from felix.user_log;

userid stime etime period url

Marry 2015-11-12 01:10:00 2015-11-12 01:15:10 310 url1

Marry 2015-11-12 01:15:10 2015-11-12 01:16:40 90 url2

Marry 2015-11-12 01:16:40 2015-11-12 02:13:00 3380 url3

Marry 2015-11-12 02:13:00 2015-11-12 03:14:30 3690 url4

Marry 2015-11-12 03:14:30 NULL NULL url5

Peter 2015-10-12 01:10:00 2015-10-12 01:15:10 310 url1

Peter 2015-10-12 01:15:10 2015-10-12 01:16:40 90 url2

Peter 2015-10-12 01:16:40 2015-10-12 02:13:00 3380 url3

Peter 2015-10-12 02:13:00 2015-10-12 03:14:30 3690 url4

Peter 2015-10-12 03:14:30 NULL NULL url5

Time taken: 23.934 seconds, Fetched: 10 row(s)

hive (felix)>

计算每个页面停留的总时间,某个用户访问某个页面的总时间

select nvl(url,'ALL') url,

nvl(userid,'ALL') userid,

sum(period) totol_peroid from (

select userid,

time stime,

lead(time) over(partition by userid order by time) etime,

UNIX_TIMESTAMP(lead(time) over(partition by userid order by time),'yyyy-MM-dd HH:mm:ss')- UNIX_TIMESTAMP(time,'yyyy-MM-dd HH:mm:ss') period,

url

from FELIX.user_log

) a group by url, userid with rollup;

url userid totol_peroid

ALL ALL 14940

url1 ALL 620

url1 Marry 310

url1 Peter 310

url2 ALL 180

url2 Marry 90

url2 Peter 90

url3 ALL 6760

url3 Marry 3380

url3 Peter 3380

url4 ALL 7380

url4 Marry 3690

url4 Peter 3690

url5 ALL NULL

url5 Marry NULL

url5 Peter NULL

Time taken: 50.659 seconds, Fetched: 16 row(s)

hive (felix)>