超简单的豆瓣电影Top前250影片基本信息爬取

记录一篇简单的爬虫经历~豆瓣电影250top_百度搜索https://www.baidu.com/s?word=%E8%B1%86%E7%93%A3%E7%94%B5%E5%BD%B1250top&tn=25017023_10_pg&lm=-1&ssl_s=1&ssl_c=ssl1_1721136aead

爬虫内容参考阿优乐扬的博客-CSDN博客https://blog.csdn.net/ayouleyang/article/details/96023950?

以下内容对小白白无敌友好

实现装备:Windows64,pycharm

为了爬虫安装的库有:requests, bs4, BeautifulSoup, xlwt(区分大小写)

代码参考的是上述博主的,使用中遇到了一些问题,下面我来简单说一下。

原博主代码(当然可能是我的电脑不奥利给的原因)

import re

import urllib.request

from bs4 import BeautifulSoup

import xlwt

urls = "https://movie.douban.com/top250"

html = urllib.request.urlopen(urls).read()

soup = BeautifulSoup(html, "html.parser")

all_page=[]

print(u'网站名称:', soup.title.string.replace("\n", ""))

第一个弹出错误提示:urllib.error.HTTPError: HTTP Error 418

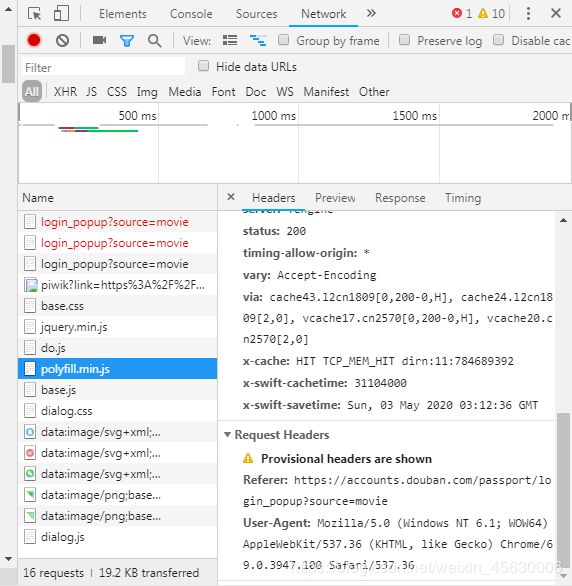

解决方法,headers一行要改成自己计算机在网址中的User-Agent,也就是User-Agent有很种,一定要匹配自己的,查询Headers步骤如下(适合按F12查询无效者):打开任意网址,点开右上角的菜单选项,选择开发者工具,选择network,任意点开网站的连接,在network上会显示一些文件,点开任意文件右边会显示Headers,User-Agent,复制后注意引号与空格。

第二个错误行:html = urllib.request.urlopen(urls).read(),在这一行里面urlopen()很不给力,一直提示错误,修改后代码 html = requests.get(urls,headers = headers )

第三个错误提示:object of type ‘Response’ has no len(),错误行为:soup = BeautifulSoup(html, “html.parser”),错误原因:html是requests对象,无法使用Beautiful解析,需要在html后面接content。解决后代码:soup = BeautifulSoup(html.content , “html.parser”)。

然后下面是卑微的我修改后的代码,然后就可以爬出来啦~

urls = "https://movie.douban.com/top250"

headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3947.100 Safari/537.36'}

html = requests.get(urls,headers = headers )

soup = BeautifulSoup(html.content , "html.parser")

all_page = []

print(u'网站名称:', soup.title.string.replace("\n", ""))

# 定位代码范围、爬虫部分

def part(url):

html = requests.get(urls,headers = headers )

soup = BeautifulSoup(html.content , "html.parser")

for tag in soup.find_all(attrs={"class": "item"}):

try:

ta = tag.find('em').string

# print(u'豆瓣排名:', ta)

name = tag.find('span').string

# print(u'中文名称:', name)

English = tag.find_all('span')[1].get_text()

yuanName = English.split('/')[1]

# print(u'原名称:', yuanName)

country = tag.p.get_text().split('/')[-2]

# print(u'国家:', country)

time = tag.p.get_text().split('\n')[2].split('/')[0].replace(" ", "")

# print(u'上映时间:', time)

Leixing = tag.p.get_text().split('\n')[2].split('/')[-1]

# print(u'类型:', Leixing)

# 电影评分

for peo in tag.find_all(attrs={"class": "star"}):

valuation = peo.find_all('span')[-1].get_text()

regex = re.compile(r"\d+\.?\d*")

people = regex.findall(valuation)[0]

# print(u'评价人数:',people)

# 导演及主演

join = tag.p.next_element # p的后节点

role = join.replace("\n", "").replace(" ", "")

# print(role)

introduce = tag.a.get('href')

# print(u'剧情简介:', introduce)

img = tag.img.get('src')

# print(u'图片地址:',img)

lab = tag.find_all(attrs={"class": "other"})

lable = lab[0].get_text()

# print(u'影片标签:', lable)

# print()

page = [ta, name, yuanName, country, time, Leixing, sore, people, role, introduce, img, lable]

all_page.append(page)

# print(all_page)

except IndexError:

pass

if __name__ == '__main__':

i = 0

while i < 10:

print(u'网页当前位置:', (i + 1))

num = i * 25

url = 'https://movie.douban.com/top250?start=' + str(num) + '&filter='

part(url)

book = xlwt.Workbook(encoding='utf-8')

sheet = book.add_sheet('电影排名表')

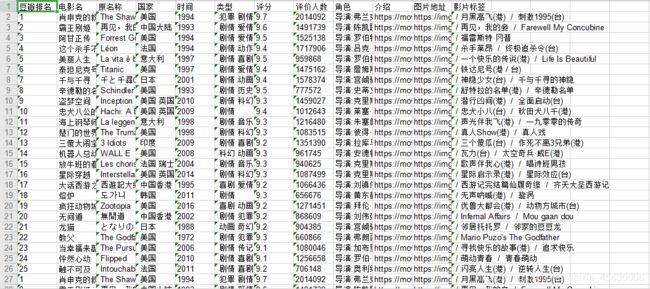

head = ['豆瓣排名', '电影名', '原名称', '国家', '时间', '类型', '评分', '评价人数', '角色', '介绍', '图片地址', '影片标签']

for h in range(len(head)):

sheet.write(0, h, head[h])

j = 1

for list in all_page:

k = 0

for data in list:

# print(data)

sheet.write(j, k, data)

k = k + 1

j += 1

book.save('豆瓣电影top250.xls')

i = i + 1