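Choosing Random Forest Parameters

This post builds on two earlier related articles:

Regression with a random forest (data preprocessing, MAPE evaluation, visualization, feature importance, plots of predicted vs. actual values)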

https://blog.csdn.net/qq_40229367/article/details/88526749

How data and features affect a random forest (feature comparison, dimensionality reduction, weighing cost against benefit)

https://blog.csdn.net/qq_40229367/article/details/88528421

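This post looks at how the choice of random forest parameters affects the results.

The work here uses the data left after the earlier step of keeping only the two most important features.

First, let's see which parameters a random forest has.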

from pprint import pprint

from sklearn.ensemble import RandomForestRegressor

rf = RandomForestRegressor(random_state=42)

# Look at the parameters used by our current forest
print('Parameters currently in use:\n')
pprint(rf.get_params())

Output (from the scikit-learn version used at the time; newer releases report different defaults, for example 'n_estimators': 100):

Parameters currently in use:

{'bootstrap': True,
 'criterion': 'mse',
 'max_depth': None,
 'max_features': 'auto',
 'max_leaf_nodes': None,
 'min_impurity_decrease': 0.0,
 'min_impurity_split': None,
 'min_samples_leaf': 1,
 'min_samples_split': 2,
 'min_weight_fraction_leaf': 0.0,
 'n_estimators': 10,
 'n_jobs': 1,
 'oob_score': False,
 'random_state': 42,
 'verbose': 0,
 'warm_start': False}

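The most commonly tuned ones are n_estimators (the number of trees to build) and max_depth (the maximum depth of each tree).

For a more detailed explanation, see the sklearn API documentation: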

https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.RandomForestRegressor.html#sklearn.ensemble.RandomForestRegressor

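Next we need to try out combinations of parameters.

For that, we need RandomizedSearchCV and GridSearchCV from sklearn.model_selection.

RandomizedSearchCV randomly samples parameter values from the given ranges, tries a specified number of combinations, and returns the best one.

GridSearchCV tries every combination of the given parameter values and returns the one that performs best.

CV stands for cross-validation: each candidate combination is scored by cross-validation, so parameter selection and cross-validation happen together.

A common workflow is to run RandomizedSearchCV first to find a region that is likely to contain a good solution, then use GridSearchCV to fine-tune within a narrow range around it.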
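To make the difference between the two strategies concrete, here is a minimal sketch using sklearn's ParameterGrid and ParameterSampler helpers, which enumerate candidates in the same way the two search classes do. The toy search space and its values are assumptions chosen only for illustration; they are not the grids used in this post.

```python
from sklearn.model_selection import ParameterGrid, ParameterSampler

# Toy search space (hypothetical values, for illustration only)
space = {
    'n_estimators': [100, 200, 300],
    'max_depth': [10, 20, None],
    'bootstrap': [True, False],
}

# Grid search enumerates every combination: 3 * 3 * 2 = 18 candidates
grid_candidates = list(ParameterGrid(space))

# Random search draws a fixed number of combinations from the same space
random_candidates = list(ParameterSampler(space, n_iter=5, random_state=42))

print(len(grid_candidates))    # 18
print(len(random_candidates))  # 5
```

The grid grows multiplicatively with every added parameter, while the random sample stays at whatever n_iter is set to, which is why the random search is the cheaper first pass.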

RandomizedSearchCV

from sklearn.model_selection import RandomizedSearchCV

# Number of trees in random forest

n_estimators = [int(x) for x in np.linspace(start = 200, stop = 2000, num = 10)]

# Number of features to consider at every split

max_features = ['auto', 'sqrt']

# Maximum number of levels in tree

max_depth = [int(x) for x in np.linspace(10, 100, num = 10)]

max_depth.append(None)

# Minimum number of samples required to split a node

min_samples_split = [2, 5, 10]

# Minimum number of samples required at each leaf node

min_samples_leaf = [1, 2, 4]

# Method of selecting samples for training each tree

bootstrap = [True, False]

# Create the random grid

random_grid = {'n_estimators': n_estimators,

'max_features': max_features,

'max_depth': max_depth,

'min_samples_split': min_samples_split,

'min_samples_leaf': min_samples_leaf,

'bootstrap': bootstrap}

# Use the random grid to search for best hyperparameters

# First create the base model to tune

rf = RandomForestRegressor()

# Random search of parameters, using 3 fold cross validation,

# search across 100 different combinations, and use all available cores

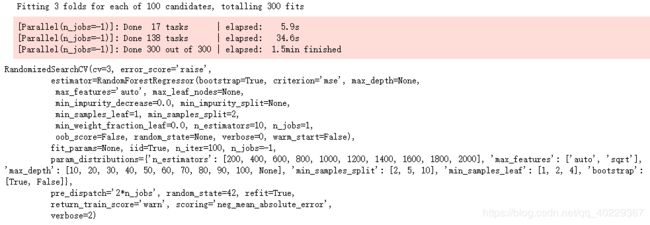

rf_random = RandomizedSearchCV(estimator=rf, param_distributions=random_grid,

n_iter = 100, scoring='neg_mean_absolute_error',

cv = 3, verbose=2, random_state=42, n_jobs=-1)

# Fit the random search model

rf_random.fit(train_features, train_labels)进行100次的参数组合看效果

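In total there are 300 fits: 100 combinations times 3 cross-validation folds. n_jobs=-1 means the fits run in parallel on all available CPU cores, which uses more memory while the forests are being built.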
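The arithmetic behind those numbers can be checked with a few lines. This is only a sketch: the per-parameter counts are read off the random grid defined above, and the variable names are introduced here for illustration.

```python
import math

# Number of candidate values per parameter in random_grid above
sizes = {
    'n_estimators': 10,       # np.linspace(200, 2000, num=10)
    'max_features': 2,
    'max_depth': 11,          # 10 values plus None
    'min_samples_split': 3,
    'min_samples_leaf': 3,
    'bootstrap': 2,
}

total_combinations = math.prod(sizes.values())  # size of the full grid
n_iter, cv = 100, 3
total_fits = n_iter * cv                        # models actually trained
coverage = n_iter / total_combinations          # fraction of the grid sampled

print(total_combinations)  # 3960
print(total_fits)          # 300
print('{:.1%}'.format(coverage))
```

So the random search trains 300 models while looking at only a small fraction of the 3960 possible combinations; an exhaustive grid over the same space would need 3960 * 3 fits.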

Here is the best combination:

rf_random.best_params_

Output:

{'bootstrap': True,
 'max_depth': None,
 'max_features': 'auto',
 'min_samples_leaf': 4,
 'min_samples_split': 5,
 'n_estimators': 1000}

Now let's see whether tuning the parameters actually improved the results.

def evaluate(model, test_features, test_labels):
    # Predict, then report the mean absolute error and accuracy (100 - MAPE)
    predictions = model.predict(test_features)
    errors = abs(predictions - test_labels)
    mape = 100 * np.mean(errors / test_labels)
    accuracy = 100 - mape
    print('Model Performance')
    print('Average Error: {:0.4f} degrees.'.format(np.mean(errors)))
    print('Accuracy = {:0.2f}%.'.format(accuracy))

best_random = rf_random.best_estimator_
evaluate(best_random, test_features, test_labels)

Output:

Model Performance
Average Error: 3.7376 degrees.
Accuracy = 93.70%.
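To see what the accuracy figure means, here is the same MAPE calculation worked through by hand on two made-up data points. The values are invented for illustration and are not taken from the dataset used in this post.

```python
import numpy as np

test_labels = np.array([50.0, 80.0])   # made-up actual values
predictions = np.array([55.0, 76.0])   # made-up predictions

errors = np.abs(predictions - test_labels)   # absolute errors: 5 and 4
mape = 100 * np.mean(errors / test_labels)   # mean of 10% and 5%, about 7.5
accuracy = 100 - mape                        # about 92.5

print('Average Error: {:0.4f}'.format(np.mean(errors)))
print('Accuracy = {:0.2f}%.'.format(accuracy))
```

Because each error is divided by the actual value, this metric is undefined when a label is 0 and becomes unstable when labels are close to 0.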

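For comparison, here is the earlier model:

base_model = RandomForestRegressor(n_estimators=1000, random_state=42)
base_model.fit(train_features, train_labels)
evaluate(base_model, test_features, test_labels)

Output:

Model Performance
Average Error: 3.8210 degrees.
Accuracy = 93.56%.

Tuning did help: the average error fell from 3.8210 to 3.7376 degrees, and accuracy rose from 93.56% to 93.70%.

Next we fine-tune with GridSearchCV.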

from sklearn.model_selection import GridSearchCV

# Create the parameter grid based on the results of random search

param_grid = {

'bootstrap': [True],

'max_depth': [80, 90, 100, 110],

'max_features': [2, 3],

'min_samples_leaf': [3, 4, 5],

'min_samples_split': [8, 10, 12],

'n_estimators': [100, 200, 300, 1000]

}

# Create a based model

rf = RandomForestRegressor()

# Instantiate the grid search model

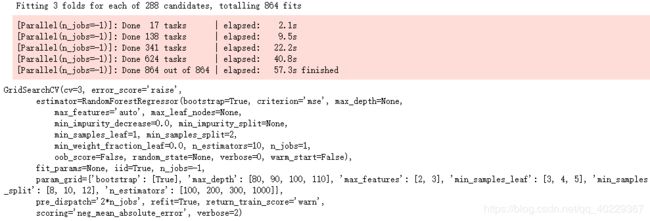

grid_search = GridSearchCV(estimator = rf, param_grid = param_grid,

scoring = 'neg_mean_absolute_error', cv = 3,

n_jobs = -1, verbose = 2)

# Fit the grid search to the data

grid_search.fit(train_features, train_labels)

grid_search.best_params_{'bootstrap': True,

'max_depth': 80,

'max_features': 3,

'min_samples_leaf': 4,

'min_samples_split': 10,

'n_estimators': 300}
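Both searches above use scoring='neg_mean_absolute_error'. sklearn treats every score as "higher is better", so the mean absolute error is negated, and the search's best_score_ attribute is zero or negative. The sketch below shows how to read it back as an error. It runs on synthetic data from make_regression with a deliberately tiny grid, both of which are assumptions for illustration and not the data or grid used in this post.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import GridSearchCV

# Synthetic stand-in data (not the dataset used in this article)
X, y = make_regression(n_samples=120, n_features=4, noise=10.0, random_state=42)

demo_search = GridSearchCV(
    estimator=RandomForestRegressor(n_estimators=20, random_state=42),
    param_grid={'max_depth': [3, None], 'min_samples_leaf': [1, 4]},
    scoring='neg_mean_absolute_error',
    cv=3,
)
demo_search.fit(X, y)

# best_score_ is the negated MAE, so flip the sign to read it as an error
best_cv_mae = -demo_search.best_score_
print(demo_search.best_params_)
print('Best cross-validated MAE: {:0.4f}'.format(best_cv_mae))
```

This cross-validated error is computed on the training folds only, so it complements rather than replaces the test-set evaluation done with evaluate.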

Let's look at the result:

best_grid = grid_search.best_estimator_
evaluate(best_grid, test_features, test_labels)

Output:

Model Performance
Average Error: 3.6682 degrees.
Accuracy = 93.80%.

In the same way, the two searches can be alternated several times until the parameters are satisfactory.
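One way to chain the two searches is to build the next grid automatically from the previous best parameters. The helper below, narrow_grid, is a hypothetical function written for this illustration and is not part of sklearn; the step sizes are also assumptions. The starting values are the best parameters reported by the random search earlier in this post.

```python
def narrow_grid(best_params, steps):
    """Build a small grid centred on each best value (hypothetical helper)."""
    grid = {}
    for name, value in best_params.items():
        step = steps.get(name)
        is_plain_int = isinstance(value, int) and not isinstance(value, bool)
        if step is None or not is_plain_int:
            # Keep values without a step (None, True, 'auto', ...) fixed
            grid[name] = [value]
        else:
            # One neighbour below and one above; drop anything below 1
            grid[name] = [v for v in (value - step, value, value + step) if v >= 1]
    return grid


best = {'bootstrap': True, 'max_depth': None, 'max_features': 'auto',
        'min_samples_leaf': 4, 'min_samples_split': 5, 'n_estimators': 1000}
steps = {'min_samples_leaf': 1, 'min_samples_split': 2, 'n_estimators': 200}

next_grid = narrow_grid(best, steps)
print(next_grid)
```

The resulting dictionary could be passed as param_grid to GridSearchCV, and the process repeated with smaller steps around each new best value.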