LQR,iLQR,DDP控制论经典算法(MBRL基础知识)

深度强化学习CS285 lec10-lec12 基础知识LQR Framework

- 一、线性二次型调节器LQR(Linear Quadratic Regulator)

- 1.1 LQR符号与术语

- 1.2 LQR问题下的设定

- 1.3 LQR求解

- 1.3.1 推导过程

- 1.3.2 LQR算法流程

- 二、iLQR(Iterative Linear Quadratic Regulator)

- 2.1 Newton Method

- 2.2 Gauss-Newton Method

- 2.3 iLQR算法

- iLQR背景设定

- iLQR流程

- 三、 DDP及iLQR改进

- 四、小总结

- 参考资料

- 补充

一、线性二次型调节器LQR(Linear Quadratic Regulator)

1.1 LQR符号与术语

现有一些随机策略 p θ ( u t ∣ x t ) p_\theta(u_t|x_t) pθ(ut∣xt)收集的样本:

τ i = ( x 1 i , u 1 i , x 2 i , u 2 i , . . . , x T i , u T i , x T + 1 i ) , i = 1 , 2... , N \tau^i=(x_1^i,u_1^i,x_2^i,u_2^i,...,x_T^i,u_T^i,x_{T+1}^i),i=1,2...,N τi=(x1i,u1i,x2i,u2i,...,xTi,uTi,xT+1i),i=1,2...,N

x t , u t x_t,u_t xt,ut即为第t时刻的状态与动作,此为控制论符号的表述 ( x t , u t ) = ( s t , a t ) (x_t,u_t)=(s_t,a_t) (xt,ut)=(st,at),两者可进行混用。lec1-lec4中提到,NN的cost function c ( x t , u t ) c(x_t,u_t) c(xt,ut)实际上是一种immediate的监督信号,RL的reward function r ( s t , a t ) r(s_t,a_t) r(st,at)实际上是一种delayed的监督信息,因此在一个time step下,有 c ( x t , u t ) = − r ( x t , u t ) + c o n s t a n t c(x_t,u_t)=-r(x_t,u_t)+constant c(xt,ut)=−r(xt,ut)+constant。下面给出一个术语表格,基础知识主要使用控制领域的术语表述。

| Control | RL | |

|---|---|---|

| 状态state | x t x_t xt | s t s_t st |

| 动作action | u t u_t ut | a t a_t at |

| 监督信号 | c ( x t , u t ) c(x_t,u_t) c(xt,ut) | r ( s t , a t ) r(s_t,a_t) r(st,at) |

| 动态模型 | x t + 1 ∼ f ( x t , u t ) x_{t+1}\sim f(x_t,u_t) xt+1∼f(xt,ut) | s ′ ∼ p ( s ′ | s , a ) s'\sim p(s'|s,a) s′∼p(s′|s,a) |

1.2 LQR问题下的设定

-

动态模型是deterministic的,即 x t + 1 = f ( x t , u t ) , f ( x t , u t ) x_{t+1}=f(x_t,u_t),f(x_t,u_t) xt+1=f(xt,ut),f(xt,ut)不是一个概率分布的模型。

-

监督信号cost function,最优控制中有performance measure的指标 J = h ( x T , T ) + ∫ t = 0 T g ( x t , u t , t ) d t J=h(x_T,T)+\int_{t_=0}^Tg(x_t,u_t,t)dt J=h(xT,T)+∫t=0Tg(xt,ut,t)dt,第一项衡量该轨迹 τ \tau τ末尾的状态 X T X_T XT与任务目标状态的距离,第二项衡量该轨迹 τ \tau τ从起始到末尾,走完该轨迹中每个状态与动作所要消耗的代价,其中函数g为标量。因此 c ( x t , u t ) c(x_t,u_t) c(xt,ut)即为performace measure中的函数g。LQR的监督信号 c ( x t , u t ) c(x_t,u_t) c(xt,ut)是已知的,输入 x t , u t x_t,u_t xt,ut,输出一个标量scalar。

-

LQR的目的是,给定一个初始状态 x 1 x_1 x1,终止状态或任务目标状态 x T + 1 x_{T+1} xT+1,已知环境动态模型 x t + 1 = f ( x t , u t ) x_{t+1}=f(x_t,u_t) xt+1=f(xt,ut),求出一串动作序列 u 1 , u 2 , . . . , u T u_1,u_2,...,u_T u1,u2,...,uT使得累积cost最小,即

min u 1 , . . . , u T ∑ t = 1 T c ( x t , u t ) s . t x t = f ( x t − 1 , u t − 1 ) \min_{u1,...,u_T}\sum_{t=1}^Tc(x_t,u_t)\quad s.t\quad x_t=f(x_{t-1},u_{t-1}) u1,...,uTmint=1∑Tc(xt,ut)s.txt=f(xt−1,ut−1) -

LQR的approximation,linear体现在对 f ( x t , u t ) f(x_t,u_t) f(xt,ut)采用一阶线性近似,quadratic体现在对 c ( x t , u t ) c(x_t,u_t) c(xt,ut)采用二阶近似,即

x t + 1 = f ( x t , u t ) ≈ F t [ x t u t ] + f t = [ F x t F u t ] [ x t u t ] + f t c ( x t , u t ) = 1 2 [ x t u t ] T [ C x t , x t C x t , u t C u t , x t C u t , u t ] [ x t u t ] + [ x t u t ] T [ c x t c u t ] = 1 2 [ x t u t ] T C t [ x t u t ] + [ x t u t ] T c t x_{t+1}=f(x_t,u_t)\approx F_t \left[ \begin{matrix} x_t\\u_t \end{matrix}\right]+f_t=\left[ \begin{matrix} F_{x_t} &F_{u_t} \end{matrix}\right]\left[ \begin{matrix} x_t\\u_t \end{matrix}\right]+f_t \\ c(x_t,u_t)= \frac{1}{2}\left[ \begin{matrix} x_t\\u_t \end{matrix}\right]^T\left[ \begin{matrix} C_{x_t,x_t} &C_{x_t,u_t}\\C_{u_t,x_t}&C_{u_t,u_t} \end{matrix}\right]\left[ \begin{matrix} x_t\\u_t \end{matrix}\right]+\left[ \begin{matrix} x_t\\u_t \end{matrix}\right]^T\left[ \begin{matrix} c_{x_t}\\c_{u_t} \end{matrix}\right] =\frac{1}{2}\left[ \begin{matrix} x_t\\u_t \end{matrix}\right]^TC_t\left[ \begin{matrix} x_t\\u_t \end{matrix}\right]+\left[ \begin{matrix} x_t\\u_t \end{matrix}\right]^Tc_t xt+1=f(xt,ut)≈Ft[xtut]+ft=[FxtFut][xtut]+ftc(xt,ut)=21[xtut]T[Cxt,xtCut,xtCxt,utCut,ut][xtut]+[xtut]T[cxtcut]=21[xtut]TCt[xtut]+[xtut]Tct

所以LQR的优化问题表述为:

min u 1 , . . . , u T ∑ t = 1 T c ( x t , u t ) s . t x t = f ( x t − 1 , u t − 1 ) f ( x t , u t ) = F t [ x t u t ] + f t c ( x t , u t ) = 1 2 [ x t u t ] T C t [ x t u t ] + [ x t u t ] T c t \min_{u1,...,u_T}\sum_{t=1}^Tc(x_t,u_t)\quad s.t\quad x_t=f(x_{t-1},u_{t-1})\\ f(x_t,u_t)= F_t \left[ \begin{matrix} x_t\\u_t \end{matrix}\right]+f_t \\ c(x_t,u_t)=\frac{1}{2}\left[ \begin{matrix} x_t\\u_t \end{matrix}\right]^TC_t\left[ \begin{matrix} x_t\\u_t \end{matrix}\right]+\left[ \begin{matrix} x_t\\u_t \end{matrix}\right]^Tc_t u1,...,uTmint=1∑Tc(xt,ut)s.txt=f(xt−1,ut−1)f(xt,ut)=Ft[xtut]+ftc(xt,ut)=21[xtut]TCt[xtut]+[xtut]Tct

其中 F t , f t , C t , c t F_t,f_t,C_t,c_t Ft,ft,Ct,ct均已知,下面推到用到其展开形式!

1.3 LQR求解

LQR求解主要有Backward Pass与Forward Pass两大过程,先看看对于LQR这个问题,什么是已知的,什么是未知的。

- 已知:initial state 初始状态 x 1 x_1 x1,goal state目标状态 x T + 1 x_{T+1} xT+1,动态模型 f ( x t , u t ) f(x_t,u_t) f(xt,ut)与cost function c ( x t , u t ) c(x_t,u_t) c(xt,ut)的结构参数 F t , f t , C t , c t F_t,f_t,C_t,c_t Ft,ft,Ct,ct

- 未知: x 2 , x 3 , . . . , x T , u 1 , u 2 , . . . , u T x_2,x_3,...,x_T,u_1,u_2,...,u_T x2,x3,...,xT,u1,u2,...,uT。因为 x 2 = f ( x 1 , u 1 ) , x 3 = f ( x 2 , u 2 ) , . . . , x T = f ( x T − 1 , u T − 1 ) , x T + 1 = f ( x T , u T ) x_2=f(x_1,u_1),x_3=f(x_2,u_2),...,x_T=f(x_{T-1},u_{T-1}),x_{T+1}=f(x_T,u_T) x2=f(x1,u1),x3=f(x2,u2),...,xT=f(xT−1,uT−1),xT+1=f(xT,uT),所以实际上未知的就是动作序列 u 1 , . . . , u T u_1,...,u_T u1,...,uT

- 目标函数变为: min u 1 , . . u T c ( x 1 , u 1 ) + c ( f ( x 1 , u 1 ) , u 2 ) + ⋯ + c ( f ( f ( . . . ( ) . . . ) , u T ) \min_{u_1,..u_T}c(x_1,u_1)+c(f(x_1,u_1),u_2)+\cdots+c(f(f(...()...),u_T) minu1,..uTc(x1,u1)+c(f(x1,u1),u2)+⋯+c(f(f(...()...),uT)啰嗦一下, V ( x T + 1 ) V(x_{T+1}) V(xT+1)由于终态确定,故可看做 c o n s t const const,而且dynamics是deterministic的,所以有 Q ( x t , u t ) = r ( x t , u t ) + V ( x t + 1 ) Q(x_t,u_t)=r(x_t,u_t)+V(x_{t+1}) Q(xt,ut)=r(xt,ut)+V(xt+1),于是目标函数可以看成:

min u 1 , . . u T c ( x 1 , u 1 ) + c ( f ( x 1 , u 1 ) , u 2 ) + ⋯ + c ( f ( f ( . . . ( ) . . . ) , u T ) − V ( x T + 1 ) max u 1 , . . . , u T r ( x 1 , u 1 ) + r ( f ( x 1 , u 2 ) , u 2 ) + ⋯ + r ( x T , u T ) + V ( x T + 1 ) ⏟ Q = max u 1 , . . . , u T r ( x 1 , u 1 ) + r ( f ( x 1 , u 2 ) , u 2 ) + ⋯ + r ( x T − 1 , u T − 1 ) + Q ( x T , u T ) ⏟ u T = K T x T + k T = max u 1 , . . . , u T r ( x 1 , u 1 ) + r ( f ( x 1 , u 2 ) , u 2 ) + ⋯ + r ( x T − 1 , u T − 1 ) + V ( x T , u T ) = max u 1 , . . . , u T r ( x 1 , u 1 ) + r ( f ( x 1 , u 2 ) , u 2 ) + ⋯ + Q ( x T − 1 , u T − 1 ) = max u 1 , . . . , u T Q ( x 1 , u 1 ) \begin{aligned} &\min_{u_1,..u_T}c(x_1,u_1)+c(f(x_1,u_1),u_2)+\cdots+c(f(f(...()...),u_T)-V(x_{T+1})\\ &\max_{u_1,...,u_T}r(x_1,u_1)+r(f(x_1,u_2),u_2)+\cdots+\underbrace{r(x_T,u_T)+V(x_{T+1})}_Q\\ =&\max_{u_1,...,u_T}r(x_1,u_1)+r(f(x_1,u_2),u_2)+\dots+r(x_{T-1},u_{T-1})+\underbrace{Q(x_T,u_T)}_{u_T=K_Tx_T+k_T}\\ =&\max_{u_1,...,u_T}r(x_1,u_1)+r(f(x_1,u_2),u_2)+\dots+r(x_{T-1},u_{T-1})+V(x_T,u_T)\\ =&\max_{u_1,...,u_T}r(x_1,u_1)+r(f(x_1,u_2),u_2)+\dots+Q(x_{T-1},u_{T-1})\\ =&\max_{u_1,...,u_T}Q(x_1,u_1) \end{aligned} ====u1,..uTminc(x1,u1)+c(f(x1,u1),u2)+⋯+c(f(f(...()...),uT)−V(xT+1)u1,...,uTmaxr(x1,u1)+r(f(x1,u2),u2)+⋯+Q r(xT,uT)+V(xT+1)u1,...,uTmaxr(x1,u1)+r(f(x1,u2),u2)+⋯+r(xT−1,uT−1)+uT=KTxT+kT Q(xT,uT)u1,...,uTmaxr(x1,u1)+r(f(x1,u2),u2)+⋯+r(xT−1,uT−1)+V(xT,uT)u1,...,uTmaxr(x1,u1)+r(f(x1,u2),u2)+⋯+Q(xT−1,uT−1)u1,...,uTmaxQ(x1,u1)

求解思路,固定一个变量,调整其它变量,一个个求嘛,但如果是固定 u 1 u_1 u1,即Forward Pass前向算法,会经过多个动态模型 f f f的迭代,很难求解,于是先从BackWard角度考虑,即从 u T u_T uT入手。

1.3.1 推导过程

-

c ( x T , u T ) c(x_T,u_T) c(xT,uT)是一个二元函数,真正未知的只有 u T u_T uT,要使cost最小,所以对其求导,得到 u T u_T uT关于 x T x_T xT的关系,称作控制律,是在Backward pass中实际想要的东西。

∇ u T Q ( x T , u T ) = ∇ u T c ( x T , u T ) = ∇ u T [ 1 2 [ x T u T ] T C T [ x T u T ] + [ x T u T ] T c T ] = ∇ u T [ C x T , u T x T + C u T , u T u T + c u T ] ) = 0 所 以 u T = − C u T , u T − 1 ( C u T , x T x T + c u T ) \begin{aligned} \nabla_{u_T}Q(x_T,u_T)=\nabla_{u_T}c(x_T,u_T)&=\nabla_{u_T}\Big[\frac{1}{2}\left[ \begin{matrix} x_T\\u_T \end{matrix}\right]^TC_T\left[ \begin{matrix} x_T\\u_T \end{matrix}\right]+\left[ \begin{matrix} x_T\\u_T \end{matrix}\right]^Tc_T\Big] \\ &=\nabla_{u_T}\Big[C_{x_T,u_T}x_T+C_{u_T,u_T}u_T+c_{u_T}\Big])\\ &=0\\ 所以u_T&=-C^{-1}_{u_T,u_T}(C_{u_T,x_T}x_T+c_{u_T}) \end{aligned} ∇uTQ(xT,uT)=∇uTc(xT,uT)所以uT=∇uT[21[xTuT]TCT[xTuT]+[xTuT]TcT]=∇uT[CxT,uTxT+CuT,uTuT+cuT])=0=−CuT,uT−1(CuT,xTxT+cuT)

换一下表述有:

u T = K T x T + k T K T = − C u T , u T − 1 C u T , x T , k T = − C u T , u T − 1 c u T u_T=K_Tx_T+k_T\\ K_T=-C^{-1}_{u_T,u_T}C_{u_T,x_T},k_T=-C^{-1}_{u_T,u_T}c_{u_T} uT=KTxT+kTKT=−CuT,uT−1CuT,xT,kT=−CuT,uT−1cuT

这一步,得到了第T时刻的动作 u T u_T uT与第T时刻的状态 x T x_T xT之间的关系,其它系数均已知。 -

再看 u T − 1 u_{T-1} uT−1时,由动态模型 x T = f ( x T − 1 , u T − 1 ) x_T=f(x_{T-1},u_{T-1}) xT=f(xT−1,uT−1),由Q值函数 Q ( x T − 1 , u T − 1 ) = r ( x T − 1 , u T − 1 ) + V ( x T ) = − c ( x T − 1 , u T − 1 ) + V ( x T ) Q(x_{T-1},u_{T-1})=r(x_{T-1},u_{T-1})+V(x_T)=-c(x_{T-1},u_{T-1})+V(x_T) Q(xT−1,uT−1)=r(xT−1,uT−1)+V(xT)=−c(xT−1,uT−1)+V(xT)。

实际上,回顾lec5-lec9中对 Q ( s , a ) Q(s,a) Q(s,a)与 V ( s ) V(s) V(s)的理解,有 Q ( x T , u T ) = r ( x T , u T ) + E [ V ( x T + 1 ) ] = r ( x T , u T ) + c o n s t = − c ( x T , u T ) + c o n s t Q(x_T,u_T)=r(x_T,u_T)+E[V(x_{T+1})]=r(x_T,u_T)+const=-c(x_T,u_T)+const Q(xT,uT)=r(xT,uT)+E[V(xT+1)]=r(xT,uT)+const=−c(xT,uT)+const,代入 u T = K T x T + k T u_T=K_Tx_T+k_T uT=KTxT+kT,则:

V ( x T ) = Q ( x T , K T x T + k T ) = − c ( x T , K T x T + k T ) + c o n s t V(x_T)=Q(x_T,K_Tx_T+k_T)=-c(x_T,K_Tx_T+k_T)+const V(xT)=Q(xT,KTxT+kT)=−c(xT,KTxT+kT)+const代入quadratic cost function后化简一下并替换下表述有:

V ( x T ) = − 1 2 x T T V T x T + x T T v T V T = C x T , x T + C x T , u T K T + K T T C u T , x T + K T T C u T , u T K T v T = c x T + C x T , u T k T + K T T C u T + K T T C u T , u T k T \begin{aligned} &V(x_T) =-\frac{1}{2}x_T^TV_Tx_T+x_T^Tv_T\\ {V}_{T} &= {C}_{ {x}_{T}, {x}_{T}}+ {C}_{ {x}_{T}, {u}_{T}} {K}_{T}+ {K}_{T}^{T} {C}_{ {u}_{T}, {x}_{T}}+ {K}_{T}^{T} {C}_{ {u}_{T}, {u}_{T}} {K}_{T} \\ {v}_{T} &= {c}_{ {x}_{T}}+ {C}_{ {x}_{T}, {u}_{T}} {k}_{T}+ {K}_{T}^{T} {C}_{ {u}_{T}}+ {K}_{T}^{T} {C}_{ {u}_{T}, {u}_{T}} {k}_{T} \end{aligned} VTvTV(xT)=−21xTTVTxT+xTTvT=CxT,xT+CxT,uTKT+KTTCuT,xT+KTTCuT,uTKT=cxT+CxT,uTkT+KTTCuT+KTTCuT,uTkT

因此,表示T-1时刻的Q值函数,利用动态模型消掉 x T x_T xT并使其导数为0。

− Q ( x T − 1 , u T − 1 ) = c ( x T − 1 , u T − 1 ) − V ( x T ) = 1 2 [ x T − 1 u T − 1 ] T C T − 1 [ x T − 1 u T − 1 ] + [ x T − 1 u T − 1 ] T c T − 1 + 1 2 x T T V T x T + x T T v T \begin{aligned} -Q(x_{T-1},u_{T-1})&=c(x_{T-1},u_{T-1})-V(x_T)\\ &=\frac{1}{2}\left[ \begin{matrix} x_{T-1}\\u_{T-1} \end{matrix}\right]^TC_{T-1}\left[ \begin{matrix} x_{T-1}\\u_{T-1} \end{matrix}\right]+\left[ \begin{matrix} x_{T-1}\\u_{T-1} \end{matrix}\right]^Tc_{T-1}+\frac{1}{2}x_T^TV_Tx_T+x_T^Tv_T \end{aligned} −Q(xT−1,uT−1)=c(xT−1,uT−1)−V(xT)=21[xT−1uT−1]TCT−1[xT−1uT−1]+[xT−1uT−1]TcT−1+21xTTVTxT+xTTvT

代入 x T = f ( x T − 1 , u T − 1 ) = F T − 1 [ x T − 1 u T − 1 ] + f T − 1 x_T=f(x_{T-1},u_{T-1})= F_{T-1} \left[ \begin{matrix} x_{T-1}\\u_{T-1} \end{matrix}\right]+f_{T-1} xT=f(xT−1,uT−1)=FT−1[xT−1uT−1]+fT−1,便得到了仅有 x T − 1 , u T − 1 x_{T-1},u_{T-1} xT−1,uT−1表示的 Q ( x T − 1 , u T − 1 ) Q(x_{T-1},u_{T-1}) Q(xT−1,uT−1)

具体而言,经过整理:

Q ( x T − 1 , u T − 1 ) = 1 2 [ x T − 1 u T − 1 ] T Q T − 1 [ x T − 1 u T − 1 ] + [ x T − 1 u T − 1 ] T q T − 1 Q T − 1 = C T − 1 + F T − 1 T V T F T − 1 q T − 1 = c T − 1 + F T − 1 T V T f T − 1 + F T − 1 T v T Q(x_{T-1},u_{T-1})=\frac{1}{2}\left[ \begin{matrix} x_{T-1}\\u_{T-1} \end{matrix}\right]^TQ_{T-1}\left[ \begin{matrix} x_{T-1}\\u_{T-1} \end{matrix}\right]+\left[ \begin{matrix} x_{T-1}\\u_{T-1} \end{matrix}\right]^Tq_{T-1}\\ \begin{aligned} & {Q}_{T-1}= {C}_{T-1}+ {F}_{T-1}^{T} {V}_{T} {F}_{T-1}\\ & {q}_{T-1}= {c}_{T-1}+ {F}_{T-1}^{T} {V}_{T} {f}_{T-1}+ {F}_{T-1}^{T} {v}_{T}\\ \end{aligned} Q(xT−1,uT−1)=21[xT−1uT−1]TQT−1[xT−1uT−1]+[xT−1uT−1]TqT−1QT−1=CT−1+FT−1TVTFT−1qT−1=cT−1+FT−1TVTfT−1+FT−1TvT

令其导数为0,得到 u T − 1 u_{T-1} uT−1与 x T − 1 x_{T-1} xT−1的关系,即T-1时刻的控制律:

∇ u T − 1 Q ( x T − 1 , u T − 1 ) = 0 u T − 1 = K T − 1 x T − 1 + k T − 1 K T − 1 = − Q u T − 1 , u T − 1 − 1 Q u T − 1 , x T − 1 , k T − 1 = − Q u T − 1 , u T − 1 − 1 q u T − 1 \nabla_{u_{T-1}}Q(x_{T-1},u_{T-1})=0\\ u_{T-1}=K_{T-1}x_{T-1}+k_{T-1}\\ K_{T-1}=-Q^{-1}_{u_{T-1},u_{T-1}}Q_{u_{T-1},x_{T-1}},k_{T-1}=-Q^{-1}_{u_{T-1},u_{T-1}}q_{u_{T-1}} ∇uT−1Q(xT−1,uT−1)=0uT−1=KT−1xT−1+kT−1KT−1=−QuT−1,uT−1−1QuT−1,xT−1,kT−1=−QuT−1,uT−1−1quT−1 -

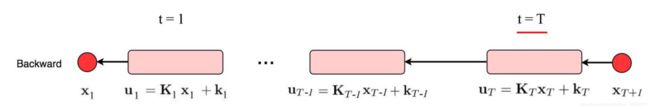

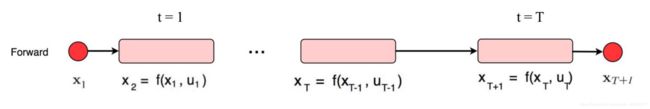

如此类推,Backward Pass得到动作与状态的控制律:

u t = K t x t + k t , t = 1 , 2 , . . . , T u_t=K_tx_t+k_t,t=1,2,...,T ut=Ktxt+kt,t=1,2,...,T又因为 x 1 x_1 x1已知,所以 u 1 u_1 u1可由 u 1 = K 1 x 1 + k 1 u_1=K_1x_1+k_1 u1=K1x1+k1计算得出,于是Forward Pass结合动态模型,算出动作序列:

x 2 = f ( x 1 , u 1 ) u 2 = K 2 x 2 + k 2 x 3 = f ( x 2 , u 2 ) ⋮ u T = K T x T + k T x_2=f(x_1,u_1)\\ u_2=K_2x_2+k_2\\ x_3=f(x_2,u_2)\\ \vdots \\ u_T=K_Tx_T+k_T x2=f(x1,u1)u2=K2x2+k2x3=f(x2,u2)⋮uT=KTxT+kT -

小总结

Backward Pass步骤:

- T时刻的 ∇ u T Q ( x T , u T ) = 0 \nabla_{u_T}Q(x_T,u_T)=0 ∇uTQ(xT,uT)=0,得控制律 u T = K T x T + k T u_T=K_Tx_T+k_T uT=KTxT+kT

- 计算 V ( x T ) V(x_T) V(xT),并利用 x T = f ( x T − 1 , u T − 1 ) x_T=f(x_{T-1},u_{T-1}) xT=f(xT−1,uT−1)消元,从而得到 Q ( x T − 1 , u T − 1 ) = − c ( x T − 1 , u T − 1 ) + V ( x T ) = − c ( x T − 1 , u T − 1 ) + V ( f ( x T − 1 , u T − 1 ) ) Q(x_{T-1},u_{T-1})=-c(x_{T-1},u_{T-1})+V(x_T)=-c(x_{T-1},u_{T-1})+V(f(x_{T-1},u_{T-1})) Q(xT−1,uT−1)=−c(xT−1,uT−1)+V(xT)=−c(xT−1,uT−1)+V(f(xT−1,uT−1))

- ∇ u T Q ( x T , u T ) = 0 \nabla_{u_T}Q(x_T,u_T)=0 ∇uTQ(xT,uT)=0,得控制律 u T − 1 = K T − 1 x T − 1 + k T − 1 u_{T-1}=K_{T-1}x_{T-1}+k_{T-1} uT−1=KT−1xT−1+kT−1

- 计算 V ( x T − 1 ) V(x_{T-1}) V(xT−1),利用动态模型消元,得 Q ( x T − 2 , u T − 2 ) Q(x_{T-2},u_{T-2}) Q(xT−2,uT−2)

- ∇ u T − 2 Q ( x T − 2 , u T − 2 ) ) = 0 \nabla_{u_{T-2}}Q(x_{T-2},u_{T-2}))=0 ∇uT−2Q(xT−2,uT−2))=0,得控制律 u T − 2 = K T − 2 x T − 2 + k T − 2 u_{T-2}=K_{T-2}x_{T-2}+k_{T-2} uT−2=KT−2xT−2+kT−2

- 以此类推。

Forward Pass步骤:

利用动态模型计算下一状态,利用控制律,计算出相应动作

1.3.2 LQR算法流程

- Backward Pass

for t=T to t=1:

Q t = C t + F t T V t + 1 F t q t = c t + F t T V t + 1 f t + F t T v t + 1 Q ( x t , u t ) = c o n s t + 1 2 [ x t u t ] T Q t [ x t u t ] + [ x t u t ] T q t u t ← arg min u t Q ( x t , u t ) = K t x t + k t K t = − Q u t , u t − 1 Q u t , x t k t = − Q u t , u t − 1 q u t V t = Q x t , x t + Q x t , u t K t + K t T Q u t , x t + K t T Q u t , u t K t v t = q x t + Q x t , u t k t + K t T Q u t + K t T Q u t , u t k t V ( x t ) = c o n s t + 1 2 x t T V t x t + x t T v t \begin{aligned} & {Q}_{t}= {C}_{t}+ {F}_{t}^{T} {V}_{t+1} {F}_{t}\\ & {q}_{t}= {c}_{t}+ {F}_{t}^{T} {V}_{t+1} {f}_{t}+ {F}_{t}^{T} {v}_{t+1}\\ &Q\left( {x}_{t}, {u}_{t}\right)=const+\frac{1}{2}\left[\begin{array}{c} { {x}_{t}} \\ { {u}_{t}} \end{array}\right]^{T} {Q}_{t}\left[\begin{array}{c} { {x}_{t}} \\ { {u}_{t}} \end{array}\right]+\left[\begin{array}{c} { {x}_{t}} \\ { {u}_{t}} \end{array}\right]^{T} {q}_{t}\\ & {u}_{t} \leftarrow \arg \min _{ {u}_{t}} Q\left( {x}_{t}, {u}_{t}\right)= {K}_{t} {x}_{t}+ {k}_{t}\\ & {K}_{t}=- {Q}_{ {u}_{t}, {u}_{t}}^{-1} {Q}_{ {u}_{t}, {x}_{t}}\\ & {k}_{t}=- {Q}_{ {u}_{t}, {u}_{t}}^{-1} {q}_{ {u}_{t}}\\ & {V}_{t}= {Q}_{ {x}_{t}, {x}_{t}}+ {Q}_{ {x}_{t}, {u}_{t}} {K}_{t}+ {K}_{t}^{T} {Q}_{ {u}_{t}, {x}_{t}}+ {K}_{t}^{T} {Q}_{ {u}_{t}, {u}_{t}} {K}_{t}\\ & {v}_{t}= {q}_{ {x}_{t}}+ {Q}_{ {x}_{t}, {u}_{t}} {k}_{t}+ {K}_{t}^{T} {Q}_{ {u}_{t}}+ {K}_{t}^{T} {Q}_{ {u}_{t}, {u}_{t}} {k}_{t}\\ &V\left( {x}_{t}\right)=\mathrm{const}+\frac{1}{2} {x}_{t}^{T} {V}_{t} {x}_{t}+ {x}_{t}^{T} {v}_{t} \end{aligned} Qt=Ct+FtTVt+1Ftqt=ct+FtTVt+1ft+FtTvt+1Q(xt,ut)=const+21[xtut]TQt[xtut]+[xtut]Tqtut←argutminQ(xt,ut)=Ktxt+ktKt=−Qut,ut−1Qut,xtkt=−Qut,ut−1qutVt=Qxt,xt+Qxt,utKt+KtTQut,xt+KtTQut,utKtvt=qxt+Qxt,utkt+KtTQut+KtTQut,utktV(xt)=const+21xtTVtxt+xtTvt - Forward Pass

for t=1 to t=T:

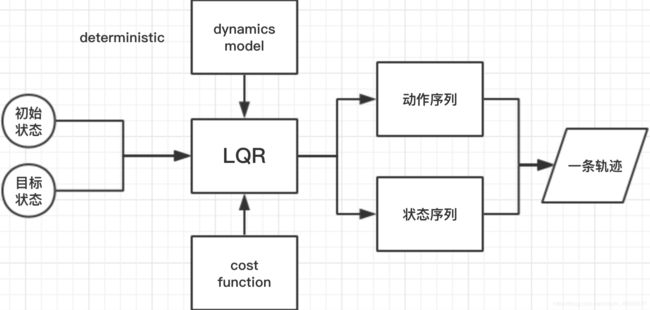

u t = K t x t + k t x t + 1 = f ( x t , u t ) \begin{aligned} & {u}_{t}= {K}_{t} {x}_{t}+ {k}_{t}\\ & {x}_{t+1}=f\left( {x}_{t}, {u}_{t}\right) \end{aligned} ut=Ktxt+ktxt+1=f(xt,ut) - LQR模块

即使上述过程不是很透彻,亦可如下图所示将LQR看成一个黑盒模块,对于已知deterministic的dynamics,使用LQR算法,便可得到一组动作序列 u 1 , . . . , u T u_1,...,u_T u1,...,uT,并可计算出状态序列。

输入:初始状态 x 1 x_1 x1,目标状态 x T + 1 x_{T+1} xT+1,动态模型 f ( x t , u t ) f(x_t,u_t) f(xt,ut),代价函数 c ( x t , u t ) c(x_t,u_t) c(xt,ut)

输出:动作序列 u 1 , . . . , u T u_1,...,u_T u1,...,uT和状态序列 x 1 , . . . , x T x_1,...,x_T x1,...,xT,即一条轨迹

二、iLQR(Iterative Linear Quadratic Regulator)

LQR的linear dynamics是deterministic的,这非常受限,对应RL中的 s ′ = p ( s ′ ∣ s , a ) s'=p(s'|s,a) s′=p(s′∣s,a),在当前state,选择一个action后,下一状态就确定了。为了应对复杂环境dynamics的stochastic,即 s ′ ∼ p ( s ′ ∣ s , a ) s'\sim p(s'|s,a) s′∼p(s′∣s,a),相当于说把LQR中假设linear dynamics拓展成了Non-linear dynamics,这时候需要采用iLQR,再叙述之前,先回顾一下以下两种优化算法,可参考以下专栏。

优化算法(甩甩的知乎专栏)

2.1 Newton Method

寻找参数 θ \theta θ最小化损失函数

m i n L ( θ ) minL(\theta) minL(θ)

在参数空间初始化一个参数 θ ^ \hat\theta θ^,寻找一个增量 Δ θ \Delta\theta Δθ,采用泰勒二阶近似:

L ( θ ^ + Δ θ ) ≈ L ^ ( θ ^ + Δ θ ) = L ( θ ^ ) + ∇ L ( θ ^ ) T Δ θ + 1 2 ( Δ θ ) T ∇ 2 L ( θ ^ ) Δ θ L(\hat\theta+\Delta\theta)\approx \hat{L}(\hat\theta+\Delta\theta)=L(\hat\theta)+\nabla L(\hat\theta)^T\Delta\theta+\frac{1}{2}(\Delta\theta)^T\nabla^2L(\hat\theta)\Delta\theta L(θ^+Δθ)≈L^(θ^+Δθ)=L(θ^)+∇L(θ^)TΔθ+21(Δθ)T∇2L(θ^)Δθ

寻找的增量 Δ θ \Delta\theta Δθ使得 L ( θ ^ + Δ θ ) L(\hat\theta+\Delta\theta) L(θ^+Δθ)最小,即 L ( θ ^ + Δ θ ) ≤ L ( θ ^ ) L(\hat\theta+\Delta\theta)\leq L(\hat\theta) L(θ^+Δθ)≤L(θ^)且 ∇ Δ θ L ( θ ^ + Δ θ ) = 0 \nabla_{\Delta\theta} L(\hat\theta+\Delta\theta)=0 ∇ΔθL(θ^+Δθ)=0所以有:

∇ Δ θ ( L ( θ ^ ) + ∇ L ( θ ^ ) T Δ θ + 1 2 ( Δ θ ) T ∇ 2 L ( θ ^ ) Δ θ ) ≈ 0 \nabla_{\Delta\theta} \Big(L(\hat\theta)+\nabla L(\hat\theta)^T\Delta\theta+\frac{1}{2}(\Delta\theta)^T\nabla^2L(\hat\theta)\Delta\theta\Big)\approx0 ∇Δθ(L(θ^)+∇L(θ^)TΔθ+21(Δθ)T∇2L(θ^)Δθ)≈0

下面将符号稍微写繁琐一点,实际上 ∇ L ( θ ^ ) \nabla L(\hat\theta) ∇L(θ^)为Jacobian矩阵 J ( θ ^ ) J(\hat\theta) J(θ^), ∇ 2 L ( θ ^ ) \nabla^2L(\hat\theta) ∇2L(θ^)为Hessian矩阵 H ( θ ^ ) H(\hat\theta) H(θ^)

∇ θ ^ L ( θ ^ ) + ∇ θ ^ 2 L ( θ ^ ) Δ θ ≈ 0 \nabla_{\hat\theta} L(\hat\theta)+\nabla_{\hat\theta} ^2L(\hat\theta)\Delta\theta\approx0 ∇θ^L(θ^)+∇θ^2L(θ^)Δθ≈0

Δ θ ≈ − ( ∇ θ ^ 2 L ( θ ^ ) ) − 1 ∇ θ ^ L ( θ ^ ) = − H ( θ ^ ) − 1 J ( θ ^ ) θ t + 1 = θ t + Δ θ t ≈ θ t − H t − 1 J t \Delta\theta\approx-(\nabla_{\hat\theta} ^2L(\hat\theta))^{-1}\nabla_{\hat\theta} L(\hat\theta)=-H(\hat\theta)^{-1}J(\hat\theta)\\ \theta_{t+1}=\theta_t+\Delta\theta_t\approx\theta_t-H_t^{-1}J_t Δθ≈−(∇θ^2L(θ^))−1∇θ^L(θ^)=−H(θ^)−1J(θ^)θt+1=θt+Δθt≈θt−Ht−1Jt

所以Newton Method的更新策略为:

J t = ∇ L ( θ t ) H t = ∇ 2 L ( θ t ) Δ t = − H t − 1 J t α t = arg min α > 0 L ( θ t + α Δ t ) L i n e S e a r c h ! θ t + 1 = θ t + α t Δ t J_t=\nabla L(\theta_t)\\ H_t=\nabla^2L(\theta_t)\\ \Delta_t=-H_t^{-1}J_t\\ \alpha_t=\argmin_{\alpha>0}L(\theta_t+\alpha\Delta_t)\quad Line\quad Search!\\ \theta_{t+1}=\theta_t+\alpha_t\Delta_t Jt=∇L(θt)Ht=∇2L(θt)Δt=−Ht−1Jtαt=α>0argminL(θt+αΔt)LineSearch!θt+1=θt+αtΔt

上述更新策略,有一个line search的过程。通过 g g g表示gradient, x x x替换 θ \theta θ, x ^ \hat x x^为更新前的值,亦可简化表述为iLQR需要用到的形式,如下:

Until convergence:

g = ∇ x L ( x ^ ) H = ∇ x 2 L ( x ^ ) x ^ ← arg min x L ( x ) − L ( x ^ ) ≈ 1 2 ( x − x ^ ) T H ( x − x ^ ) + g T ( x − x ^ ) g=\nabla_xL(\hat x)\\ H=\nabla_x^2L(\hat x)\\ \hat x\leftarrow \argmin_x L(x)-L(\hat x)\approx\frac{1}{2}(x-\hat x)^TH(x-\hat x)+g^T(x-\hat x) g=∇xL(x^)H=∇x2L(x^)x^←xargminL(x)−L(x^)≈21(x−x^)TH(x−x^)+gT(x−x^)

2.2 Gauss-Newton Method

因为牛顿方法中不仅要求Hessian矩阵,而且还要求它的逆,计算复杂度猛增,许多拟牛顿方法就是通过不同方式去逼近Hessian矩阵的逆。而高斯牛顿方法实际上,是在最小二乘法中的特殊求解,用一阶梯度的信息来逼近Hessian矩阵

H − 1 ≈ ( J T J ) − 1 H^{-1}\approx (J^TJ)^{-1} H−1≈(JTJ)−1

2.3 iLQR算法

iLQR背景设定

- dynamics model

iLQR的特点是能处理non-linear,stochastic的dynamics model,其模型结构,可从LQR简化为:

L Q R : f ( x t , u t ) = F t [ x t u t ] + f t LQR:f(x_t,u_t)= F_t \left[ \begin{matrix} x_t\\u_t \end{matrix}\right]+f_t LQR:f(xt,ut)=Ft[xtut]+ft

i L Q R : f ( x t , u t ) = N ( F t [ x t u t ] + f t , Σ t ) iLQR:f(x_t,u_t)=N\big(F_t \left[ \begin{matrix} x_t\\u_t \end{matrix}\right]+f_t,\Sigma_t\big) iLQR:f(xt,ut)=N(Ft[xtut]+ft,Σt)

- LQR

LQR的约束,是一个线性系统,可通过deterministic的dynamics model确定下一状态 x t + 1 x_{t+1} xt+1与当前状态 x t x_t xt、动作 u t u_t ut的关系,cost也可以由 x t , u t x_t,u_t xt,ut确定。

f ( x t , u t ) = F t [ x t u t ] + f t c ( x t , u t ) = 1 2 [ x t u t ] T C t [ x t u t ] + [ x t u t ] T c t \begin{aligned} &f\left( {x}_{t}, {u}_{t}\right)= {F}_{t}\left[\begin{array}{l} { {x}_{t}} \\ { {u}_{t}} \end{array}\right]+ {f}_{t}\\ &c\left( {x}_{t}, {u}_{t}\right)=\frac{1}{2}\left[\begin{array}{l} { {x}_{t}} \\ { {u}_{t}} \end{array}\right]^{T} {C}_{t}\left[\begin{array}{l} { {x}_{t}} \\ { {u}_{t}} \end{array}\right]+\left[\begin{array}{l} { {x}_{t}} \\ { {u}_{t}} \end{array}\right]^{T} {c}_{t} \end{aligned} f(xt,ut)=Ft[xtut]+ftc(xt,ut)=21[xtut]TCt[xtut]+[xtut]Tct - iLQR

iLQR的dynamics是非线性的,即下一状态 x t + 1 x_{t+1} xt+1不能靠当前状态 x t x_t xt、当前动作 u t u_t ut线性关系确定,可理解为利用泰勒展开逼近两状态间 x t , x t + 1 x_t,x_{t+1} xt,xt+1假设的高斯分布,对dynamics一阶泰勒近似,对cost二阶泰勒近似,如下:

f ( x t , u t ) ≈ f ( x ^ t , u ^ t ) + ∇ x t , u t f ( x ^ t , u ^ t ) [ x t − x ^ t u t − u ^ t ] c ( x t , u t ) ≈ c ( x ^ t , u ^ t ) + ∇ x t , u t c ( x ^ t , u ^ t ) [ x t − x ^ t u t − u ^ t ] + 1 2 [ x t − x ^ t u t − u ^ t ] T ∇ x t , u t 2 c ( x ^ t , u ^ t ) [ x t − x ^ t u t − u ^ t ] \begin{aligned} &f\left( {x}_{t}, {u}_{t}\right) \approx f\left(\hat{ {x}}_{t}, \hat{ {u}}_{t}\right)+\nabla_{ {x}_{t}, {u}_{t}} f\left(\hat{ {x}}_{t}, \hat{ {u}}_{t}\right)\left[\begin{array}{l} { {x}_{t}-\hat{ {x}}_{t}} \\ { {u}_{t}-\hat{ {u}}_{t}} \end{array}\right]\\ &c\left( {x}_{t}, {u}_{t}\right) \approx c\left(\hat{ {x}}_{t}, \hat{ {u}}_{t}\right)+\nabla_{ {x}_{t}, {u}_{t}} c\left(\hat{ {x}}_{t}, \hat{ {u}}_{t}\right)\left[\begin{array}{c} { {x}_{t}-\hat{ {x}}_{t}} \\ { {u}_{t}-\hat{ {u}}_{t}} \end{array}\right]+\frac{1}{2}\left[\begin{array}{c} { {x}_{t}-\hat{ {x}}_{t}} \\ { {u}_{t}-\hat{ {u}}_{t}} \end{array}\right]^{T} \nabla_{ {x}_{t}, {u}_{t}}^{2} c\left(\hat{ {x}}_{t}, \hat{ {u}}_{t}\right)\left[\begin{array}{l} { {x}_{t}-\hat{ {x}}_{t}} \\ { {u}_{t}-\hat{ {u}}_{t}} \end{array}\right] \end{aligned} f(xt,ut)≈f(x^t,u^t)+∇xt,utf(x^t,u^t)[xt−x^tut−u^t]c(xt,ut)≈c(x^t,u^t)+∇xt,utc(x^t,u^t)[xt−x^tut−u^t]+21[xt−x^tut−u^t]T∇xt,ut2c(x^t,u^t)[xt−x^tut−u^t]

iLQR流程

整理一下有:

f ( x t , u t ) − f ( x ^ t , u ^ t ) ≈ ∇ x t , u t f ( x ^ t , u ^ t ) [ x t − x ^ t u t − u ^ t ] c ( x t , u t ) − c ( x ^ t , u ^ t ) ≈ ∇ x t , u t c ( x ^ t , u ^ t ) [ x t − x ^ t u t − u ^ t ] + 1 2 [ x t − x ^ t u t − u ^ t ] T ∇ x t , u t 2 c ( x ^ t , u ^ t ) [ x t − x ^ t u t − u ^ t ] \begin{aligned} &f\left( {x}_{t}, {u}_{t}\right)-f\left(\hat{ {x}}_{t}, \hat{ {u}}_{t}\right) \approx \nabla_{ {x}_{t}, {u}_{t}} f\left(\hat{ {x}}_{t}, \hat{ {u}}_{t}\right)\left[\begin{array}{l} { {x}_{t}-\hat{ {x}}_{t}} \\ { {u}_{t}-\hat{ {u}}_{t}} \end{array}\right]\\ &c\left( {x}_{t}, {u}_{t}\right)-c\left(\hat{ {x}}_{t}, \hat{ {u}}_{t}\right) \approx \nabla_{ {x}_{t}, {u}_{t}} c\left(\hat{ {x}}_{t}, \hat{ {u}}_{t}\right)\left[\begin{array}{c} { {x}_{t}-\hat{ {x}}_{t}} \\ { {u}_{t}-\hat{ {u}}_{t}} \end{array}\right]+\frac{1}{2}\left[\begin{array}{c} { {x}_{t}-\hat{ {x}}_{t}} \\ { {u}_{t}-\hat{ {u}}_{t}} \end{array}\right]^{T} \nabla_{ {x}_{t}, {u}_{t}}^{2} c\left(\hat{ {x}}_{t}, \hat{ {u}}_{t}\right)\left[\begin{array}{l} { {x}_{t}-\hat{ {x}}_{t}} \\ { {u}_{t}-\hat{ {u}}_{t}} \end{array}\right] \end{aligned} f(xt,ut)−f(x^t,u^t)≈∇xt,utf(x^t,u^t)[xt−x^tut−u^t]c(xt,ut)−c(x^t,u^t)≈∇xt,utc(x^t,u^t)[xt−x^tut−u^t]+21[xt−x^tut−u^t]T∇xt,ut2c(x^t,u^t)[xt−x^tut−u^t]

更换一下表述 δ x t = x t − x ^ t , δ u t = u t − u ^ t \delta x_t=x_t-\hat x_t,\delta u_t=u_t-\hat u_t δxt=xt−x^t,δut=ut−u^t:

f ˉ ( δ x t , δ u t ) = f ( x t , u t ) − f ( x ^ t , u ^ t ) ≈ ∇ x t , u t f ( x ^ t , u ^ t ) [ x t − x ^ t u t − u ^ t ] = F t [ δ x t δ u t ] \bar f(\delta x_t,\delta u_t)=f\left( {x}_{t}, {u}_{t}\right)-f\left(\hat{ {x}}_{t}, \hat{ {u}}_{t}\right) \approx \nabla_{ {x}_{t}, {u}_{t}} f\left(\hat{ {x}}_{t}, \hat{ {u}}_{t}\right)\left[\begin{array}{l} { {x}_{t}-\hat{ {x}}_{t}} \\ { {u}_{t}-\hat{ {u}}_{t}} \end{array}\right]=F_t\left[\begin{array}{l} { \delta x_t} \\ { \delta u_t} \end{array}\right] fˉ(δxt,δut)=f(xt,ut)−f(x^t,u^t)≈∇xt,utf(x^t,u^t)[xt−x^tut−u^t]=Ft[δxtδut]

c ˉ ( δ x t , δ u t ) = c ( x t , u t ) − c ( x ^ t , u ^ t ) ≈ ∇ x t , u t c ( x ^ t , u ^ t ) [ x t − x ^ t u t − u ^ t ] + 1 2 [ x t − x ^ t u t − u ^ t ] T ∇ x t , u t 2 c ( x ^ t , u ^ t ) [ x t − x ^ t u t − u ^ t ] = c t [ δ x t δ u t ] + 1 2 [ δ x t δ u t ] T C t [ δ x t δ u t ] \begin{aligned} \bar{c}(\delta x_t,\delta u_t)