Implementing Screen Recording in Unity (Part 1)

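A few days ago I downloaded an Android project that combines images into a video. It gave me the idea of building a screen-recording feature for Unity, so I got started.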

Android code:

```java
package cn.net.xuefei.unityrec;

import static com.googlecode.javacv.cpp.opencv_core.cvReleaseImage;
import static com.googlecode.javacv.cpp.opencv_highgui.cvLoadImage;

import com.googlecode.javacv.FFmpegFrameRecorder;
import com.googlecode.javacv.FrameRecorder.Exception;
import com.googlecode.javacv.cpp.opencv_core.IplImage;
import com.unity3d.player.UnityPlayer;
import com.unity3d.player.UnityPlayerActivity;

import android.os.Bundle;
import android.os.Environment;
import android.util.Log;

public class MainActivity extends UnityPlayerActivity {

    private static MainActivity ma;

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        ma = this;
    }

    // Entry points called from Unity (AndroidJavaObject.CallStatic); Unity passes every argument as a string.
    public static void StartFusionVideo(final String videoName, final String frameCount, final String videoLength) {
        ma.runOnUiThread(new Runnable() {
            public void run() {
                if (!isStarted()) {
                    int fc = Integer.parseInt(frameCount);
                    long vl = Long.parseLong(videoLength);
                    start(videoName, fc, vl);
                }
            }
        });
    }

    public static void PauseFusionVideo() {
        pause();
    }

    public static void StopFusionVideo() {
        stop();
    }

    // Written from the UI/Unity thread and read by the encoder thread, hence volatile.
    private static volatile int switcher = 0;         // 1 while encoding is running
    private static volatile boolean isPaused = false; // pause flag

    public static int INDEX_MAX = 21;

    // Must be the same directory as Application.persistentDataPath on the Unity side.
    private static String filePathRoot = Environment.getExternalStorageDirectory()
            + "/Android/data/cn.net.xuefei.unityrec/files/";

    public static void start(final String videoName, final int frameCount, final long videoLength) {
        Log.e("start params", "videoName:" + videoName + " frameCount:" + frameCount + " videoLength:" + videoLength);
        if (frameCount <= 0 || videoLength <= 0) {
            Log.e("start", "no frames or zero-length capture, nothing to encode");
            return;
        }
        INDEX_MAX = frameCount - 1;
        switcher = 1;

        new Thread() {
            public void run() {
                // Size the video from the first frame instead of hard-coding 1080x1920,
                // so it matches Screen.width x Screen.height in Unity.
                IplImage first = cvLoadImage(filePathRoot + "0.jpg");
                if (first == null) {
                    Log.e("start", "cannot read " + filePathRoot + "0.jpg");
                    switcher = 0;
                    return;
                }
                FFmpegFrameRecorder recorder = new FFmpegFrameRecorder(
                        filePathRoot + videoName + ".mp4", first.width(), first.height());
                cvReleaseImage(first);
                recorder.setFormat("mp4");

                // Frames per second = frames / seconds, computed in floating point: videoLength / 1000
                // in long arithmetic truncates, and is 0 (division by zero) for captures under 1 s.
                double frameRate = frameCount * 1000.0 / videoLength;
                Log.e("frame rate", frameRate + "");
                recorder.setFrameRate(frameRate);

                try {
                    recorder.start();
                } catch (Exception e) {
                    e.printStackTrace();
                    switcher = 0;
                    return;
                }

                int index = 0;
                while (switcher != 0 && index <= INDEX_MAX) {
                    if (isPaused) {
                        try {
                            Thread.sleep(10); // wait without spinning while paused
                        } catch (InterruptedException e) {
                            break;
                        }
                        continue;
                    }
                    // Load the JPEG written by Unity directly. Going through a Bitmap and a
                    // temporary video.jpg added a decode and a re-encode per frame for no benefit.
                    IplImage image = cvLoadImage(filePathRoot + index + ".jpg");
                    if (image != null) {
                        try {
                            recorder.record(image);
                        } catch (Exception e) {
                            e.printStackTrace();
                        }
                        cvReleaseImage(image);
                    }
                    index++;
                }

                // Stop the recorder and notify Unity once, after the last frame
                // (previously this ran inside the loop, on every iteration).
                try {
                    recorder.stop();
                } catch (Exception e) {
                    e.printStackTrace();
                }
                switcher = 0;
                // Requires a GameObject named "UnityREC" that has the UnityREC script attached.
                UnityPlayer.UnitySendMessage("UnityREC", "FusionOver", videoName);
            }
        }.start();
    }

    public static void stop() {
        switcher = 0;
        isPaused = false;
    }

    public static void pause() {
        if (switcher == 1) {
            isPaused = true;
        }
    }

    public static void restart() {
        if (switcher == 1) {
            isPaused = false;
        }
    }

    public static boolean isStarted() {
        return switcher == 1;
    }

    public static boolean isPaused() {
        return isPaused;
    }
}
```
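The frame-rate line in `start()` is worth isolating. Unity passes the frame count and the capture length in milliseconds. The original `frameCount / (vidoeLength / 1000)` performs integer division twice, so a 3.5 s clip is treated as 3 s and any clip shorter than 1 s throws `ArithmeticException`. Below is a minimal stand-alone sketch of the floating-point version. `FrameRateCalc` and its methods are hypothetical names for illustration, not part of JavaCV.

```java
public class FrameRateCalc {

    // The original formula: both divisions are integer (long) divisions.
    static long frameRateTruncated(int frameCount, long videoLengthMs) {
        return frameCount / (videoLengthMs / 1000); // ArithmeticException when videoLengthMs < 1000
    }

    // Frames per second computed in floating point from a millisecond duration.
    static double frameRate(int frameCount, long videoLengthMs) {
        if (frameCount <= 0 || videoLengthMs <= 0) {
            throw new IllegalArgumentException("frameCount and videoLengthMs must be positive");
        }
        return frameCount * 1000.0 / videoLengthMs;
    }

    public static void main(String[] args) {
        System.out.println(frameRateTruncated(100, 3500)); // 100 / 3 = 33
        System.out.println(frameRate(100, 3500));          // about 28.57
        System.out.println(frameRate(20, 800));            // 25.0; the truncated version divides by zero
    }
}
```

The resulting value can be passed straight to `recorder.setFrameRate(...)`, which takes a `double`.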

Unity code:

```csharp
using UnityEngine;
using System;
using System.Collections;
using System.Collections.Generic;
using System.Diagnostics;
using System.IO;

public class UnityREC : MonoBehaviour
{
    Texture2D tex;
    int width;
    int height;
    // Encoded JPEG frames waiting to be written to disk
    Queue<byte[]> imageBytes = new Queue<byte[]>();
    bool isREC = false;
    int index = 0; // number of frames written so far
    private Rect CutRect = new Rect(0, 0, 1, 1); // capture area, normalized to the screen
    private RenderTexture rt;

    void Start()
    {
        width = Screen.width;
        height = Screen.height;
        tex = new Texture2D(width, height, TextureFormat.RGB24, false);
        // The third argument is the depth-buffer size in bits; 2 is not a valid value, 24 is.
        rt = new RenderTexture(width, height, 24, RenderTextureFormat.ARGB32, RenderTextureReadWrite.Default);
        Camera.main.pixelRect = new Rect(0, 0, width, height);
        // targetTexture is not set here: the camera keeps drawing to the screen
        // and only renders into rt during a capture (see StartRec).
    }

    void Update()
    {
        if (isREC && Time.time > nextFire)
        {
            StartRec();
        }
    }

    float startTime = 0;
    float videoLength = 0;

    void OnGUI()
    {
        // Toggles capturing; videoLength is only set when capturing stops.
        if (GUI.Button(new Rect(0, 0, 300, 300), "Start/stop capture"))
        {
            if (isREC)
            {
                isREC = false;
                videoLength = Time.time - startTime;
            }
            else
            {
                isREC = true;
                startTime = Time.time;
                index = 0;
            }
            return;
        }

        if (GUI.Button(new Rect(0, 300, 300, 300), "Build video"))
        {
#if UNITY_ANDROID
            AndroidJavaClass jc = new AndroidJavaClass("com.unity3d.player.UnityPlayer");
            AndroidJavaObject jo = jc.GetStatic<AndroidJavaObject>("currentActivity");
            // Arguments: video name, frames written, capture length in milliseconds
            jo.CallStatic("StartFusionVideo", new object[] { DateTime.Now.ToFileTime().ToString(), index.ToString(), Convert.ToInt32(videoLength * 1000).ToString() });
#elif UNITY_IPHONE
#endif
        }
    }

    // Writes at most one queued frame per physics step
    void FixedUpdate()
    {
        if (imageBytes.Count > 0)
        {
            File.WriteAllBytes(Application.persistentDataPath + "/" + index + ".jpg", imageBytes.Dequeue());
            index++;
        }
    }

    byte[] imagebytes;
    float fireRate = 0.02F; // minimum interval between captures (at most 50 captures per second)
    float nextFire = 0.0F;

    // Alternative capture path that reads the back buffer at the end of the frame (currently unused)
    IEnumerator StartREC()
    {
        nextFire = Time.time + fireRate;
        yield return new WaitForEndOfFrame();
        tex.ReadPixels(new Rect(0, 0, width, height), 0, 0, false);
        imagebytes = tex.EncodeToJPG(50); // encode as JPEG, quality 50
        imageBytes.Enqueue(imagebytes);
    }

    void StartRec()
    {
        nextFire = Time.time + fireRate;
        Stopwatch sw = Stopwatch.StartNew();

        // Render the main camera into rt just for this capture, then restore on-screen rendering.
        Camera cam = Camera.main;
        cam.targetTexture = rt;
        cam.Render();
        RenderTexture.active = rt;
        // y and height scale with the screen height (the original scaled y by width).
        tex.ReadPixels(new Rect(width * CutRect.x, height * CutRect.y, width * CutRect.width, height * CutRect.height), 0, 0);
        cam.targetTexture = null;
        RenderTexture.active = null;

        imagebytes = tex.EncodeToJPG();
        imageBytes.Enqueue(imagebytes);

        sw.Stop();
        UnityEngine.Debug.Log(string.Format("capture: {0} ms", sw.ElapsedMilliseconds));
    }

    // Called from Android via UnityPlayer.UnitySendMessage("UnityREC", "FusionOver", videoName)
    public void FusionOver(string videoName)
    {
        UnityEngine.Debug.Log("videoName:" + videoName);
    }
}
```
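The two halves communicate only through the file system. Unity's `FixedUpdate` writes `Application.persistentDataPath + "/" + index + ".jpg"`, and the Android side reads `filePathRoot + index + ".jpg"` for indices 0 through `frameCount - 1`. If the two directories differ, or a frame is missing, the encoder finds nothing to record. The sketch below counts how many consecutive frames `0.jpg`, `1.jpg`, ... actually exist in a directory, which could be logged before encoding starts. `FrameFiles` and `countContiguousFrames` are hypothetical names.

```java
import java.io.File;
import java.io.IOException;
import java.nio.file.Files;

public class FrameFiles {

    // Counts consecutive frames 0.jpg, 1.jpg, ... in dir, stopping at the first gap.
    static int countContiguousFrames(File dir) {
        int n = 0;
        while (new File(dir, n + ".jpg").isFile()) {
            n++;
        }
        return n;
    }

    public static void main(String[] args) throws IOException {
        File dir = Files.createTempDirectory("frames").toFile();
        for (int i : new int[] {0, 1, 2, 4}) { // frame 3 is deliberately missing
            new File(dir, i + ".jpg").createNewFile();
        }
        int expected = 5; // the frame count Unity would report
        int present = countContiguousFrames(dir);
        System.out.println(present + " of " + expected + " frames present");
    }
}
```

On the device, `dir` would be the `filePathRoot` directory, and a mismatch with the frame count passed from Unity points at a path or timing problem rather than at FFmpeg.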

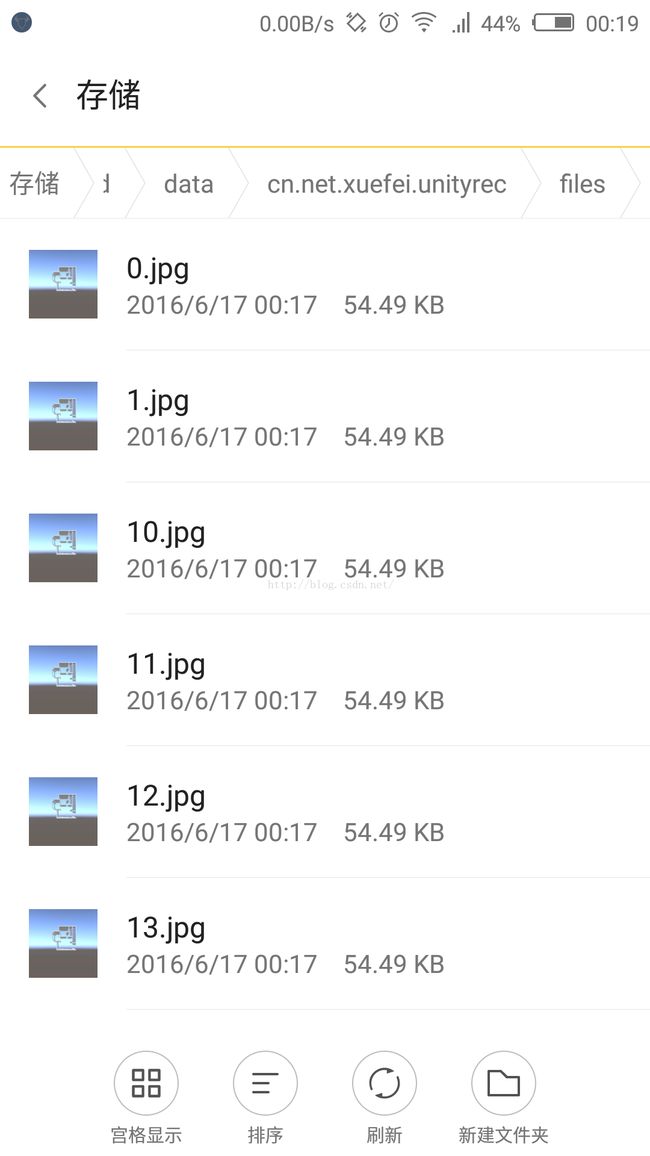

The current state: capturing screenshots works, but it is very slow. Capturing a single frame takes more than 300 ms.

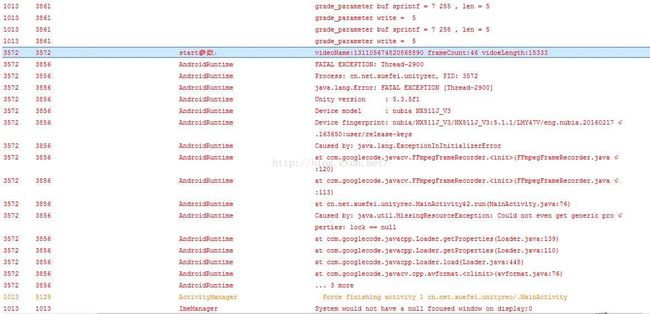
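The post doesn't break down where the 300 ms goes. In `StartRec()`, however, the main thread performs a synchronous GPU readback (`ReadPixels`) and JPEG encoding (`EncodeToJPG`), and `FixedUpdate` adds a disk write per frame, so all of this work counts against the frame time. A common mitigation is to keep only the unavoidable work on the capture thread and hand the rest to a background worker through a queue. The sketch below shows that producer/consumer shape in plain Java using `java.util.concurrent.BlockingQueue`. It is not Unity code, `FrameWriter` is a hypothetical name, and the worker only writes the bytes it receives. Whether encoding itself can move off Unity's main thread depends on which image-conversion APIs your Unity version allows there.

```java
import java.io.File;
import java.io.IOException;
import java.nio.file.Files;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

public class FrameWriter implements Runnable {

    // Sentinel that tells the worker no more frames are coming.
    private static final byte[] END = new byte[0];

    private final BlockingQueue<byte[]> queue = new LinkedBlockingQueue<>();
    private final File dir;
    private int written = 0;

    FrameWriter(File dir) {
        this.dir = dir;
    }

    // Producer side: cheap, only hands the frame to the queue.
    void submit(byte[] frame) {
        queue.add(frame);
    }

    // Asks the worker to stop after draining everything already queued.
    void finish() {
        queue.add(END);
    }

    int written() {
        return written;
    }

    // Consumer side: writes frames as 0.jpg, 1.jpg, ... in submission order.
    @Override
    public void run() {
        try {
            while (true) {
                byte[] frame = queue.take();
                if (frame == END) {
                    return;
                }
                Files.write(new File(dir, written + ".jpg").toPath(), frame);
                written++;
            }
        } catch (InterruptedException | IOException e) {
            throw new RuntimeException(e);
        }
    }

    public static void main(String[] args) throws Exception {
        File dir = Files.createTempDirectory("frames").toFile();
        FrameWriter writer = new FrameWriter(dir);
        Thread worker = new Thread(writer, "frame-writer");
        worker.start();
        for (int i = 0; i < 3; i++) {
            writer.submit(new byte[] {(byte) i}); // stand-in for encoded frame bytes
        }
        writer.finish();
        worker.join(); // after join, the worker's writes and counter are visible here
        System.out.println(writer.written() + " frames written to " + dir);
    }
}
```

The sentinel lets the worker drain every frame that was already queued before it exits, which plays the same role as waiting for Unity's `imageBytes` queue to empty before starting the merge.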

The call to merge the frames into a video fails as well.
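The post doesn't show the error, so the cause of the failure is unknown. The arguments are one cheap thing to rule out. `StartFusionVideo` receives three strings from C# and parses them inside a `Runnable`. If "Build video" is pressed while capture is still running, `videoLength` is still 0, and with the original integer frame-rate formula that becomes a division by zero on the Android side. A frame count of 0 leaves nothing to encode. Below is a hypothetical validation helper, in plain Java, that reports the problem instead of throwing.

```java
public class FusionArgs {

    // Returns null if the arguments look usable, otherwise a description of the problem.
    static String validate(String videoName, String frameCount, String videoLengthMs) {
        if (videoName == null || videoName.isEmpty()) {
            return "empty video name";
        }
        int frames;
        long lengthMs;
        try {
            frames = Integer.parseInt(frameCount);
            lengthMs = Long.parseLong(videoLengthMs);
        } catch (NumberFormatException e) {
            return "not a number: " + e.getMessage();
        }
        if (frames <= 0) {
            return "no frames captured";
        }
        if (lengthMs <= 0) {
            return "capture length is " + lengthMs + " ms; stop capturing before building the video";
        }
        return null;
    }

    public static void main(String[] args) {
        System.out.println(validate("131", "120", "4000")); // null
        System.out.println(validate("131", "120", "0"));
        System.out.println(validate("131", "abc", "4000"));
    }
}
```

Logging the returned message with `Log.e` before calling `start()` would at least show whether the failure happens before FFmpeg is involved.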

I wonder if my all-knowing fellow bloggers have any suggestions…

Click here to download the project