Object Tracking with the Kalman Filter in MATLAB: from the MathWorks technical documentation

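Contents

1. Preface

2. Main text

2.1 Introduction

2.2 Challenges of object tracking

2.3 Tracking a single object with a Kalman filter

2.4 Kalman filter parameter configuration

2.5 Multiple object tracking

3. Functions used in this example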

-

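1. Preface

This article is based on the official MathWorks technical document "Using Kalman Filter for Object Tracking". I translated some of the English comments according to my own understanding and added a few comments of my own. For the basic principles of Kalman filtering, see the Bilibili video "卡尔曼滤波器的原理以及在matlab中的实现" (The principle of the Kalman filter and its implementation in MATLAB). Other materials that may be useful, along with the .m files I put together, are at the end of the article.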

-

2. Main text

-

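2.1 Introduction

The Kalman filter is widely used in electromechanical control, navigation, computer vision, and time-series econometrics. The official MathWorks technical document shows how to use a Kalman filter for object tracking, focusing on the following aspects.

- Predicting the object's future location (trajectory prediction);

- Reducing the noise introduced by inaccurate detections;

- Facilitating the process of associating multiple objects with their tracks (I do not fully understand this one yet).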

-

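2.2 Challenges of object tracking

The documentation provides a video, which can be found in the folder C:\Program Files\MATLAB\R20**\toolbox\vision\visiondata under the file name "singleball.mp4". In the video, a ball moves from the left side of the frame to the right, as shown below: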

singleball.mp4

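The video can be displayed with the "showDetections()" function from the documentation; the .m file for showDetections() is also attached at the end.

showDetections();

After processing by showDetections(), the ball appears as a cluster of bright white pixels. showDetections() uses vision.ForegroundDetector, a foreground detector that finds foreground objects in images taken by a stationary camera. The processed video is shown below: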

singleBall1.avi

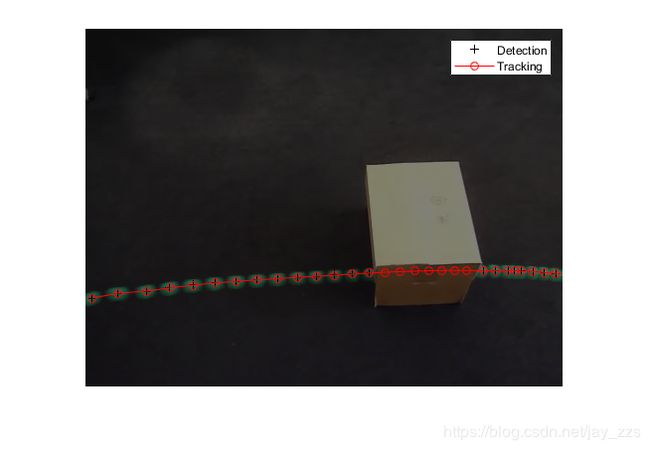

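Because the contrast between the edge of the ball and the floor is low, foreground detection introduces noise and picks up only part of the ball. Superimposing all frames onto a single image gives the detected trajectory of the ball, as shown in the figure below.

The detection results expose two problems:

- The center of the detected region does not coincide exactly with the center of the ball, so there is an error in locating the ball;

- The ball cannot be detected at all times, for example when it is occluded by the box.

Both problems can be addressed with a Kalman filter.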

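2.3 Tracking a single object with a Kalman filter

The function trackSingleObject shows how to do this, including:

- Creating a Kalman filter with configureKalmanFilter;

- Using the predict and correct methods in sequence to reduce the noise in the tracking system;

- Using the predict method alone to estimate the ball's location when it is occluded.

configureKalmanFilter simplifies the construction of the Kalman filter. trackSingleObject also contains nested functions that implement parts of the work. The following global variables are used to pass data between the main function and the nested functions.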

frame = []; % A video frame

detectedLocation = []; % The detected location

trackedLocation = []; % The tracked location

label = ''; % Label for the ball

utilities = []; % Utilities used to process the video

The main body of the trackSingleObject function, which tracks a single object, is shown below (the nested helper functions are listed separately later):

function trackSingleObject(param)

% Create utilities used for reading video, detecting moving objects,

% and displaying the results.

utilities = createUtilities(param); % creates the System objects for reading video, displaying video, extracting foreground objects, and analyzing connected components

isTrackInitialized = false;

while hasFrame(utilities.videoReader) % hasFrame checks whether another video frame is available to read; if so, continue

frame = readFrame(utilities.videoReader);

% Detect the ball.

[detectedLocation, isObjectDetected] = detectObject(frame);

if ~isTrackInitialized

if isObjectDetected

% Initialize a track by creating a Kalman filter when the ball is

% detected for the first time.

% The last three arguments set the P, Q, and R matrices, in that order.

initialLocation = computeInitialLocation(param, detectedLocation);

kalmanFilter = configureKalmanFilter(param.motionModel, ...

initialLocation, param.initialEstimateError, ...

param.motionNoise, param.measurementNoise);

isTrackInitialized = true;

% Correct the state and the state estimation error covariance using the Kalman filter.

trackedLocation = correct(kalmanFilter, detectedLocation);

label = 'Initial';

else

trackedLocation = [];

label = '';

end

else % The track has already been initialized.

% Use the Kalman filter to track the ball.

if isObjectDetected % The ball was detected.

% Reduce the measurement noise by calling predict followed by

% correct.

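% predict updates the filter's state estimate and state estimation error covariance

% (the a priori state and covariance); its return value is not used here.

predict(kalmanFilter);

% Use the updated filter and the detected location to estimate the actual location.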

trackedLocation = correct(kalmanFilter, detectedLocation);

label = 'Corrected';

else % The ball was missing.

% Predict the ball's location.

% No measurement is available, so the predicted state is returned as the tracked location.

trackedLocation = predict(kalmanFilter);

label = 'Predicted';

end

end

annotateTrackedObject();

end % while

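showTrajectory();

The Kalman filter handles two distinct cases during processing:

- When the ball is detected, the Kalman filter first predicts its state at the current frame (the a priori estimate) and then uses the newly detected location to correct that state (the a posteriori estimate), producing a filtered location;

- When the ball is missing, the Kalman filter relies solely on its previous state to predict the ball's current location.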
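The two cases above can be sketched with plain matrices, without the toolbox. This is only a minimal illustration, assuming a one-dimensional constant-velocity model with state [position; velocity]; the matrices A, H, Q, R and the measurement values are made up and are not the values that configureKalmanFilter builds internally. Unlike trackSingleObject, the sketch also runs a predict step before the very first correction.

```matlab
% Minimal 1-D constant-velocity Kalman filter (illustrative values only).
A = [1 1; 0 1];        % state transition: position += velocity each frame
H = [1 0];             % only the position is measured
Q = 0.01 * eye(2);     % motion (process) noise covariance, assumed
R = 4;                 % measurement noise variance, assumed
x = [0; 0];            % initial state estimate [position; velocity]
P = 100 * eye(2);      % large initial estimate error covariance

% Hypothetical detections; NaN marks frames where the ball is missing.
z = [1.2, 1.9, 3.1, NaN, NaN, 6.2];
tracked = zeros(size(z));

for k = 1:numel(z)
    % Predict: propagate the state and its covariance (a priori estimate).
    x = A * x;
    P = A * P * A' + Q;

    if ~isnan(z(k))
        % Correct: blend prediction and measurement (a posteriori estimate).
        K = P * H' / (H * P * H' + R);   % Kalman gain
        x = x + K * (z(k) - H * x);
        P = (eye(2) - K * H) * P;
    end
    % When the detection is missing, the prediction is used as is.
    tracked(k) = H * x;
end

disp(tracked);
```

During the two missing frames the tracked position simply advances by the last estimated velocity, which is the behavior labeled 'Predicted' in trackSingleObject.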

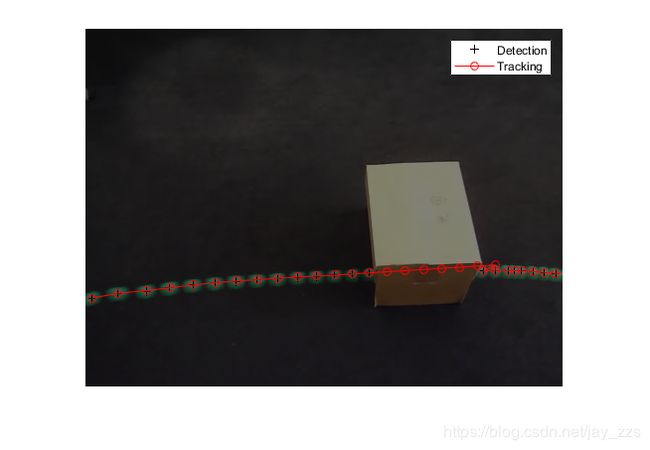

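Superimposing all video frames gives the ball's trajectory, as shown in the figure below:

trackSingleObject requires an input argument param, so you first call getDefaultParameters to obtain param and then pass it to trackSingleObject. I created a new function, kalmanFilterForTracking, to do this.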

function kalmanFilterForTracking

trackSingleObject(getDefaultParameters)

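end

2.4 Kalman filter parameter configuration

Configuring a Kalman filter requires a fairly deep understanding of how Kalman filtering works, mainly in order to set several matrices. MATLAB's configureKalmanFilter also lets you choose the motion model (MotionModel); if the motion model does not match the actual motion, the tracking results will be wrong as well.

- configureKalmanFilter takes five input arguments and returns a Kalman filter object, as follows:

kalmanFilter = configureKalmanFilter(MotionModel, InitialLocation, ...

InitialEstimateError, MotionNoise, MeasurementNoise)

- The motion model must correspond to the physical motion of the object; it can be set to constant velocity or constant acceleration. The example below sets the motion model to constant velocity, which yields a suboptimal configuration.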

param = getDefaultParameters(); % get parameters that work well

param.motionModel = 'ConstantVelocity'; % switch from ConstantAcceleration

% to ConstantVelocity

% After switching motion models, drop noise specification entries

% corresponding to acceleration.

param.initialEstimateError = param.initialEstimateError(1:2);

param.motionNoise = param.motionNoise(1:2);

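trackSingleObject(param); % visualize the results

The result of this configuration is shown in the figure below:

The ball emerges at a location quite different from the predicted one. From the moment it is released, the ball decelerates steadily because of friction with the carpet, so its motion is closer to constant acceleration. If you keep the constant velocity model, the tracking results will be suboptimal no matter what values you choose for the other parameters.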
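To make the difference between the two motion models concrete, the sketch below builds a one-dimensional state transition matrix for each and propagates the same starting state. It assumes a unit time step and a state ordering of [position; velocity] or [position; velocity; acceleration]; the numbers are illustrative, and the matrices that configureKalmanFilter builds internally may differ in detail.

```matlab
% One-dimensional state transition matrices, unit time step (assumed).
A_cv = [1 1;
        0 1];          % constant velocity: [position; velocity]
A_ca = [1 1 0.5;
        0 1 1;
        0 0 1];        % constant acceleration: [position; velocity; acceleration]

x_cv = [0; 10];        % starts at 0, moving at 10 px/frame
x_ca = [0; 10; -1];    % same start, decelerating by 1 px/frame^2 (friction-like)

nSteps = 5;
for k = 1:nSteps
    x_cv = A_cv * x_cv;
    x_ca = A_ca * x_ca;
end

% The constant-velocity prediction overshoots a decelerating object.
fprintf('CV position: %.1f, CA position: %.1f\n', x_cv(1), x_ca(1));
```

The gap between the two predicted positions grows with every frame in which no measurement is available, which is why the mismatch is most visible right after the ball reappears from behind the box.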

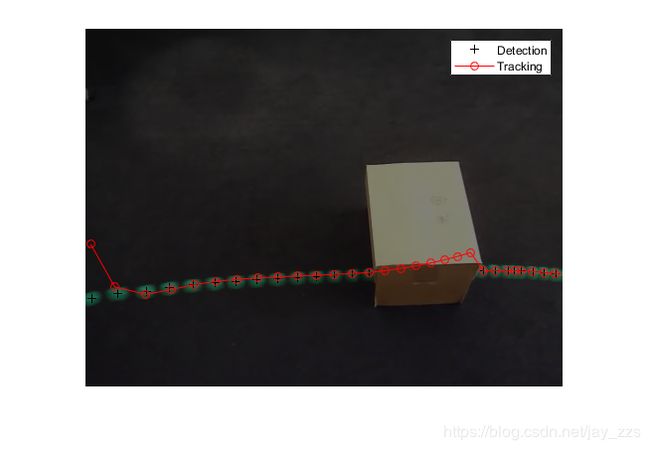

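- InitialLocation is usually set to the location of the first detection, but it can be set to other values. InitialEstimateError should be set to relatively large values, because an initial state derived from a single detection may be very noisy. The following shows the effect of misconfiguring these parameters.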

param = getDefaultParameters(); % get parameters that work well

param.initialLocation = [0, 0]; % location that's not based on an actual detection

param.initialEstimateError = 100*ones(1,3); % use relatively small values

trackSingleObject(param); % visualize the results

With misconfigured parameters, the Kalman filter needs several steps before its output converges to the object's actual trajectory.

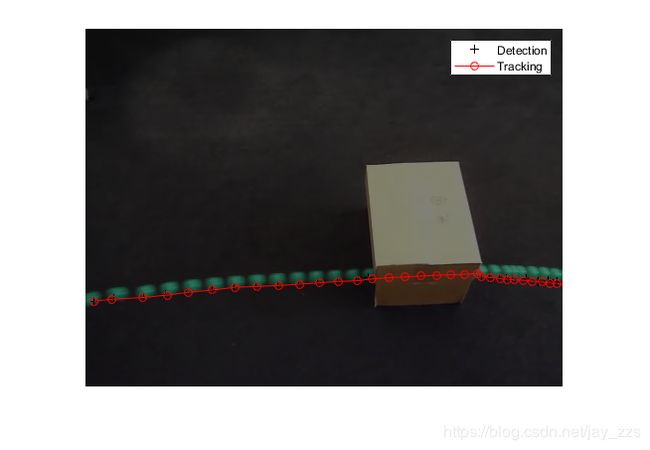

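- Set MeasurementNoise according to the detector's accuracy: use larger values for a less accurate detector. With a larger measurement noise, the Kalman filter's output relies more on its internal state than on the incoming measurements, which compensates for the detection noise.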

param = getDefaultParameters();

param.segmentationThreshold = 0.0005; % smaller value resulting in noisy detections

param.measurementNoise = 12500; % increase the value to compensate

% for the increase in measurement noise

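trackSingleObject(param); % visualize the results

- Objects typically do not move with exactly constant velocity or constant acceleration. Use MotionNoise to specify how much the actual motion deviates from the ideal motion model. When you increase MotionNoise, the Kalman filter's output relies more on the incoming measurements than on its internal state. You can experiment with MotionNoise yourself; it is not discussed further here. To set other advanced parameters, use the vision.KalmanFilter object.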
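The trade-off between MeasurementNoise and MotionNoise can be checked with a scalar Kalman filter. The sketch below uses made-up noise values and a simple random-walk model (not the model used in this example); it iterates the covariance update until the gain settles and reports the resulting gain K. A gain near 1 means the output follows the measurements, and a gain near 0 means it follows the internal state.

```matlab
% Scalar random-walk model: x_k = x_{k-1} + w,  z_k = x_k + v  (illustrative).
% Each row is one case: [motion noise Q, measurement noise R], made-up values.
cases = [  1,   25;     % baseline
           1, 2500;     % larger measurement noise
         100,   25];    % larger motion noise
gains = zeros(size(cases, 1), 1);

for c = 1:size(cases, 1)
    Q = cases(c, 1);
    R = cases(c, 2);
    P = 1e5;                       % large initial estimate error
    for k = 1:200                  % iterate until the gain settles
        P = P + Q;                 % predict step (state transition = 1)
        K = P / (P + R);           % Kalman gain
        P = (1 - K) * P;           % correct step
    end
    gains(c) = K;
    fprintf('Q = %5g, R = %5g  ->  K = %.3f\n', Q, R, K);
end
```

Raising R lowers the gain relative to the baseline, and raising Q raises it, which matches the behavior described in the two items above.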

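2.5 Multiple object tracking

Tracking multiple objects poses several additional challenges:

- Multiple detections must be associated with the correct tracks;

- New objects appearing in the scene must be handled;

- Object identity must be maintained when multiple objects merge into a single detection.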

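The vision.KalmanFilter object, together with the assignDetectionsToTracks function, can help with the following:

- Assigning detections to tracks;

- Determining whether a detection corresponds to a new object, in other words, whether a new track should be created;

- Just as with an occluded single object, prediction can help separate objects that are close to each other.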
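As a rough illustration of the association step, the sketch below builds the cost matrix that such an assignment would start from: the Euclidean distance between each track's predicted location and each detection. All coordinates are made up. With the Computer Vision Toolbox installed, this matrix could be passed to assignDetectionsToTracks together with a cost of non-assignment; that call is left commented out so the sketch runs without the toolbox, and the per-track minimum shown here is only a naive stand-in for it.

```matlab
% Predicted track locations and new detections, one [x, y] row each (made up).
predictedTracks = [10, 20;
                   50, 60];
detections      = [52, 61;
                   11, 19;
                   90, 90];

nTracks = size(predictedTracks, 1);
nDetections = size(detections, 1);
costMatrix = zeros(nTracks, nDetections);

for i = 1:nTracks
    for j = 1:nDetections
        % Cost = Euclidean distance between track i and detection j.
        costMatrix(i, j) = norm(predictedTracks(i, :) - detections(j, :));
    end
end

% Cheapest detection for each track (a naive stand-in, not the optimal
% assignment that assignDetectionsToTracks computes).
[~, nearest] = min(costMatrix, [], 2);
disp(costMatrix);
disp(nearest');

% With the toolbox available (costOfNonAssignment is an assumed value):
% costOfNonAssignment = 20;
% [assignments, unassignedTracks, unassignedDetections] = ...
%     assignDetectionsToTracks(costMatrix, costOfNonAssignment);
```

In this made-up data the third detection is far from both tracks, so it is the kind of detection that would be left unassigned and could start a new track.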

For more information, see the example "Motion-Based Multiple Object Tracking".

3. Functions used in this example

- Parameter settings

function param = getDefaultParameters

param.motionModel = 'ConstantAcceleration';

param.initialLocation = 'Same as first detection';

param.initialEstimateError = 1E5 * ones(1, 3); % initial estimate error variances (P matrix)

param.motionNoise = [25, 10, 1]; % noise of the motion model itself (Q matrix)

param.measurementNoise = 25; % measurement noise variance (R matrix)

param.segmentationThreshold = 0.05;

end

- Detecting the ball in the video sequence

function [detection, isObjectDetected] = detectObject(frame)

grayImage = rgb2gray(im2single(frame));

% step runs the System object's algorithm; the call below is equivalent to

% utilities.foregroundDetector(grayImage); the later step calls work the same way.

utilities.foregroundMask = step(utilities.foregroundDetector, grayImage);

detection = step(utilities.blobAnalyzer, utilities.foregroundMask);

if isempty(detection)

isObjectDetected = false;

else

% To simplify the tracking process, only use the first detected object.

% Note: vision.BlobAnalysis can return several connected regions, with properties such as area and centroid.

detection = detection(1, :);

isObjectDetected = true;

end

end

- Displaying the current detection and tracking results

function annotateTrackedObject()

accumulateResults();

% Combine the foreground mask with the current video frame in order to

% show the detection result.

combinedImage = max(repmat(utilities.foregroundMask, [1,1,3]), im2single(frame));

if ~isempty(trackedLocation)

shape = 'circle';

region = trackedLocation;

region(:, 3) = 5;

combinedImage = insertObjectAnnotation(combinedImage, shape, ...

region, {label}, 'Color', 'red');

end

step(utilities.videoPlayer, combinedImage);

end

- Showing the ball's trajectory by superimposing all video frames

function showTrajectory

% Close the window which was used to show individual video frame.

uiscopes.close('All');

% Create a figure to show the processing results for all video frames.

figure; imshow(utilities.accumulatedImage/2+0.5); hold on;

plot(utilities.accumulatedDetections(:,1), ...

utilities.accumulatedDetections(:,2), 'k+');

if ~isempty(utilities.accumulatedTrackings)

plot(utilities.accumulatedTrackings(:,1), ...

utilities.accumulatedTrackings(:,2), 'r-o');

legend('Detection', 'Tracking');

end

end

- Accumulating video frames, detected locations, and tracked locations in order to show the ball's trajectory

function accumulateResults()

utilities.accumulatedImage = max(utilities.accumulatedImage, frame);

utilities.accumulatedDetections ...

= [utilities.accumulatedDetections; detectedLocation];

utilities.accumulatedTrackings ...

= [utilities.accumulatedTrackings; trackedLocation];

end

- For illustration purposes, selecting the initial location used by the Kalman filter

function loc = computeInitialLocation(param, detectedLocation)

if strcmp(param.initialLocation, 'Same as first detection')

loc = detectedLocation;

else

loc = param.initialLocation;

end

end

- Creating utilities for reading video, detecting moving objects, and displaying the results

function utilities = createUtilities(param)

% Create System objects for reading video, displaying video, extracting

% foreground, and analyzing connected components.

utilities.videoReader = VideoReader('singleball.mp4');

utilities.videoPlayer = vision.VideoPlayer('Position', [100,100,500,400]);

utilities.foregroundDetector = vision.ForegroundDetector(...

'NumTrainingFrames', 10, 'InitialVariance', param.segmentationThreshold); % foreground detection based on a Gaussian mixture model

utilities.blobAnalyzer = vision.BlobAnalysis('AreaOutputPort', false, ...

'MinimumBlobArea', 70, 'CentroidOutputPort', true); % computes statistics for connected regions (blobs)

utilities.accumulatedImage = 0;

utilities.accumulatedDetections = zeros(0, 2);

utilities.accumulatedTrackings = zeros(0, 2);

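end

Resources for this article: the program (.m files) built from the MATLAB single-object tracking documentation, with some of the English comments translated and some comments added. The program runs as is and can save the result video.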