Using OpenCV 4.1 on Android via the NDK: Face Detection and Model Training

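Contents

- I. Preface
- II. Setting up the OpenCV 4.1.0 environment
  - 1. NDK setup and OpenCV download
  - 2. Create the project
  - 3. Edit the app module's build.gradle
  - 4. Edit CMakeLists.txt
  - 5. Refresh the C++ project from the Build menu
- III. Face detection with the NDK
  - 1. A wrapper around the camera
  - 2. MainActivity
  - 3. FaceDetectionActivity
  - 4. Native method implementation
- IV. Training samples on the backend
- V. Summary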

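I. Preface

One day my manager asked whether our app's login could hook into the phone's face-unlock feature, so that users could sign in with their face. I searched through a lot of articles and found no API that exposes this (perhaps I was searching the wrong way; if anyone knows of one, please point me to it). Then I had an idea: could a model trained on a deliberately skewed ("unhealthy") sample set, with positive samples taken only from the face of the person logging in, be used for detection, so that it detects only that specific person's face? This post records what I learned along the way. Corrections and additions from more experienced readers are welcome.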

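II. Setting up the OpenCV 4.1.0 environment

For the detailed steps, see this article: https://cloud.tencent.com/developer/article/1472652

Only the key steps are picked out here:

1. NDK setup and OpenCV download

- NDK setup: in Android Studio, go to Settings -> Appearance & Behavior -> System Settings -> Android SDK -> SDK Tools, then select and download LLDB, CMake, and NDK.

- OpenCV download: https://opencv.org/releases/ (choose the Android package). This article uses 4.1.0; the latest release at the time of writing is 4.1.1. The setup should work the same way for later versions, but I have not tested that.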

2. Create the project

3. Edit the app module's build.gradle

Add the following configuration under android and android -> defaultConfig:

android {
    compileSdkVersion 28
    defaultConfig {
        ....
        externalNativeBuild {
            cmake {
                cppFlags "-std=c++11"
                // Added:
                // CPU architectures the app supports; only armeabi-v7a here
                abiFilters "armeabi-v7a"
            }
        }
        // Added:
        ndk {
            // ABIs of the .so libraries to package
            abiFilters 'armeabi-v7a'
        }
    }
    // Added:
    sourceSets {
        main {
            // Points at the .so libraries (libopencv_java4.so) inside the
            // OpenCV-android-sdk directory downloaded above.
            // Libraries in this directory are loaded at runtime and packaged into the APK.
            jniLibs.srcDirs = ['E:/tools/OpenCV-android-sdk/sdk/native/libs']
        }
    }
    ......
}

4. Edit CMakeLists.txt

cmake_minimum_required(VERSION 3.4.1)

#------ Added content starts here ------------
# When on, the generated Makefiles are verbose (full build commands are shown)
set(CMAKE_VERBOSE_MAKEFILE on)

# Define the variable opencvlibs so later commands can locate the library files
set(opencvlibs "E:/tools/OpenCV-android-sdk/sdk/native/libs")

# Path to the OpenCV headers
# This path and the one above both point into the downloaded OpenCV-android-sdk
include_directories(E:/tools/OpenCV-android-sdk/sdk/native/jni/include)

# Declare the prebuilt OpenCV shared library
add_library(libopencv_java4 SHARED IMPORTED)

# Tell CMake where the imported library file is located
set_target_properties(libopencv_java4 PROPERTIES IMPORTED_LOCATION
        "${opencvlibs}/${ANDROID_ABI}/libopencv_java4.so")
#---------------------------------------------

add_library(native-lib SHARED native-lib.cpp)

find_library(log-lib log)

target_link_libraries(native-lib
        # Add these two
        libopencv_java4
        android
        ${log-lib})

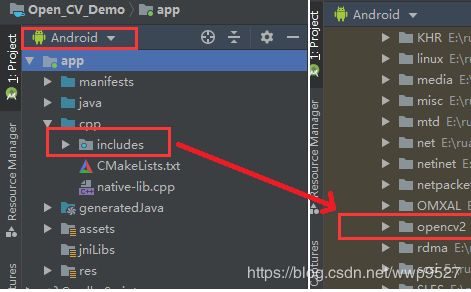

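5. Refresh the C++ project from the Build menu

- Click Build -> Refresh Linked C++ Projects.

- To check whether the setup is complete, select the Android view in the project pane on the left.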

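III. Face detection with the NDK

1. A wrapper around the camera

Wrap the camera in a helper class that provides methods to switch cameras, start the preview, stop the preview, and receive a callback for each preview frame.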

import android.graphics.ImageFormat;
import android.graphics.SurfaceTexture;
import android.hardware.Camera;

import java.io.IOException;

public class CameraHelper implements Camera.PreviewCallback {
    private static final String TAG = "CameraHelper";
    // Preview width and height; must be a size the camera supports
    public static final int WIDTH = 640;
    public static final int HEIGHT = 480;
    // Camera id, distinguishes the front and back cameras
    private int mCameraId;
    private Camera mCamera;
    // Frame buffer
    private byte[] buffer;
    // Preview callback supplied by the caller
    private Camera.PreviewCallback mPreviewCallback;

    public CameraHelper(int cameraId) {
        this.mCameraId = cameraId;
    }

    /**
     * Switch between the front and back cameras.
     */
    public void switchCamera() {
        if (mCameraId == Camera.CameraInfo.CAMERA_FACING_BACK) {
            mCameraId = Camera.CameraInfo.CAMERA_FACING_FRONT;
        } else {
            mCameraId = Camera.CameraInfo.CAMERA_FACING_BACK;
        }
        stopPreview();
        startPreview();
    }

    /**
     * Start the preview.
     */
    public void startPreview() {
        try {
            // Open the camera identified by mCameraId
            mCamera = Camera.open(mCameraId);
            // Configure the camera; first fetch its current parameters
            Camera.Parameters parameters = mCamera.getParameters();
            // Deliver preview frames in NV21 format
            parameters.setPreviewFormat(ImageFormat.NV21);
            // Set the preview width and height
            parameters.setPreviewSize(WIDTH, HEIGHT);
            mCamera.setParameters(parameters);
            // Allocate the frame buffer (NV21 uses 1.5 bytes per pixel)
            buffer = new byte[WIDTH * HEIGHT * 3 / 2];
            // Register the buffer and the frame listener
            mCamera.addCallbackBuffer(buffer);
            mCamera.setPreviewCallbackWithBuffer(this);
            // Preview target: an off-screen SurfaceTexture, since drawing is done natively
            SurfaceTexture surfaceTexture = new SurfaceTexture(11);
            mCamera.setPreviewTexture(surfaceTexture);
            // Start the preview
            mCamera.startPreview();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    /**
     * Stop the preview.
     */
    public void stopPreview() {
        if (mCamera != null) {
            // Clear the preview callback
            mCamera.setPreviewCallback(null);
            // Stop the preview
            mCamera.stopPreview();
            // Release the camera
            mCamera.release();
            // Drop the reference so it can be garbage collected
            mCamera = null;
        }
    }

    /**
     * Get the current camera id.
     */
    public int getCameraId() {
        return mCameraId;
    }

    /**
     * Set the callback that receives preview frames.
     */
    public void setPreviewCallback(Camera.PreviewCallback previewCallback) {
        this.mPreviewCallback = previewCallback;
    }

    /**
     * Called for each preview frame.
     * @param data   the frame data (NV21)
     * @param camera the camera instance
     */
    @Override
    public void onPreviewFrame(byte[] data, Camera camera) {
        // The frame arrives in the sensor's orientation, so it appears rotated
        if (mPreviewCallback != null) {
            mPreviewCallback.onPreviewFrame(data, camera);
        }
        // Hand the buffer back so the next frame can be delivered
        camera.addCallbackBuffer(buffer);
    }
}
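The buffer size WIDTH * HEIGHT * 3 / 2 follows from the NV21 layout: a full-resolution Y plane (one byte per pixel) followed by an interleaved VU plane subsampled 2x2. Below is a small plain-Java sketch of that arithmetic. The class and method names are my own illustration and are not part of the project above.

```java
public final class Nv21Layout {
    private Nv21Layout() {}

    /** Bytes in the luma (Y) plane: one byte per pixel. */
    public static int yPlaneSize(int width, int height) {
        return width * height;
    }

    /** Total bytes in one NV21 frame: the Y plane plus the interleaved VU plane. */
    public static int frameSize(int width, int height) {
        if (width <= 0 || height <= 0 || width % 2 != 0 || height % 2 != 0) {
            throw new IllegalArgumentException("NV21 needs positive, even dimensions");
        }
        return width * height * 3 / 2;
    }

    /** Offset of the V sample shared by pixel (x, y); the matching U sample is the next byte. */
    public static int vOffset(int width, int height, int x, int y) {
        return yPlaneSize(width, height) + (y / 2) * width + (x / 2) * 2;
    }
}
```

For the 640x480 preview used here, frameSize gives 460800 bytes, which is also why the native side wraps the data in a Mat with height + height / 2 rows.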

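2. MainActivity

- Request the camera and storage read/write permissions at runtime.

- The layout provides only a few buttons: one opens the sample-collection screen, one opens the face-detection screen, one uploads the collected face samples, and one downloads the trained face model.

- The samples are zipped before they are uploaded.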

@Override
protected void onCreate(Bundle savedInstanceState) {
    super.onCreate(savedInstanceState);
    setContentView(R.layout.activity_main);
    if (Build.VERSION.SDK_INT >= 23) {
        int REQUEST_CODE_CONTACT = 101;
        String[] permissions = {Manifest.permission.WRITE_EXTERNAL_STORAGE, Manifest.permission.CAMERA};
        // Check whether each permission has been granted
        for (String str : permissions) {
            if (this.checkSelfPermission(str) != PackageManager.PERMISSION_GRANTED) {
                // Request all of them in one call, then stop checking
                this.requestPermissions(permissions, REQUEST_CODE_CONTACT);
                break;
            }
        }
    }
    // Collect samples
    findViewById(R.id.btn_face_collect).setOnClickListener(new View.OnClickListener() {
        @Override
        public void onClick(View v) {
            Intent intent = new Intent(MainActivity.this, FaceDetectionActivity.class);
            intent.putExtra("isCollect", true);
            startActivity(intent);
        }
    });
    // Detect
    findViewById(R.id.button).setOnClickListener(new View.OnClickListener() {
        @Override
        public void onClick(View v) {
            Intent intent = new Intent(MainActivity.this, FaceDetectionActivity.class);
            intent.putExtra("isCollect", false);
            startActivity(intent);
        }
    });
    // Upload
    findViewById(R.id.btn_up_load).setOnClickListener(new View.OnClickListener() {
        @Override
        public void onClick(View v) {
            new Thread(new Runnable() {
                @Override
                public void run() {
                    long startTime = System.currentTimeMillis();
                    try {
                        // Zip the sample folder
                        HttpAssist.ZipFolder(
                                Environment.getExternalStorageDirectory() +
                                        File.separator + "info",
                                Environment.getExternalStorageDirectory() +
                                        File.separator + "info.zip");
                    } catch (Exception e) {
                        e.printStackTrace();
                    }
                    long zipTime = System.currentTimeMillis();
                    Log.e("Zip time", "zipTime=" + (zipTime - startTime));
                    File file = new File(Environment.getExternalStorageDirectory() +
                            File.separator + "info.zip");
                    if (!file.exists()) {
                        return;
                    }
                    // Upload the archive
                    HttpAssist.uploadFile(file);
                    // The upload is currently rather slow and could be optimized
                    Log.e("Upload time", "uploadFile=" + (
                            System.currentTimeMillis() - zipTime));
                }
            }).start();
        }
    });
    // Download the trained face model
    findViewById(R.id.btn_down_load).setOnClickListener(new View.OnClickListener() {
        @Override
        public void onClick(View v) {
            new Thread(new Runnable() {
                @Override
                public void run() {
                    HttpAssist.downloadFile();
                }
            }).start();
        }
    });
}
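HttpAssist.ZipFolder is not shown in this post. As a rough idea of what such a helper does, here is a minimal sketch using java.util.zip. The class name, the method signature, and the choice to store entry names relative to the source folder are all my assumptions, and java.nio.file requires API level 26 or higher on Android.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;
import java.util.zip.ZipEntry;
import java.util.zip.ZipOutputStream;

public final class FolderZipper {
    private FolderZipper() {}

    /** Zips every regular file under srcDir into zipFile; returns the number of entries written. */
    public static int zipFolder(Path srcDir, Path zipFile) throws IOException {
        List<Path> files;
        // Collect the files first so the walk is closed before writing starts
        try (Stream<Path> walk = Files.walk(srcDir)) {
            files = walk.filter(Files::isRegularFile).sorted().collect(Collectors.toList());
        }
        try (OutputStream out = Files.newOutputStream(zipFile);
             ZipOutputStream zos = new ZipOutputStream(out)) {
            for (Path file : files) {
                // Entry names are relative to srcDir and always use '/' as the separator
                String name = srcDir.relativize(file).toString().replace('\\', '/');
                zos.putNextEntry(new ZipEntry(name));
                Files.copy(file, zos);
                zos.closeEntry();
            }
        }
        return files.size();
    }
}
```

With relative entry names, an archive of the info folder contains 0.jpg, 1.jpg and so on at its top level, so the receiving side decides which directory they are unpacked into.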

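3. FaceDetectionActivity

- The activity layout contains only a SurfaceView, which displays the camera frames.

- Declare several native methods: initialize OpenCV, set the surface, process camera frames, collect samples, and release resources.

- Set up the camera.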

public class FaceDetectionActivity extends AppCompatActivity implements
        SurfaceHolder.Callback, Camera.PreviewCallback {

    static {
        // Load the JNI library built by CMakeLists.txt (add_library(native-lib ...))
        System.loadLibrary("native-lib");
    }

    private int cameraId = Camera.CameraInfo.CAMERA_FACING_FRONT;
    private CameraHelper mCameraHelper;
    private boolean isCollect;
    // File name of the cascade model passed to init()
    private String modelName;

    /**
     * Initialize OpenCV.
     * @param model path of the trained face model
     */
    native void init(String model);

    /**
     * Set the drawing surface.
     * @param surface the surface to draw on
     */
    native void setSurface(Surface surface);

    /**
     * Process a camera frame.
     * @param data     frame data
     * @param width    frame width
     * @param height   frame height
     * @param cameraId camera id, distinguishes the front and back cameras
     */
    native void postData(byte[] data, int width, int height, int cameraId);

    // Collect face samples from a camera frame
    native void faceCollect(byte[] data, int width, int height, int cameraId);

    /**
     * Release the tracker.
     */
    native void release();

    @Override
    protected void onCreate(@Nullable Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_face_detection);
        Intent intent = getIntent();
        isCollect = intent.getBooleanExtra("isCollect", false);
        SurfaceView surfaceView = findViewById(R.id.surface_view);
        // Listen for SurfaceView lifecycle events
        surfaceView.getHolder().addCallback(this);
        mCameraHelper = new CameraHelper(cameraId);
        mCameraHelper.setPreviewCallback(this);
        // Copy the cascade model from assets to local storage.
        // When collecting samples, use the face model bundled with OpenCV;
        // otherwise use our own trained model. Here it was placed in assets after
        // training, but it could also be downloaded from the backend.
        modelName = isCollect ? "lbpcascade_frontalface.xml" : "cascade.xml";
        Utils.copyAssets(this, modelName);
    }

    @Override
    protected void onResume() {
        super.onResume();
        // Initialize OpenCV with the model chosen in onCreate
        init(Environment.getExternalStorageDirectory() +
                File.separator + modelName);
        mCameraHelper.startPreview();
    }

    @Override
    protected void onStop() {
        super.onStop();
        // Release the tracker
        release();
        mCameraHelper.stopPreview();
    }

    /**
     * Called when the SurfaceView is created.
     */
    @Override
    public void surfaceCreated(SurfaceHolder holder) {}

    /**
     * Called when the SurfaceView's format or size changes.
     */
    @Override
    public void surfaceChanged(SurfaceHolder holder, int format, int width, int height) {
        setSurface(holder.getSurface());
    }

    /**
     * Called when the SurfaceView is destroyed.
     */
    @Override
    public void surfaceDestroyed(SurfaceHolder holder) {}

    /**
     * Frame callback forwarded by CameraHelper.
     */
    @Override
    public void onPreviewFrame(byte[] data, Camera camera) {
        if (isCollect) {
            faceCollect(data, CameraHelper.WIDTH, CameraHelper.HEIGHT, cameraId);
        } else {
            postData(data, CameraHelper.WIDTH, CameraHelper.HEIGHT, cameraId);
        }
    }

    /**
     * Tap the screen to switch cameras.
     */
    @Override
    public boolean onTouchEvent(MotionEvent event) {
        if (event.getAction() == MotionEvent.ACTION_UP) {
            mCameraHelper.switchCamera();
            cameraId = mCameraHelper.getCameraId();
        }
        return super.onTouchEvent(event);
    }

    /**
     * When the back button is pressed, the collected samples could be zipped
     * and uploaded to the server directly (currently disabled).
     */
    @Override
    public boolean onKeyDown(int keyCode, KeyEvent event) {
        /*
        if (keyCode == KeyEvent.KEYCODE_BACK) {
            if (isCollect) {
                // On leaving the collection screen, upload the collected info images.
                // First zip the collected images.
                new Thread(new Runnable() {
                    @Override
                    public void run() {
                        long startTime = System.currentTimeMillis();
                        try {
                            HttpAssist.ZipFolder(
                                    Environment.getExternalStorageDirectory() + File.separator + "info",
                                    Environment.getExternalStorageDirectory() + File.separator + "info.zip");
                        } catch (Exception e) {
                            e.printStackTrace();
                        }
                        long zipTime = System.currentTimeMillis();
                        Log.e("Zip time", "zipTime=" + (zipTime - startTime));
                        File file = new File(Environment.getExternalStorageDirectory() + File.separator + "info.zip");
                        if (!file.exists()) {
                            return;
                        }
                        HttpAssist.uploadFile(file);
                        Log.e("Upload time", "uploadFile=" + (System.currentTimeMillis() - zipTime));
                    }
                }).start();
            }
        }
        */
        return super.onKeyDown(keyCode, event);
    }
}
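Utils.copyAssets is also not shown in this post. The core of such a helper is copying an InputStream to a file. The sketch below shows only that part in plain Java. On Android the stream would presumably come from the AssetManager (for example context.getAssets().open(name)), and the class and method names here are hypothetical.

```java
import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;

public final class StreamCopy {
    private StreamCopy() {}

    /** Copies all bytes from in to dest, creating parent directories; returns the byte count. */
    public static long copyToFile(InputStream in, File dest) throws IOException {
        File parent = dest.getParentFile();
        if (parent != null && !parent.exists() && !parent.mkdirs()) {
            throw new IOException("Cannot create directory " + parent);
        }
        long total = 0;
        byte[] buf = new byte[8192];
        try (OutputStream out = new FileOutputStream(dest)) {
            int len;
            while ((len = in.read(buf)) != -1) {
                out.write(buf, 0, len);
                total += len;
            }
        }
        return total;
    }
}
```

The native CascadeClassifier loads the model from a file path, which is why the XML has to be copied out of the APK's assets before init is called.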

4. Native method implementation

#include <jni.h>
#include <algorithm>
#include <cstdio>
#include <cstring>
#include <string>
#include <vector>
#include <opencv2/opencv.hpp>
#include <android/native_window_jni.h>
#include <android/log.h>

using namespace cv;

ANativeWindow *window = nullptr;

class CascadeDetectorAdapter : public DetectionBasedTracker::IDetector {
public:
    CascadeDetectorAdapter(cv::Ptr<cv::CascadeClassifier> detector) :
            IDetector(),
            Detector(detector) {
        CV_Assert(detector);
    }

    void detect(const cv::Mat &Image, std::vector<cv::Rect> &objects) {
        Detector->detectMultiScale(Image, objects, scaleFactor,
                                   minNeighbours, 0, minObjSize, maxObjSize);
    }

    virtual ~CascadeDetectorAdapter() {
    }

private:
    CascadeDetectorAdapter();

    cv::Ptr<cv::CascadeClassifier> Detector;
};

DetectionBasedTracker *tracker = nullptr;
// Running index used to name the collected sample images
int sampleIndex = 0;

// Wrap an NV21 frame in a Mat, convert it to RGBA and rotate it upright.
static Mat nv21ToUprightRgba(jbyte *data, int width, int height, int cameraId) {
    // The frames arrive as NV21, while OpenCV works on Mat, so wrap the data
    // in a Mat: rows = height * 3 / 2 (NV21 layout), cols = width
    Mat src(height + height / 2, width, CV_8UC1, data);
    // Convert the NV21 YUV data to RGBA
    cvtColor(src, src, COLOR_YUV2RGBA_NV21);
    if (cameraId == 1) {
        // Front camera: rotate 90 degrees counterclockwise
        rotate(src, src, ROTATE_90_COUNTERCLOCKWISE);
        // Mirror horizontally (1 = horizontal flip, 0 = vertical flip)
        flip(src, src, 1);
    } else {
        // Back camera: rotate 90 degrees clockwise
        rotate(src, src, ROTATE_90_CLOCKWISE);
    }
    return src;
}

// Copy an RGBA Mat into the native window and post it to the screen.
static void renderToWindow(const Mat &rgba) {
    if (!window) {
        return;
    }
    // Set the window buffer geometry. The image was rotated, so width and height
    // are swapped; using cols and rows means we need not care about the rotation.
    ANativeWindow_setBuffersGeometry(window, rgba.cols, rgba.rows, WINDOW_FORMAT_RGBA_8888);
    ANativeWindow_Buffer buffer;
    // If the lock fails, release the window and give up on this frame
    if (ANativeWindow_lock(window, &buffer, 0)) {
        ANativeWindow_release(window);
        window = nullptr;
        return;
    }
    // rgba.data holds the RGBA pixels; copy them into buffer.bits row by row
    uint8_t *dst_data = static_cast<uint8_t *>(buffer.bits);
    // stride is the number of pixels per buffer row; each RGBA pixel is 4 bytes
    int dst_line_size = buffer.stride * 4;
    int src_line_size = rgba.cols * 4;
    // Never copy more rows or bytes than either side holds
    int rows = std::min(buffer.height, rgba.rows);
    int line_size = std::min(dst_line_size, src_line_size);
    for (int i = 0; i < rows; ++i) {
        // memcpy(dest, src, n) copies n bytes from src to dest
        memcpy(dst_data + i * dst_line_size, rgba.ptr<uint8_t>(i), line_size);
    }
    // Submit the buffer for display
    ANativeWindow_unlockAndPost(window);
}

extern "C"
JNIEXPORT void JNICALL
Java_com_wwp_open_1cv_1demo_FaceDetectionActivity_init(JNIEnv *env, jobject instance, jstring model_) {
    const char *model = env->GetStringUTFChars(model_, 0);
    if (tracker) {
        tracker->stop();
        delete tracker;
        tracker = nullptr;
    }
    // Smart pointer to the classifier used for detection
    Ptr<CascadeClassifier> classifier = makePtr<CascadeClassifier>(model);
    // Adapter used as the main detector
    Ptr<CascadeDetectorAdapter> mainDetector = makePtr<CascadeDetectorAdapter>(classifier);
    Ptr<CascadeClassifier> classifier1 = makePtr<CascadeClassifier>(model);
    // Adapter used as the tracking detector
    Ptr<CascadeDetectorAdapter> trackingDetector = makePtr<CascadeDetectorAdapter>(classifier1);
    // The tracker that the other functions use
    DetectionBasedTracker::Parameters DetectorParams;
    tracker = new DetectionBasedTracker(mainDetector, trackingDetector, DetectorParams);
    // Start the tracker
    tracker->run();
    env->ReleaseStringUTFChars(model_, model);
}

extern "C"
JNIEXPORT void JNICALL
Java_com_wwp_open_1cv_1demo_FaceDetectionActivity_release(JNIEnv *env, jobject instance) {
    if (tracker) {
        tracker->stop();
        delete tracker;
        tracker = nullptr;
        sampleIndex = 0;
    }
}

extern "C"
JNIEXPORT void JNICALL
Java_com_wwp_open_1cv_1demo_FaceDetectionActivity_setSurface(JNIEnv *env, jobject instance,
        jobject surface) {
    if (window) {
        ANativeWindow_release(window);
        window = nullptr;
    }
    window = ANativeWindow_fromSurface(env, surface);
}

extern "C"
JNIEXPORT void JNICALL
Java_com_wwp_open_1cv_1demo_FaceDetectionActivity_postData(JNIEnv *env, jobject instance,
        jbyteArray data_, jint width, jint height, jint cameraId) {
    if (!tracker) {
        return;
    }
    jbyte *data = env->GetByteArrayElements(data_, NULL);
    Mat src = nv21ToUprightRgba(data, width, height, cameraId);
    Mat gray;
    // Grayscale
    cvtColor(src, gray, COLOR_RGBA2GRAY);
    // Boost contrast (histogram equalization)
    equalizeHist(gray, gray);
    std::vector<Rect> faces;
    // Locate the faces (there may be several)
    tracker->process(gray);
    tracker->getObjects(faces);
    for (const Rect &face : faces) {
        // Draw a rectangle; src is RGBA, so the Scalar is (R, G, B, A)
        rectangle(src, face, Scalar(255, 0, 255, 255));
    }
    // Display
    renderToWindow(src);
    // Release the Mats (they use reference counting internally)
    src.release();
    gray.release();
    // The Java array was not modified, so nothing needs to be copied back
    env->ReleaseByteArrayElements(data_, data, JNI_ABORT);
}

extern "C"
JNIEXPORT void JNICALL
Java_com_wwp_open_1cv_1demo_FaceDetectionActivity_faceCollect(JNIEnv *env, jobject instance,
        jbyteArray data_, jint width, jint height, jint cameraId) {
    if (!tracker) {
        return;
    }
    jbyte *data = env->GetByteArrayElements(data_, NULL);
    Mat src = nv21ToUprightRgba(data, width, height, cameraId);
    Mat gray;
    cvtColor(src, gray, COLOR_RGBA2GRAY);
    equalizeHist(gray, gray);
    std::vector<Rect> faces;
    tracker->process(gray);
    tracker->getObjects(faces);
    const Rect bounds(0, 0, src.cols, src.rows);
    for (const Rect &face : faces) {
        // Clip the rectangle to the image before cropping
        Rect roi = face & bounds;
        if (roi.area() > 0) {
            // Save the cropped face to the sdcard
            Mat m;
            // Copy the face region of the image into m
            src(roi).copyTo(m);
            // Resize the face to a 24x24 image
            resize(m, m, Size(24, 24));
            // Convert to grayscale (src is RGBA)
            cvtColor(m, m, COLOR_RGBA2GRAY);
            char p[100];
            // imwrite does not create directories, so /sdcard/info must already exist
            snprintf(p, sizeof(p), "/sdcard/info/%d.jpg", sampleIndex++);
            // Write the Mat out as a jpg file; the number of samples could be capped here.
            // If the app exits in the middle of a write, the file is lost.
            imwrite(p, m);
        }
        // Draw the rectangle after cropping so it does not end up in the sample
        rectangle(src, face, Scalar(255, 0, 255, 255));
    }
    renderToWindow(src);
    src.release();
    gray.release();
    env->ReleaseByteArrayElements(data_, data, JNI_ABORT);
}
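The frame is copied into the window buffer one row at a time because the buffer's stride (the allocated row length in pixels) can be larger than the visible width, so a source row and a destination row may differ in byte length. The same idea in plain Java, as a sketch with hypothetical names that copies a tightly packed RGBA image into a padded destination:

```java
public final class StridedCopy {
    private StridedCopy() {}

    /**
     * Copies a tightly packed RGBA image (srcWidth x srcHeight) into a destination
     * whose rows are dstStride pixels long, clamping to whichever side is smaller.
     */
    public static void copyRows(byte[] src, int srcWidth, int srcHeight,
                                byte[] dst, int dstStride, int dstHeight) {
        final int bytesPerPixel = 4;
        int srcRowBytes = srcWidth * bytesPerPixel;
        int dstRowBytes = dstStride * bytesPerPixel;
        int rows = Math.min(srcHeight, dstHeight);
        int rowBytes = Math.min(srcRowBytes, dstRowBytes);
        for (int y = 0; y < rows; y++) {
            // Each row starts at its own offset on both sides
            System.arraycopy(src, y * srcRowBytes, dst, y * dstRowBytes, rowBytes);
        }
    }
}
```

Copying a 2x2 image into a buffer with a stride of 3 pixels leaves the last 4 bytes of every destination row untouched, which is exactly the padding the stride describes.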

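IV. Training samples on the backend

- The backend is written in Java. It mainly drives the opencv_createsamples.exe and opencv_traincascade.exe tools that ship with OpenCV from the command line to train on the samples.

- The backend receives the positive samples collected by the app, unzips them, builds the .vec sample file, and runs the training.

- Training needs both positive and negative samples; see the official documentation for the rules.

- Chinese-language documentation: http://www.opencv.org.cn/opencvdoc/2.3.2/html/doc/user_guide/ug_traincascade.html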
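opencv_createsamples reads the positive samples from a description file in which each line gives an image path, the number of objects in that image, and an x y width height box for each object. Since the app saves 24x24 face crops, every image holds exactly one object covering the whole picture. Here is a sketch of building those lines. The class and method names are hypothetical, and filtering by file extension is my own addition.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

public final class PositiveSampleList {
    private PositiveSampleList() {}

    /** One description line: path, object count 1, and a box covering the whole image. */
    public static String line(String relativePath, int width, int height) {
        return relativePath + " 1 0 0 " + width + " " + height;
    }

    /** Builds one line per .jpg file name, with paths relative to the description file. */
    public static List<String> lines(String dir, List<String> fileNames, int width, int height) {
        List<String> out = new ArrayList<>();
        for (String name : fileNames) {
            // Skip anything that is not a sample image
            if (name.toLowerCase(Locale.ROOT).endsWith(".jpg")) {
                out.add(line(dir + "/" + name, width, height));
            }
        }
        return out;
    }
}
```

For a file named 0.jpg in the info folder this produces the line "info/0.jpg 1 0 0 24 24".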

// File upload endpoint
@RequestMapping(value = "/upLoadingFile", method = RequestMethod.POST)
public void upLoadingFile(MultipartFile file, HttpServletRequest request,
                          HttpServletResponse response) {
    String savePath = "D:\\training\\img\\";
    try {
        System.out.println("File name = " + file.getOriginalFilename());
        file.transferTo(new File(savePath + file.getOriginalFilename()));
        // Unzip the archive
        unZip(new File(savePath + file.getOriginalFilename()), savePath + "info");
        // Write the positive-sample description file
        writeFileName();
        // Build the .vec file from the positive samples
        createVec();
        // Train the cascade
        createTrain();
    } catch (IOException e) {
        e.printStackTrace();
    }
}

/**
 * Download endpoint for the trained model.
 * The model could also be returned to the client directly once training finishes.
 */
@RequestMapping("/file")
@ResponseBody
public void file(HttpServletRequest request, HttpServletResponse response) {
    String path1 = "D:\\training\\img\\data\\cascade.xml";
    File imageFile = new File(path1);
    if (!imageFile.exists()) {
        return;
    }
    // File name the download will carry
    response.setHeader("Content-Disposition", "attachment;filename=cascade.xml");
    // Content type of the download: binary
    response.setContentType("application/octet-stream");
    try (FileInputStream fis = new FileInputStream(imageFile);
         ServletOutputStream sos = response.getOutputStream()) {
        // Stream the file in chunks; available() is not a reliable file size
        byte[] buf = new byte[8192];
        int len;
        while ((len = fis.read(buf)) != -1) {
            sos.write(buf, 0, len);
        }
        sos.flush();
    } catch (Exception e) {
        e.printStackTrace();
    }
}

/**
 * Write the positive-sample description file used for training.
 */
private void writeFileName() {
    String path = "D:\\training\\img\\info";
    File file = new File(path);
    String[] list = file.list();
    if (list == null) {
        return;
    }
    try (FileOutputStream fileOutputStream =
                 new FileOutputStream(new File("D:\\training\\img\\info.txt"))) {
        for (int i = 0; i < list.length; i++) {
            // Format: <path> <object count> <x> <y> <width> <height>
            String line = "info/" + list[i] + " 1 0 0 24 24";
            // No trailing newline after the last entry
            if (i < list.length - 1) {
                line += "\n";
            }
            fileOutputStream.write(line.getBytes());
        }
    } catch (Exception e) {
        e.printStackTrace();
    }
}

/**
 * Build the positive-sample .vec file.
 */
public void createVec() {
    // Path of the executable followed by its arguments
    String cmd = "E:\\tools\\opencv\\build\\x64\\vc15\\bin\\opencv_createsamples.exe "
            + "-info info.txt -vec wwp.vec -num 100 -w 24 -h 24";
    String path = "D:\\training\\img";
    runCommand(cmd, path);
}

/**
 * Train the cascade.
 */
public void createTrain() {
    // Path of the executable followed by its arguments; see the OpenCV documentation
    String cmd = "E:\\tools\\opencv\\build\\x64\\vc15\\bin\\opencv_traincascade.exe " +
            "-data data -vec wwp.vec -bg bg.txt -numPos 100 -numNeg 300 -numStages 15 -featureType LBP -w 24 -h 24";
    String path = "D:\\training\\img";
    runCommand(cmd, path);
}

// Run a command line in the given working directory and print its output
private void runCommand(String cmd, String path) {
    try {
        File dir = new File(path); // working directory
        System.out.println(cmd);
        Process process = new ProcessBuilder(cmd.split(" "))
                .directory(dir)
                // Merge stderr into stdout so an unread stream cannot block the tool
                .redirectErrorStream(true)
                .start();
        // Must be closed: the tools read nothing from stdin
        process.getOutputStream().close();
        // Collect everything the command prints
        StringBuilder resStr = new StringBuilder();
        try (BufferedReader bReader =
                     new BufferedReader(new InputStreamReader(process.getInputStream()))) {
            for (String res; (res = bReader.readLine()) != null; ) {
                resStr.append(res).append("\n");
            }
        }
        System.out.println(resStr);
        // Wait for the tool to exit before the next step starts
        process.waitFor();
    } catch (IOException e) {
        e.printStackTrace();
    } catch (InterruptedException e) {
        Thread.currentThread().interrupt();
    }
}

/**
 * Unzip an archive.
 *
 * @param srcFile     the zip source file
 * @param destDirPath the target directory
 * @throws RuntimeException if unzipping fails
 */
public void unZip(File srcFile, String destDirPath) throws RuntimeException {
    long start = System.currentTimeMillis();
    // Check that the source file exists
    if (!srcFile.exists()) {
        throw new RuntimeException(srcFile.getPath() + ": file does not exist");
    }
    // Start unzipping
    try (ZipFile zipFile = new ZipFile(srcFile)) {
        Enumeration<? extends ZipEntry> entries = zipFile.entries();
        while (entries.hasMoreElements()) {
            ZipEntry entry = entries.nextElement();
            System.out.println("Unzipping " + entry.getName());
            File target = new File(destDirPath + "/" + entry.getName());
            if (entry.isDirectory()) {
                // Directory entry: just create the directory
                target.mkdirs();
                continue;
            }
            // File entry: make sure the parent directory exists, then copy the contents
            if (!target.getParentFile().exists()) {
                target.getParentFile().mkdirs();
            }
            try (InputStream is = zipFile.getInputStream(entry);
                 FileOutputStream fos = new FileOutputStream(target)) {
                int len;
                byte[] buf = new byte[1024];
                while ((len = is.read(buf)) != -1) {
                    fos.write(buf, 0, len);
                }
            }
        }
        long end = System.currentTimeMillis();
        System.out.println("Unzip finished in " + (end - start) + " ms");
    } catch (Exception e) {
        throw new RuntimeException("unzip error from ZipUtils", e);
    }
}
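Because the archive is uploaded by a client, an entry name such as ../evil.txt could point outside the target directory when it is joined to the destination path. A common guard is to compare canonical paths before writing anything. The sketch below shows one way to do it; the class and method names are hypothetical, and this is a hardening idea rather than something the backend above already does.

```java
import java.io.File;
import java.io.IOException;

public final class ZipEntryGuard {
    private ZipEntryGuard() {}

    /**
     * Resolves entryName against destDir and rejects any entry whose
     * canonical path ends up outside destDir.
     */
    public static File resolve(File destDir, String entryName) throws IOException {
        File target = new File(destDir, entryName);
        // The trailing separator keeps "/data/info-evil" from matching "/data/info"
        String destPath = destDir.getCanonicalPath() + File.separator;
        if (!target.getCanonicalPath().startsWith(destPath)) {
            throw new IOException("Entry escapes the target directory: " + entryName);
        }
        return target;
    }
}
```

Inside an unzip loop, the File returned by resolve would replace the path built by string concatenation, so a malicious entry fails before any file is created.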

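V. Summary

- This gives a simple way to detect a specific person's face. Later, a detected face could be taken as confirmation that the account owner is the one operating the device, and it could be bound to the account credentials to implement face-recognition login.

- However, because the sample set is skewed, the detection accuracy is low, and uploading the files takes rather long, so this is not suitable for production use.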