Python Computer Vision: Image Content Classification

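Hello everyone. I've been rather busy lately: in the past week I sat the last exam of my second major, finished my thesis defense, and then took the Junior Accountant exam. I really shouldn't have gone looking for the answers online after that exam; once I checked them I was crushed. Sigh!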

Enough of that. This time I'd like to walk you through image content classification with Python computer vision.

Contents

- 1. K-Nearest Neighbor Classification (KNN)

- 2. Using Dense SIFT as Image Features

- 3. Image Classification: Gesture Recognition

Speaking of classification, let's start with a simple and effective method: k-nearest neighbor (KNN) classification.

1. K-Nearest Neighbor Classification (KNN)

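One of the simplest and most widely used classification methods is KNN. It compares the object to be classified (for example, a feature vector) with every object in the training set whose class label is known, and lets the k nearest of them vote on which class the object is assigned to. See the code below: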

(1)

```python
# -*- coding: utf-8 -*-
from numpy.random import randn
import pickle
from pylab import *

# create sample data of 2D points
n = 200

# two normal distributions
class_1 = 0.6 * randn(n, 2)
class_2 = 1.2 * randn(n, 2) + array([5, 1])
labels = hstack((ones(n), -ones(n)))

# save with Pickle (binary mode 'wb' is required in Python 3).
# This writes the test file; to create the training file as well, swap which
# open() line is commented out and run again. Code (2) loads both files.
#with open('points_normal.pkl', 'wb') as f:
with open('points_normal_test.pkl', 'wb') as f:
    pickle.dump(class_1, f)
    pickle.dump(class_2, f)
    pickle.dump(labels, f)
print("save OK!")

# normal distribution and ring around it
class_1 = 0.6 * randn(n, 2)
r = 0.8 * randn(n, 1) + 10
angle = 2 * pi * randn(n, 1)
class_2 = hstack((r * cos(angle), r * sin(angle)))
labels = hstack((ones(n), -ones(n)))

# save with Pickle (same scheme: points_ring.pkl / points_ring_test.pkl)
#with open('points_ring.pkl', 'wb') as f:
with open('points_ring_test.pkl', 'wb') as f:
    pickle.dump(class_1, f)
    pickle.dump(class_2, f)
    pickle.dump(labels, f)
print("save OK!")
```

As you can see, code (1) generates the data to be classified and saves it. It produces two different kinds of data:

```python
class_1 = 0.6 * randn(n,2)
class_2 = 1.2 * randn(n,2) + array([5,1])
```

and

```python
class_1 = 0.6 * randn(n,2)
r = 0.8 * randn(n,1) + 10
angle = 2*pi * randn(n,1)
class_2 = hstack((r*cos(angle),r*sin(angle)))
```

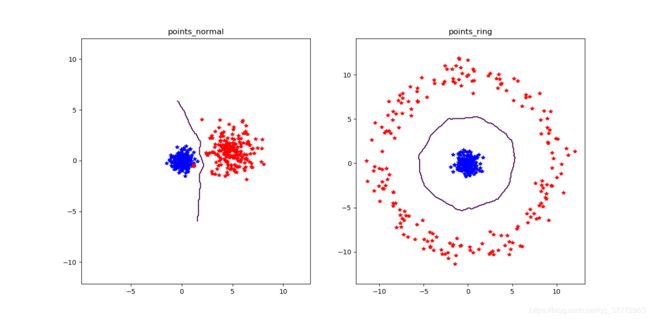

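The first dataset needs little explanation: the points fall roughly into two clusters, one on the left and one on the right. The second dataset is ring-shaped, like a planet surrounded by a ring.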
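If you want the data to be reproducible from run to run, the generation step can be sketched with NumPy's seeded `default_rng` instead of `pylab`. This is a minimal sketch, not the post's code: the function name `make_datasets` and the seed are hypothetical, and it draws the ring angle uniformly from [0, 2π) rather than with `2*pi*randn(n,1)`, a deliberate small change.

```python
import numpy as np

def make_datasets(n=200, seed=0):
    """Generate the two toy 2-D datasets described above (hypothetical helper).

    Returns a dict mapping a dataset name to (class_1, class_2, labels).
    Unlike code (1), the ring angle is drawn uniformly from [0, 2*pi).
    """
    rng = np.random.default_rng(seed)  # seeded so every run gives the same points
    labels = np.hstack((np.ones(n), -np.ones(n)))  # +1 for class_1, -1 for class_2

    # "normal": two Gaussian blobs, the second shifted to (5, 1)
    normal_1 = 0.6 * rng.standard_normal((n, 2))
    normal_2 = 1.2 * rng.standard_normal((n, 2)) + np.array([5, 1])

    # "ring": a Gaussian blob at the origin surrounded by a ring of radius ~10
    ring_1 = 0.6 * rng.standard_normal((n, 2))
    r = 0.8 * rng.standard_normal((n, 1)) + 10
    angle = rng.uniform(0, 2 * np.pi, size=(n, 1))
    ring_2 = np.hstack((r * np.cos(angle), r * np.sin(angle)))

    return {
        "points_normal": (normal_1, normal_2, labels),
        "points_ring": (ring_1, ring_2, labels.copy()),
    }

if __name__ == "__main__":
    data = make_datasets()
    c1, c2, lab = data["points_ring"]
    print(c1.shape, c2.shape, lab.shape)  # (200, 2) (200, 2) (400,)
    # the ring points should sit at an average distance close to 10 from the origin
    print(np.linalg.norm(c2, axis=1).mean())
```

Each tuple can then be pickled exactly as in code (1).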

(2)

```python
# -*- coding: utf-8 -*-
import pickle
from pylab import *
from PCV.classifiers import knn
from PCV.tools import imtools

pklist = ['points_normal.pkl', 'points_ring.pkl']

figure()

# load 2D points using Pickle
for i, pklfile in enumerate(pklist):
    print(pklfile[:-4])
    with open(pklfile, 'rb') as f:
        class_1 = pickle.load(f)
        class_2 = pickle.load(f)
        labels = pickle.load(f)
    # build the classifier from the TRAINING data, before it is overwritten
    model = knn.KnnClassifier(labels, vstack((class_1, class_2)))

    # load test data using Pickle
    with open(pklfile[:-4] + '_test.pkl', 'rb') as f:
        class_1 = pickle.load(f)
        class_2 = pickle.load(f)
        labels = pickle.load(f)

    # test on the first point
    print(model.classify(class_1[0]))

    # define function for plotting
    def classify(x, y, model=model):
        return array([model.classify([xx, yy]) for (xx, yy) in zip(x, y)])

    # plot the classification boundary
    subplot(1, 2, i + 1)
    imtools.plot_2D_boundary([-6, 6, -6, 6], [class_1, class_2], classify, [1, -1])
    titlename = pklfile[:-4]
    title(titlename)

show()
```

knn.py (imported above as `PCV.classifiers.knn`):

```python
from numpy import *


class KnnClassifier(object):

    def __init__(self, labels, samples):
        """ Initialize classifier with training data. """
        self.labels = labels
        self.samples = samples

    def classify(self, point, k=3):
        """ Classify a point against k nearest
            in the training data, return label. """
        # compute distance to all training points
        dist = array([L2dist(point, s) for s in self.samples])
        # sort them
        ndx = dist.argsort()
        # use dictionary to store the k nearest
        votes = {}
        for i in range(k):
            label = self.labels[ndx[i]]
            votes.setdefault(label, 0)
            votes[label] += 1
        return max(votes, key=lambda x: votes.get(x))


def L2dist(p1, p2):
    return sqrt(sum((p1 - p2) ** 2))


def L1dist(v1, v2):
    return sum(abs(v1 - v2))
```
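`KnnClassifier.classify` computes one distance per training sample in a Python loop. The same majority vote can be sketched with NumPy broadcasting, which computes all distances at once. The class name `VectorizedKnn` is hypothetical and not part of PCV; it keeps the `argsort` ordering and counts votes with `collections.Counter`, whose `most_common(1)` returns the first label to reach the top count in insertion (that is, distance) order, so ties should resolve the same way as the original `max` over the `votes` dict.

```python
import numpy as np
from collections import Counter


class VectorizedKnn:
    """Hypothetical vectorized variant of KnnClassifier (same k-NN majority vote)."""

    def __init__(self, labels, samples):
        self.labels = np.asarray(labels)
        self.samples = np.asarray(samples, dtype=float)

    def classify(self, point, k=3):
        point = np.asarray(point, dtype=float)
        # Euclidean (L2) distance from `point` to every training sample at once
        dist = np.sqrt(((self.samples - point) ** 2).sum(axis=1))
        # indices of the k nearest samples, closest first
        nearest = np.argsort(dist)[:k]
        # majority vote; on a tie, the label seen first (the closer one) wins
        votes = Counter(self.labels[nearest].tolist())
        return votes.most_common(1)[0][0]


if __name__ == "__main__":
    samples = np.array([[0, 0], [0, 1], [1, 0], [5, 5], [5, 6]])
    labels = np.array([1, 1, 1, -1, -1])
    knn = VectorizedKnn(labels, samples)
    print(knn.classify([0.2, 0.2]))  # 1
    print(knn.classify([4.8, 5.5]))  # -1 (two of the three nearest are -1)
```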

Code (2) loads the data generated above, classifies each dataset with KNN, and plots the resulting decision boundaries.
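The post does not list `imtools.plot_2D_boundary`. The idea behind such a plot can be sketched as: evaluate the classifier on a dense grid covering the plot range, then draw the contour where the predicted label switches. This is my own sketch, not PCV's implementation; `decision_grid` and `plot_boundary` are hypothetical names, and the sketch assumes labels of +1/-1 as produced by code (1).

```python
import numpy as np


def decision_grid(plotrange, classify, steps=100):
    """Evaluate classify(x, y) on a steps x steps grid over [xmin, xmax, ymin, ymax].

    `classify` takes two 1-D arrays and returns one label per point,
    like the helper defined in code (2).
    """
    xmin, xmax, ymin, ymax = plotrange
    xx, yy = np.meshgrid(np.linspace(xmin, xmax, steps), np.linspace(ymin, ymax, steps))
    zz = np.asarray(classify(xx.ravel(), yy.ravel())).reshape(xx.shape)
    return xx, yy, zz


def plot_boundary(plotrange, point_sets, classify, markers=("o", "*")):
    import matplotlib.pyplot as plt  # imported lazily; only needed for drawing

    xx, yy, zz = decision_grid(plotrange, classify)
    # with labels +1 / -1, the level-0 contour lies where the prediction flips
    plt.contour(xx, yy, zz, levels=[0])
    for points, marker in zip(point_sets, markers):
        plt.plot(points[:, 0], points[:, 1], marker)
    plt.axis(plotrange)
```

Inside the loop of code (2), `plot_boundary([-6, 6, -6, 6], [class_1, class_2], classify)` could stand in for the `imtools.plot_2D_boundary` call. Note that a 100×100 grid means 10,000 calls to the loop-based `classify`, so it takes a moment.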

2. Using Dense SIFT as Image Features

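When it comes to dense SIFT, the ordinary SIFT algorithm naturally comes to mind. So how are the two alike, and how do they differ?

(1) Similarity: both dense SIFT and SIFT produce feature vectors (descriptors) that describe an image.

(2) Difference: as the name suggests, dense SIFT produces far denser feature points. Standard SIFT first detects keypoints and computes descriptors only at those locations, while dense SIFT computes descriptors at every point of a regular grid at a fixed scale.

Why does the textbook use dense SIFT rather than SIFT for image classification? I haven't looked into this in depth, but the likely answer isn't hard to guess: dense SIFT yields denser feature points, which should help classification accuracy.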
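Besides density, one property of the regular grid matters for the gesture classifier in section 3: the number of descriptors depends only on the image size and the step, not on the image content as it does with keypoint-based SIFT. With the gesture images resized to 50×50, sampled every 5 pixels and `force_orientation` left at `False`, every image should yield the same number of 128-dimensional descriptors, so `d.flatten()` produces equal-length vectors that KNN can compare element by element. A small sketch of the arithmetic; the helper names are hypothetical, and the ranges mirror the `meshgrid` call in `dsift.py`.

```python
def num_grid_points(width, height, steps):
    """Number of dense-SIFT sample locations (hypothetical helper).

    Mirrors process_image_dsift: x and y start at `steps` and advance by
    `steps`, stopping before the image width/height.
    """
    return len(range(steps, width, steps)) * len(range(steps, height, steps))


def feature_length(width, height, steps, descriptor_dim=128):
    """Length of d.flatten(): one 128-D SIFT descriptor per grid point.

    Assumes force_orientation=False, i.e. exactly one descriptor per frame.
    """
    return num_grid_points(width, height, steps) * descriptor_dim


if __name__ == "__main__":
    # gesture images in section 3: resized to 50x50, sampled every 5 pixels
    print(num_grid_points(50, 50, 5))  # 81
    print(feature_length(50, 50, 5))   # 10368
    # a 60x50 image would give a vector of a different length
    print(feature_length(60, 50, 5))   # 12672
```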

Here is the code:

```python
# -*- coding: utf-8 -*-
from PCV.localdescriptors import sift, dsift
from pylab import *
from PIL import Image

dsift.process_image_dsift('gesture/火烧云.jpg', '火烧云.dsift', 90, 40, True)
#dsift('gesture/火烧云.jpg', '火烧云.dsift')
l, d = sift.read_features_from_file('火烧云.dsift')
im = array(Image.open('gesture/火烧云.jpg'))
sift.plot_features(im, l, True)
title('dense SIFT')
show()
```

dsift.py:

```python
from PIL import Image
from numpy import *
import os
from PCV.localdescriptors import sift


def process_image_dsift(imagename, resultname, size=20, steps=10,
                        force_orientation=False, resize=None):
    """ Process an image with densely sampled SIFT descriptors
        and save the results in a file. Optional input: size of features,
        steps between locations, forcing computation of descriptor orientation
        (False means all are oriented upwards), tuple for resizing the image."""

    im = Image.open(imagename).convert('L')
    if resize is not None:
        im = im.resize(resize)
    m, n = im.size  # PIL returns (width, height)

    if imagename[-3:] != 'pgm':
        # create a pgm file
        im.save('tmp.pgm')
        imagename = 'tmp.pgm'

    # create frames and save to temporary file
    # (one row per grid point: x, y, scale, angle)
    scale = size / 3.0
    x, y = meshgrid(range(steps, m, steps), range(steps, n, steps))
    xx, yy = x.flatten(), y.flatten()
    frame = array([xx, yy, scale * ones(xx.shape[0]), zeros(xx.shape[0])])
    savetxt('tmp.frame', frame.T, fmt='%03.3f')

    # Location of the vlfeat sift binary on the author's Windows setup.
    # dirname("__file__") is given a string literal, not __file__, so it returns ''
    # and the path resolves to the parent of the current working directory.
    # Adjust this to your own vlfeat installation.
    path = os.path.abspath(os.path.join(os.path.dirname("__file__"), os.path.pardir))
    path = path + "\\python3-ch08\\win32vlfeat\\sift.exe "

    if force_orientation:
        cmmd = str(path + imagename + " --output=" + resultname +
                   " --read-frames=tmp.frame --orientations")
    else:
        cmmd = str(path + imagename + " --output=" + resultname +
                   " --read-frames=tmp.frame")
    os.system(cmmd)
    print('processed', imagename, 'to', resultname)
```
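Building the command as one concatenated string for `os.system` breaks if any path contains spaces, and the exit status is ignored, so a failed `sift.exe` run goes unnoticed until the `.dsift` file is missing. A sketch of the same call passing an argument list to `subprocess.run`, assuming the same vlfeat `sift` flags used above (`--output`, `--read-frames`, `--orientations`); the helper names and the executable path in the usage lines are hypothetical.

```python
import subprocess


def build_sift_command(sift_exe, imagename, resultname, frame_file="tmp.frame",
                       force_orientation=False):
    """Argument list equivalent to the cmmd string in process_image_dsift."""
    cmd = [sift_exe, imagename, "--output=" + resultname, "--read-frames=" + frame_file]
    if force_orientation:
        cmd.append("--orientations")
    return cmd


def run_sift(cmd):
    # check=True raises CalledProcessError when sift.exe exits with an error,
    # instead of the exit status being ignored as with os.system
    subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # hypothetical location of the vlfeat binary; not executed here
    cmd = build_sift_command(r"C:\path\to\win32vlfeat\sift.exe", "tmp.pgm",
                             "火烧云.dsift", force_orientation=True)
    print(cmd)
```

Because each argument is passed separately, no shell quoting is needed for paths with spaces.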

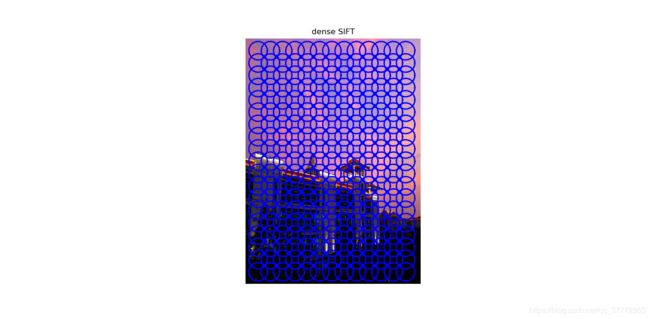

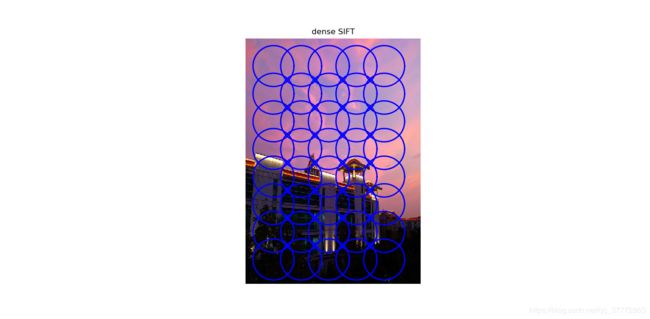

Results:

1) `dsift.process_image_dsift('gesture/火烧云.jpg','火烧云.dsift',90,40,True)`

2) `dsift.process_image_dsift('gesture/火烧云.jpg','火烧云.dsift',200,90,True)`

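Based on these two results, let me explain what the arguments of `dsift.process_image_dsift('gesture/火烧云.jpg','火烧云.dsift',200,90,True)` mean.

Unlike ordinary SIFT, dense SIFT does not detect keypoints; it lays a regular grid over the image and computes a descriptor at every grid point. In `process_image_dsift`, the third argument (`size`) sets the size of each descriptor (the frame scale written to `tmp.frame` is `size/3`), and the fourth (`steps`) is the spacing in pixels between neighbouring grid points. So (90,40) means descriptors of size 90 placed every 40 pixels, while (200,90) means larger descriptors of size 200 placed every 90 pixels, which is why the second plot has fewer, larger circles. The final `True` is `force_orientation`, which computes an orientation for each descriptor instead of orienting them all upwards.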
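To make the two parameters concrete, here is a sketch that rebuilds the frame table `process_image_dsift` writes to `tmp.frame` and counts the frames for both calls. The 640×480 image size is an assumption (the post does not give the size of `火烧云.jpg`), and `dense_frames` is a hypothetical helper.

```python
import numpy as np


def dense_frames(width, height, size, steps):
    """Rebuild the frame table that process_image_dsift writes to tmp.frame.

    One row per grid point: (x, y, scale, angle), with scale = size / 3
    and angle 0, as in the frame array built in dsift.py.
    """
    scale = size / 3.0
    x, y = np.meshgrid(range(steps, width, steps), range(steps, height, steps))
    xx, yy = x.flatten(), y.flatten()
    return np.column_stack([xx, yy, np.full(xx.shape, scale), np.zeros(xx.shape)])


if __name__ == "__main__":
    # hypothetical 640x480 image
    for size, steps in [(90, 40), (200, 90)]:
        frames = dense_frames(640, 480, size, steps)
        print(size, steps, len(frames), round(frames[0, 2], 2))
    # 90 40 165 30.0
    # 200 90 35 66.67
```

A larger `steps` thins out the grid (165 frames versus 35 here), while a larger `size` enlarges each frame's scale.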

3. Image Classification: Gesture Recognition

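Gesture recognition takes two steps: the first processes the training data, and the second evaluates the classifier on the test data.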

(1) Training data

```python
# -*- coding: utf-8 -*-
import os
from PCV.localdescriptors import sift, dsift
from pylab import *
from PIL import Image

imlist = ['gesture/train2/C-uniform01.jpg', 'gesture/train2/B-uniform01.jpg',
          'gesture/train2/A-uniform01.jpg', 'gesture/train2/Five-uniform01.jpg',
          'gesture/train2/Point-uniform01.jpg', 'gesture/train2/V-uniform01.jpg']

figure()
for i, im in enumerate(imlist):
    print(im)
    dsift.process_image_dsift(im, im[:-3] + 'dsift', 15, 6, True)
    l, d = sift.read_features_from_file(im[:-3] + 'dsift')
    dirpath, filename = os.path.split(im)
    im = array(Image.open(im))
    # show the gesture name as the subplot title
    titlename = filename[:-14]
    subplot(2, 3, i + 1)
    sift.plot_features(im, l, True)
    title(titlename)
show()
```
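`titlename = filename[:-14]` works only because every file name here ends in the 14-character suffix `-uniform01.jpg`. If other images use a different suffix, a sketch like the following extracts the gesture name without depending on the suffix length. The `gesture_name` helper is hypothetical and assumes the `<Gesture>-...` naming seen in this post.

```python
import os


def gesture_name(path):
    """Return the gesture name from a path like 'gesture/train2/Five-uniform01.jpg'.

    Assumes file names of the form '<Gesture>-<anything>.<ext>'.
    """
    filename = os.path.basename(path)  # 'Five-uniform01.jpg'
    return filename.split("-")[0]      # 'Five'


if __name__ == "__main__":
    print(gesture_name("gesture/train2/Five-uniform01.jpg"))  # Five
```

The classification code that follows uses only the first letter of each file name as the class label, which is enough here because the six gestures (A, B, C, Five, Point, V) all start with different letters.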

```python
# -*- coding: utf-8 -*-
import os
from pylab import *
from PCV.localdescriptors import sift, dsift
from PCV.classifiers import knn


def get_imagelist(path):
    """ Returns a list of filenames for
        all jpg images in a directory. """
    return [os.path.join(path, f) for f in os.listdir(path) if f.endswith('.jpg')]


def read_gesture_features_labels(path):
    # create list of all files ending in .dsift
    featlist = [os.path.join(path, f) for f in os.listdir(path) if f.endswith('.dsift')]
    # read the features; each image becomes one long flattened descriptor vector
    features = []
    for featfile in featlist:
        l, d = sift.read_features_from_file(featfile)
        features.append(d.flatten())
    features = array(features)
    # create labels: first letter of the file name, e.g. 'F' for Five-uniform01.dsift
    labels = [os.path.basename(featfile)[0] for featfile in featlist]
    return features, array(labels)


def print_confusion(res, labels, classnames):
    n = len(classnames)
    # confusion matrix: rows = predicted class, columns = true class
    class_ind = dict([(classnames[i], i) for i in range(n)])
    confuse = zeros((n, n))
    for i in range(len(labels)):
        confuse[class_ind[res[i]], class_ind[labels[i]]] += 1
    print('Confusion matrix for')
    print(classnames)
    print(confuse)


filelist_train = get_imagelist('gesture/train2')
filelist_test = get_imagelist('gesture/test2')
imlist = filelist_train + filelist_test

# process images at fixed size (50,50)
for filename in imlist:
    featfile = filename[:-3] + 'dsift'
    dsift.process_image_dsift(filename, featfile, 10, 5, resize=(50, 50))

features, labels = read_gesture_features_labels('gesture/train2/')
test_features, test_labels = read_gesture_features_labels('gesture/test2/')
classnames = unique(labels)

# test kNN
k = 1
knn_classifier = knn.KnnClassifier(labels, features)
res = array([knn_classifier.classify(test_features[i], k)
             for i in range(len(test_labels))])

# accuracy
acc = sum(1.0 * (res == test_labels)) / len(test_labels)
print('Accuracy:', acc)

print_confusion(res, test_labels, classnames)
```

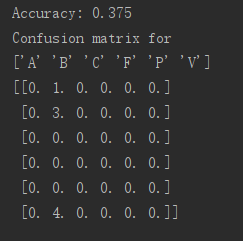

Test results:

As you can see, the accuracy is 1, which means every test image was classified correctly (100%).
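An accuracy of 1 means every count lands on the diagonal of the confusion matrix. A self-contained sketch of both numbers, using the same layout as `print_confusion` (rows = predicted class, columns = true class); the function name is hypothetical and the sample labels are made up for illustration, not taken from the experiment.

```python
import numpy as np


def accuracy_and_confusion(res, test_labels, classnames):
    """Return (accuracy, confusion matrix); rows = predicted, columns = true."""
    res = np.asarray(res)
    test_labels = np.asarray(test_labels)
    index = {name: i for i, name in enumerate(classnames)}
    confuse = np.zeros((len(classnames), len(classnames)), dtype=int)
    for predicted, true in zip(res, test_labels):
        confuse[index[predicted], index[true]] += 1
    acc = float(np.mean(res == test_labels))  # fraction of correct predictions
    return acc, confuse


if __name__ == "__main__":
    # made-up predictions: one 'B' is misclassified as 'C'
    acc, cm = accuracy_and_confusion(["A", "B", "C", "C"], ["A", "B", "B", "C"],
                                     ["A", "B", "C"])
    print(acc)  # 0.75
    print(cm)   # the off-diagonal 1 sits at row 'C', column 'B'
```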

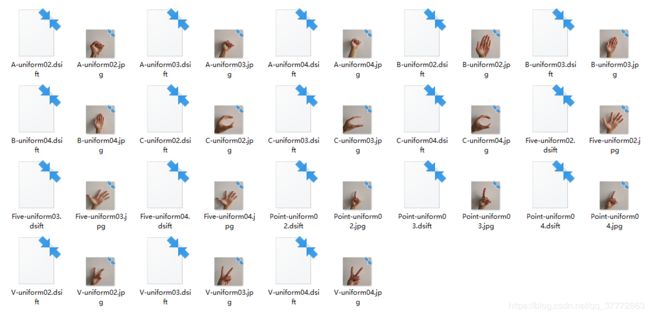

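Why is the accuracy so high? Let me first show you the test images I used:

As you can see, the test images are very clean and standard. So what happens to the classification results when the test images are "ugly"?

Here is the image set I tested next:

All of these images should, in principle, be classified as class "B". Let's look at the results:

The accuracy is 0.375, a large drop. This suggests that in gesture recognition, differences in the angle of the test gesture have a strong effect on the result.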

Thanks for reading!