ORB-SLAM2代码阅读笔记(六):Tracking线程4——Track函数中相机位姿估计

Table of Contents

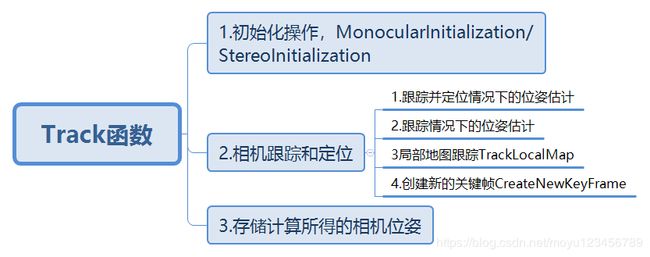

1.Track函数结构

2.相机位姿估计

1)Track函数代码分析

2)跟踪并定位情况下的位姿估计

(1)CheckReplacedInLastFrame函数

(2)TrackReferenceKeyFrame函数

(3)TrackWithMotionModel函数

3)跟踪情况下的位姿估计

4)局部地图跟踪函数TrackLocalMap分析

(1)更新局部地图:UpdateLocalMap()

(2)在局部地图中查找与当前帧匹配的地图点:SearchLocalPoints

(3)进行位姿优化:Optimizer::PoseOptimization

(4)判断局部地图是否跟踪成功

5)创建新的关键帧函数CreateNewKeyFrame分析

(1)NeedNewKeyFrame函数

(2)CreateNewKeyFrame函数

3.小结

1.Track函数结构

Track函数作为Tracking线程中最主要的函数,在 ORB-SLAM2代码阅读笔记(五):Tracking线程3——Track函数中单目相机初始化 中已经对单目初始化函数MonocularInitialization代码进行了分析。本篇笔记重点分析跟踪和定位部分的代码流程。

2.相机位姿估计

1)Track函数代码分析

/**

* 相当于Tracking线程中的主要方法

* track包含两部分:估计运动、跟踪局部地图

* tracking过程的状态机:

* SYSTEM_NOT_READY=-1,

* NO_IMAGES_YET=0,

* NOT_INITIALIZED=1,

* OK=2,

* LOST=3

* 如果图像复位过、或者第一次运行,则为NO_IMAGE_YET状态

*/

void Tracking::Track()

{

if(mState==NO_IMAGES_YET)

{

mState = NOT_INITIALIZED;

}

//mLastProcessedState存储了Tracking最新的状态,用于FrameDrawer中的绘制

mLastProcessedState=mState;

// Get Map Mutex -> Map cannot be changed

unique_lock lock(mpMap->mMutexMapUpdate);

//1.初始化:没有初始化的话会先进入初始化分支

if(mState==NOT_INITIALIZED)

{

if(mSensor==System::STEREO || mSensor==System::RGBD)

StereoInitialization();

else

//1.单目相机初始化

MonocularInitialization();

mpFrameDrawer->Update(this);

if(mState!=OK)

return;

}

else//2.跟踪估计相机位姿:初始化完成后会进入跟踪分支

{

// System is initialized. Track Frame.

bool bOK;

// Initial camera pose estimation using motion model or relocalization (if tracking is lost)

//使用运动模型或者重定位来初始化相机位姿

//在viewer中有个开关menuLocalizationMode,由它控制是否ActivateLocalizationMode,并最终管控mbOnlyTracking

//mbOnlyTracking等于false表示正常VO模式(有地图更新),mbOnlyTracking等于true表示用户手动选择定位模式

if(!mbOnlyTracking)

{

// Local Mapping is activated. This is the normal behaviour, unless

// you explicitly activate the "only tracking" mode.

//正常初始化成功

if(mState==OK)

{

// Local Mapping might have changed some MapPoints tracked in last frame

//检查并更新上一帧被替换的MapPoints,更新Fuse函数和SearchAndFuse函数替换的MapPoints

CheckReplacedInLastFrame();

//上一帧速度为0 || 当前帧ID与重定位帧ID之间相差大于2

//跟踪上一帧或者参考帧或者重定位

if(mVelocity.empty() || mCurrentFrame.mnId vpMPsMM;

vector vbOutMM;

cv::Mat TcwMM;

if(!mVelocity.empty())

{

bOKMM = TrackWithMotionModel();

vpMPsMM = mCurrentFrame.mvpMapPoints;

vbOutMM = mCurrentFrame.mvbOutlier;

TcwMM = mCurrentFrame.mTcw.clone();

}

bOKReloc = Relocalization();

if(bOKMM && !bOKReloc)

{

mCurrentFrame.SetPose(TcwMM);

mCurrentFrame.mvpMapPoints = vpMPsMM;

mCurrentFrame.mvbOutlier = vbOutMM;

if(mbVO)

{

for(int i =0; iIncreaseFound();

}

}

}

}

else if(bOKReloc)

{

//重定位成功的时候,就使用重定位的思路,所以标记mbVO要设置为false

mbVO = false;

}

bOK = bOKReloc || bOKMM;

}

}

}//else end(mbOnlyTracking==true)

//将最新的关键帧作为reference frame

mCurrentFrame.mpReferenceKF = mpReferenceKF;

// If we have an initial estimation of the camera pose and matching. Track the local map.

/**

* 在帧间匹配得到初始的姿态后,现在对local map进行跟踪得到更多的匹配,并优化当前位姿

* local map:当前帧、当前帧的MapPoints、当前关键帧与其它关键帧共视关系

* 在步骤2.1中主要是两两跟踪(恒速模型跟踪上一帧、跟踪参考帧),这里搜索局部关键帧后搜集所有局部MapPoints,

* 然后将局部MapPoints和当前帧进行投影匹配,得到更多匹配的MapPoints后进行Pose优化

*/

if(!mbOnlyTracking)

{

if(bOK)

bOK = TrackLocalMap();

}

else

{

// mbVO true means that there are few matches to MapPoints in the map. We cannot retrieve

// a local map and therefore we do not perform TrackLocalMap(). Once the system relocalizes

// the camera we will use the local map again.

// mbVO值为true表示有很少的匹配点和地图中的MapPoint相匹配,我们无法检索本地地图因此此时不调用TrackLocalMap函数。

// 一旦系统重新定位相机位置,我们将再次使用本地地图

if(bOK && !mbVO)

bOK = TrackLocalMap();//局部地图跟踪

}

if(bOK)

mState = OK;

else

mState=LOST;

// Update drawer

mpFrameDrawer->Update(this);

// If tracking were good, check if we insert a keyframe

if(bOK)

{

// Update motion model

if(!mLastFrame.mTcw.empty())

{

cv::Mat LastTwc = cv::Mat::eye(4,4,CV_32F);

mLastFrame.GetRotationInverse().copyTo(LastTwc.rowRange(0,3).colRange(0,3));

mLastFrame.GetCameraCenter().copyTo(LastTwc.rowRange(0,3).col(3));

mVelocity = mCurrentFrame.mTcw*LastTwc;

}

else

mVelocity = cv::Mat();

//地图中设置当前帧的相机位姿

mpMapDrawer->SetCurrentCameraPose(mCurrentFrame.mTcw);

// Clean VO matches

for(int i=0; iObservations()<1)

{

mCurrentFrame.mvbOutlier[i] = false;

mCurrentFrame.mvpMapPoints[i]=static_cast(NULL);

}

}

// Delete temporal MapPoints 删除暂时的MapPoint

for(list::iterator lit = mlpTemporalPoints.begin(), lend = mlpTemporalPoints.end(); lit!=lend; lit++)

{

MapPoint* pMP = *lit;

delete pMP;

}

mlpTemporalPoints.clear();

// Check if we need to insert a new keyframe

// 判断是否需要插入关键帧

if(NeedNewKeyFrame())

CreateNewKeyFrame();

// We allow points with high innovation (considererd outliers by the Huber Function)

// pass to the new keyframe, so that bundle adjustment will finally decide

// if they are outliers or not. We don't want next frame to estimate its position

// with those points so we discard them in the frame.

//抛弃无用的点

for(int i=0; i(NULL);

}

}

// Reset if the camera get lost soon after initialization

//如果初始化后不久相机丢失,则进行重置

if(mState==LOST)

{

if(mpMap->KeyFramesInMap()<=5)

{

cout << "Track lost soon after initialisation, reseting..." << endl;

mpSystem->Reset();

return;

}

}

if(!mCurrentFrame.mpReferenceKF)

mCurrentFrame.mpReferenceKF = mpReferenceKF;

//Tracking完成的时候,记录当前帧为上一帧

mLastFrame = Frame(mCurrentFrame);

}//跟踪end

// Store frame pose information to retrieve the complete camera trajectory afterwards.

//3:存储帧的位姿信息,稍后用来重现完整的相机运动轨迹

if(!mCurrentFrame.mTcw.empty())

{

cv::Mat Tcr = mCurrentFrame.mTcw*mCurrentFrame.mpReferenceKF->GetPoseInverse();

mlRelativeFramePoses.push_back(Tcr);

mlpReferences.push_back(mpReferenceKF);

mlFrameTimes.push_back(mCurrentFrame.mTimeStamp);

mlbLost.push_back(mState==LOST);

}

else

{

// This can happen if tracking is lost

mlRelativeFramePoses.push_back(mlRelativeFramePoses.back());

mlpReferences.push_back(mlpReferences.back());

mlFrameTimes.push_back(mlFrameTimes.back());

mlbLost.push_back(mState==LOST);

}

} 2)跟踪并定位情况下的位姿估计

Track函数中下面图片中所示代码为跟踪并同时定位的代码分支。

通过代码流程可以发现此时需要根据系统初始化是否成功(也就是mState==OK的判断)来进行相应处理。

系统初始化成功时,直接进行跟踪和定位就行。系统初始化失败的话,需要重新进行定位,也就是说要进行相机位姿的跟踪,初始的相机位姿和在地图中的位置必须是清楚的。

下面直接看这几个函数的代码:

(1)CheckReplacedInLastFrame函数

void Tracking::CheckReplacedInLastFrame()

{

//遍历关键点,获取其对应的MapPoint

for(int i =0; iGetReplaced();

if(pRep)

{

mLastFrame.mvpMapPoints[i] = pRep;

}

}

}

} (2)TrackReferenceKeyFrame函数

/**

* 函数功能:

* 1. 计算当前帧的词包,将当前帧的特征点分到特定层的nodes上

* 2. 对属于同一node的描述子进行匹配

* 3. 根据匹配对估计当前帧的姿态

* 4. 根据姿态剔除误匹配

* 具体步骤:

* 1. 按照关键帧进行Track的方法和运动模式恢复相机运动位姿的方法接近。首先求解当前帧的BOW向量。

* 2. 再搜索当前帧和关键帧之间的关键点匹配关系,如果这个匹配关系小于15对的话,就Track失败了。

* 3. 接着将当前帧的位置假定到上一帧的位置那里

* 4. 并通过最小二乘法优化相机的位姿。

* 5. 最后依然是抛弃无用的杂点,当match数大于等于10的时候,返回true成功。

*/

bool Tracking::TrackReferenceKeyFrame()

{

// Compute Bag of Words vector

//步骤1:将当前帧的描述子转化为BoW向量

mCurrentFrame.ComputeBoW();

// We perform first an ORB matching with the reference keyframe

// If enough matches are found we setup a PnP solver

//构建ORBmatcher

ORBmatcher matcher(0.7,true);

vector vpMapPointMatches;

//步骤2:通过特征点的BoW加快当前帧与参考帧之间的特征点匹配,对上一个关键帧进行BOW搜索匹配点

//特征点的匹配关系由MapPoints进行维护.

/**

* vpMapPointMatches中存储的是当前帧特征点的index,值为mpReferenceKF参考帧中对应的MapPoint

*/

int nmatches = matcher.SearchByBoW(mpReferenceKF,mCurrentFrame,vpMapPointMatches);

//匹配数小于15,返回false

if(nmatches<15)

return false;

//步骤3:将上一帧的位姿态作为当前帧位姿的初始值

mCurrentFrame.mvpMapPoints = vpMapPointMatches;

//用上一次的Tcw设置位姿初值,在PoseOptimization可以收敛快一些

mCurrentFrame.SetPose(mLastFrame.mTcw);

//步骤4:通过优化3D-2D的重投影误差来获得位姿。通过PoseOptimization优化相机的位姿

Optimizer::PoseOptimization(&mCurrentFrame);

// Discard outliers

//抛弃杂点.步骤5:剔除优化后的outlier匹配点(MapPoints)

int nmatchesMap = 0;

for(int i =0; i(NULL);

mCurrentFrame.mvbOutlier[i]=false;

//表示这个MapPoint不在可视化窗口中跟踪

pMP->mbTrackInView = false;

pMP->mnLastFrameSeen = mCurrentFrame.mnId;

nmatches--;

}

else if(mCurrentFrame.mvpMapPoints[i]->Observations()>0)

nmatchesMap++;

}

}

//若被观察的点数大于10返回true

return nmatchesMap>=10;

} 该函数中调用的ORBmatcher::SearchByBoW(KeyFrame* pKF,Frame &F, vector

/**

* pKF 为参考关键帧

* F 为当前帧

* vpMapPointMatches 作为出参,存储匹配的MapPoint点

* 该函数作用也就是吧pKF中的MapPoint和F中按照描述子距离最小进行匹配

*/

int ORBmatcher::SearchByBoW(KeyFrame* pKF,Frame &F, vector &vpMapPointMatches)

{

//获得参考关键帧的MapPoint列表

const vector vpMapPointsKF = pKF->GetMapPointMatches();

vpMapPointMatches = vector(F.N,static_cast(NULL));

const DBoW2::FeatureVector &vFeatVecKF = pKF->mFeatVec;

int nmatches=0;

vector rotHist[HISTO_LENGTH];

for(int i=0;ifirst == Fit->first)

{

const vector vIndicesKF = KFit->second;

const vector vIndicesF = Fit->second;

//遍历参考关键帧中对应的MapPoint和对应描述子

for(size_t iKF=0; iKFisBad())

continue;

//获取参考关键帧中的realIdxKF这个index对应的描述子

const cv::Mat &dKF= pKF->mDescriptors.row(realIdxKF);

int bestDist1=256;

int bestIdxF =-1 ;

int bestDist2=256;

//遍历计算最小距离和次最小距离

for(size_t iF=0; iF(bestDist1)(bestDist2))

{

//pMP为传入的参考关键帧里边的MapPoint,bestIdxF为当前帧中和pMP描述子最小的特征点的index

vpMapPointMatches[bestIdxF]=pMP;

const cv::KeyPoint &kp = pKF->mvKeysUn[realIdxKF];

//方向检查

if(mbCheckOrientation)

{

float rot = kp.angle-F.mvKeys[bestIdxF].angle;

if(rot<0.0)

rot+=360.0f;

int bin = round(rot*factor);

if(bin==HISTO_LENGTH)

bin=0;

assert(bin>=0 && binfirst < Fit->first)

{

KFit = vFeatVecKF.lower_bound(Fit->first);

}

else

{

Fit = F.mFeatVec.lower_bound(KFit->first);

}

}

if(mbCheckOrientation)

{

int ind1=-1;

int ind2=-1;

int ind3=-1;

ComputeThreeMaxima(rotHist,HISTO_LENGTH,ind1,ind2,ind3);

for(int i=0; i(NULL);

nmatches--;

}

}

}

return nmatches;

} 调用的位姿优化函数代码如下。优化作为SLAM中的核心,后边写一篇笔记整理一下自己的学习心得。

/**

* 这里仅优化帧的位姿,不优化地图点的坐标。(之后会剔除优化后的外点,这个操作不再本函数中做)

* (3D-2D)将地图点投影到相机坐标系下的相机平面

*/

int Optimizer::PoseOptimization(Frame *pFrame)

{

g2o::SparseOptimizer optimizer;

g2o::BlockSolver_6_3::LinearSolverType * linearSolver;

linearSolver = new g2o::LinearSolverDense();

g2o::BlockSolver_6_3 * solver_ptr = new g2o::BlockSolver_6_3(linearSolver);

g2o::OptimizationAlgorithmLevenberg* solver = new g2o::OptimizationAlgorithmLevenberg(solver_ptr);

optimizer.setAlgorithm(solver);

int nInitialCorrespondences=0;

// Set Frame vertex 设置帧结点

//VertexSE3Expmap为李代数位姿

g2o::VertexSE3Expmap * vSE3 = new g2o::VertexSE3Expmap();

//当前帧的变换矩阵

vSE3->setEstimate(Converter::toSE3Quat(pFrame->mTcw));

vSE3->setId(0);

vSE3->setFixed(false);

optimizer.addVertex(vSE3);

// Set MapPoint vertices 设置地图点结点

const int N = pFrame->N;

vector vpEdgesMono;

vector vnIndexEdgeMono;

vpEdgesMono.reserve(N);

vnIndexEdgeMono.reserve(N);

vector vpEdgesStereo;

vector vnIndexEdgeStereo;

vpEdgesStereo.reserve(N);

vnIndexEdgeStereo.reserve(N);

const float deltaMono = sqrt(5.991);

const float deltaStereo = sqrt(7.815);

{

unique_lock lock(MapPoint::mGlobalMutex);

//遍历帧中的MapPoint

for(int i=0; imvpMapPoints[i];

if(pMP)

{

// Monocular observation 单目

if(pFrame->mvuRight[i]<0)

{

nInitialCorrespondences++;

pFrame->mvbOutlier[i] = false;

Eigen::Matrix obs;

//获取关键点

const cv::KeyPoint &kpUn = pFrame->mvKeysUn[i];

//obs中输入关键点的x,y坐标

obs << kpUn.pt.x, kpUn.pt.y;

/**

* EdgeSE3ProjectXYZOnlyPose():PoseEstimation中的重投影误差,将地图点投影到相机坐标系下的相机平面。

*/

//构造pose一元边

g2o::EdgeSE3ProjectXYZOnlyPose* e = new g2o::EdgeSE3ProjectXYZOnlyPose();

//这里只设置一个顶点

//optimizer.vertex(0)返回的就是上边的vSE3结点,也就是当前帧的Tcw

e->setVertex(0, dynamic_cast(optimizer.vertex(0)));

//设置观测数值,obs中为帧中的关键点坐标,也就是三维坐标中物体投影到相机平面的坐标

e->setMeasurement(obs);

//获取关键点所在层的sigma2值

const float invSigma2 = pFrame->mvInvLevelSigma2[kpUn.octave];

e->setInformation(Eigen::Matrix2d::Identity()*invSigma2);

g2o::RobustKernelHuber* rk = new g2o::RobustKernelHuber;

e->setRobustKernel(rk);

rk->setDelta(deltaMono);//设置阈值

//相机内参

e->fx = pFrame->fx;

e->fy = pFrame->fy;

e->cx = pFrame->cx;

e->cy = pFrame->cy;

//地图中MapPoint的世界坐标系的坐标值

cv::Mat Xw = pMP->GetWorldPos();

e->Xw[0] = Xw.at(0);

e->Xw[1] = Xw.at(1);

e->Xw[2] = Xw.at(2);

optimizer.addEdge(e);

vpEdgesMono.push_back(e);

vnIndexEdgeMono.push_back(i);

}

else // Stereo observation 双目

{

nInitialCorrespondences++;

pFrame->mvbOutlier[i] = false;

//SET EDGE 设置边

Eigen::Matrix obs;

const cv::KeyPoint &kpUn = pFrame->mvKeysUn[i];

const float &kp_ur = pFrame->mvuRight[i];

//obs中存入的是双目相机中像素坐标下一个关键点的坐标

obs << kpUn.pt.x, kpUn.pt.y, kp_ur;

//优化变量只有pose,地图点位置固定,是一边元,双目中使用的是EdgeStereoSE3ProjectXYZOnlyPoze()。

g2o::EdgeStereoSE3ProjectXYZOnlyPose* e = new g2o::EdgeStereoSE3ProjectXYZOnlyPose();

e->setVertex(0, dynamic_cast(optimizer.vertex(0)));

e->setMeasurement(obs);

const float invSigma2 = pFrame->mvInvLevelSigma2[kpUn.octave];

Eigen::Matrix3d Info = Eigen::Matrix3d::Identity()*invSigma2;

e->setInformation(Info);

g2o::RobustKernelHuber* rk = new g2o::RobustKernelHuber;

e->setRobustKernel(rk);

rk->setDelta(deltaStereo);

e->fx = pFrame->fx;

e->fy = pFrame->fy;

e->cx = pFrame->cx;

e->cy = pFrame->cy;

e->bf = pFrame->mbf;

cv::Mat Xw = pMP->GetWorldPos();

e->Xw[0] = Xw.at(0);

e->Xw[1] = Xw.at(1);

e->Xw[2] = Xw.at(2);

optimizer.addEdge(e);

vpEdgesStereo.push_back(e);

vnIndexEdgeStereo.push_back(i);

}

}

}

}

if(nInitialCorrespondences<3)

return 0;

// We perform 4 optimizations, after each optimization we classify observation as inlier/outlier

// At the next optimization, outliers are not included, but at the end they can be classified as inliers again.

const float chi2Mono[4]={5.991,5.991,5.991,5.991};

const float chi2Stereo[4]={7.815,7.815,7.815, 7.815};

const int its[4]={10,10,10,10};

int nBad=0;

for(size_t it=0; it<4; it++)

{

vSE3->setEstimate(Converter::toSE3Quat(pFrame->mTcw));

optimizer.initializeOptimization(0);

optimizer.optimize(its[it]);

nBad=0;

for(size_t i=0, iend=vpEdgesMono.size(); imvbOutlier[idx])

{

e->computeError();

}

const float chi2 = e->chi2();

if(chi2>chi2Mono[it])

{

pFrame->mvbOutlier[idx]=true;

e->setLevel(1);

nBad++;

}

else

{

pFrame->mvbOutlier[idx]=false;

e->setLevel(0);

}

if(it==2)

e->setRobustKernel(0);

}

for(size_t i=0, iend=vpEdgesStereo.size(); imvbOutlier[idx])

{

e->computeError();

}

const float chi2 = e->chi2();

if(chi2>chi2Stereo[it])

{

pFrame->mvbOutlier[idx]=true;

e->setLevel(1);

nBad++;

}

else

{

e->setLevel(0);

pFrame->mvbOutlier[idx]=false;

}

if(it==2)

e->setRobustKernel(0);

}

if(optimizer.edges().size()<10)

break;

}

// Recover optimized pose and return number of inliers

g2o::VertexSE3Expmap* vSE3_recov = static_cast(optimizer.vertex(0));

g2o::SE3Quat SE3quat_recov = vSE3_recov->estimate();

cv::Mat pose = Converter::toCvMat(SE3quat_recov);

//设置优化完成后的位姿

pFrame->SetPose(pose);

return nInitialCorrespondences-nBad;

} (3)TrackWithMotionModel函数

/**

* 步骤:

* 1. 首先通过上一帧的位姿和速度来设置当前帧相机的位姿

* 2. 通过PnP方法估计相机位姿,再将上一帧的地图点投影到当前固定大小范围的帧平面上,如果匹配点少,那么扩大两倍的采点范围

* 3. 然后进行一次BA算法,优化相机的位姿

* 4. 优化位姿之后,对当前帧的关键点和地图点,抛弃无用的杂点,剩下的点供下一次操作使用

*/

bool Tracking::TrackWithMotionModel()

{

//构建ORBmatcher

ORBmatcher matcher(0.9,true);

// Update last frame pose according to its reference keyframe

// Create "visual odometry" points if in Localization Mode

// 根据参考关键帧更新上一帧的位姿。如果在定位模式中的话,创建视觉里程计点

/**

* 步骤1:对于双目或rgbd摄像头,根据深度值为上一关键帧生成新的MapPoints

* (跟踪过程中需要将当前帧与上一帧进行特征点匹配,将上一帧的MapPoints投影到当前帧可以缩小匹配范围)

* 在跟踪过程中,去除outlier的MapPoint,如果不及时增加MapPoint会逐渐减少

* 这个函数的功能就是补充增加RGBD和双目相机上一帧的MapPoints数

*/

UpdateLastFrame();

//根据Const Velocity Model(认为这两帧之间的相对运动和之前两帧间相对运动相同)估计当前帧的位姿

mCurrentFrame.SetPose(mVelocity*mLastFrame.mTcw);//当前帧位置等于mVelocity*mLastFrame.mTcw

fill(mCurrentFrame.mvpMapPoints.begin(),mCurrentFrame.mvpMapPoints.end(),static_cast(NULL));

// Project points seen in previous frame

// 在前一帧观察投影点

int th;

if(mSensor!=System::STEREO)//非双目搜索范围系数设为15

th=15;

else

th=7;

//步骤2:根据匀速度模型进行对上一帧的MapPoints进行跟踪

//根据上一帧特征点对应的3D点投影的位置缩小特征点匹配范围

int nmatches = matcher.SearchByProjection(mCurrentFrame,mLastFrame,th,mSensor==System::MONOCULAR);

// If few matches, uses a wider window search

//如果匹配对不到20个,扩大投影面积继续投影

if(nmatches<20)

{

fill(mCurrentFrame.mvpMapPoints.begin(),mCurrentFrame.mvpMapPoints.end(),static_cast(NULL));

nmatches = matcher.SearchByProjection(mCurrentFrame,mLastFrame,2*th,mSensor==System::MONOCULAR);

}

//若匹配数依旧小于20返回false

if(nmatches<20)

return false;

// Optimize frame pose with all matches

//步骤3:优化位姿.通过PoseOptimization优化相机位姿

Optimizer::PoseOptimization(&mCurrentFrame);

// Discard outliers

//步骤4:优化位姿后剔除outlier的mvpMapPoints.抛弃杂点

int nmatchesMap = 0;

for(int i =0; i(NULL);

mCurrentFrame.mvbOutlier[i]=false;

pMP->mbTrackInView = false;

pMP->mnLastFrameSeen = mCurrentFrame.mnId;

nmatches--;

}

else if(mCurrentFrame.mvpMapPoints[i]->Observations()>0)

nmatchesMap++;

}

}

if(mbOnlyTracking)

{

//匹配数<10,则mbVO的值为true

mbVO = nmatchesMap<10;

return nmatches>20;

}

return nmatchesMap>=10;

} 其中调用的UpdateLastFrame函数代码如下:

/**

* 1.更新最近一帧的位姿

* 2.对于双目或rgbd摄像头,为上一帧临时生成新的MapPoints,注意这些MapPoints不加入到Map中,

* 在tracking的最后会删除,跟踪过程中需要将上一帧的MapPoints投影到当前帧可以缩小匹配范围,加快当前帧与上一帧进行特征点匹配

*/

void Tracking::UpdateLastFrame()

{

// Update pose according to reference keyframe

//步骤1:更新最近一帧的位姿

KeyFrame* pRef = mLastFrame.mpReferenceKF;

cv::Mat Tlr = mlRelativeFramePoses.back();

mLastFrame.SetPose(Tlr*pRef->GetPose());

//如果上一帧为关键帧,或者单目的情况,则退出

if(mnLastKeyFrameId==mLastFrame.mnId || mSensor==System::MONOCULAR || !mbOnlyTracking)

return;

// Create "visual odometry" MapPoints

// We sort points according to their measured depth by the stereo/RGB-D sensor

//创建视觉里程计地图点,我们根据双目或者RGB-D传感器的测量深度对点进行排序

/**

*步骤2:对于双目或rgbd摄像头,为上一帧临时生成新的MapPoints

注意这些MapPoints不加入到Map中,在tracking的最后会删除

跟踪过程中需要将上一帧的MapPoints投影到当前帧可以缩小匹配范围,加快当前帧与上一帧进行特征点匹配

步骤2.1:得到上一帧有深度值的特征点

*/

vector > vDepthIdx;

vDepthIdx.reserve(mLastFrame.N);

for(int i=0; i0)

{

vDepthIdx.push_back(make_pair(z,i));

}

}

if(vDepthIdx.empty())

return;

//步骤2.2:按照深度从小到大排序

sort(vDepthIdx.begin(),vDepthIdx.end());

// We insert all close points (depthObservations()<1)

{

bCreateNew = true;

}

if(bCreateNew)

{

// 这些生成MapPoints后并没有通过:

// a.AddMapPoint、

// b.AddObservation、

// c.ComputeDistinctiveDescriptors、

// d.UpdateNormalAndDepth添加属性,

// 这些MapPoint仅仅为了提高双目和RGBD的跟踪成功率

cv::Mat x3D = mLastFrame.UnprojectStereo(i);

MapPoint* pNewMP = new MapPoint(x3D,mpMap,&mLastFrame,i);

mLastFrame.mvpMapPoints[i]=pNewMP;// 添加新的MapPoint

//标记为临时添加的MapPoint,之后在CreateNewKeyFrame之前会全部删除

mlpTemporalPoints.push_back(pNewMP);

nPoints++;

}

else

{

nPoints++;

}

if(vDepthIdx[j].first>mThDepth && nPoints>100)

break;

}

} 调用的ORBmatcher::SearchByProjection函数代码如下:

/**

* 根据投影来进行搜索,用于匹配前后两帧

* 1.先计算上一帧中的地图点MapPoint对应的像素坐标,也就是由3D点到2D点的投影;

* 2.利用计算所得的像素坐标以及描述子和当前帧的像素坐标进行匹配;

*/

int ORBmatcher::SearchByProjection(Frame &CurrentFrame, const Frame &LastFrame, const float th, const bool bMono)

{

int nmatches = 0;

// Rotation Histogram (to check rotation consistency)

vector rotHist[HISTO_LENGTH];

for(int i=0;i(2)>CurrentFrame.mb && !bMono;

const bool bBackward = -tlc.at(2)>CurrentFrame.mb && !bMono;

//遍历上一帧中的MapPoint点

for(int i=0; i相机坐标;

* 2.相机坐标-->相机归一化平面坐标;

* 3.相机归一化平面坐标-->像素坐标;

*/

// Project 投影

cv::Mat x3Dw = pMP->GetWorldPos();

//1.计算了MapPoint在相机坐标系中的坐标值

cv::Mat x3Dc = Rcw*x3Dw+tcw;

const float xc = x3Dc.at(0);

const float yc = x3Dc.at(1);

const float invzc = 1.0/x3Dc.at(2);

if(invzc<0)

continue;

/**

* X/Z = X * 1/Z = xc * invzc

* Y/Z = Y * 1/Z = yc * invzc

* u,v的计算公式如下:

* u = fx * X/Z + cx

* v = fy * Y/Z + cy

*/

//2.下面的xc*invzc和yc*invzc就是归一化平面的x,y坐标;计算所得u,v为像素坐标

float u = CurrentFrame.fx*xc*invzc+CurrentFrame.cx;

float v = CurrentFrame.fy*yc*invzc+CurrentFrame.cy;

if(uCurrentFrame.mnMaxX)

continue;

if(vCurrentFrame.mnMaxY)

continue;

//octave就是该关键点所在金字塔中哪个层

int nLastOctave = LastFrame.mvKeys[i].octave;

// Search in a window. Size depends on scale

float radius = th*CurrentFrame.mvScaleFactors[nLastOctave];

vector vIndices2;

if(bForward)

vIndices2 = CurrentFrame.GetFeaturesInArea(u,v, radius, nLastOctave);

else if(bBackward)

vIndices2 = CurrentFrame.GetFeaturesInArea(u,v, radius, 0, nLastOctave);

else

vIndices2 = CurrentFrame.GetFeaturesInArea(u,v, radius, nLastOctave-1, nLastOctave+1);

if(vIndices2.empty())

continue;

//获得该MapPoint的描述子

const cv::Mat dMP = pMP->GetDescriptor();

int bestDist = 256;

int bestIdx2 = -1;

for(vector::const_iterator vit=vIndices2.begin(), vend=vIndices2.end(); vit!=vend; vit++)

{

const size_t i2 = *vit;

if(CurrentFrame.mvpMapPoints[i2])

if(CurrentFrame.mvpMapPoints[i2]->Observations()>0)

continue;

if(CurrentFrame.mvuRight[i2]>0)

{

const float ur = u - CurrentFrame.mbf*invzc;

const float er = fabs(ur - CurrentFrame.mvuRight[i2]);

if(er>radius)

continue;

}

const cv::Mat &d = CurrentFrame.mDescriptors.row(i2);

const int dist = DescriptorDistance(dMP,d);

if(dist=0 && bin(NULL);

nmatches--;

}

}

}

}

return nmatches;

} (4)Relocalization函数代码

/**

* 重定位,从之前的关键帧中找出与当前帧之间拥有充足匹配点的候选帧,利用Ransac迭代,通过PnP求解位姿。

* 步骤:

* 1. 先计算当前帧的BOW值,并从关键帧数据库中查找候选的匹配关键帧

* 2. 构建PnP求解器,标记杂点,准备好每个关键帧和当前帧的匹配点集

* 3. 用PnP算法求解位姿,进行若干次P4P Ransac迭代,并使用非线性最小二乘优化,直到发现一个有充足inliers支持的相机位置

* 4. 返回成功或失败

*/

bool Tracking::Relocalization()

{

// Compute Bag of Words Vector

//步骤1:计算当前帧特征点的Bow映射

mCurrentFrame.ComputeBoW();

// Relocalization is performed when tracking is lost

// Track Lost: Query KeyFrame Database for keyframe candidates for relocalisation

//步骤2:找到与当前帧相似的候选关键帧

vector vpCandidateKFs = mpKeyFrameDB->DetectRelocalizationCandidates(&mCurrentFrame);

//如果未找到候选帧,返回false

if(vpCandidateKFs.empty())

return false;

const int nKFs = vpCandidateKFs.size();

// We perform first an ORB matching with each candidate

// If enough matches are found we setup a PnP solver

//我们首先执行与每个候选匹配的ORB匹配

//如果找到足够的匹配,我们设置一个PNP解算器

ORBmatcher matcher(0.75,true);

vector vpPnPsolvers;

vpPnPsolvers.resize(nKFs);

vector > vvpMapPointMatches;

vvpMapPointMatches.resize(nKFs);

vector vbDiscarded;

vbDiscarded.resize(nKFs);

int nCandidates=0;

//遍历候选关键帧

for(int i=0; iisBad())

vbDiscarded[i] = true;//去除不好的候选关键帧

else

{

//步骤3:通过BoW进行匹配,计算出pKF中和mCurrentFrame匹配的关键点的MapPoint存入vvpMapPointMatches中。

int nmatches = matcher.SearchByBoW(pKF,mCurrentFrame,vvpMapPointMatches[i]);

if(nmatches<15)

{

//候选关键帧中匹配点数小于15的丢弃

vbDiscarded[i] = true;

continue;

}

else//用pnp求解

{

//候选关键帧中匹配点数大于15的构建PnP求解器。这个PnP求解器中的3D point为vvpMapPointMatches中的MapPoint,2D点为mCurrentFrame中的关键点

//因为是重定位,所以就是求重定位的候选帧对应的MapPoint到当前帧的关键点之间的投影关系,通过投影关系确定当前帧的位姿,也就进行了重定位

PnPsolver* pSolver = new PnPsolver(mCurrentFrame,vvpMapPointMatches[i]);

//候选帧中匹配点数大于15的进行Ransac迭代

pSolver->SetRansacParameters(0.99,10,300,4,0.5,5.991);

vpPnPsolvers[i] = pSolver;

nCandidates++;

}

}

}

// Alternatively perform some iterations of P4P RANSAC

// Until we found a camera pose supported by enough inliers

// 执行一些P4P RANSAC迭代,直到我们找到一个由足够的inliers支持的相机位姿

bool bMatch = false;

ORBmatcher matcher2(0.9,true);

while(nCandidates>0 && !bMatch)

{

for(int i=0; i vbInliers;

int nInliers;

bool bNoMore;

//步骤4:通过EPnP算法估计姿态

PnPsolver* pSolver = vpPnPsolvers[i];

cv::Mat Tcw = pSolver->iterate(5,bNoMore,vbInliers,nInliers);

// If Ransac reachs max. iterations discard keyframe

if(bNoMore)

{

vbDiscarded[i]=true;

nCandidates--;

}

// If a Camera Pose is computed, optimize

if(!Tcw.empty())

{

Tcw.copyTo(mCurrentFrame.mTcw);

set sFound;

const int np = vbInliers.size();

for(int j=0; j(NULL);

// If few inliers, search by projection in a coarse window and optimize again

//步骤6:如果内点比较少,在一个大概的窗口中投影并再次进行位姿优化

if(nGood<50)

{

int nadditional =matcher2.SearchByProjection(mCurrentFrame,vpCandidateKFs[i],sFound,10,100);

if(nadditional+nGood>=50)

{

nGood = Optimizer::PoseOptimization(&mCurrentFrame);

// If many inliers but still not enough, search by projection again in a narrower window

// the camera has been already optimized with many points

if(nGood>30 && nGood<50)

{

sFound.clear();

for(int ip =0; ip=50)

{

nGood = Optimizer::PoseOptimization(&mCurrentFrame);

for(int io =0; io=50)

{

bMatch = true;

break;

}

}

}

}

if(!bMatch)

{

return false;

}

else

{

mnLastRelocFrameId = mCurrentFrame.mnId;

return true;

}

} 其中的DetectRelocalizationCandidates函数如下:

/**

* 检测重定位候选关键帧

* 因为当前的系统状态变为了LOST,所以需要进行重定位。

*/

vector KeyFrameDatabase::DetectRelocalizationCandidates(Frame *F)

{

list lKFsSharingWords;

// Search all keyframes that share a word with current frame

//查找所有与当前帧共享同一个单词的关键帧

{

unique_lock lock(mMutex);

//遍历当前帧F的单词向量

for(DBoW2::BowVector::const_iterator vit=F->mBowVec.begin(), vend=F->mBowVec.end(); vit != vend; vit++)

{

//获取和当前帧共享相同单词的关键帧列表

list &lKFs = mvInvertedFile[vit->first];

//遍历共享同一单词的关键帧

for(list::iterator lit=lKFs.begin(), lend= lKFs.end(); lit!=lend; lit++)

{

KeyFrame* pKFi=*lit;

if(pKFi->mnRelocQuery!=F->mnId)

{

pKFi->mnRelocWords=0;

pKFi->mnRelocQuery=F->mnId;

lKFsSharingWords.push_back(pKFi);

}

pKFi->mnRelocWords++;

}

}

}

if(lKFsSharingWords.empty())

return vector();

// Only compare against those keyframes that share enough words

//只比较共同含有足够多的单词的关键帧

int maxCommonWords=0;

for(list::iterator lit=lKFsSharingWords.begin(), lend= lKFsSharingWords.end(); lit!=lend; lit++)

{

if((*lit)->mnRelocWords > maxCommonWords)

maxCommonWords=(*lit)->mnRelocWords;

}

int minCommonWords = maxCommonWords*0.8f;

list > lScoreAndMatch;

int nscores=0;

// Compute similarity score.

for(list::iterator lit=lKFsSharingWords.begin(), lend= lKFsSharingWords.end(); lit!=lend; lit++)

{

KeyFrame* pKFi = *lit;

if(pKFi->mnRelocWords>minCommonWords)

{

nscores++;

//计算当前帧F和关键帧pKFi之间的词包向量的分值

float si = mpVoc->score(F->mBowVec,pKFi->mBowVec);

pKFi->mRelocScore=si;

lScoreAndMatch.push_back(make_pair(si,pKFi));

}

}

if(lScoreAndMatch.empty())

return vector();

list > lAccScoreAndMatch;

float bestAccScore = 0;

// Lets now accumulate score by covisibility

for(list >::iterator it=lScoreAndMatch.begin(), itend=lScoreAndMatch.end(); it!=itend; it++)

{

KeyFrame* pKFi = it->second;

vector vpNeighs = pKFi->GetBestCovisibilityKeyFrames(10);

float bestScore = it->first;

float accScore = bestScore;

KeyFrame* pBestKF = pKFi;

for(vector::iterator vit=vpNeighs.begin(), vend=vpNeighs.end(); vit!=vend; vit++)

{

KeyFrame* pKF2 = *vit;

if(pKF2->mnRelocQuery!=F->mnId)

continue;

accScore+=pKF2->mRelocScore;

if(pKF2->mRelocScore>bestScore)

{

pBestKF=pKF2;

bestScore = pKF2->mRelocScore;

}

}

lAccScoreAndMatch.push_back(make_pair(accScore,pBestKF));

if(accScore>bestAccScore)

bestAccScore=accScore;

}

// Return all those keyframes with a score higher than 0.75*bestScore

// 返回所有分值高于0.75×bestScore的关键帧

float minScoreToRetain = 0.75f*bestAccScore;

set spAlreadyAddedKF;

vector vpRelocCandidates;

vpRelocCandidates.reserve(lAccScoreAndMatch.size());

for(list >::iterator it=lAccScoreAndMatch.begin(), itend=lAccScoreAndMatch.end(); it!=itend; it++)

{

const float &si = it->first;

if(si > minScoreToRetain)

{

KeyFrame* pKFi = it->second;

if(!spAlreadyAddedKF.count(pKFi))

{

vpRelocCandidates.push_back(pKFi);

spAlreadyAddedKF.insert(pKFi);

}

}

}

return vpRelocCandidates;

} Relocalization调用的ORBmatcher::SearchByBoW函数如下:

/**

* pKF 为参考关键帧

* F 为当前帧

* vpMapPointMatches 作为出参,存储匹配的MapPoint点

* 该函数作用也就是吧pKF中的MapPoint和F中按照描述子距离最小进行匹配

*/

int ORBmatcher::SearchByBoW(KeyFrame* pKF,Frame &F, vector &vpMapPointMatches)

{

//获得参考关键帧的MapPoint列表

const vector vpMapPointsKF = pKF->GetMapPointMatches();

vpMapPointMatches = vector(F.N,static_cast(NULL));

const DBoW2::FeatureVector &vFeatVecKF = pKF->mFeatVec;

int nmatches=0;

vector rotHist[HISTO_LENGTH];

for(int i=0;ifirst == Fit->first)

{

const vector vIndicesKF = KFit->second;

const vector vIndicesF = Fit->second;

//遍历参考关键帧中对应的MapPoint和对应描述子

for(size_t iKF=0; iKFisBad())

continue;

//获取参考关键帧中的realIdxKF这个index对应的描述子

const cv::Mat &dKF= pKF->mDescriptors.row(realIdxKF);

int bestDist1=256;

int bestIdxF =-1 ;

int bestDist2=256;

//遍历计算最小距离和次最小距离

for(size_t iF=0; iF(bestDist1)(bestDist2))

{

//pMP为传入的参考关键帧里边的MapPoint,bestIdxF为当前帧中和pMP描述子最小的特征点的index

vpMapPointMatches[bestIdxF]=pMP;

const cv::KeyPoint &kp = pKF->mvKeysUn[realIdxKF];

//方向检查

if(mbCheckOrientation)

{

float rot = kp.angle-F.mvKeys[bestIdxF].angle;

if(rot<0.0)

rot+=360.0f;

int bin = round(rot*factor);

if(bin==HISTO_LENGTH)

bin=0;

assert(bin>=0 && binfirst < Fit->first)

{

KFit = vFeatVecKF.lower_bound(Fit->first);

}

else

{

Fit = F.mFeatVec.lower_bound(KFit->first);

}

}

if(mbCheckOrientation)

{

int ind1=-1;

int ind2=-1;

int ind3=-1;

ComputeThreeMaxima(rotHist,HISTO_LENGTH,ind1,ind2,ind3);

for(int i=0; i(NULL);

nmatches--;

}

}

}

return nmatches;

} 3)跟踪情况下的位姿估计

在Track函数中,跟踪情况下的相机位姿估计代码为if(!mbOnlyTracking)对应的else分支中,该部分的代码如下:

// Localization Mode: Local Mapping is deactivated

if(mState==LOST)

{

//丢失了需要重定位

bOK = Relocalization();

}

else

{

//mbVO在Tracking线程初始化的时候初始值为false

if(!mbVO)

{

// In last frame we tracked enough MapPoints in the map

//若上一帧有速度,使用TrackWithMotionModel

//若上一帧没有速度,使用TrackReferenceKeyFrame

if(!mVelocity.empty())

{

bOK = TrackWithMotionModel();

}

else

{

bOK = TrackReferenceKeyFrame();

}

}

else

{

// In last frame we tracked mainly "visual odometry" points.

// We compute two camera poses, one from motion model and one doing relocalization.

// If relocalization is sucessfull we choose that solution, otherwise we retain

// the "visual odometry" solution.

//在最后一帧,我们跟踪了主要的视觉里程计点

//我们计算两个相机位姿,一个来自运动模型一个做重定位。如果重定位成功我们就选择这个思路,否则我们继续使用视觉里程计思路

bool bOKMM = false;

bool bOKReloc = false;

vector vpMPsMM;

vector vbOutMM;

cv::Mat TcwMM;

if(!mVelocity.empty())

{

bOKMM = TrackWithMotionModel();

vpMPsMM = mCurrentFrame.mvpMapPoints;

vbOutMM = mCurrentFrame.mvbOutlier;

TcwMM = mCurrentFrame.mTcw.clone();

}

bOKReloc = Relocalization();

if(bOKMM && !bOKReloc)

{

mCurrentFrame.SetPose(TcwMM);

mCurrentFrame.mvpMapPoints = vpMPsMM;

mCurrentFrame.mvbOutlier = vbOutMM;

if(mbVO)

{

for(int i =0; iIncreaseFound();

}

}

}

}

else if(bOKReloc)

{

//重定位成功的时候,就使用重定位的思路,所以标记mbVO要设置为false

mbVO = false;

}

bOK = bOKReloc || bOKMM;

}

} 可以看出,也是需要先判断系统状态,如果为LOST表示相机已经跟丢了,需要进行重定位。

4)局部地图跟踪函数TrackLocalMap分析

/**

* 步骤:

* 1. 更新Covisibility Graph, 更新局部关键帧

* 2. 根据局部关键帧,更新局部地图点,接下来运行过滤函数 isInFrustum

* 3. 将地图点投影到当前帧上,超出图像范围的舍弃

* 4. 当前视线方向v和地图点云平均视线方向n, 舍弃n*vIncreaseFound();

if(!mbOnlyTracking)

{

//该MapPoint被其它关键帧观测到过

if(mCurrentFrame.mvpMapPoints[i]->Observations()>0)

mnMatchesInliers++;

}

else

//记录当前帧跟踪到的MapPoints,用于统计跟踪效果

mnMatchesInliers++;

}

else if(mSensor==System::STEREO)

mCurrentFrame.mvpMapPoints[i] = static_cast(NULL);

}

}

// Decide if the tracking was succesful

// More restrictive if there was a relocalization recently

//步骤4:决定是否跟踪成功

if(mCurrentFrame.mnId 可以看出,TrackLocalMap函数中主要做了下面4个操作:

(1)更新局部地图:UpdateLocalMap()

/**

* 更新局部地图

* 局部地图包括:k1个关键帧、k2个临近关键帧和参考关键帧、由这些关键帧观测到的MapPoints

*

*/

void Tracking::UpdateLocalMap()

{

// This is for visualization

mpMap->SetReferenceMapPoints(mvpLocalMapPoints);

// 更新局部关键帧列表

UpdateLocalKeyFrames();

// 更新局部点

UpdateLocalPoints();

}UpdateLocalKeyFrames函数如下:

/**

* 更新局部关键帧列表,能看到当前关键帧中所有MapPoint的关键帧构成了一个布局关键帧列表mvpLocalKeyFrames

* 遍历当前帧的MapPoints,将观测到这些MapPoints的关键帧和相邻的关键帧取出,更新mvpLocalKeyFrames

*/

void Tracking::UpdateLocalKeyFrames()

{

// Each map point vote for the keyframes in which it has been observed

//步骤1:遍历当前帧的MapPoints,记录所有能观测到当前帧MapPoints的关键帧

map keyframeCounter;

for(int i=0; iisBad())

{

// 获取能观测到当前帧MapPoints的关键帧

const map observations = pMP->GetObservations();

for(map::const_iterator it=observations.begin(), itend=observations.end(); it!=itend; it++)

//keyframeCounter中最后存放的就是对应关键帧可以看到当前帧mCurrentFrame里多少个MapPoint

keyframeCounter[it->first]++;

}

else

{

mCurrentFrame.mvpMapPoints[i]=NULL;

}

}

}

if(keyframeCounter.empty())

return;

int max=0;

KeyFrame* pKFmax= static_cast(NULL);

//步骤2:更新局部关键帧(mvpLocalKeyFrames),添加局部关键帧有三个策略

mvpLocalKeyFrames.clear();

mvpLocalKeyFrames.reserve(3*keyframeCounter.size());

// All keyframes that observe a map point are included in the local map. Also check which keyframe shares most points

//策略1:能观测到当前帧MapPoints的关键帧作为局部关键帧,存入局部关键帧列表中

for(map::const_iterator it=keyframeCounter.begin(), itEnd=keyframeCounter.end(); it!=itEnd; it++)

{

KeyFrame* pKF = it->first;

if(pKF->isBad())

continue;

//it->second表示pKF这个关键帧可以看到多少个MapPoint

if(it->second>max)

{

max=it->second;

//pKFmax表示能看到MapPoint点数最多的关键帧

pKFmax=pKF;

}

//mvpLocalKeyFrames里边存放的是能看到当前帧对应的MapPoint的关键帧列表

mvpLocalKeyFrames.push_back(it->first);

//mnTrackReferenceForFrame防止重复添加局部关键帧

pKF->mnTrackReferenceForFrame = mCurrentFrame.mnId;

}

// Include also some not-already-included keyframes that are neighbors to already-included keyframes

//策略2:与策略1得到的局部关键帧共视程度很高的关键帧作为局部关键帧

for(vector::const_iterator itKF=mvpLocalKeyFrames.begin(), itEndKF=mvpLocalKeyFrames.end(); itKF!=itEndKF; itKF++)

{

// Limit the number of keyframes

if(mvpLocalKeyFrames.size()>80)

break;

KeyFrame* pKF = *itKF;

//策略2.1:最佳共视的10帧

const vector vNeighs = pKF->GetBestCovisibilityKeyFrames(10);

for(vector::const_iterator itNeighKF=vNeighs.begin(), itEndNeighKF=vNeighs.end(); itNeighKF!=itEndNeighKF; itNeighKF++)

{

KeyFrame* pNeighKF = *itNeighKF;

if(!pNeighKF->isBad())

{

//mnTrackReferenceForFrame防止重复添加局部关键帧

if(pNeighKF->mnTrackReferenceForFrame!=mCurrentFrame.mnId)

{

mvpLocalKeyFrames.push_back(pNeighKF);

pNeighKF->mnTrackReferenceForFrame=mCurrentFrame.mnId;

break;

}

}

}

//策略2.2:自己的子关键帧

const set spChilds = pKF->GetChilds();

for(set::const_iterator sit=spChilds.begin(), send=spChilds.end(); sit!=send; sit++)

{

KeyFrame* pChildKF = *sit;

if(!pChildKF->isBad())

{

if(pChildKF->mnTrackReferenceForFrame!=mCurrentFrame.mnId)

{

mvpLocalKeyFrames.push_back(pChildKF);

pChildKF->mnTrackReferenceForFrame=mCurrentFrame.mnId;

break;

}

}

}

//策略2.3:自己的父关键帧

KeyFrame* pParent = pKF->GetParent();

if(pParent)

{

//mnTrackReferenceForFrame防止重复添加局部关键帧

if(pParent->mnTrackReferenceForFrame!=mCurrentFrame.mnId)

{

mvpLocalKeyFrames.push_back(pParent);

pParent->mnTrackReferenceForFrame=mCurrentFrame.mnId;

break;

}

}

}

//步骤3:更新当前帧的参考关键帧,与自己共视程度最高的关键帧作为参考关键帧。

if(pKFmax)

{

mpReferenceKF = pKFmax;

mCurrentFrame.mpReferenceKF = mpReferenceKF;

}

} UpdateLocalPoints函数如下:

/**

* 更新局部关键点

* 局部关键帧mvpLocalKeyFrames的MapPoints,更新mvpLocalMapPoints

*/

void Tracking::UpdateLocalPoints()

{

//步骤1:清空局部MapPoints

mvpLocalMapPoints.clear();

//步骤2:遍历局部关键帧mvpLocalKeyFrames

for(vector::const_iterator itKF=mvpLocalKeyFrames.begin(), itEndKF=mvpLocalKeyFrames.end(); itKF!=itEndKF; itKF++)

{

KeyFrame* pKF = *itKF;

const vector vpMPs = pKF->GetMapPointMatches();

for(vector::const_iterator itMP=vpMPs.begin(), itEndMP=vpMPs.end(); itMP!=itEndMP; itMP++)

{

MapPoint* pMP = *itMP;

if(!pMP)

continue;

if(pMP->mnTrackReferenceForFrame==mCurrentFrame.mnId)

continue;

if(!pMP->isBad())

{

mvpLocalMapPoints.push_back(pMP);

pMP->mnTrackReferenceForFrame=mCurrentFrame.mnId;

}

}

}

} (2)在局部地图中查找与当前帧匹配的地图点:SearchLocalPoints

/**

* 函数功能:在局部地图中查找在当前帧视野范围内的点,将视野范围内的点和当前帧的特征点进行投影匹配

* 步骤:

* 1:遍历当前帧的mvpMapPoints,标记这些MapPoints不参与之后的搜索

* 2:将所有局部MapPoints投影到当前帧。先判断是否在视野范围内,然后进行投影匹配

* 3:对于双目或rgbd摄像头,为当前帧生成新的MapPoints

*/

void Tracking::SearchLocalPoints()

{

// Do not search map points already matched

//步骤1:遍历当前帧的mvpMapPoints,标记这些MapPoints不参与之后的搜索

//因为当前的mvpMapPoints一定在当前帧的视野中

for(vector::iterator vit=mCurrentFrame.mvpMapPoints.begin(), vend=mCurrentFrame.mvpMapPoints.end(); vit!=vend; vit++)

{

MapPoint* pMP = *vit;

if(pMP)

{

if(pMP->isBad())

{

*vit = static_cast(NULL);

}

else

{

// 更新能观测到该点的帧数加1

pMP->IncreaseVisible();

//标记该点被当前帧观测到

pMP->mnLastFrameSeen = mCurrentFrame.mnId;

//标记该点将来不被投影,因为已经匹配过

pMP->mbTrackInView = false;

}

}

}

int nToMatch=0;

// Project points in frame and check its visibility

//步骤2:将所有局部MapPoints投影到当前帧,判断是否在视野范围内,然后进行投影匹配

for(vector::iterator vit=mvpLocalMapPoints.begin(), vend=mvpLocalMapPoints.end(); vit!=vend; vit++)

{

MapPoint* pMP = *vit;

// 已经被当前帧观测到MapPoint不再判断是否能被当前帧观测到

if(pMP->mnLastFrameSeen == mCurrentFrame.mnId)

continue;

if(pMP->isBad())

continue;

// Project (this fills MapPoint variables for matching)

//步骤2.1:判断LocalMapPoints中的点是否在在视野内

if(mCurrentFrame.isInFrustum(pMP,0.5))

{

//观测到该MapPoint点的帧数加1,该MapPoint在某些帧的视野范围内

pMP->IncreaseVisible();

//只有在视野范围内的MapPoints才参与之后的投影匹配

nToMatch++;

}

}

if(nToMatch>0)

{

ORBmatcher matcher(0.8);

int th = 1;

if(mSensor==System::RGBD)

th=3;

// If the camera has been relocalised recently, perform a coarser search

//如果不久前进行过重定位,那么进行一个更加宽泛的搜索,阈值需要增大

if(mCurrentFrame.mnId (3)进行位姿优化:Optimizer::PoseOptimization

PoseOptimization函数代码在上边已经出现过,这里不再列出。

(4)判断局部地图是否跟踪成功

局部地图是否跟踪成功代码如下:

if(mCurrentFrame.mnId < mnLastRelocFrameId+mMaxFrames && mnMatchesInliers < 50)

return false;

if(mnMatchesInliers<30)

return false;

else

return true;可以看出跟踪失败的条件为:

第一种情况:当前帧id距离上次重定位的帧id之间的距离是否太短,并且当前跟踪匹配上的MapPoint点<50。

mCurrentFrame.mnId是当前帧的ID。

mnLastRelocFrameId是最近一次重定位帧的ID。

mMaxFrames等于图像输入的帧率,kitti单目的配置文件中该值为10.

第二种情况:如果在第一种情况不满足的情况下,需要判断当前跟踪匹配上的MapPoint是否<30,小于30则认为跟踪失败(因为匹配的地图点太少了)。

5)创建新的关键帧函数CreateNewKeyFrame分析

“关键帧是相机运动过程中某几个特殊的帧,这里“特殊”的意义是可以由我们自己指定的。常见的做法是,每当相机运动一定间隔,就取一个新的关键帧并保存起来”。这段是高博《视觉SLAM十四讲》中的描述,在阅读ORB-SLAM2代码时可以对照着来理解其中的意义。

关键帧不是随便来一个帧都可以作为关键帧的,关键帧的创建需要判断条件。NeedNewKeyFrame()函数中就是判断该条件的。

(1)NeedNewKeyFrame函数

/**

* 函数功能:判断是否需要生成新的关键帧

* 确定关键帧的标准:

* 1.在上一全局重定位后,过了20帧;

* 2.局部建图闲置了,或在上一关键帧插入后过了20帧;

* 3.当前帧跟踪到大于50个点;

* 4.当前帧跟踪到的比参考帧少了90%

*

*/

bool Tracking::NeedNewKeyFrame()

{

/**

* 步骤1:如果用户在界面上选择重定位,那么将不插入关键帧

* 由于插入关键帧过程中会生成MapPoint,因此用户选择重定位后地图上的点云和关键帧都不会再增加

*/

if(mbOnlyTracking)//如果仅跟踪,则不选择关键帧

return false;

// If Local Mapping is freezed by a Loop Closure do not insert keyframes

//如果局部地图被闭环检测使用,则不插入关键帧

if(mpLocalMapper->isStopped() || mpLocalMapper->stopRequested())

return false;

const int nKFs = mpMap->KeyFramesInMap();//关键帧数

// Do not insert keyframes if not enough frames have passed from last relocalisation

/**

* 步骤2:判断是否距离上一次插入关键帧的时间太短

* mCurrentFrame.mnId是当前帧的ID

* mnLastRelocFrameId是最近一次重定位帧的ID

* mMaxFrames等于图像输入的帧率

* 如果关键帧比较少,则考虑插入关键帧

* 或距离上一次重定位超过1s,则考虑插入关键帧

* kitti从单目配置文件中读取的mMaxFrames值为10

*/

if(mCurrentFrame.mnId < mnLastRelocFrameId+mMaxFrames && nKFs>mMaxFrames)

return false;

// Tracked MapPoints in the reference keyframe

//步骤3:得到参考关键帧跟踪到的MapPoints数量

//在UpdateLocalKeyFrames函数中会将与当前关键帧共视程度最高的关键帧设定为当前帧的参考关键帧

int nMinObs = 3;

if(nKFs<=2)

nMinObs=2;

int nRefMatches = mpReferenceKF->TrackedMapPoints(nMinObs);//获取参考关键帧跟踪到的MapPoints数量

// Local Mapping accept keyframes?

//步骤4:查询局部地图管理器是否繁忙

bool bLocalMappingIdle = mpLocalMapper->AcceptKeyFrames();

// Check how many "close" points are being tracked and how many could be potentially created.

//步骤5:对于双目或RGBD摄像头,统计总的可以添加的MapPoints数量和跟踪到地图中的MapPoints数量

int nNonTrackedClose = 0;

int nTrackedClose= 0;

//非单目相机的处理

if(mSensor!=System::MONOCULAR)

{

for(int i =0; i0 && mCurrentFrame.mvDepth[i]70);

// Thresholds

//步骤6:决策是否需要插入关键帧

float thRefRatio = 0.75f;

if(nKFs<2)

thRefRatio = 0.4f;

if(mSensor==System::MONOCULAR)

thRefRatio = 0.9f;

// Condition 1a: More than "MaxFrames" have passed from last keyframe insertion

//自从上次之后已经有超过MaxFrames帧没有插入

const bool c1a = mCurrentFrame.mnId>=mnLastKeyFrameId+mMaxFrames;

// Condition 1b: More than "MinFrames" have passed and Local Mapping is idle

//local mapping 处于空闲状态

const bool c1b = (mCurrentFrame.mnId>=mnLastKeyFrameId+mMinFrames && bLocalMappingIdle);

//Condition 1c: tracking is weak

const bool c1c = mSensor!=System::MONOCULAR && (mnMatchesInliers15);

if((c1a||c1b||c1c)&&c2)

{

// If the mapping accepts keyframes, insert keyframe.

// Otherwise send a signal to interrupt BA

//如果mapping接受关键帧,则插入关键帧,否则发送信号到中断BA

if(bLocalMappingIdle)

{

return true;

}

else

{

mpLocalMapper->InterruptBA();

if(mSensor!=System::MONOCULAR)

{

// 队列里不能阻塞太多关键帧

// tracking插入关键帧不是直接插入,而且先插入到mlNewKeyFrames中,

// 然后localmapper再逐个pop出来插入到mspKeyFrames

if(mpLocalMapper->KeyframesInQueue()<3)

return true;

else

return false;

}

else

return false;

}

}

else

return false;

} (2)CreateNewKeyFrame函数

/**

* 1:用当前帧构造成关键帧

* 2:将当前关键帧设置为当前帧的参考关键帧

* 3:对于双目或rgbd摄像头,为当前帧生成新的MapPoints

*/

void Tracking::CreateNewKeyFrame()

{

if(!mpLocalMapper->SetNotStop(true))

return;

//步骤1:用当前帧构造成关键帧

KeyFrame* pKF = new KeyFrame(mCurrentFrame,mpMap,mpKeyFrameDB);

//步骤2:将当前关键帧设置为当前帧的参考关键帧

//在UpdateLocalKeyFrames函数中会将与当前关键帧共视程度最高的关键帧设定为当前帧的参考关键帧

mpReferenceKF = pKF;

mCurrentFrame.mpReferenceKF = pKF;

// 步骤3:对于双目或rgbd摄像头,为当前帧生成新的MapPoints

if(mSensor!=System::MONOCULAR)

{

//根据Tcw计算mRcw、mtcw和mRwc、mOw

mCurrentFrame.UpdatePoseMatrices();

// We sort points by the measured depth by the stereo/RGBD sensor.

// We create all those MapPoints whose depth < mThDepth.

// If there are less than 100 close points we create the 100 closest.

//步骤3.1:得到当前帧深度小于阈值的特征点

//创建新的MapPoint, depth < mThDepth

vector > vDepthIdx;

vDepthIdx.reserve(mCurrentFrame.N);

for(int i=0; i0)

{

vDepthIdx.push_back(make_pair(z,i));

}

}

if(!vDepthIdx.empty())

{

//步骤3.2:按照深度从小到大排序

sort(vDepthIdx.begin(),vDepthIdx.end());

//步骤3.3:将距离比较近的点包装成MapPoints

int nPoints = 0;

for(size_t j=0; jObservations()<1)

{

bCreateNew = true;

mCurrentFrame.mvpMapPoints[i] = static_cast(NULL);

}

if(bCreateNew)

{

cv::Mat x3D = mCurrentFrame.UnprojectStereo(i);

MapPoint* pNewMP = new MapPoint(x3D,pKF,mpMap);

// 这些添加属性的操作是每次创建MapPoint后都要做的

pNewMP->AddObservation(pKF,i);

pKF->AddMapPoint(pNewMP,i);

pNewMP->ComputeDistinctiveDescriptors();

pNewMP->UpdateNormalAndDepth();

mpMap->AddMapPoint(pNewMP);

mCurrentFrame.mvpMapPoints[i]=pNewMP;

nPoints++;

}

else

{

nPoints++;

}

// 这里决定了双目和rgbd摄像头时地图点云的稠密程度

// 但是仅仅为了让地图稠密直接改这些不太好,

// 因为这些MapPoints会参与之后整个slam过程

if(vDepthIdx[j].first>mThDepth && nPoints>100)

break;

}

}

}

/**

* 往LocalMapping线程的mlNewKeyFrames队列中插入新生成的keyframe

* LocalMapping线程中检测到有队列中有keyframe插入后线程会run起来

*/

mpLocalMapper->InsertKeyFrame(pKF);

mpLocalMapper->SetNotStop(false);

mnLastKeyFrameId = mCurrentFrame.mnId;

mpLastKeyFrame = pKF;

} 3.小结

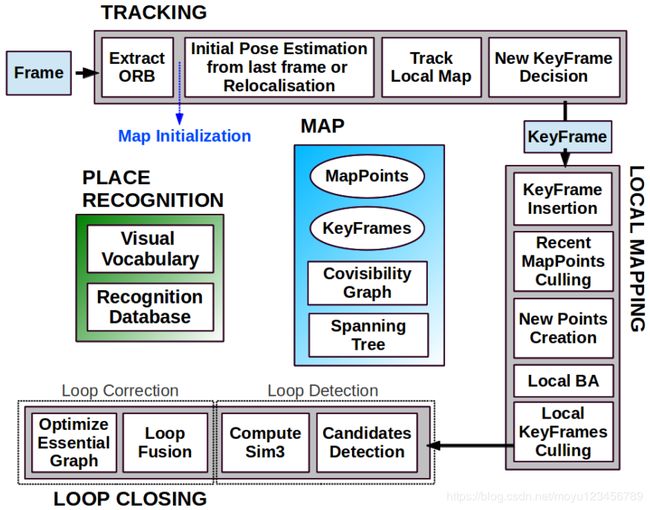

以上是ORB-SLAM2的系统框架图,可以看出Tracking线程主要完成以下几种操作:

1)Extract ORB:ORB-SLAM2代码阅读笔记(四):Tracking线程2——ORB特征提取 中已经介绍过了。

2)Initial Pose Estimation from last frame or Relocalisation;

3)Track Local Map:

4)New KeyFrame Decision;

其中2)、3)、4)都在本篇笔记中有对应,需要仔细体会理解。Tracking线程执行完后会生成KeyFrame传给LocalMapping线程,这个操作在CreateNewKeyFrame函数中下面这一行体现:

/**

* 往LocalMapping线程的mlNewKeyFrames队列中插入新生成的keyframe

* LocalMapping线程中检测到有队列中有keyframe插入后线程会run起来

*/

mpLocalMapper->InsertKeyFrame(pKF);