【Pyspark 】GroupBy分组排序

分组排序:

https://blog.csdn.net/weixin_40161254/article/details/88817225

df_spark_hotpoi = spark.sql("select routeid, cityid, row_number() over (partition by routeid order by sortno asc) as rank from table where sortno<=5 ")

df_spark_hotpoi3.orderBy(["cityid" ,'rank'], ascending= [1,1] ).show()单条件、多条件groupby

https://blog.csdn.net/weixin_42864239/article/details/94456765

http://www.it1352.com/837888.html

不接agg的计数

https://blog.csdn.net/m0_38052384/article/details/100362340

接agg的:

在groupby之后需要接agg,再进行其他操作

import pyspark.sql.functions as F

Eg:

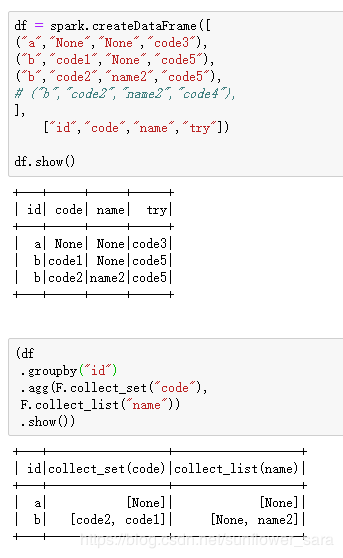

# 建立数据

df = spark.createDataFrame([

("a","None","None","code3"),

("b","code1","None","code5"),

("b","code2","name2","code5"),

("b","code2","name2","code4"),

],

["id","code","name","try"])

df.show()

# 进行groupby

#情况1

单个列进行groupby

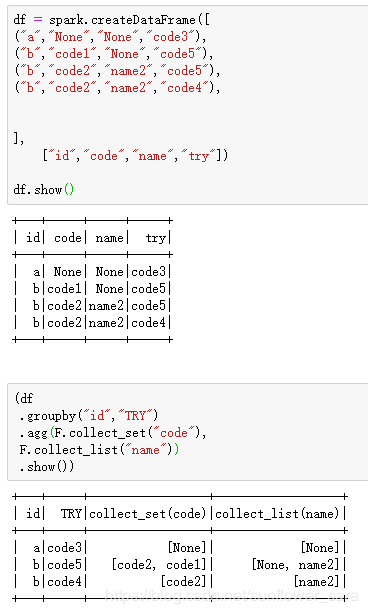

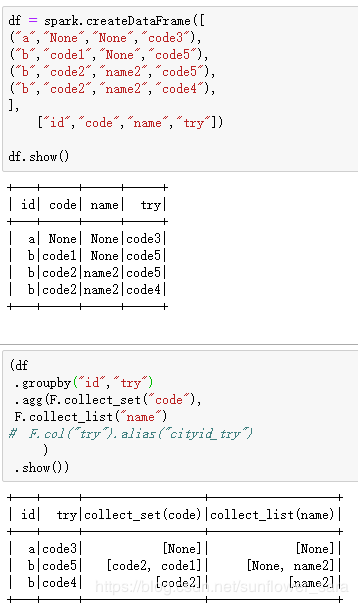

df

.groupby("id","TRY")

.agg(F.collect_set("code"),

F.collect_list("name"))

.show()

# 情况2

如果需要保留多个原始字段,则需要同时对这多个字段进行groupby

这几个字段应该具有相同的对应关系,则之后的关系也是对应的

# 情况3

如果需要保留多个原始字段,则需要同时对这多个字段进行groupby

如果这几个字段具有不同的对应关系,则会对应多个不同的分组,依次以各个gourpby的字段进行分组

综合使用例子

Eg:

import pyspark.sql.functions as F

df_ = df.groupby("country").agg(F.collect_list(F.col('id')).alias("list_new"), F.count(F.col("id")).alias("num")).withColumn("list_new2", F.concat_ws(",",F.col("list_new"))).drop("list_new").where("num>0")