学习笔记|Pytorch使用教程09(模型创建与nn.Module)

学习笔记|Pytorch使用教程09

本学习笔记主要摘自“深度之眼”,做一个总结,方便查阅。

使用Pytorch版本为1.2。

- 网络模型创建步骤

- nn.Module属性

- 作业

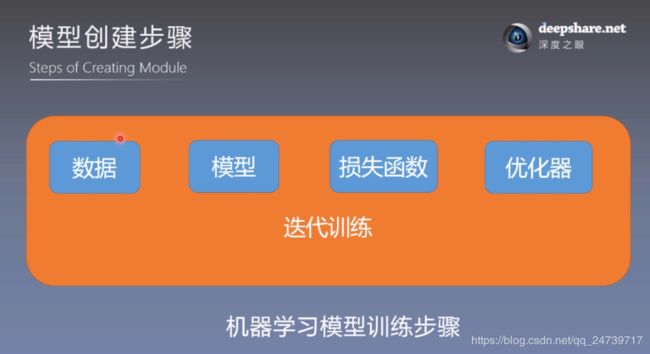

一.网络模型创建步骤

import os

import random

import numpy as np

import torch

import torch.nn as nn

from torch.utils.data import DataLoader

import torchvision.transforms as transforms

import torch.optim as optim

from matplotlib import pyplot as plt

from model.lenet import LeNet

from tools.my_dataset import RMBDataset

def set_seed(seed=1):

random.seed(seed)

np.random.seed(seed)

torch.manual_seed(seed)

torch.cuda.manual_seed(seed)

set_seed() # 设置随机种子

rmb_label = {"1": 0, "100": 1}

# 参数设置

MAX_EPOCH = 10

BATCH_SIZE = 16

LR = 0.01

log_interval = 10

val_interval = 1

# ============================ step 1/5 数据 ============================

split_dir = os.path.join( "..", "data", "rmb_split")

train_dir = os.path.join(split_dir, "train")

valid_dir = os.path.join(split_dir, "valid")

norm_mean = [0.485, 0.456, 0.406]

norm_std = [0.229, 0.224, 0.225]

train_transform = transforms.Compose([

transforms.Resize((32, 32)),

transforms.RandomCrop(32, padding=4),

transforms.ToTensor(),

transforms.Normalize(norm_mean, norm_std),

])

valid_transform = transforms.Compose([

transforms.Resize((32, 32)),

transforms.ToTensor(),

transforms.Normalize(norm_mean, norm_std),

])

# 构建MyDataset实例

train_data = RMBDataset(data_dir=train_dir, transform=train_transform)

valid_data = RMBDataset(data_dir=valid_dir, transform=valid_transform)

# 构建DataLoder

train_loader = DataLoader(dataset=train_data, batch_size=BATCH_SIZE, shuffle=True)

valid_loader = DataLoader(dataset=valid_data, batch_size=BATCH_SIZE)

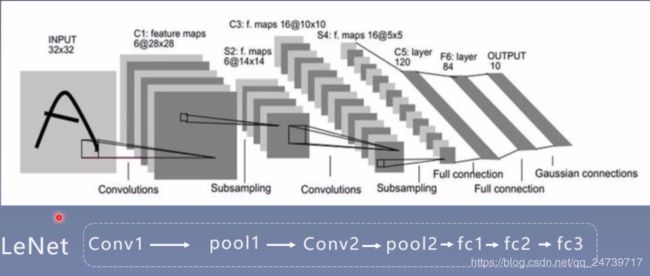

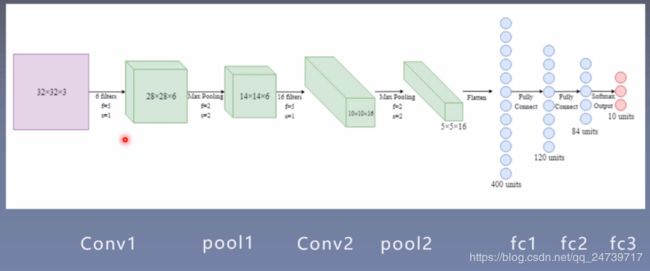

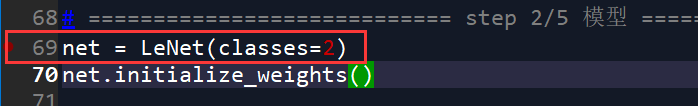

# ============================ step 2/5 模型 ============================

net = LeNet(classes=2)

net.initialize_weights()

# ============================ step 3/5 损失函数 ============================

criterion = nn.CrossEntropyLoss() # 选择损失函数

# ============================ step 4/5 优化器 ============================

optimizer = optim.SGD(net.parameters(), lr=LR, momentum=0.9) # 选择优化器

scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.1) # 设置学习率下降策略

# ============================ step 5/5 训练 ============================

train_curve = list()

valid_curve = list()

for epoch in range(MAX_EPOCH):

loss_mean = 0.

correct = 0.

total = 0.

net.train()

for i, data in enumerate(train_loader):

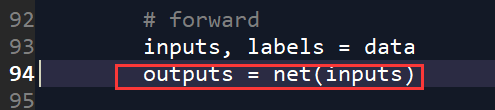

# forward

inputs, labels = data

outputs = net(inputs)

# backward

optimizer.zero_grad()

loss = criterion(outputs, labels)

loss.backward()

# update weights

optimizer.step()

# 统计分类情况

_, predicted = torch.max(outputs.data, 1)

total += labels.size(0)

correct += (predicted == labels).squeeze().sum().numpy()

# 打印训练信息

loss_mean += loss.item()

train_curve.append(loss.item())

if (i+1) % log_interval == 0:

loss_mean = loss_mean / log_interval

print("Training:Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(

epoch, MAX_EPOCH, i+1, len(train_loader), loss_mean, correct / total))

loss_mean = 0.

scheduler.step() # 更新学习率

# validate the model

if (epoch+1) % val_interval == 0:

correct_val = 0.

total_val = 0.

loss_val = 0.

net.eval()

with torch.no_grad():

for j, data in enumerate(valid_loader):

inputs, labels = data

outputs = net(inputs)

loss = criterion(outputs, labels)

_, predicted = torch.max(outputs.data, 1)

total_val += labels.size(0)

correct_val += (predicted == labels).squeeze().sum().numpy()

loss_val += loss.item()

valid_curve.append(loss_val)

print("Valid:\t Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(

epoch, MAX_EPOCH, j+1, len(valid_loader), loss_val, correct / total))

train_x = range(len(train_curve))

train_y = train_curve

train_iters = len(train_loader)

valid_x = np.arange(1, len(valid_curve)+1) * train_iters*val_interval # 由于valid中记录的是epochloss,需要对记录点进行转换到iterations

valid_y = valid_curve

plt.plot(train_x, train_y, label='Train')

plt.plot(valid_x, valid_y, label='Valid')

plt.legend(loc='upper right')

plt.ylabel('loss value')

plt.xlabel('Iteration')

plt.show()

# ============================ inference ============================

BASE_DIR = os.path.dirname(os.path.abspath(__file__))

test_dir = os.path.join(BASE_DIR, "test_data")

test_data = RMBDataset(data_dir=test_dir, transform=valid_transform)

valid_loader = DataLoader(dataset=test_data, batch_size=1)

for i, data in enumerate(valid_loader):

# forward

inputs, labels = data

outputs = net(inputs)

_, predicted = torch.max(outputs.data, 1)

rmb = 1 if predicted.numpy()[0] == 0 else 100

print("模型获得{}元".format(rmb))

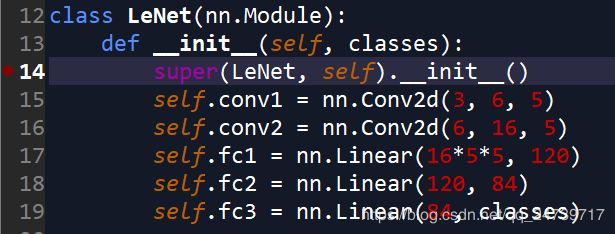

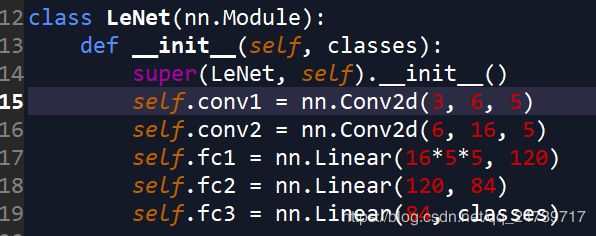

搭建模型并初始化:

net = LeNet(classes=2)

net.initialize_weights()

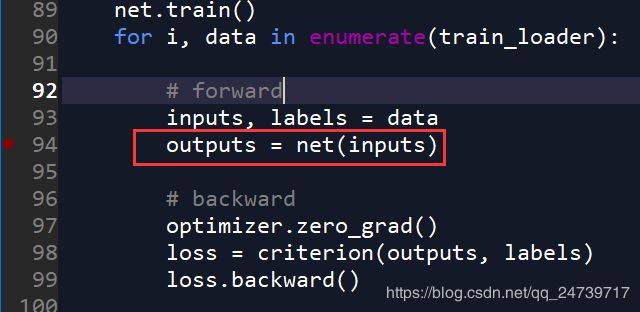

在下处设置断点:

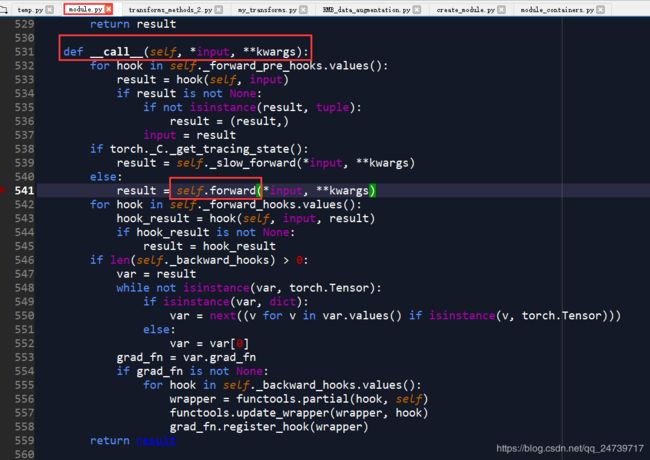

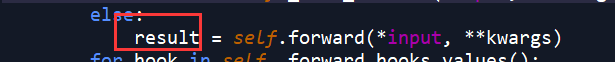

进入该语句(step into),进入 module.py 中的 call 函数。

着重关注其 self.forward 函数,在该处设置断点,并进入该语句(step into)。

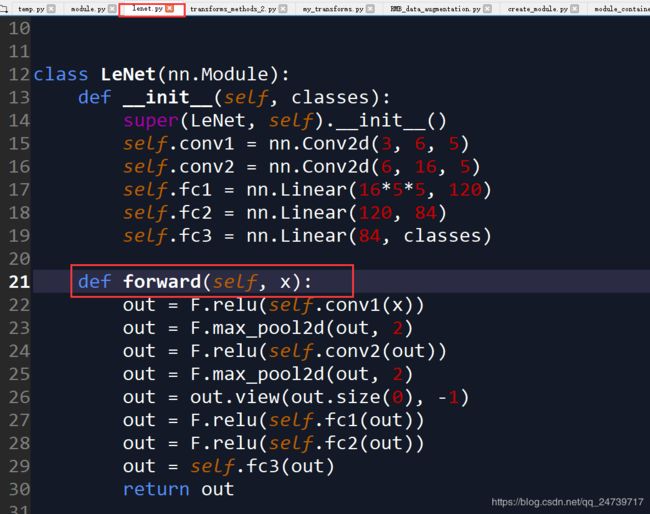

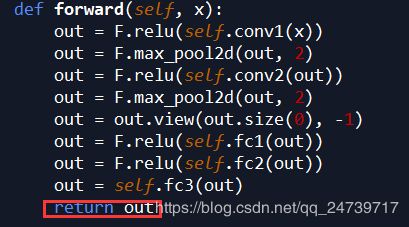

发现跳转到自己定义的网络结构LeNet中(在lenet.py中)。在forward中具体实现了网络前向传播。

在前向传播中一步步计算,最后返回结果out 到 result中。

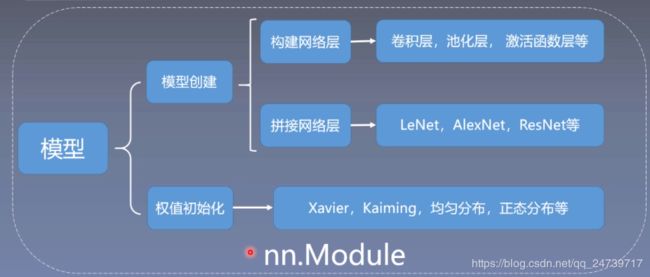

二.nn.Module

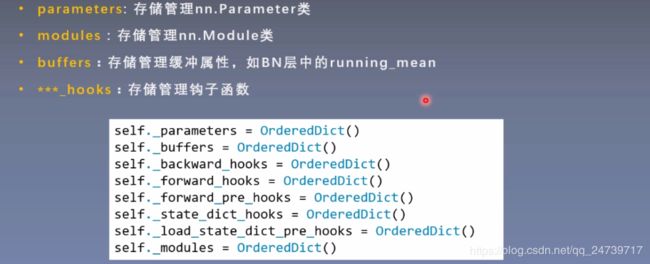

现在了解nn.Modules的创建。

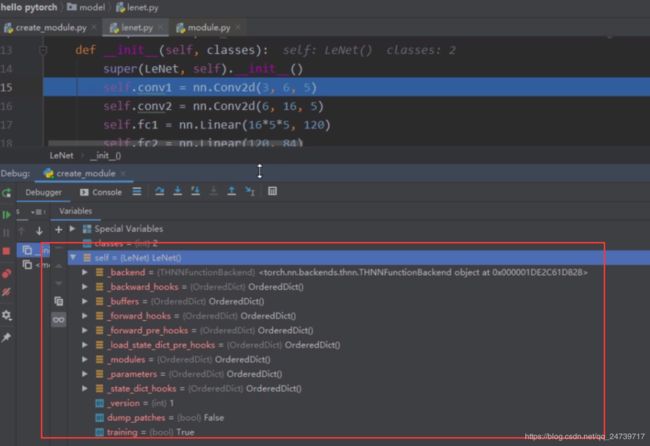

先在该处设置断点:

然后进入该语句(step into)。

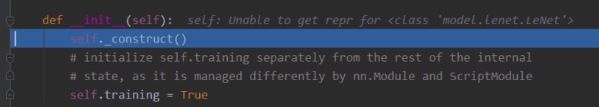

super() 函数是用于调用父类(超类)的一个方法。用法:super(type[, object-or-type])。进入该语句:

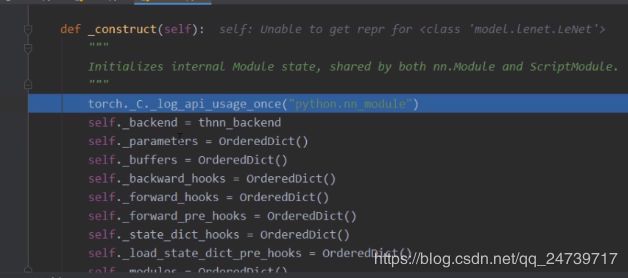

进入self._construct() 中。

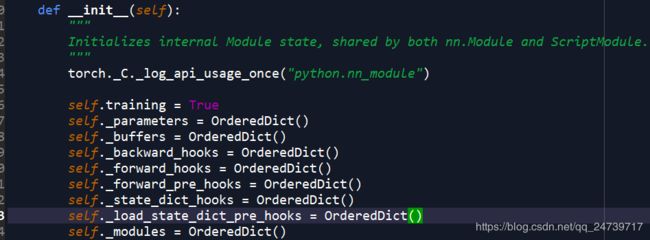

在其中包含了刚刚上述所提到的管理钩子函数。注意:在pytorch 1.3版本中,self._construct()被移除了,其内容直接写在了self.__init__中:

现在跳出该语句到super的下一条语句中:

在self中变有了8个有序字典(spyder没有看到):

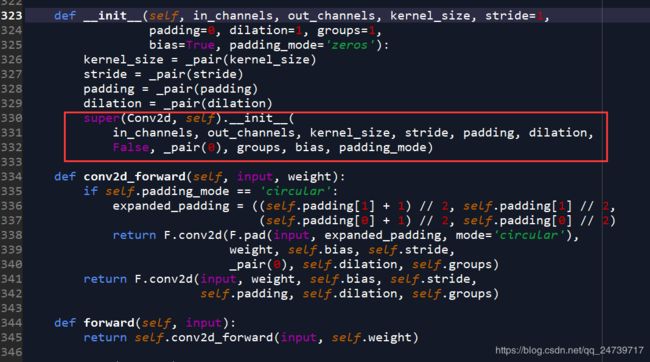

进入 self.Conv2d 中:

进入 super(Conv2d, self).init ,并继续调试可以到:

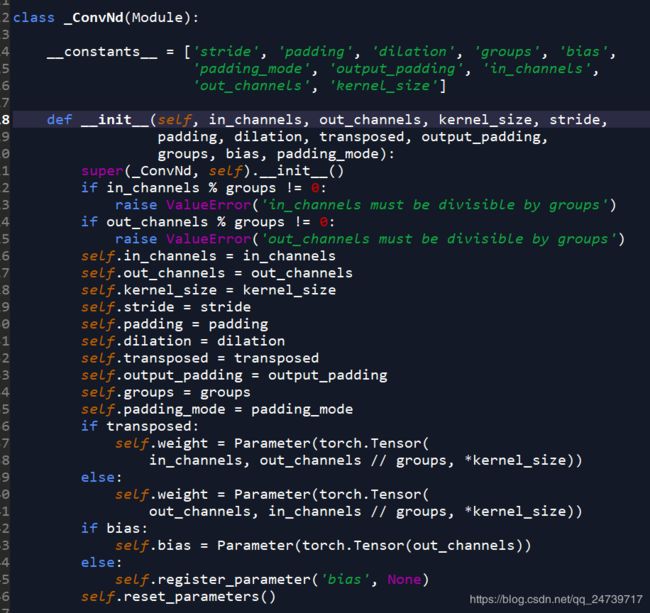

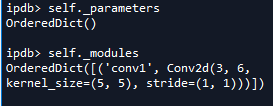

可以发现 _ConvNd 也是一个 module。一步步交出到 class LeNet 中。查看参数:

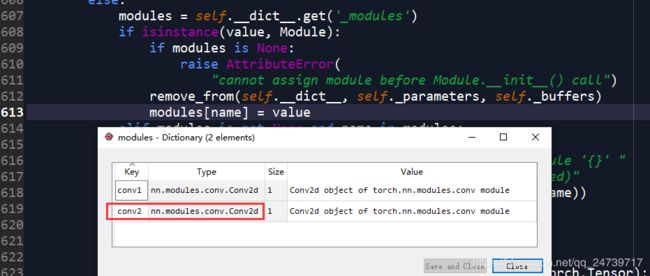

在构建下一层时,self.conv2 = nn.Conv2d(6, 16, 5),先step into,然后再run current line 会到一个 setattr 函数中:

这个函数会拦截所有类属性的赋值,这个函数会对 value 属性进行判断。比如判断 : isinstance(value, Parameter),是否是Parameter属性,如果是则放入相关字典中。nn.Conv2d 是一个 Module 类型的,所有会执行到该处,并把value存储到modules中:

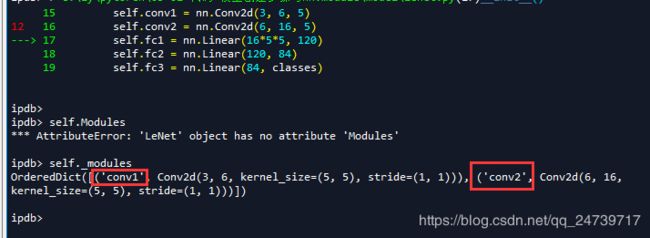

现在跳出到LeNet中, 查看 self._modules 属性:

继续跳出,查看属性:

- 三.作业

采用sequential容器,改写Alexnet,给features中每一个网络层增加名字,并通过下面这行代码打印出来

print(alexnet._modules[‘features’]._modules.keys())

测试代码:

import torchvision

import torch.nn as nn

import torch

from collections import OrderedDict

alexnet_ori = torchvision.models.AlexNet()

print(alexnet_ori._modules['features']._modules.keys())

class AlexNet(nn.Module):

def __init__(self, num_classes=1000):

super(AlexNet, self).__init__()

self.features = nn.Sequential(OrderedDict({

'conv1': nn.Conv2d(3, 64, kernel_size=11, stride=4, padding=2),

'relu1': nn.ReLU(inplace=True),

'maxpool1': nn.MaxPool2d(kernel_size=3, stride=2),

'conv2': nn.Conv2d(64, 192, kernel_size=5, padding=2),

'relu2': nn.ReLU(inplace=True),

'maxpool2': nn.MaxPool2d(kernel_size=3, stride=2),

'conv3': nn.Conv2d(192, 384, kernel_size=3, padding=1),

'relu3': nn.ReLU(inplace=True),

'conv4': nn.Conv2d(384, 256, kernel_size=3, padding=1),

'relu4': nn.ReLU(inplace=True),

'conv5': nn.Conv2d(256, 256, kernel_size=3, padding=1),

'relu5': nn.ReLU(inplace=True),

'maxpool5':nn.MaxPool2d(kernel_size=3, stride=2),

}))

self.avgpool = nn.AdaptiveAvgPool2d((6, 6))

self.classifier = nn.Sequential(OrderedDict({

'dropout1': nn.Dropout(),

'linear1': nn.Linear(256 * 6 * 6, 4096),

'relu1': nn.ReLU(inplace=True),

'dropout2': nn.Dropout(),

'linear2': nn.Linear(4096, 4096),

'relu2': nn.ReLU(inplace=True),

'linear3': nn.Linear(4096, num_classes),

}))

def forward(self, x):

x = self.features(x)

x = self.avgpool(x)

x = torch.flatten(x, 1)

x = self.classifier(x)

return x

alexnet = AlexNet(num_classes=2)

print(alexnet._modules['features']._modules.keys())

输出:

odict_keys(['0', '1', '2', '3', '4', '5', '6', '7', '8', '9', '10', '11', '12'])

odict_keys(['conv1', 'relu1', 'maxpool1', 'conv2', 'relu2', 'maxpool2', 'conv3', 'relu3', 'conv4', 'relu4', 'conv5', 'relu5', 'maxpool5'])