GloVe模型

目录

- 1、词向量学习算法有两个主要的模型族:

- 2、GloVe模型原理

- 3、GloVe模型共现矩阵生成——代码简单实现

- 4、GloVe模型简单实现

1、词向量学习算法有两个主要的模型族:

- 基于全局矩阵分解的方法,如LSA:

优点:能够有效的利用全局的统计信息;

缺点:在单词类比任务中(如国王VS王后类比于男人VS女人)中表现相对较差; - 基于局部上下文窗口的方法,如word2vec:

优点:在单词类比任务中表现较好;

缺点:因为word2vec在独立的局部上下文窗口上训练,因此难以利用单词的全局统计信息;

GloVe结合了LSA算法和word2vec算法的优点,即考虑了全局统计信息又利用了局部上下文。

2、GloVe模型原理

用 P ( w j ∣ w i ) P(w_j|w_i) P(wj∣wi)表示数据集中以 w i w_i wi为中心词生成背景词 w j w_j wj的条件概率,并记作 P i j P_{ij} Pij,作为源于某大型语料库的真实例子,下表中列举了两组分别以“ice”(冰)和“steam”(蒸汽)作为中心词的条件概率以及它们之间的比值。

| w k w_k wk | p 1 = P ( w k ∣ " i c e " ) p_1=P(w_k \mid "ice") p1=P(wk∣"ice") | p 2 = P ( w k ∣ " s t e a m " ) p_2=P(w_k\mid"steam") p2=P(wk∣"steam") | p 1 / p 2 p_1/p_2 p1/p2 |

|---|---|---|---|

| “solid”(固体) | 0.00019 | 0.000022 | 8.9 |

| “gas” (气体) | 0.000066 | 0.00078 | 0.085 |

| “water”(水) | 0.003 | 0.0022 | 1.36 |

| “fashion”(时尚) | 0.000017 | 0.000018 | 0.96 |

我们可以观察到以下现象:

- 对于与“ice”相关而与“steam”不相关的词 w k w_k wk,比如 w k w_k wk=“solid”(固体),我们期望条件概率比值较大,如上表中的值8.9;

- 对于与“ice”不相关而与“steam”相关的词 w k w_k wk,比如 w k w_k wk=“gas”(气体),我们期望条件概率比值较小,如上表中的值0.085;

- 对于与“ice”和“steam”都相关的词 w k w_k wk,如 w k w_k wk=“water”(水),我们期望条件概率比值接近1,如上表中的值1.36;

- 对于与“ice”和“steam”都不相关的词 w k w_k wk,比如 w k w_k wk=“fashion”(时尚),我们期望条件概率比值接近1,如上表中的值0.96;

由此可见,条件概率比值能比较直观地表达词与词之间的关系,我们可以构建一个词向量函数使他能够有效拟合条件概率比值。我们知道,任意一个这样的比值需要3个词 w i w_i wi、 w j w_j wj和 w k w_k wk。以 w i w_i wi和 w j w_j wj为中心词的条件概率比值为 p i , k / p j , k p_{i,k}/p_{j,k} pi,k/pj,k。我们可以找到一个向量函数,它使用词向量来拟合这个条件概率比值:

F ( w → i , w → j , w → k ) ≈ Rati o i , j k = P i , k P j , k F\left(\overrightarrow{\mathbf{w}}_{i}, \overrightarrow{\mathbf{w}}_{j}, \overrightarrow{\mathbf{w}}_{k}\right)\approx\operatorname{Rati} o_{i, j}^{k}=\frac{P_{i, k}}{P_{j, k}} F(wi,wj,wk)≈Ratioi,jk=Pj,kPi,k

这里函数f可能的设计并不唯一,我们只需要考虑一种较为合理的可能性。注意到条件概率比值是一个标量,同时f函数是用于评估中心词 w i w_i wi和 w j w_j wj的差异,因此我们可以将f函数限制为一个向量内积的标量函数:

F ( w → i − w → j , w → k ) = F ( ( w → i − w → j ) T w → k ) = F ( w → i T w → k − w → j T w → k ) = P i , k P j , k F\left(\overrightarrow{\mathbf{w}}_{i}-\overrightarrow{\mathbf{w}}_{j}, \overrightarrow{\mathbf{w}}_{k}\right)=F\left(\left(\overrightarrow{\mathbf{w}}_{i}-\overrightarrow{\mathbf{w}}_{j}\right)^{T} \overrightarrow{\mathbf{w}}_{k}\right)=F\left(\overrightarrow{\mathbf{w}}_{i}^{T} \overrightarrow{\mathbf{w}}_{k}-\overrightarrow{\mathbf{w}}_{j}^{T} \overrightarrow{\mathbf{w}}_{k}\right)=\frac{P_{i, k}}{P_{j, k}} F(wi−wj,wk)=F((wi−wj)Twk)=F(wiTwk−wjTwk)=Pj,kPi,k

上式左边为差的形式,右边为商的形式,因此联想到指数函数exp(.)。即F(.)函数的形式为:

e x p ( w → i T w → k − w → j T w → k ) = P i k P j k exp(\overrightarrow{\mathbf{w}}_{i}^{T} \overrightarrow{\mathbf{w}}_{k}-\overrightarrow{\mathbf{w}}_{j}^{T} \overrightarrow{\mathbf{w}}_{k})=\frac{P_{ik}}{P_{jk}} exp(wiTwk−wjTwk)=PjkPik

上式可以转化为:

w → i T w → k − w → j T w → k = log P i , k − log P j , k \overrightarrow{\mathbf{w}}_{i}^{T} \overrightarrow{\mathbf{w}}_{k}-\overrightarrow{\mathbf{w}}_{j}^{T} \overrightarrow{\mathbf{w}}_{k}=\log P_{i, k}-\log P_{j, k} wiTwk−wjTwk=logPi,k−logPj,k

要使上式成立,只需要令 w → i T w → k = log P i , k \overrightarrow{\mathbf{w}}_{i}^{T} \overrightarrow{\mathbf{w}}_{k}=\log P_{i, k} wiTwk=logPi,k, w → j T w → k = log P j , k \overrightarrow{\mathbf{w}}_{j}^{T} \overrightarrow{\mathbf{w}}_{k}=\log P_{j, k} wjTwk=logPj,k即可。

向量的内积具有对称性,即 w → i T w → k = w → k T w → i \overrightarrow{\mathbf{w}}_{i}^{T} \overrightarrow{\mathbf{w}}_{k}=\overrightarrow{\mathbf{w}}_{k}^{T} \overrightarrow{\mathbf{w}}_{i} wiTwk=wkTwi,考虑到 P i , k = x i , k x i P_{i,k}=\frac{x_{i,k}}{x_i} Pi,k=xixi,k,而 log X i , k X i ≠ log X k , i X k \log \frac{X_{i, k}}{X_{i}} \neq \log \frac{X_{k, i}}{X_{k}} logXiXi,k=logXkXk,i,即 log P i , k ≠ log P k , i \log P_{i, k} \neq \log P_{k, i} logPi,k=logPk,i,为了解决这个问题,在公式 log X i , k X i ≠ log X k , i X k \log \frac{X_{i, k}}{X_{i}} \neq \log \frac{X_{k, i}}{X_{k}} logXiXi,k=logXkXk,i左右两边分别减去 l o g X k logX_k logXk和 l o g X i logX_i logXi,这样就可以得到左右相等的公式:

log X i , k X i − l o g X k = log X k , i X k − l o g X i \log \frac{X_{i, k}}{X_{i}} - logX_k= \log \frac{X_{k, i}}{X_{k}}-logX_i logXiXi,k−logXk=logXkXk,i−logXi

即

l o g X i , k − l o g X i − l o g X k = l o g X k , i − l o g X k − l o g X i log X_{i,k}-logX_i-logX_k=logX_{k,i}-logX_k-logX_i logXi,k−logXi−logXk=logXk,i−logXk−logXi

令 w → i T w → k = l o g X i , k − l o g X i − l o g X k \overrightarrow{\mathbf{w}}_{i}^{T} \overrightarrow{\mathbf{w}}_{k}=log X_{i,k}-logX_i-logX_k wiTwk=logXi,k−logXi−logXk,则可以满足以上提及的对称性。

同时,对于 w → i T w → k \overrightarrow{\mathbf{w}}_{i}^{T} \overrightarrow{\mathbf{w}}_{k} wiTwk和 w → j T w → k \overrightarrow{\mathbf{w}}_{j}^{T} \overrightarrow{\mathbf{w}}_{k} wjTwk,需要满足条件:

w → i T w → k − w → j T w → k = log P i , k − log P j , k \overrightarrow{\mathbf{w}}_{i}^{T} \overrightarrow{\mathbf{w}}_{k}-\overrightarrow{\mathbf{w}}_{j}^{T} \overrightarrow{\mathbf{w}}_{k}=\log P_{i, k}-\log P_{j, k} wiTwk−wjTwk=logPi,k−logPj,k

分别将 w → i T w → k = l o g X i , k − l o g X i − l o g X k \overrightarrow{\mathbf{w}}_{i}^{T} \overrightarrow{\mathbf{w}}_{k}=log X_{i,k}-logX_i-logX_k wiTwk=logXi,k−logXi−logXk和 w → j T w → k = l o g X j , k − l o g X j − l o g X k \overrightarrow{\mathbf{w}}_{j}^{T} \overrightarrow{\mathbf{w}}_{k}=log X_{j,k}-logX_j-logX_k wjTwk=logXj,k−logXj−logXk代入上述公式左端即可推导出右边的结果。

我们令 b i = l o g X i b_i=log X_i bi=logXi,令 b k = l o g X k b_k=logX_k bk=logXk,则可以得到论文中的公式,即:

log X i , k = w → i T w → k + b i + b ~ k \log X_{i, k}=\overrightarrow{\mathbf{w}}_{i}^{T} \overrightarrow{\mathbf{w}}_{k}+b_{i}+\tilde{b}_{k} logXi,k=wiTwk+bi+b~k

但是上面的公式仅仅是理想状态,实际上只能要求左右两边尽可能相等,于是设计代价函数为:

J = ∑ i , k ( w → i T w → k + b i + b ~ k − log X i , k ) 2 J=\sum_{i, k}\left(\overrightarrow{\mathbf{w}}_{i}^{T} \overrightarrow{\mathbf{w}}_{k}+b_{i}+\tilde{b}_{k}-\log X_{i, k}\right)^{2} J=i,k∑(wiTwk+bi+b~k−logXi,k)2

公式中的 w → , b , b ~ \overrightarrow{\mathbf{w}}, b, \tilde{b} w,b,b~均为模型参数,需要通过训练得到。

根据经验,如果两个词共现的次数越多,则这两个词在代价函数中的影响就越大,因此可以设计一个权重函数 f ( ⋅ ) f(\cdot) f(⋅)来对代价函数中的每一项进行加权,权重为共现次数的函数:

J = ∑ i , k f ( X i , k ) ( w → i T w → k + b i + b ~ k − log X i , k ) 2 J=\sum_{i, k} f\left(X_{i, k}\right)\left(\overrightarrow{\mathbf{w}}_{i}^{T} \overrightarrow{\mathbf{w}}_{k}+b_{i}+\tilde{b}_{k}-\log X_{i, k}\right)^{2} J=i,k∑f(Xi,k)(wiTwk+bi+b~k−logXi,k)2

公式中的权重函数应该符合三个条件:

- f ( 0 ) = 0 f(0)=0 f(0)=0,即如果两个词没有共现过,则权重为0,这是为了确保 lim x → 0 f ( x ) log 2 x \lim _{x \rightarrow 0} f(x) \log ^{2} x limx→0f(x)log2x是有限值;

- f ( ⋅ ) f(\cdot) f(⋅)是非递减的,即两个词共现次数越大,则权重越大;

- f ( ⋅ ) f(\cdot) f(⋅)对于较大的 X i , k X_{i,k} Xi,k不能取太大的值,即有些单词共现次数非常大(如单词“的”与其它词的组合),但是他们的重要性并不是很大;

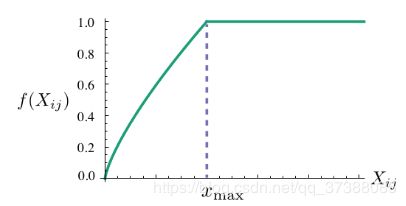

因此论文中根据以上三个条件设计了权重函数 f ( ⋅ ) f(\cdot) f(⋅)的形式为:

f ( x ) = { ( x x max ) α if x < x max 1 , otherwise f(x)=\left\{\begin{array}{ll} \left(\frac{x}{x_{\max }}\right)^{\alpha} & \text { if } x

GloVe论文中给出函数 f ( ⋅ ) f(\cdot) f(⋅)的参数 α \alpha α和 x m a x x_{max} xmax的经验值为:

α = 3 4 , x max = 100 \alpha=\frac{3}{4}, x_{\max }=100 α=43,xmax=100

3、GloVe模型共现矩阵生成——代码简单实现

from torch.utils import data

import os

import numpy as np

import pickle # 用于保存数据

min_count = 50 # 用于去除低频词

# 数据集加载

data = open("./data/text8.txt").read()

data = data.split()

# 构建word2id并去除低频词

word2freq = {} # 保存每个词的词频的字典

for word in data:

if word2freq.get(word)!=None:

word2freq[word] += 1

else:

word2freq[word] = 1

word2id = {} # key是每个词,value是每个词对应的id

for word in word2freq:

if word2freq[word]<min_count: # 如果该词的词频小于min_count则抛弃

continue

else:

if word2id.get(word)==None:

word2id[word]=len(word2id)

# 构建共现矩阵

vocab_size = len(word2id) # 统计词表的词的个数

comat = np.zeros((vocab_size,vocab_size)) # 词共现矩阵,矩阵大小为[词个数,词个数],初始全为零

print(comat.shape)

window_size = 2 # 中心词的窗口

# 构建共现矩阵

for i in range(len(data)):

if i%1000000==0:

print (i,len(data))

if word2id.get(data[i])==None: # 如果该词不在word2id字典中,则表示是低频词,忽略

continue

w_index = word2id[data[i]] # 获得该词对应的id

for j in range(max(0,i-window_size),min(len(data),i+window_size+1)):

if word2id.get(data[j]) == None or i==j: # 如果是低频词或者是词自身

continue

u_index = word2id[data[j]]

comat[w_index][u_index]+=1

# np.nonzero()的作用是将非零的位置找出来

# np.transpose()的作用是转换为矩阵

coocs = np.transpose(np.nonzero(comat))

# 生成训练集和Label

labels = []

for i in range(len(coocs)):

if i%1000000==0:

print (i,len(coocs))

labels.append(comat[coocs[i][0]][coocs[i][1]])

labels = np.array(labels)

# 保存结果

np.save("./data/data.npy",coocs)

np.save("./data/label.npy",labels)

pickle.dump(word2id,open("./data/word2id","wb"))

4、GloVe模型简单实现

import torch

import torch.nn as nn

class glove_model(nn.Module):

def __init__(self,vocab_size,embed_size,x_max,alpha):

# vocab_size是词表大小

# embed_size是词嵌入的维度

# x_max和alpha是损失函数中f(.)的参数

super(glove_model, self).__init__()

self.vocab_size = vocab_size

self.embed_size = embed_size

self.x_max = x_max

self.alpha = alpha

self.w_embed = nn.Embedding(self.vocab_size,self.embed_size).type(torch.float64) # 中心词向量

self.w_bias = nn.Embedding(self.vocab_size,1).type(torch.float64) # 中心词bias

self.v_embed = nn.Embedding(self.vocab_size, self.embed_size).type(torch.float64) # 周围词向量

self.v_bias = nn.Embedding(self.vocab_size, 1).type(torch.float64) # 周围词bias

def forward(self, w_data,v_data,labels):

w_data_embed = self.w_embed(w_data) # bs*embed_size

w_data_bias = self.w_bias(w_data) # bs*1

v_data_embed = self.v_embed(v_data)

v_data_bias = self.v_bias(v_data)

weights = torch.pow(labels/self.x_max,self.alpha) # 权重生成

weights[weights>1]=1

loss = torch.mean(weights*torch.pow(torch.sum(w_data_embed*v_data_embed,1)+w_data_bias+v_data_bias-

torch.log(labels),2)) # 计算loss

return loss