Email Classification with the ALBERT Model

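I recently had to classify emails, so I took the chance to try the newly released ALBERT model and write down what I did. The code comes from the two projects below; I only made some modifications.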

https://github.com/brightmart/albert_zh

https://github.com/bojone/bert4keras

Environment

Install keras

pip install keras

Note that the keras version must be >= 2.3.1. If keras is already installed, upgrade it:

pip install --upgrade keras==2.3.1
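To confirm the version requirement before training, a small check along these lines may help. This is a minimal sketch: `version_tuple` and `meets_minimum` are hypothetical helper names, not part of keras or bert4keras.

```python
import re


def version_tuple(version):
    """Turn a version string such as '2.3.1' into a tuple of ints, (2, 3, 1)."""
    parts = []
    for piece in version.split('.'):
        match = re.match(r'\d+', piece)
        if match is None:
            break  # stop at the first non-numeric component
        parts.append(int(match.group()))
    return tuple(parts)


def meets_minimum(installed, minimum='2.3.1'):
    """Return True if the installed version is at least the minimum version."""
    return version_tuple(installed) >= version_tuple(minimum)
```

With keras installed, `meets_minimum(keras.__version__)` should return `True` after `import keras`.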

Install bert4keras

pip install bert4keras

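Preparing the training data

1. The data format is one sample per line, with the email body and the label separated by a tab:

email body	label

(There are seven label classes: 0 1 2 3 4 5 6.)

2. Split the data into training, test, and validation sets. Because there is little business email data and the classes are imbalanced, I only made a training set and a validation set; adjust this to your own situation.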

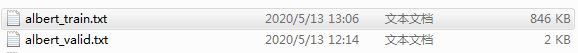

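3. File structure (inferred from the paths used in the training script):

./data/albert_train.txt (training set)
./data/albert_valid.txt (validation set)
./model/albert_base/albert_config.json and ./model/albert_base/albert_model.ckpt (pretrained ALBERT)
./model/vocab.txt (vocabulary)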
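For steps 1 and 2, one way to get a train/validation split that keeps every class represented despite the imbalance is a per-label (stratified) split. The sketch below is an assumption about how this could be done, not the procedure used for this post; `stratified_split`, `to_line`, and the 20% validation ratio are hypothetical.

```python
import random
from collections import defaultdict


def stratified_split(samples, valid_ratio=0.2, seed=42):
    """Split (text, label) pairs label by label so rare classes stay in training."""
    by_label = defaultdict(list)
    for text, label in samples:
        by_label[label].append((text, label))
    rng = random.Random(seed)
    train, valid = [], []
    for label in sorted(by_label):
        items = by_label[label]
        rng.shuffle(items)
        if len(items) > 1:
            # Take roughly valid_ratio for validation, but leave at least one for training.
            n_valid = min(len(items) - 1, max(1, int(round(len(items) * valid_ratio))))
        else:
            n_valid = 0  # a single sample goes to training only
        valid.extend(items[:n_valid])
        train.extend(items[n_valid:])
    return train, valid


def to_line(text, label):
    """Format one sample as 'text<TAB>label', collapsing tabs and newlines in the text."""
    clean = ' '.join(text.split())
    return '%s\t%d' % (clean, label)
```

Collapsing whitespace in `to_line` matters because the loader splits each line on the tab character, so a stray tab inside an email body would break the two-field format.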

Training the model

#! -*- coding:utf-8 -*-
# @Time: 2020/5/15 14:26
# @Author: huangcy
# @Email: [email protected]
# @File: albert.py

from bert4keras.backend import keras, set_gelu
from bert4keras.tokenizers import Tokenizer
from bert4keras.models import build_transformer_model
from bert4keras.optimizers import Adam, extend_with_piecewise_linear_lr
from bert4keras.snippets import sequence_padding, DataGenerator
from bert4keras.snippets import open
from keras.layers import Lambda, Dense

set_gelu('tanh')  # switch the gelu version

num_classes = 7
maxlen = 128
batch_size = 32

config_path = './model/albert_base/albert_config.json'
checkpoint_path = './model/albert_base/albert_model.ckpt'
dict_path = './model/vocab.txt'

def load_data(filename):
    """Read tab-separated 'text<TAB>label' lines into a list of (text, label)."""
    D = []
    with open(filename, encoding='utf-8') as f:
        for l in f:
            text, label = l.strip().split('\t')
            D.append((text, int(label)))
    return D

# load the datasets
train_data = load_data('./data/albert_train.txt')
valid_data = load_data('./data/albert_valid.txt')
# test_data = load_data('./data/albert_train.txt')

# build the tokenizer
tokenizer = Tokenizer(dict_path, do_lower_case=True)

class data_generator(DataGenerator):
    """Data generator: yields padded batches of token ids, segment ids, and labels.
    """
    def __iter__(self, random=False):
        batch_token_ids, batch_segment_ids, batch_labels = [], [], []
        for is_end, (text, label) in self.sample(random):
            # max_length was renamed to maxlen in later bert4keras releases
            token_ids, segment_ids = tokenizer.encode(text, max_length=maxlen)
            batch_token_ids.append(token_ids)
            batch_segment_ids.append(segment_ids)
            batch_labels.append([label])
            if len(batch_token_ids) == self.batch_size or is_end:
                batch_token_ids = sequence_padding(batch_token_ids)
                batch_segment_ids = sequence_padding(batch_segment_ids)
                batch_labels = sequence_padding(batch_labels)
                yield [batch_token_ids, batch_segment_ids], batch_labels
                batch_token_ids, batch_segment_ids, batch_labels = [], [], []

# load the pretrained model
bert = build_transformer_model(
    config_path=config_path,
    checkpoint_path=checkpoint_path,
    model='albert',
    return_keras_model=False,
)

# take the vector at the [CLS] position and classify it with a softmax layer
output = Lambda(lambda x: x[:, 0], name='CLS-token')(bert.model.output)
output = Dense(
    units=num_classes,
    activation='softmax',
    kernel_initializer=bert.initializer
)(output)

model = keras.models.Model(bert.model.input, output)
model.summary()

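# Derive an optimizer with a piecewise linear learning rate.
# The name argument is optional, but it is best to set it so that
# different derived optimizers can be told apart.
AdamLR = extend_with_piecewise_linear_lr(Adam, name='AdamLR')

model.compile(
    loss='sparse_categorical_crossentropy',
    # optimizer=Adam(1e-5),  # use a sufficiently small learning rate
    optimizer=AdamLR(learning_rate=1e-4, lr_schedule={
        1000: 1,
        2000: 0.1
    }),
    metrics=['accuracy'],
)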

# wrap the datasets in generators
train_generator = data_generator(train_data, batch_size)
valid_generator = data_generator(valid_data, batch_size)
# test_generator = data_generator(test_data, batch_size)

def evaluate(data):
    """Return the accuracy of the model over all batches in data."""
    total, right = 0., 0.
    for x_true, y_true in data:
        y_pred = model.predict(x_true).argmax(axis=1)
        y_true = y_true[:, 0]
        total += len(y_true)
        right += (y_true == y_pred).sum()
    return right / total

class Evaluator(keras.callbacks.Callback):
    """Evaluate on the validation set after each epoch and keep the best weights."""
    def __init__(self):
        self.best_val_acc = 0.

    def on_epoch_end(self, epoch, logs=None):
        val_acc = evaluate(valid_generator)
        if val_acc > self.best_val_acc:
            self.best_val_acc = val_acc
            model.save_weights('./model/albert_email.weights')
        # test_acc = evaluate(test_generator)
        print(
            u'val_acc: %.5f, best_val_acc: %.5f\n' %
            (val_acc, self.best_val_acc)
        )

evaluator = Evaluator()

model.fit_generator(
    train_generator.forfit(),
    steps_per_epoch=len(train_generator),
    epochs=10,
    callbacks=[evaluator]
)

# reload the best weights before saving the full model
model.load_weights('./model/albert_email.weights')
model.save('./model/albert_email.h5')  # save the model
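The `lr_schedule={1000: 1, 2000: 0.1}` argument in the training script is easier to reason about with numbers. The sketch below re-implements the interpolation in plain Python under my understanding of bert4keras's piecewise linear schedule: the multiplier starts at 0 on step 0 (unless the schedule defines step 0), moves linearly between the listed points, and stays at the last value afterwards. `lr_multiplier` is a hypothetical name, not a bert4keras function.

```python
def lr_multiplier(step, schedule):
    """Piecewise linear interpolation through the (step, multiplier) points."""
    points = sorted(schedule.items())
    if points[0][0] != 0:
        points = [(0, 0.0)] + points  # assumed implicit start: multiplier 0 at step 0
    prev_step, prev_value = points[0]
    for next_step, next_value in points[1:]:
        if step <= next_step:
            fraction = (step - prev_step) / float(next_step - prev_step)
            return prev_value + (next_value - prev_value) * fraction
        prev_step, prev_value = next_step, next_value
    return float(prev_value)  # past the last point the multiplier stays constant


schedule = {1000: 1, 2000: 0.1}
base_lr = 1e-4
for step in (0, 500, 1000, 1500, 3000):
    print(step, base_lr * lr_multiplier(step, schedule))
```

Under this reading, the first 1000 steps are a warmup. If the training set yields fewer than 100 batches per epoch, ten epochs finish before step 1000, so the learning rate would never reach the full 1e-4; scaling the schedule keys to the actual number of steps may be worth trying.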

Loading the model to predict the email class

predicts.py produces the predictions:

#! -*- coding:utf-8 -*-
# @Time: 2020/5/15 14:26
# @Author: huangcy
# @Email: [email protected]
# @File: predicts.py

import numpy as np
from bert4keras.snippets import sequence_padding, DataGenerator
from bert4keras.tokenizers import Tokenizer
from keras.models import load_model
from bert4keras.optimizers import Adam, extend_with_piecewise_linear_lr

maxlen = 128
dict_path = 'albert/model/vocab.txt'

tokenizer = Tokenizer(dict_path, do_lower_case=True)

class data_generator(DataGenerator):
    """Data generator: yields padded batches of token ids, segment ids, and labels.
    """
    def __iter__(self, random=False):
        batch_token_ids, batch_segment_ids, batch_labels = [], [], []
        for is_end, (text, label) in self.sample(random):
            # max_length was renamed to maxlen in later bert4keras releases
            token_ids, segment_ids = tokenizer.encode(text, max_length=maxlen)
            batch_token_ids.append(token_ids)
            batch_segment_ids.append(segment_ids)
            batch_labels.append([label])
            if len(batch_token_ids) == self.batch_size or is_end:
                batch_token_ids = sequence_padding(batch_token_ids)
                batch_segment_ids = sequence_padding(batch_segment_ids)
                batch_labels = sequence_padding(batch_labels)
                yield [batch_token_ids, batch_segment_ids], batch_labels
                batch_token_ids, batch_segment_ids, batch_labels = [], [], []

class Albert(object):
    def __init__(self):
        # The saved model was compiled with AdamLR, so derive it again before loading.
        AdamLR = extend_with_piecewise_linear_lr(Adam, name='AdamLR')
        self.model = load_model('albert/model/albert_email.h5')

    def predict(self, email):
        # The label 1 is a placeholder; only the inputs (index 0) of the batch are used.
        test_sentence = next(iter(data_generator([(email, 1)])))[0]
        predictions = self.model.predict(test_sentence)[0, :]  # class probabilities for the email
        prob = predictions.max()  # highest class probability (computed but not returned)
        return predictions

if __name__ == '__main__':
    # model = build_transformer_model(config_path, checkpoint_path, model='albert')  # build the model and load weights
    # model.load_weights('./model/albert_email.weights')
    email = "疫情防控站好岗,不忘初心勇担当——运营管鼓励员工低碳出行培养节能环保意识公司工会向有车一族。"
    albert = Albert()
    ret = albert.predict(email)
    print("ret:", ret)
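The script prints the full probability array. To turn that array into a single class label (0 to 6) plus its probability, something like the following could be applied to the returned value. `top_class` is a hypothetical helper, and the probabilities in the usage line are made up for illustration only.

```python
def top_class(probabilities):
    """Return (label, probability) for the most likely class."""
    label = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return label, float(probabilities[label])


# Illustrative values only, not real model output.
label, prob = top_class([0.05, 0.70, 0.05, 0.05, 0.05, 0.05, 0.05])
print("label:", label, "prob:", prob)
```

The helper only uses `len` and indexing, so it should work on the NumPy array returned by `predict` as well as on a plain list.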

Training results

Because there is so little training data, accuracy on the training set reached 100%...
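With so little data and imbalanced classes, a single accuracy number can hide classes that are never predicted correctly. One way to look closer, sketched here as a suggestion rather than something done in this post, is to compute accuracy per class on the validation set. `per_class_accuracy` is a hypothetical helper, and the labels in the usage lines are made up.

```python
from collections import Counter


def per_class_accuracy(y_true, y_pred):
    """Map each true label to the fraction of its samples predicted correctly."""
    total = Counter(y_true)
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {label: correct[label] / float(total[label]) for label in sorted(total)}


# Illustrative labels only: overall accuracy is 5/7, yet class 2 is always missed.
y_true = [0, 0, 0, 0, 1, 1, 2]
y_pred = [0, 0, 0, 0, 0, 1, 0]
print(per_class_accuracy(y_true, y_pred))
```

The true and predicted labels could be collected in the same loop that `evaluate` already runs over the validation generator.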

I am still a beginner and the code is not great, so feedback and suggestions for improvement are welcome.