Predicting annual income level (above or below 50K)

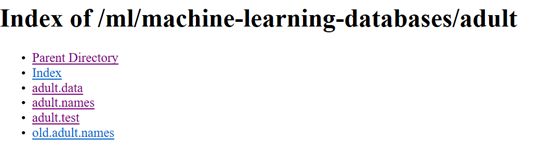

Data source

Data link

Judging from its contents, the data appears to come from the United States.

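Each row describes one US resident and has 15 columns: age, workclass, fnlwgt, education, education_num, marital_status, occupation, relationship, race, sex, capital_gain, capital_loss, hours_per_week, native_country, and the annual income class (wage_class). The goal is to predict the last column from the first 14, so the first 14 columns are used as features and the last one as the label. adult.data serves as the training set, adult.names describes the data (the column names can be taken from it), and adult.test is used as the prediction (test) set.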


Download adult.data, adult.test, and adult.names for later use.

Data preprocessing

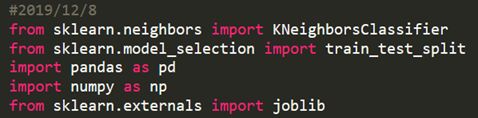

The code is written in Python and uses scikit-learn (sklearn) as the data-mining toolkit. The details are as follows.

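The files are read directly as CSV with pandas to obtain DataFrames. The first line of adult.test is not data, so it is skipped with `skiprows=1`.

Read with pandas' default settings, which treat the first record as a header row, trainSet has 32560 rows and 15 columns, and testSet has 16280 rows and 15 columns after skipping the first line. Neither file actually has a header row, so passing `header=None` keeps all 32561 training and 16281 test records.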

The name of each column can be taken from adult.names.
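A minimal sketch of the reading step, run on two in-memory records instead of the real files (`io.StringIO` stands in for adult.data and adult.test; the record values are illustrative). It shows that the default `read_csv` call loses one record to the header, and how `header=None`, `names=`, `skiprows=1`, and `skipinitialspace=True` keep every record and attach the column names from adult.names. The non-data first line and the trailing period on test labels mirror the usual layout of adult.test; treat them as assumptions if your copy differs.

```python
import io

import pandas as pd

# Column names as listed in adult.names (same list as colLabels in the scripts).
colLabels = ['age', 'workclass', 'fnlwgt', 'education', 'education_num', 'marital_status',
             'occupation', 'relationship', 'race', 'sex', 'capital_gain', 'capital_loss',
             'hours_per_week', 'native_country', 'wage_class']

# Two records in the adult.data format (illustrative values):
# no header row, a comma plus a space between fields.
TRAIN_TEXT = (
    "39, State-gov, 77516, Bachelors, 13, Never-married, Adm-clerical, Not-in-family, "
    "White, Male, 2174, 0, 40, United-States, <=50K\n"
    "50, Self-emp-not-inc, 83311, Bachelors, 13, Married-civ-spouse, Exec-managerial, "
    "Husband, White, Male, 0, 0, 13, United-States, <=50K\n"
)
# Assumed adult.test layout: a non-data first line, labels ending with a period.
TEST_TEXT = "|1x3 Cross validator\n" + TRAIN_TEXT.replace("<=50K", "<=50K.")

# Default read_csv: the first record is consumed as the header row.
default_read = pd.read_csv(io.StringIO(TRAIN_TEXT))

# header=None keeps every record, names= attaches the column names,
# skipinitialspace=True removes the space after each comma.
train = pd.read_csv(io.StringIO(TRAIN_TEXT), header=None, names=colLabels,
                    skipinitialspace=True)
test = pd.read_csv(io.StringIO(TEST_TEXT), header=None, names=colLabels,
                   skiprows=1, skipinitialspace=True)
# Strip the trailing period so the test labels match the training labels.
test['wage_class'] = test['wage_class'].str.rstrip('.')

print(default_read.shape, train.shape, test.shape)
print(test['wage_class'].tolist())
```

Stripping the period matters once labels are turned into numbers: otherwise `<=50K` and `<=50K.` would be treated as two different classes.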

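At this point the data is still raw and needs some processing. A `convert` function assigns the names in `colLabels` to the columns. Inspection also shows that education and education_num carry the same information (education_num is a numeric code for education), so education_num can be dropped. fnlwgt (a census sampling weight, not a social security number as it is sometimes described), capital_loss, and capital_gain are treated as irrelevant and dropped as well, to reduce the amount of work.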

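The claim that education and education_num are duplicates can be checked rather than eyeballed: two columns are redundant in this sense when each value of one maps to exactly one value of the other. A minimal sketch on a hypothetical miniature of the training data (the helper `is_one_to_one` is introduced here for illustration); on the real data you would pass the full training DataFrame instead.

```python
import pandas as pd

# Hypothetical miniature of the training data (illustrative values).
df = pd.DataFrame({
    'education': ['Bachelors', 'HS-grad', 'Bachelors', 'Masters', 'HS-grad'],
    'education_num': [13, 9, 13, 14, 9],
})


def is_one_to_one(frame, a, b):
    """True if every value of column a maps to exactly one value of column b, and vice versa."""
    return bool(frame.groupby(a)[b].nunique().max() == 1
                and frame.groupby(b)[a].nunique().max() == 1)


print(is_one_to_one(df, 'education', 'education_num'))
```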

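Next, because the columns are a mix of int64 and string types, which is awkward for training, every column is converted: strings are replaced by numbers, and discrete numeric columns such as education_num are likewise enumerated and mapped to integer codes. Age is left unchanged. This is done mainly by the `convert` function: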
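One point to watch in this step: if each file is encoded separately by the order of its own unique values, the same string can receive different codes in the training and test sets, and a test value never seen in training has no code at all. A minimal sketch of a consistent encoding, assuming pandas' `pd.Categorical` as the encoder (a swapped-in technique, not the original `np.argwhere` lookup): the category lists are learned from the training data only and reused for the test data, with unseen values mapped to -1. The toy frames and the helper name `encode` are hypothetical.

```python
import pandas as pd

# Toy training and test data (illustrative values).
train = pd.DataFrame({'age': [39, 50, 38], 'workclass': ['State-gov', 'Private', 'Private']})
test = pd.DataFrame({'age': [25, 44], 'workclass': ['Private', 'Without-pay']})

# Learn the category list of every column except age from the training data only.
categories = {col: sorted(train[col].unique()) for col in train.columns[1:]}


def encode(frame, categories):
    """Replace each listed column with integer codes; values not in the list become -1."""
    out = frame.copy()
    for col, cats in categories.items():
        out[col] = pd.Categorical(out[col], categories=cats).codes
    return out


train_enc = encode(train, categories)
test_enc = encode(test, categories)
print(train_enc['workclass'].tolist())  # [1, 0, 0]
print(test_enc['workclass'].tolist())   # [0, -1]
```

Sorting the category lists also means that numeric columns encoded this way keep their original order.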

KNN code (it uses 5 neighbors; replacing `KNeighborsClassifier(5)` with `KNeighborsClassifier(10)` gives the 10-neighbor result):

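```python
import joblib  # sklearn.externals.joblib was removed in scikit-learn 0.23
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

colLabels = ['age', 'workclass', 'fnlwgt', 'education', 'education_num', 'marital_status',
             'occupation', 'relationship', 'race', 'sex', 'capital_gain', 'capital_loss',
             'hours_per_week', 'native_country', 'wage_class']


def convert(data, categories=None):
    """Name the columns and replace every column except age with integer codes.

    When `categories` is None (training set) the code lists are built from `data`;
    pass the returned `categories` when converting the test set so that equal
    values get equal codes in both sets. Values unseen in training become -1.
    """
    data.columns = colLabels
    # Drops are disabled in this KNN version; the SVM version applies them.
    # data.drop('fnlwgt', axis=1, inplace=True)
    # data.drop('education_num', axis=1, inplace=True)  # same with education
    # data.drop('capital_loss', axis=1, inplace=True)
    # data.drop('capital_gain', axis=1, inplace=True)
    fit = categories is None
    if fit:
        categories = {}
    for col in data.columns[1:]:  # skip age (column 0)
        if fit:
            categories[col] = sorted(data[col].unique())
        data[col] = pd.Categorical(data[col], categories=categories[col]).codes
    return data, categories


# read files: neither has a header row; skipinitialspace drops the space after each comma
trainSet = pd.read_csv('adult.data', header=None, skipinitialspace=True)  # (32561, 15)
# the first line of adult.test is not data
testSet = pd.read_csv('adult.test', header=None, skiprows=1, skipinitialspace=True)  # (16281, 15)
# test labels end with a period ('>50K.'); strip it so they match the training labels
testSet[14] = testSet[14].str.rstrip('.')

trainSet, categories = convert(trainSet)
testSet, _ = convert(testSet, categories)
```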

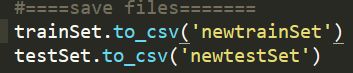

```python
# ====save files=====
trainSet.to_csv('newtrainSet')
testSet.to_csv('newtestSet')

# hold out 1000 rows of the training data for validation
xTrain, xTest, yTrain, yTest = train_test_split(
    trainSet.iloc[:, :-1], trainSet.iloc[:, -1], test_size=1000)

# ====use knn to train=====
# # load a previously saved model instead:
# knn = joblib.load('year_receive5nei.m')
newknn = KNeighborsClassifier(5)
newknn.fit(xTrain, yTrain)
trainScore = newknn.score(xTrain, yTrain)
testScore = newknn.score(xTest, yTest)
print("==========5neighbor-train-accuracy:%f===========" % trainScore)
print("==========5neighbor-test-accuracy:%f===========" % testScore)
print(" ")

print("============now we predict !=============")
# predict on the separate adult.test set and score against its labels
y_ = newknn.predict(testSet.iloc[:, :-1])
predictScore = newknn.score(testSet.iloc[:, :-1], testSet.iloc[:, -1])
print("==========predict-accuracy:%f===========" % predictScore)

# save model
joblib.dump(newknn, 'newKnn5nei.m')
```

Code for training with SVM:

```python
# 2019/12/8
import joblib  # sklearn.externals.joblib was removed in scikit-learn 0.23
import pandas as pd
from sklearn import svm
from sklearn.model_selection import train_test_split

colLabels = ['age', 'workclass', 'fnlwgt', 'education', 'education_num', 'marital_status',
             'occupation', 'relationship', 'race', 'sex', 'capital_gain', 'capital_loss',
             'hours_per_week', 'native_country', 'wage_class']


def convert(data, categories=None):
    """Name the columns, drop the unused ones, and integer-encode every column except age.

    Pass the `categories` returned for the training set when converting the test set
    so that equal values get equal codes; values unseen in training become -1.
    """
    data.columns = colLabels
    # education_num duplicates education; fnlwgt, capital_loss, capital_gain are treated as irrelevant
    data.drop(['fnlwgt', 'education_num', 'capital_loss', 'capital_gain'], axis=1, inplace=True)
    fit = categories is None
    if fit:
        categories = {}
    for col in data.columns[1:]:  # skip age (column 0)
        if fit:
            categories[col] = sorted(data[col].unique())
        data[col] = pd.Categorical(data[col], categories=categories[col]).codes
    return data, categories


# =======load files========
# the files have no column names; read them the same way as in the KNN script
trainSet = pd.read_csv('adult.data', header=None, skipinitialspace=True)
testSet = pd.read_csv('adult.test', header=None, skiprows=1, skipinitialspace=True)
testSet[14] = testSet[14].str.rstrip('.')  # '>50K.' -> '>50K'

trainSet, categories = convert(trainSet)
testSet, _ = convert(testSet, categories)

xTrain, xTest, yTrain, yTest = train_test_split(
    trainSet.iloc[:, :-1], trainSet.iloc[:, -1], test_size=1000)

# ====use SVM to train=====
clf = svm.SVC()
clf.fit(xTrain, yTrain)
trainScore = clf.score(xTrain, yTrain)
testScore = clf.score(xTest, yTest)
print("==========SVM-train-accuracy:%f===========" % trainScore)
print("==========SVM-test-accuracy:%f===========" % testScore)

print("============now we test !=============")
y_ = clf.predict(testSet.iloc[:, :-1])
predictScore = clf.score(testSet.iloc[:, :-1], testSet.iloc[:, -1])
print("==========accuracy on adult.test:%f===========" % predictScore)

# save model
joblib.dump(clf, 'SVM_model.m')
```
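The KNN code notes that changing 5 neighbors to 10 gives the 10-neighbor result, and both scripts persist their models with `joblib`. A minimal sketch that compares the two neighbor counts in one run and then checks that a saved model reloads unchanged. It assumes a synthetic dataset from `make_classification` as a stand-in for the encoded adult data, so the accuracies it prints say nothing about the real results; the file name `knn_best.joblib` is hypothetical.

```python
import os
import tempfile

import joblib
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Synthetic stand-in for the encoded adult data (assumption, not the real dataset).
X, y = make_classification(n_samples=2000, n_features=10, random_state=0)
xTrain, xTest, yTrain, yTest = train_test_split(X, y, test_size=500, random_state=0)

# Fit one model per neighbor count and record its held-out accuracy.
scores = {}
models = {}
for k in (5, 10):
    knn = KNeighborsClassifier(n_neighbors=k).fit(xTrain, yTrain)
    models[k] = knn
    scores[k] = knn.score(xTest, yTest)
    print("%d neighbors: test accuracy %.4f" % (k, scores[k]))

# Save the better model with joblib and load it back.
best_k = max(scores, key=scores.get)
path = os.path.join(tempfile.mkdtemp(), 'knn_best.joblib')
joblib.dump(models[best_k], path)
restored = joblib.load(path)
same = np.array_equal(restored.predict(xTest), models[best_k].predict(xTest))
print("best k:", best_k, "reloaded predictions identical:", same)
```

Looping over the neighbor count avoids editing the script for each value; the same loop could be run on the real `xTrain`/`xTest` split from the KNN script.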