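[TensorFlow-Guide] ==> A Summary of Several Ways to Read CSV Files in TensorFlow

TensorFlow Dataset guide: https://www.tensorflow.org/guide/datasets

pandas.read_csv documentation: http://pandas.pydata.org/pandas-docs/stable/generated/pandas.read_csv.html#pandas.read_csv

Comma-Separated Values (CSV; sometimes called character-separated values, because the delimiter does not have to be a comma) files store tabular data (numbers and text) as plain text. Plain text means the file is a sequence of characters, with no data that has to be interpreted as binary numbers. A CSV file consists of any number of records separated by some kind of line break; each record consists of fields separated by some other character or string, most commonly a comma or a tab. Usually, all records have exactly the same sequence of fields.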

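Characteristics:

- Data read from a CSV file is generally text (strings); numeric fields have to be converted to numbers explicitly.

- Data is read one line (one record) at a time.

- Columns are separated by half-width (ASCII) commas or tabs, most often commas.

- Typically, lines do not start with spaces, the first line holds the column names, fields are separated by the delimiter with no extra spaces, and there are no blank lines between rows.

- Having no blank lines between rows is very important: if the file contains blank lines, or lines end with trailing spaces, reading the data usually fails, often with a `list index out of range` error. PS: I have been bitten by this error many times!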
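Both pitfalls above (every field arrives as a string, and a blank line or stray trailing space breaks naive parsing) can be reproduced with Python's standard `csv` module. This is a minimal sketch for illustration only: the sample text and the `parse_rows` helper are made up here and are not part of TensorFlow.

```python
import csv
import io

# Hypothetical sample: a header row, a data row, a blank line, and a row
# whose label has a trailing space.
RAW = "age,income\n39,<=50K\n\n50,>50K \n"


def parse_rows(text):
    """Parse CSV text into (age, label) tuples, skipping the header row.

    csv.reader returns every field as a string, so age is converted with
    int(). A blank line comes back as an empty list, and indexing it would
    raise IndexError ("list index out of range"), so such rows are skipped.
    Fields are stripped so that '>50K ' and '>50K' compare equal.
    """
    reader = csv.reader(io.StringIO(text))
    next(reader)  # skip the header row
    rows = []
    for row in reader:
        if not row:  # blank line -> []
            continue
        age, label = (field.strip() for field in row)
        rows.append((int(age), label))
    return rows


print(parse_rows(RAW))  # [(39, '<=50K'), (50, '>50K')]
```

Without the `if not row` check, the blank line would reach the unpacking step as an empty list and fail.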

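Preprocessing the data: clean_data()

To keep stray spaces in the raw CSV file (such as the space after each comma, or spaces at the end of a line) from causing problems, clean the data first.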

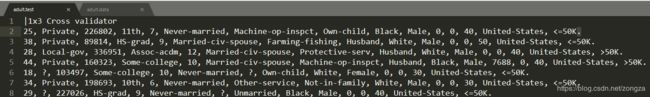

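For example, adult.data (the training set) of the Census Income Data Set looks like this:

adult.test (the test set) looks like this:

So for the test set, in addition to removing spaces, we also have to remove the period at the end of each line.

The code is as follows (the cleaned data is saved to a separate file):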

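```python
import tensorflow as tf

# train_data_path, new_train_data_path, test_data_path and new_test_data_path
# are file paths defined elsewhere.
def clean_data():
    # Training set
    with tf.gfile.Open(train_data_path, 'r') as read_f:
        with tf.gfile.Open(new_train_data_path, 'w') as write_f:
            for line in read_f.readlines():
                line = line.strip()             # remove leading/trailing whitespace, including '\n'
                line = line.replace(', ', ',')  # remove the space after each comma
                if not line or ',' not in line:  # skip blank lines
                    continue
                line += '\n'
                write_f.write(line)

    # Test set
    with tf.gfile.Open(test_data_path, 'r') as read_f1:
        with tf.gfile.Open(new_test_data_path, 'w') as write_f1:
            for line in read_f1.readlines():
                line = line.strip()
                line = line.replace(', ', ',')
                if not line or ',' not in line:  # skip the first line and blank lines
                    continue
                # Unlike the training set, each test label ends with '.', e.g. '>50K.'.
                # Remove it after strip(): on the raw line, line[:-1] would only
                # drop the trailing newline, not the period.
                if line[-1] == '.':
                    line = line[:-1]
                line += '\n'
                write_f1.write(line)
```

References: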

- Building a linear model with Estimator (census income data example): code

- Wide & deep learning with Estimator (census income data example): code
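The per-line cleaning can be pulled out into a pure-Python helper and checked without TensorFlow or the real files. `clean_line` is a hypothetical helper written for this sketch, and the sample lines only imitate the adult.data / adult.test format. Note that the trailing period has to be removed after `strip()`: on a raw line from `readlines()`, the last character is the newline, not the period.

```python
def clean_line(line, is_test=False):
    """Clean one raw census line; return None if the line should be skipped.

    Strips surrounding whitespace, removes the space after each comma, skips
    lines without a comma, and (test set only) drops the label's trailing '.'.
    """
    line = line.strip()
    line = line.replace(', ', ',')
    if not line or ',' not in line:
        return None  # blank line, or a first line without commas
    if is_test and line.endswith('.'):
        line = line[:-1]  # '>50K.' -> '>50K'
    return line + '\n'


print(repr(clean_line("39, State-gov, <=50K \n")))                # '39,State-gov,<=50K\n'
print(repr(clean_line("25, Private, >50K.\n", is_test=True)))     # '25,Private,>50K\n'
print(repr(clean_line("|1x3 Cross validator\n", is_test=True)))   # None
```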

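Reading the data: input_fn()

There are two commonly used approaches.

Method 1: read the data into a DataFrame first, then convert it to a Dataset

Use pd.read_csv(csv_file, dtype, names, header, index_col, ...) together with tf.data.Dataset.xxx((feature, label)).

Here xxx can be from_tensor_slices, or zip (in which case feature and label must themselves already be Datasets).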
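To picture what `from_tensor_slices((dict(df), label))` yields, here is a plain-Python analogy; it is not TensorFlow code, and `tensor_slices` / `tensors` are hypothetical helpers. `dict(df)` maps each column name to a column, and `from_tensor_slices` slices every component along its first dimension, so each element is one row's feature dict plus its label. `from_tensors`, by contrast, turns the whole structure into a single element.

```python
def tensor_slices(features, labels):
    """Analogy for from_tensor_slices((features, labels)).

    features: dict mapping a column name to a list with one value per row.
    labels: list with one label per row.
    Yields one (feature_dict, label) element per row.
    """
    n = len(labels)
    for name, column in features.items():
        if len(column) != n:  # all components must share the first dimension
            raise ValueError('column %r has %d rows, labels have %d'
                             % (name, len(column), n))
    for i in range(n):
        yield {name: column[i] for name, column in features.items()}, labels[i]


def tensors(features, labels):
    """Analogy for from_tensors((features, labels)): one single element."""
    yield features, labels


features = {'age': [39, 50], 'workclass': ['State-gov', 'Private']}
labels = [False, True]
print(list(tensor_slices(features, labels)))
# [({'age': 39, 'workclass': 'State-gov'}, False), ({'age': 50, 'workclass': 'Private'}, True)]
print(len(list(tensors(features, labels))))  # 1
```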

For example:

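```python
import pandas as pd
import tensorflow as tf

# _CSV_COLUMNS (list of column names) and _LABEL_KEY (name of the label
# column) are defined elsewhere.
def input_fn(data_file, shuffle=False, batch_size=32, num_epochs=5):
    # Read into a DataFrame. header=None means the file has no header row;
    # names=_CSV_COLUMNS supplies the column names instead.
    df = pd.read_csv(data_file, header=None, names=_CSV_COLUMNS)

    # Convert to a Dataset
    label = df[_LABEL_KEY]
    label = [i == '>50K' for i in label]  # the census labels use an upper-case K
    df = df.drop(columns=_LABEL_KEY)      # drop() returns a new frame; without the label column, df holds the features

    '''
    # Before converting to a Dataset, you can also select specific rows of the
    # DataFrame as the training and test sets, e.g.:
    train_data = df[train_start:train_start + train_count]
    test_data = df[eval_start:eval_start + eval_count]

    # You can also store the data compressed, as in the boosted_trees example,
    # which reads a gzipped CSV and saves it as a compressed .npz file:
    with gzip.open(tempfiles, "rb") as csv_file:
        data = pd.read_csv(csv_file, dtype=np.float32,
                           names=["c%02d" % i for i in range(29)]).values  # label + 28 features; .as_matrix() was removed in pandas 1.0
    f = tempfile.NamedTemporaryFile()  # don't name the earlier temp-file path variable `tempfile`, or you get "'str' object has no attribute 'NamedTemporaryFile'"
    np.savez_compressed(f, data=data)  # data is a numpy ndarray here, not a DataFrame
    tf.gfile.Copy(f.name, np_filename)
    '''

    ds = tf.data.Dataset.from_tensor_slices((dict(df), label))
    if shuffle:
        ds = ds.shuffle(10000)  # shuffle() returns a new dataset, so reassign it
    ds = ds.batch(batch_size).repeat(num_epochs)
    return ds
```

References: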

- Building a linear model with Estimator (census income data example): code

- Wide & deep learning with Estimator (census income data example): code
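The pandas half of method 1 can be checked without TensorFlow. This sketch uses a small in-memory CSV with hypothetical column names to show what `header=None` combined with `names` does, a vectorized alternative to the label list comprehension, and that `drop()` returns a new frame instead of modifying `df` in place, so its result has to be assigned.

```python
import io

import pandas as pd

# Hypothetical columns and rows in the cleaned census format; like the
# cleaned files, the text has no header row.
COLUMNS = ['age', 'workclass', 'income_bracket']
CSV_TEXT = "39,State-gov,<=50K\n50,Self-emp-not-inc,>50K\n"

# header=None: no row in the file holds column names; names= supplies them.
df = pd.read_csv(io.StringIO(CSV_TEXT), header=None, names=COLUMNS)

# Vectorized form of [i == '>50K' for i in label], as 0/1 integers.
labels = (df['income_bracket'] == '>50K').astype(int).tolist()

# drop() returns a new DataFrame and leaves df unchanged.
features = df.drop(columns='income_bracket')

print(labels)                  # [0, 1]
print(list(features.columns))  # ['age', 'workclass']
print(list(df.columns))        # ['age', 'workclass', 'income_bracket']
```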

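Method 2: read the data into a Dataset first, then process it with map

Use tf.data.TextLineDataset(csv_file) together with dataset.map(f), where f is a function that calls tf.decode_csv(one_row, record_defaults, ...).

For example: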

```python
import tensorflow as tf

# _CSV_COLUMNS, _CSV_COLUMN_DEFAULT and _NUM_EXAMPLES are defined elsewhere.
def input_fn(data_file, num_epochs, shuffle, batch_size):
    """Generate an input function for the Estimator."""
    assert tf.gfile.Exists(data_file), (
        '%s not found. Please make sure you have run census_dataset.py and '
        'set the --data_dir argument to the correct path.' % data_file)

    def parse_csv(value):
        tf.logging.info('Parsing {}'.format(data_file))
        # value is one line of the CSV file (one element of the dataset).
        # record_defaults holds one default per column, e.g. [[0.], [''], ...];
        # the default's type sets the column's type, and the default is used
        # when a field is empty.
        columns = tf.decode_csv(value, record_defaults=_CSV_COLUMN_DEFAULT)
        # _CSV_COLUMNS is the list of column names, e.g. ['salary', 'name', ...];
        # zipping it with the decoded columns gives a dict {name: tensor}.
        features = dict(zip(_CSV_COLUMNS, columns))
        labels = features.pop('income_bracket')
        classes = tf.equal(labels, '>50K')  # binary classification
        # features is a dict of tensors; classes is a boolean tensor.
        return features, classes

    # Extract lines from input files using the Dataset API.
    # tf.data.TextLineDataset is a Dataset class: an ordered sequence of
    # elements of the same type, here one string per line of data_file.
    # To read the data, instantiate the class with the file name.
    dataset = tf.data.TextLineDataset(data_file)

    if shuffle:
        dataset = dataset.shuffle(buffer_size=_NUM_EXAMPLES['train'])

    dataset = dataset.map(parse_csv, num_parallel_calls=5)

    # We call repeat after shuffling, rather than before, to prevent separate
    # epochs from blending together.
    dataset = dataset.repeat(num_epochs)
    dataset = dataset.batch(batch_size)
    return dataset
```

References:

- Building a linear model with Estimator (census income data example): code

- Wide & deep learning with Estimator (census income data example): code
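To make the role of `record_defaults` in `parse_csv` concrete, here is a plain-Python illustration. It is not how `tf.decode_csv` is implemented (that is a graph op whose column types come from the dtypes of the default tensors), and it does not handle quoted fields. The column names and defaults are hypothetical stand-ins for `_CSV_COLUMNS` and `_CSV_COLUMN_DEFAULT`, which in the census example have more columns.

```python
# Hypothetical stand-ins for _CSV_COLUMNS and _CSV_COLUMN_DEFAULT.
CSV_COLUMNS = ['age', 'workclass', 'income_bracket']
CSV_COLUMN_DEFAULT = [[0], ['unknown'], ['']]


def decode_csv_line(line, record_defaults, field_delim=','):
    """Each column's default supplies the value for an empty field and,
    through its Python type, the conversion applied to a non-empty field."""
    fields = line.rstrip('\n').split(field_delim)
    if len(fields) != len(record_defaults):
        raise ValueError('expected %d fields, got %d'
                         % (len(record_defaults), len(fields)))
    return [default if field == '' else type(default)(field)
            for field, (default,) in zip(fields, record_defaults)]


def parse_csv(line):
    """Mirrors parse_csv in method 2, on plain Python values."""
    columns = decode_csv_line(line, CSV_COLUMN_DEFAULT)
    features = dict(zip(CSV_COLUMNS, columns))
    labels = features.pop('income_bracket')
    return features, labels == '>50K'


print(parse_csv('39,State-gov,<=50K'))  # ({'age': 39, 'workclass': 'State-gov'}, False)
print(parse_csv('50,,>50K'))            # ({'age': 50, 'workclass': 'unknown'}, True)
```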

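Comparing the two methods

As you can see, for the census income data both methods work if you read the raw data as-is without any processing. But what if you need to make small changes to the data? Let's look at two cases.

Converting a string column to numbers

For example, the last column of census, salary (income_bracket), has two possible values, '<=50K' and '>50K'; convert them to 0 and 1 respectively.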

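For method 1: convert the whole column at once, before converting to a Dataset:

```python
label = [i == '>50K' for i in label]
# see the previous section for details
```

For method 2: convert this column's value row by row, inside the function passed to map:

```python
classes = tf.equal(labels, '>50K')  # binary classification
# see the previous section for details
```

Bucketizing a continuous numeric column, with each bucket identified by one value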

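For example, the age column in census ranges anywhere from 0 to 100; it can be split into a few buckets, reducing the number of distinct values the feature takes.

For both method 1 and method 2, this only requires changing how the feature_column is defined:

```python
import tensorflow as tf

fc = tf.feature_column

age = fc.numeric_column('age')
age_buckets = fc.bucketized_column(age, boundaries=[18, 25, 30, 35, 40, 45, 50, 55, 60, 65])
```

The HIGGS Data Set looks like this, and its bucket boundaries are not as easy to choose by hand as those for age:

The first column is the label, indicating whether the event is a Higgs signal (1) or background (0); the remaining columns are features, all continuous values that need to be bucketized. The hard part is how to choose the buckets.

Method 1 is usually used here, because it lets you pull each column out as a plain numpy array before converting to a Dataset, and those arrays are needed later to compute the boundaries.

The bucketing approach is to find the boundary values with np.percentile(), then pass them to tf.feature_column.bucketized_column():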
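The boundary computation and the bucketing rule can be tried with NumPy alone. In this sketch `get_bucket_boundaries` uses the same formula as the function below, while `bucketize` is a hypothetical stand-in that uses `np.digitize` to imitate the convention documented for `bucketized_column` (bucket i covers `boundaries[i-1] <= x < boundaries[i]`); it is not what TensorFlow calls internally. The exponential sample is synthetic data.

```python
import numpy as np


def get_bucket_boundaries(feature):
    """The 0th..99th percentiles of the column, de-duplicated and sorted."""
    return np.unique(np.percentile(feature, range(0, 100))).tolist()


def bucketize(values, boundaries):
    """Bucket index of each value: i such that boundaries[i-1] <= x < boundaries[i]."""
    return np.digitize(values, boundaries, right=False)


# The bucketized_column documentation example with boundaries [0, 10, 100].
print(bucketize([[-5, 10000], [150, 10], [5, 100]], [0, 10, 100]).tolist())
# [[0, 3], [3, 2], [1, 3]]

# Skewed synthetic data: percentile boundaries crowd where values are dense.
rng = np.random.default_rng(0)
feature = rng.exponential(size=1000)
boundaries = get_bucket_boundaries(feature)
print(len(boundaries), boundaries[:3])
```

Percentile-based boundaries give each bucket roughly the same number of samples, which is why they suit skewed continuous features better than evenly spaced cut points.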

```python
import numpy as np
import tensorflow as tf

# This function combines input_fn and the feature-column function (column_fn).
def make_inputs_from_np_arrays(features_np, label_np):
    """Makes and returns input_fn and feature_columns from numpy arrays.

    The generated input_fn will return tf.data.Dataset of feature dictionary and a
    label, and feature_columns will consist of the list of
    tf.feature_column.BucketizedColumn.

    Note, for in-memory training, tf.data.Dataset should contain the whole data
    as a single tensor. Don't use batch.

    Args:
      features_np: A numpy ndarray (shape=[batch_size, num_features]) for
        float32 features.
      label_np: A numpy ndarray (shape=[batch_size, 1]) for labels.

    Returns:
      input_fn: A function returning a Dataset of feature dict and label.
      feature_names: A list of feature names.
      feature_column: A list of tf.feature_column.BucketizedColumn.
    """
    num_features = features_np.shape[1]
    features_np_list = np.split(features_np, num_features, axis=1)
    # 1-based feature names.
    feature_names = ["feature_%02d" % (i + 1) for i in range(num_features)]

    # Create source feature_columns and bucketized_columns.
    def get_bucket_boundaries(feature):
        """Returns bucket boundaries for feature by percentiles."""
        return np.unique(np.percentile(feature, range(0, 100))).tolist()
    # Returns the boundaries as a list: the 0th to 99th percentiles of the
    # column's values, i.e. the cut points that split the sorted values into
    # 100 roughly equal parts (np.unique removes duplicates). They are passed
    # to bucketized_column below.
    #
    # How bucketized_column uses them: for example, if the inputs are
    #
    #   boundaries = [0, 10, 100]
    #   input tensor = [[-5, 10000]
    #                   [150,   10]
    #                   [5,    100]]
    #
    # then the output will be
    #
    #   output = [[0, 3]
    #             [3, 2]
    #             [1, 3]]

    # Before bucketizing, each feature must first be wrapped in a numeric_column.
    source_columns = [
        tf.feature_column.numeric_column(
            feature_name, dtype=tf.float32,
            # Although higgs data have no missing values, in general, default
            # could be set as 0 or some reasonable value for missing values.
            default_value=0.0)
        for feature_name in feature_names
    ]
    bucketized_columns = [
        tf.feature_column.bucketized_column(
            source_columns[i],
            boundaries=get_bucket_boundaries(features_np_list[i]))
        for i in range(num_features)  # bucketize every feature column
    ]

    # Make an input_fn that extracts source features.
    def input_fn():
        """Returns features as a dictionary of numpy arrays, and a label."""
        features = {
            feature_name: tf.constant(features_np_list[i])
            for i, feature_name in enumerate(feature_names)
        }
        return tf.data.Dataset.zip((tf.data.Dataset.from_tensors(features),
                                    tf.data.Dataset.from_tensors(label_np),))

    return input_fn, feature_names, bucketized_columns
```

Supplement

DataFrame and Dataset are both classes for holding data; to see how they differ, you can compare their source code.

Part of the DataFrame source:

```python
class DataFrame(NDFrame):
    """ Two-dimensional size-mutable, potentially heterogeneous tabular data
    structure with labeled axes (rows and columns). Arithmetic operations
    align on both row and column labels. Can be thought of as a dict-like
    container for Series objects. The primary pandas data structure.

    Parameters
    ----------
    data : numpy ndarray (structured or homogeneous), dict, or DataFrame
        Dict can contain Series, arrays, constants, or list-like objects

        .. versionchanged :: 0.23.0
           If data is a dict, argument order is maintained for Python 3.6
           and later.

    index : Index or array-like
        Index to use for resulting frame. Will default to RangeIndex if
        no indexing information part of input data and no index provided
    columns : Index or array-like
        Column labels to use for resulting frame. Will default to
        RangeIndex (0, 1, 2, ..., n) if no column labels are provided
    dtype : dtype, default None
        Data type to force. Only a single dtype is allowed. If None, infer
    copy : boolean, default False
        Copy data from inputs. Only affects DataFrame / 2d ndarray input

    Examples
    --------
    Constructing DataFrame from a dictionary.

    >>> d = {'col1': [1, 2], 'col2': [3, 4]}
    >>> df = pd.DataFrame(data=d)
    >>> df
       col1  col2
    0     1     3
    1     2     4

    Notice that the inferred dtype is int64.

    >>> df.dtypes
    col1    int64
    col2    int64
    dtype: object

    To enforce a single dtype:

    >>> df = pd.DataFrame(data=d, dtype=np.int8)
    >>> df.dtypes
    col1    int8
    col2    int8
    dtype: object

    Constructing DataFrame from numpy ndarray:

    >>> df2 = pd.DataFrame(np.random.randint(low=0, high=10, size=(5, 5)),
    ...                    columns=['a', 'b', 'c', 'd', 'e'])
    >>> df2
       a  b  c  d  e
    0  2  8  8  3  4
    1  4  2  9  0  9
    2  1  0  7  8  0
    3  5  1  7  1  3
    4  6  0  2  4  2

    See also
    --------
    DataFrame.from_records : constructor from tuples, also record arrays
    DataFrame.from_dict : from dicts of Series, arrays, or dicts
    DataFrame.from_items : from sequence of (key, value) pairs
    pandas.read_csv, pandas.read_table, pandas.read_clipboard
    """

    @property
    def _constructor(self):
        return DataFrame

    _constructor_sliced = Series
    _deprecations = NDFrame._deprecations | frozenset(
        ['sortlevel', 'get_value', 'set_value', 'from_csv', 'from_items'])
    _accessors = set()

    @property
    def _constructor_expanddim(self):
        from pandas.core.panel import Panel
        return Panel

    def __init__(self, data=None, index=None, columns=None, dtype=None,
                 copy=False):
        if data is None:
            data = {}
        if dtype is not None:
            dtype = self._validate_dtype(dtype)

        if isinstance(data, DataFrame):
            data = data._data

        if isinstance(data, BlockManager):
            mgr = self._init_mgr(data, axes=dict(index=index, columns=columns),
                                 dtype=dtype, copy=copy)
        elif isinstance(data, dict):
            mgr = self._init_dict(data, index, columns, dtype=dtype)
        elif isinstance(data, ma.MaskedArray):
            import numpy.ma.mrecords as mrecords
            # masked recarray
            if isinstance(data, mrecords.MaskedRecords):
                mgr = _masked_rec_array_to_mgr(data, index, columns, dtype,
                                               copy)

            # a masked array
            else:
                mask = ma.getmaskarray(data)
                if mask.any():
                    data, fill_value = maybe_upcast(data, copy=True)
                    data[mask] = fill_value
                else:
                    data = data.copy()
                mgr = self._init_ndarray(data, index, columns, dtype=dtype,
                                         copy=copy)

        elif isinstance(data, (np.ndarray, Series, Index)):
            if data.dtype.names:
                data_columns = list(data.dtype.names)
                data = {k: data[k] for k in data_columns}
                if columns is None:
                    columns = data_columns
                mgr = self._init_dict(data, index, columns, dtype=dtype)
            elif getattr(data, 'name', None) is not None:
                mgr = self._init_dict({data.name: data}, index, columns,
                                      dtype=dtype)
            else:
                mgr = self._init_ndarray(data, index, columns, dtype=dtype,
                                         copy=copy)
        elif isinstance(data, (list, types.GeneratorType)):
            if isinstance(data, types.GeneratorType):
                data = list(data)
            if len(data) > 0:
                if is_list_like(data[0]) and getattr(data[0], 'ndim', 1) == 1:
                    if is_named_tuple(data[0]) and columns is None:
                        columns = data[0]._fields
                    arrays, columns = _to_arrays(data, columns, dtype=dtype)
                    columns = _ensure_index(columns)

                    # set the index
                    if index is None:
                        if isinstance(data[0], Series):
                            index = _get_names_from_index(data)
                        elif isinstance(data[0], Categorical):
                            index = com._default_index(len(data[0]))
                        else:
                            index = com._default_index(len(data))

                    mgr = _arrays_to_mgr(arrays, columns, index, columns,
                                         dtype=dtype)
                else:
                    mgr = self._init_ndarray(data, index, columns, dtype=dtype,
                                             copy=copy)
            else:
                mgr = self._init_dict({}, index, columns, dtype=dtype)
        elif isinstance(data, collections.Iterator):
            raise TypeError("data argument can't be an iterator")
        else:
            try:
                arr = np.array(data, dtype=dtype, copy=copy)
            except (ValueError, TypeError) as e:
                exc = TypeError('DataFrame constructor called with '
                                'incompatible data and dtype: {e}'.format(e=e))
                raise_with_traceback(exc)

            if arr.ndim == 0 and index is not None and columns is not None:
                values = cast_scalar_to_array((len(index), len(columns)),
                                              data, dtype=dtype)
                mgr = self._init_ndarray(values, index, columns,
                                         dtype=values.dtype, copy=False)
            else:
                raise ValueError('DataFrame constructor not properly called!')

        NDFrame.__init__(self, mgr, fastpath=True)
```

Part of the Dataset source:

```python
class Dataset(object):
  """Represents a potentially large set of elements.

  A `Dataset` can be used to represent an input pipeline as a
  collection of elements (nested structures of tensors) and a "logical
  plan" of transformations that act on those elements.
  """
  __metaclass__ = abc.ABCMeta

  def __init__(self):
    pass

  def _as_serialized_graph(self):
    """Produces serialized graph representation of the dataset.

    Returns:
      A scalar `tf.Tensor` of `tf.string` type, representing this dataset as a
      serialized graph.
    """
    return gen_dataset_ops.dataset_to_graph(self._as_variant_tensor())

  @abc.abstractmethod
  def _as_variant_tensor(self):
    """Creates a scalar `tf.Tensor` of `tf.variant` representing this dataset.

    Returns:
      A scalar `tf.Tensor` of `tf.variant` type, which represents this dataset.
    """
    raise NotImplementedError("Dataset._as_variant_tensor")

  def make_initializable_iterator(self, shared_name=None):
    """Creates an `Iterator` for enumerating the elements of this dataset.

    Note: The returned iterator will be in an uninitialized state,
    and you must run the `iterator.initializer` operation before using it:

    ```python
    dataset = ...
    iterator = dataset.make_initializable_iterator()
    # ...
    sess.run(iterator.initializer)
    ```

    Args:
      shared_name: (Optional.) If non-empty, the returned iterator will be
        shared under the given name across multiple sessions that share the
        same devices (e.g. when using a remote server).

    Returns:
      An `Iterator` over the elements of this dataset.

    Raises:
      RuntimeError: If eager execution is enabled.
    """
    if context.executing_eagerly():
      raise RuntimeError(
          "dataset.make_initializable_iterator is not supported when eager "
          "execution is enabled.")
    if shared_name is None:
      shared_name = ""
    if compat.forward_compatible(2018, 8, 3):
      iterator_resource = gen_dataset_ops.iterator_v2(
          container="", shared_name=shared_name, **flat_structure(self))
    else:
      iterator_resource = gen_dataset_ops.iterator(
          container="", shared_name=shared_name, **flat_structure(self))
    with ops.colocate_with(iterator_resource):
      initializer = gen_dataset_ops.make_iterator(self._as_variant_tensor(),
                                                  iterator_resource)
    return iterator_ops.Iterator(iterator_resource, initializer,
                                 self.output_types, self.output_shapes,
                                 self.output_classes)

  def __iter__(self):
    """Creates an `Iterator` for enumerating the elements of this dataset.

    The returned iterator implements the Python iterator protocol and therefore
    can only be used in eager mode.

    Returns:
      An `Iterator` over the elements of this dataset.

    Raises:
      RuntimeError: If eager execution is not enabled.
    """
    if context.executing_eagerly():
      return iterator_ops.EagerIterator(self)
    else:
      raise RuntimeError("dataset.__iter__() is only supported when eager "
                         "execution is enabled.")

  def make_one_shot_iterator(self):
    """Creates an `Iterator` for enumerating the elements of this dataset.

    Note: The returned iterator will be initialized automatically.
    A "one-shot" iterator does not currently support re-initialization.

    Returns:
      An `Iterator` over the elements of this dataset.
    """
    if context.executing_eagerly():
      return iterator_ops.EagerIterator(self)

    # NOTE(mrry): We capture by value here to ensure that `_make_dataset()` is
    # a 0-argument function.
    @function.Defun(capture_by_value=True)
    def _make_dataset():
      return self._as_variant_tensor()  # pylint: disable=protected-access

    try:
      _make_dataset.add_to_graph(ops.get_default_graph())
    except ValueError as err:
      if "Cannot capture a stateful node" in str(err):
        raise ValueError(
            "Failed to create a one-shot iterator for a dataset. "
            "`Dataset.make_one_shot_iterator()` does not support datasets that "
            "capture stateful objects, such as a `Variable` or `LookupTable`. "
            "In these cases, use `Dataset.make_initializable_iterator()`. "
            "(Original error: %s)" % err)
      else:
        six.reraise(ValueError, err)

    return iterator_ops.Iterator(
        gen_dataset_ops.one_shot_iterator(
            dataset_factory=_make_dataset, **flat_structure(self)),
        None, self.output_types, self.output_shapes, self.output_classes)

  @abc.abstractproperty
  def output_classes(self):
    """Returns the class of each component of an element of this dataset.

    The expected values are `tf.Tensor` and `tf.SparseTensor`.

    Returns:
      A nested structure of Python `type` objects corresponding to each
      component of an element of this dataset.
    """
    raise NotImplementedError("Dataset.output_classes")

  @abc.abstractproperty
  def output_shapes(self):
    """Returns the shape of each component of an element of this dataset.

    Returns:
      A nested structure of `tf.TensorShape` objects corresponding to each
      component of an element of this dataset.
    """
    raise NotImplementedError("Dataset.output_shapes")

  @abc.abstractproperty
  def output_types(self):
    """Returns the type of each component of an element of this dataset.

    Returns:
      A nested structure of `tf.DType` objects corresponding to each component
      of an element of this dataset.
    """
    raise NotImplementedError("Dataset.output_types")

  def __repr__(self):
    output_shapes = nest.map_structure(str, self.output_shapes)
    output_shapes = str(output_shapes).replace("'", "")
    output_types = nest.map_structure(repr, self.output_types)
    output_types = str(output_types).replace("'", "")
    return ("<%s shapes: %s, types: %s>" % (type(self).__name__, output_shapes,
                                            output_types))

  @staticmethod
  def from_tensors(tensors):
    """Creates a `Dataset` with a single element, comprising the given tensors.

    Note that if `tensors` contains a NumPy array, and eager execution is not
    enabled, the values will be embedded in the graph as one or more
    `tf.constant` operations. For large datasets (> 1 GB), this can waste
    memory and run into byte limits of graph serialization. If tensors contains
    one or more large NumPy arrays, consider the alternative described in
    [this guide](https://tensorflow.org/guide/datasets#consuming_numpy_arrays).

    Args:
      tensors: A nested structure of tensors.

    Returns:
      Dataset: A `Dataset`.
    """
    return TensorDataset(tensors)

  @staticmethod
  def from_tensor_slices(tensors):
    """Creates a `Dataset` whose elements are slices of the given tensors.

    Note that if `tensors` contains a NumPy array, and eager execution is not
    enabled, the values will be embedded in the graph as one or more
    `tf.constant` operations. For large datasets (> 1 GB), this can waste
    memory and run into byte limits of graph serialization. If tensors contains
    one or more large NumPy arrays, consider the alternative described in
    [this guide](https://tensorflow.org/guide/datasets#consuming_numpy_arrays).

    Args:
      tensors: A nested structure of tensors, each having the same size in the
        0th dimension.

    Returns:
      Dataset: A `Dataset`.
    """
    return TensorSliceDataset(tensors)
```

![Figure 1](http://img.e-com-net.com/image/info8/883a630fa86f4d2696fdacbe24555718.jpg)

![Figure 2](http://img.e-com-net.com/image/info8/a1e8b7884cf24b1c9465f86aab9b3243.jpg)