Hadoop Fully Distributed Cluster: A Summary

If you find this helpful, please support the author with a like and a follow~

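Contents

- Building a Fully Distributed Hadoop Cluster
- I. Create the Virtual Machines
- II. Plan the Cluster and Services
- 1. Logical structure
- 2. Physical structure
- 3. Overall structure
- III. Configure the Cluster
- 1. Basic network configuration on the master node
- 1.1 Configure a static IP address
- 1.2 Set the hostname
- 1.3 Configure DNS
- 1.4 Map IP addresses to hostnames
- 1.5 Disable the firewall
- 1.6 Restart the network service
- 2. Create the user and configure permissions
- 2.1 Create directories
- 2.2 Create the group and user
- 2.3 Grant sudo privileges
- 2.4 Switch user
- 3. Install and configure the JDK
- 3.1 Install the JDK
- 3.2 Configure the environment variables and verify
- 4. Clone the master node hadoop01 twice
- 5. Update the network configuration on each slave node
- 6. Passwordless SSH login between master and slaves
- 6.1 Generate the key pair
- 6.2 Copy the public key
- 6.3 Verify passwordless login
- 7. Synchronize the cluster time
- 7.1 Configure the time server on the master node
- 7.1.1 Check whether the NTP package is installed
- 7.1.2 Install the NTP service
- 7.1.3 Edit the NTP configuration file
- 7.1.4 Sync the hardware clock (BIOS) with the system time
- 7.1.5 Start the NTP service and test
- 7.2 Synchronize the slave nodes with the master node
- 7.2.1 Stop the ntpd service on the nodes that are not the time server (the slave nodes here)
- 7.2.2 Synchronize with the master node
- Scheduled synchronization
- 8. Install and configure Hadoop on the master node
- 8.1 Install
- 8.2 Configure
- 9. Edit the configuration files on the master node
- 9.1 Create the required directories
- 9.2 hadoop-env.sh
- 9.3 core-site.xml
- 9.4 hdfs-site.xml
- 9.5 mapred-site.xml
- 9.6 yarn-site.xml
- 9.7 master
- 9.8 slaves
- 10. Distribute Hadoop 2.7.7 to the slave nodes
- 10.1 Manually
- 10.2 With a shell script
- IV. Start the Cluster (run on the master node)
- 1. Initialize
- 2. Start the HDFS services
- 3. Start the YARN services
- 4. Start the JobHistoryServer
- 5. Check the processes on each machine
- 5.1 hadoop01
- 5.2 hadoop02
- 5.3 hadoop03
- 6. View the results
- 6.1 NameNode
- 6.2 SecondaryNameNode
- 6.3 ResourceManager
- 6.4 NodeManager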

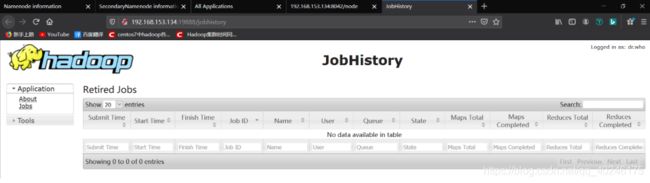

- 6.5 JobHistoryServer
- V. Benchmarking
- 1. Write test
- 2. Read test
- 3. Clean up the test data

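Building a Fully Distributed Hadoop Cluster

I. Create the Virtual Machines

Nothing special here; just pay attention to the partitioning. This is a demo setup, so the sizes are modest.

- /boot: 300 MB is enough

- swap: usually twice the RAM; 2048 MB here

- /home: 2000 MB is enough

- /: the remaining space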

II. Plan the Cluster and Services

1. Logical structure

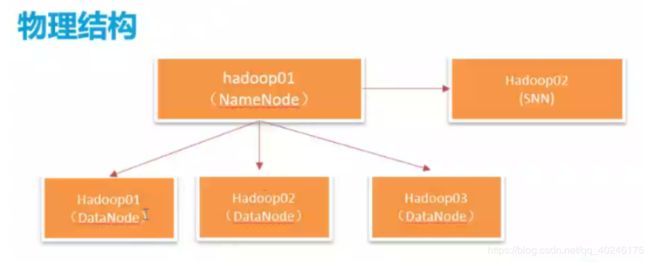

2. Physical structure

3. Overall structure

- 192.168.153.134: hadoop01 (master): NameNode, DataNode, ResourceManager, NodeManager, JobHistoryServer

- 192.168.153.135: hadoop02 (slave): SecondaryNameNode, DataNode, NodeManager

- 192.168.153.136: hadoop03 (slave): DataNode, NodeManager

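III. Configure the Cluster

1. Basic network configuration on the master node

1) Configure a static IP address

2) Set the hostname

3) Map hostnames to IP addresses

4) Disable the firewall

5) Ping the host machine and the Internet to confirm that networking works

1.1 Configure a static IP address

# Configure a static IP

vi /etc/sysconfig/network-scripts/ifcfg-ens33

# Reference configuration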

IPADDR=192.168.153.134

NETMASK=255.255.255.0

GATEWAY=192.168.153.2

DNS1=114.114.114.114

DNS2=8.8.8.8

1.2 Set the hostname

# First,

# edit the network file to set the hostname

vi /etc/sysconfig/network

# Reference configuration

NETWORKING=yes

HOSTNAME=hadoop01

# Second,

# edit the hostname file

vi /etc/hostname

# Reference configuration

hadoop01

1.3 Configure DNS

# Edit resolv.conf to configure DNS

vi /etc/resolv.conf

# Reference configuration

nameserver 114.114.114.114

nameserver 8.8.8.8

1.4 Map IP addresses to hostnames

# Map IP addresses to hostnames

vi /etc/hosts

# Reference configuration

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.153.134 hadoop01

192.168.153.135 hadoop02

192.168.153.136 hadoop03
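Because the three nodes use consecutive addresses and numbered hostnames, the mapping lines can also be generated rather than typed. The following is a minimal sketch; `gen_hosts` is a hypothetical helper name, and the prefix, first octet and node count are passed in as arguments.

```shell
# Sketch: print /etc/hosts entries for consecutively numbered nodes.
# gen_hosts is a hypothetical helper, not part of any tool.
# Usage: gen_hosts <network prefix> <first host octet> <node count>
gen_hosts() {
  local prefix="$1" first="$2" count="$3" i=0
  while [ "$i" -lt "$count" ]; do
    printf '%s.%d hadoop%02d\n' "$prefix" "$(( first + i ))" "$(( i + 1 ))"
    i=$(( i + 1 ))
  done
}

# Prints the three mapping lines used in this article
gen_hosts 192.168.153 134 3
```

The output can be reviewed first and then appended to /etc/hosts with root privileges.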

1.5 Disable the firewall

systemctl stop firewalld.service

systemctl disable firewalld.service

# Related commands

Check the firewall state: firewall-cmd --state

Stop the firewall: systemctl stop firewalld.service

Start the firewall: systemctl start firewalld.service

Disable the firewall at boot: systemctl disable firewalld.service

1.6 Restart the network service

service network restart

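2. Create the user and configure permissions

2.1 Create directories

# -p creates parent directories as needed; create software and model under /opt, the directory for add-on software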

mkdir -p /opt/software

mkdir -p /opt/model

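2.2 Create the group and user

From now on, work as the newly created user rather than directly as root.

# Create the group; -g sets the group ID

groupadd -g 1111 hadoopenv

# Create the user; -m creates the home directory, -u sets the user ID, -g sets the primary group

useradd -m -u 1111 -g hadoopenv hadoopenv

# Set the user's password

passwd hadoopenv

# Inspect the groups and users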

cat /etc/group

cat /etc/passwd

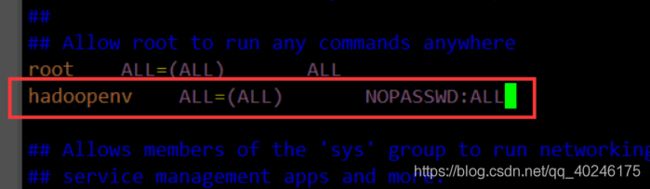

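2.3 Grant sudo privileges

The new user needs sudo (root) privileges to carry out the steps that follow.

# Check the file permissions first; they should be 440

ll /etc/sudoers

# 440 is read-only, so temporarily add write permission for the owner (reverted below); avoid 777, which makes the file world-writable

chmod u+w /etc/sudoers

# Edit the file

vi /etc/sudoers

# Add the line below; press G in vi to jump to the end of the file

hadoopenv ALL=(ALL) NOPASSWD:ALL

# Restore the permissions to 440 for safety

chmod 440 /etc/sudoers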

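2.4 Switch user

# Switch to the newly created user

su hadoopenv

# Go to /opt

cd /opt

# Use sudo to change the owner of the directories

# sudo runs the command with root privileges

# Either by numeric ID

sudo chown -R 1111:1111 model

# or by name

sudo chown -R hadoopenv:hadoopenv software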

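3. Install and configure the JDK

3.1 Install the JDK

# Go to the software directory

cd /opt/software

# Upload the archive from the local machine with rz

# If rz is unavailable, install it first: sudo yum install lrzsz -y

rz

# Extract the JDK into the model directory; -C sets the target directory ("jdk" stands for the uploaded archive's file name)

tar -zxvf jdk -C ../model

# Go to the model directory

cd ../model

# Rename the extracted JDK directory ("jdk" stands for the extracted directory's name)

mv jdk jdk1.8

3.2 Configure the environment variables and verify

# Edit the environment variables

sudo vim /etc/profile

# Reference configuration:

# Press G to jump to the end of the file and append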

export JAVA_HOME=/opt/model/jdk1.8

export CLASSPATH=.:$JAVA_HOME/lib

export PATH=$PATH:$JAVA_HOME/bin

# Apply the changes

source /etc/profile

# Verify the configuration

java -version

echo $JAVA_HOME

which java
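The three checks above can be folded into one small test that is easy to repeat on every node after cloning. This is only a sketch; `check_java_home` is a hypothetical helper name, and it tests nothing more than the presence of an executable `bin/java`.

```shell
# Sketch: confirm that a directory looks like a usable JDK home.
# check_java_home is a hypothetical helper name.
check_java_home() {
  local home="$1"
  if [ -x "$home/bin/java" ]; then
    echo "OK: $home/bin/java is executable"
  else
    echo "FAIL: no executable java under $home/bin" >&2
    return 1
  fi
}

# Example (not run here): check_java_home "$JAVA_HOME"
```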

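4. Clone the master node hadoop01 twice

Shut down hadoop01 first, then right-click the VM and choose Manage -> Clone -> Create a full clone, and fill in the name and storage location.

5. Update the network configuration on each slave node

# Set the IP addresses of the two slave nodes to

192.168.153.135

192.168.153.136

# Set their hostnames to

hadoop02

hadoop03

# Reboot

reboot

# Connect with Xshell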

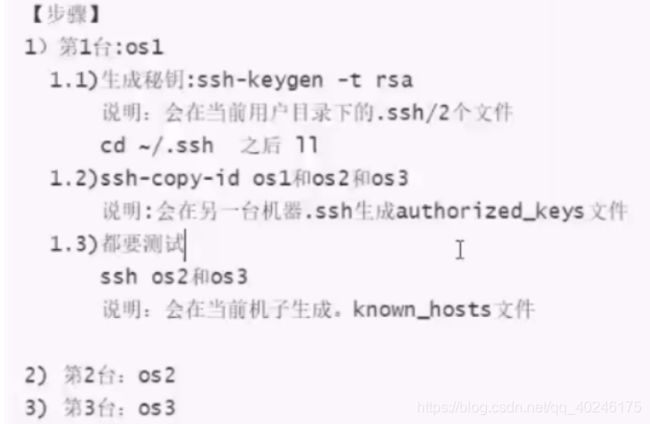
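On a fresh clone, the only line of the interface file that has to change is `IPADDR`. As a rough sketch, that edit could be scripted as below; `set_ipaddr` is a hypothetical helper, and it assumes GNU sed and an existing `IPADDR=` line such as the one in the reference configuration in section 1.1.

```shell
# Sketch: rewrite the IPADDR line of an ifcfg-style file in place.
# set_ipaddr is a hypothetical helper; it relies on GNU sed's -i option.
set_ipaddr() {
  local file="$1" ip="$2"
  sed -i "s/^IPADDR=.*/IPADDR=$ip/" "$file"
}

# Example for hadoop02 (run as root; not executed here):
#   set_ipaddr /etc/sysconfig/network-scripts/ifcfg-ens33 192.168.153.135
#   hostnamectl set-hostname hadoop02
```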

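6. Passwordless SSH login between master and slaves

6.1 Generate the key pair

# Run on all three machines

[hadoopenv@hadoop01 ~]$ ssh-keygen -t rsa

6.2 Copy the public key

# Append hadoop01's public key to ~/.ssh/authorized_keys on the local machine and on the remote machines (repeat the same steps on the other two machines)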

[hadoopenv@hadoop01 ~]$ ssh-copy-id -i ~/.ssh/id_rsa.pub hadoop01

[hadoopenv@hadoop01 ~]$ ssh-copy-id -i ~/.ssh/id_rsa.pub hadoop02

[hadoopenv@hadoop01 ~]$ ssh-copy-id -i ~/.ssh/id_rsa.pub hadoop03

6.3 Verify passwordless login

ssh hadoop01

ssh hadoop02

ssh hadoop03
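Since the same copy and verify steps are repeated on each of the three machines, a loop saves some typing. The sketch below assumes the hostnames used in this article; `HOSTS`, `copy_keys` and `check_passwordless` are names made up for illustration.

```shell
# Sketch: push the local public key to every node, then verify each login.
# HOSTS and both function names are assumptions for illustration.
HOSTS="hadoop01 hadoop02 hadoop03"

copy_keys() {
  local h
  for h in $HOSTS; do
    ssh-copy-id -i ~/.ssh/id_rsa.pub "$h"
  done
}

# BatchMode=yes makes ssh fail instead of prompting for a password,
# so a zero exit status means key-based login works.
check_passwordless() {
  local h rc=0
  for h in $HOSTS; do
    if ssh -o BatchMode=yes "$h" true; then
      echo "$h: ok"
    else
      echo "$h: FAILED"
      rc=1
    fi
  done
  return $rc
}

# Run on each of the three machines (not executed here):
#   copy_keys && check_passwordless
```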

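7. Synchronize the cluster time

7.1 Configure the time server on the master node

7.1.1 Check whether the NTP package is installed

[hadoopenv@hadoop01 ~]$ rpm -qa | grep ntp

7.1.2 Install the NTP service

[hadoopenv@hadoop01 ~]$ sudo yum install ntp -y

7.1.3 Edit the NTP configuration file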

[hadoopenv@hadoop01 ~]$ sudo vi /etc/ntp.conf

# Reference configuration:

# Change 1 (allow hosts on the local network to query this server): uncomment the line

#restrict 192.168.1.0 mask 255.255.255.0 nomodify notrap

# and change it to the cluster's subnet

restrict 192.168.153.0 mask 255.255.255.0 nomodify notrap

# Change 2 (add the local clock as a time source so the server can serve the LAN)

server 127.127.1.0

fudge 127.127.1.0 stratum 10

# Change 3 (stop using the public servers): comment out the lines

server 0.centos.pool.ntp.org iburst

server 1.centos.pool.ntp.org iburst

server 2.centos.pool.ntp.org iburst

server 3.centos.pool.ntp.org iburst

# so that they read

#server 0.centos.pool.ntp.org iburst

#server 1.centos.pool.ntp.org iburst

#server 2.centos.pool.ntp.org iburst

#server 3.centos.pool.ntp.org iburst
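Commenting out the four public pool servers is a mechanical edit, so it can also be done with sed instead of by hand. The following sketch assumes GNU sed and the CentOS default `server N.centos.pool.ntp.org` lines; `disable_pool_servers` is a hypothetical helper name.

```shell
# Sketch: comment out the public pool servers in an ntp.conf-style file.
# disable_pool_servers is a hypothetical helper; it relies on GNU sed (-i, -E).
disable_pool_servers() {
  local file="$1"
  sed -i -E 's/^(server [0-9]+\.centos\.pool\.ntp\.org.*)$/#\1/' "$file"
}

# Example (not executed here; back up the file first, run with root privileges):
#   disable_pool_servers /etc/ntp.conf
```

Lines that are already commented out, and the local clock entry `server 127.127.1.0`, do not match the pattern and are left alone.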

7.1.4 Sync the hardware clock (BIOS) with the system time

[hadoopenv@hadoop01 ~]$ sudo vi /etc/sysconfig/ntpd

# Reference configuration:

# Command line options for ntpd

SYNC_HWCLOCK=yes

OPTIONS="-u ntp:ntp -p /var/run/ntpd.pid -g"

7.1.5 Start the NTP service and test

# Start the ntpd service

[hadoopenv@hadoop01 ~]$ sudo systemctl start ntpd

# Check the ntpd status

[hadoopenv@hadoop01 ~]$ systemctl status ntpd

● ntpd.service - Network Time Service

Loaded: loaded (/usr/lib/systemd/system/ntpd.service; disabled; vendor preset: disabled)

Active: active (running) since 日 2020-02-16 19:14:17 CST; 7s ago

Process: 32513 ExecStart=/usr/sbin/ntpd -u ntp:ntp $OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 32514 (ntpd)

CGroup: /system.slice/ntpd.service

└─32514 /usr/sbin/ntpd -u ntp:ntp -u ntp:ntp -p /var/run/ntpd.pid -g

# Enable start on boot

[hadoopenv@hadoop01 ~]$ sudo systemctl enable ntpd.service

Created symlink from /etc/systemd/system/multi-user.target.wants/ntpd.service to /usr/lib/systemd/system/ntpd.service.

# Test

[hadoopenv@hadoop01 ~]$ ntpstat

synchronised to local net (127.127.1.0) at stratum 11

time correct to within 7948 ms

polling server every 64 s

[hadoopenv@hadoop01 ~]$ sudo ntpq -p

remote refid st t when poll reach delay offset jitter

==============================================================================

*LOCAL(0) .LOCL. 10 l 35 64 1 0.000 0.000 0.000

[hadoopenv@hadoop01 ~]$

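7.2 Synchronize the slave nodes with the master node

7.2.1 Stop the ntpd service on the nodes that are not the time server (the slave nodes here)

# Stop the ntpd service

[hadoopenv@hadoop02 ~]$ sudo systemctl stop ntpd.service

# Disable start on boot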

[hadoopenv@hadoop02 ~]$ sudo systemctl disable ntpd.service

7.2.2 Synchronize with the master node

- Manual synchronization

[hadoopenv@hadoop02 ~]$ sudo ntpdate hadoop01

24 Nov 11:25:13 ntpdate[2878]: step time server 192.168.239.125 offset 24520304.363894 sec

[hadoopenv@hadoop02 ~]$ date

2020年 11月 24日 星期二 11:25:20 CST

- Scheduled synchronization

# List the scheduled tasks on the slave nodes

[hadoopenv@hadoop02 ~]$ sudo crontab -l

[hadoopenv@hadoop03 ~]$ sudo crontab -l

# Add a scheduled task

[hadoopenv@hadoop02 ~]$ sudo crontab -e

[hadoopenv@hadoop03 ~]$ sudo crontab -e

# Reference configuration:

# Synchronize with the time server every 10 minutes on the other machines

*/10 * * * * /usr/sbin/ntpdate hadoop01
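The cron entry can also be installed without opening an editor, which helps when the same line has to go onto several slave nodes. Below is a minimal sketch that adds the line only if it is not already present; `append_once` is a hypothetical helper that filters crontab text from stdin to stdout.

```shell
# Sketch: append a line to crontab text read from stdin unless it is already there.
# append_once is a hypothetical helper name.
append_once() {
  local line="$1" current
  current=$(cat)
  [ -n "$current" ] && printf '%s\n' "$current"
  if ! printf '%s\n' "$current" | grep -qxF -- "$line"; then
    printf '%s\n' "$line"
  fi
}

# Example for a slave node (not executed here):
#   sudo crontab -l 2>/dev/null \
#     | append_once '*/10 * * * * /usr/sbin/ntpdate hadoop01' \
#     | sudo crontab -
```

Running the pipeline a second time leaves the crontab unchanged, so it is safe to repeat.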

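8. Install and configure Hadoop on the master node

8.1 Install

# Same procedure as for the JDK ("hadoop" stands for the uploaded archive's file name)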

cd /opt/software

rz

tar -zxvf hadoop -C ../model

8.2 Configure

# Edit the environment variables

sudo vim /etc/profile

# Reference configuration:

export JAVA_HOME=/opt/model/jdk1.8

export CLASSPATH=.:$JAVA_HOME/lib

export HADOOP_HOME=/opt/model/hadoop-2.7.7

export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

# Apply the changes

source /etc/profile

9. Edit the configuration files on the master node

9.1 Create the required directories

[hadoopenv@hadoop01 hadoop-2.7.7]$ mkdir /opt/model/hadoop-2.7.7/tmp

[hadoopenv@hadoop01 hadoop-2.7.7]$ mkdir -p /opt/model/hadoop-2.7.7/dfs/namenode_data

[hadoopenv@hadoop01 hadoop-2.7.7]$ mkdir -p /opt/model/hadoop-2.7.7/dfs/datanode_data

[hadoopenv@hadoop01 hadoop-2.7.7]$ mkdir -p /opt/model/hadoop-2.7.7/checkpoint/dfs/cname

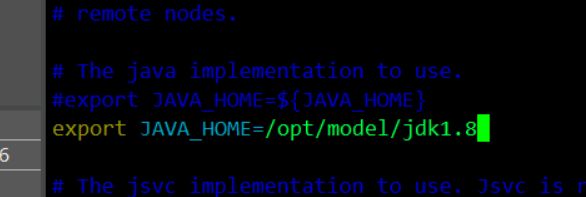

9.2 hadoop-env.sh

export JAVA_HOME=/opt/model/jdk1.8

9.3 core-site.xml

<configuration>

<property>

<!-- Address of the NameNode, the master of HDFS -->

<name>fs.defaultFS</name>

<value>hdfs://hadoop01:9000</value>

</property>

<property>

<!-- Directory for files that Hadoop generates at run time (a plain local path, not a file:// URI) -->

<name>hadoop.tmp.dir</name>

<value>/opt/model/hadoop-2.7.7/tmp</value>

</property>

<property>

<!-- I/O buffer size in bytes; the default is 4096 (4 KB) -->

<name>io.file.buffer.size</name>

<value>4096</value>

</property>

</configuration>
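After editing, it is worth reading a value back to confirm that the file says what was intended. Once Hadoop is on the PATH, `hdfs getconf -confKey fs.defaultFS` does this. Before that, a rough text-based lookup such as the sketch below can serve. `get_prop` is a hypothetical helper, and it assumes each `<name>` and `<value>` element sits on its own line, as in the files shown in this article.

```shell
# Sketch: print the <value> that follows a given <name> in a Hadoop *-site.xml.
# get_prop is a hypothetical helper; it is a line-based lookup, not an XML parser.
get_prop() {
  local file="$1" key="$2"
  awk -v key="$key" '
    index($0, "<name>" key "</name>") { found = 1; next }
    found && /<value>/ {
      sub(/.*<value>/, ""); sub(/<\/value>.*/, "")
      print; exit
    }
  ' "$file"
}

# Example (not executed here):
#   get_prop "$HADOOP_HOME/etc/hadoop/core-site.xml" fs.defaultFS
```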

9.4 hdfs-site.xml

<configuration>

<property>

<!-- Block size; the default is 128 MB (dfs.block.size is the deprecated name of this key) -->

<name>dfs.blocksize</name>

<value>134217728</value>

</property>

<property>

<!-- Number of replicas; defaults to 3 if not set -->

<name>dfs.replication</name>

<value>3</value>

</property>

<property>

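<!-- Directory where the SecondaryNameNode stores its checkpoints (fs.checkpoint.dir is the deprecated name of this key) -->

<name>dfs.namenode.checkpoint.dir</name>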

<value>file:///opt/model/hadoop-2.7.7/checkpoint/dfs/cname</value>

</property>

<property>

<!-- Where the NameNode stores its metadata (dfs.name.dir is the deprecated name of this key) -->

<name>dfs.namenode.name.dir</name>

<value>file:///opt/model/hadoop-2.7.7/dfs/namenode_data</value>

</property>

<property>

<!-- Where the DataNode stores its block data (dfs.data.dir is the deprecated name of this key) -->

<name>dfs.datanode.data.dir</name>

<value>file:///opt/model/hadoop-2.7.7/dfs/datanode_data</value>

</property>

<property>

<!-- Web address of the SecondaryNameNode -->

<name>dfs.namenode.secondary.http-address</name>

<value>hadoop02:50090</value>

</property>

<property>

<!-- HDFS permission checking; false disables it (dfs.permissions is the deprecated name of this key) -->

<name>dfs.permissions.enabled</name>

<value>false</value>

</property>

</configuration>

9.5 mapred-site.xml

<configuration>

<property>

<!-- Run MapReduce on YARN -->

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<!-- IPC address of the JobHistoryServer -->

<name>mapreduce.jobhistory.address</name>

<value>hadoop01:10020</value>

</property>

<property>

<!-- Web UI address of the JobHistoryServer -->

<name>mapreduce.jobhistory.webapp.address</name>

<value>hadoop01:19888</value>

</property>

</configuration>

9.6 yarn-site.xml

<configuration>

<property>

<!-- Host of the ResourceManager, the master of YARN -->

<name>yarn.resourcemanager.hostname</name>

<value>hadoop01</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>hadoop01:8032</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>hadoop01:8088</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>hadoop01:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>hadoop01:8031</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>hadoop01:8033</value>

</property>

<property>

<!-- Auxiliary service that NodeManagers run to serve shuffle data to reducers -->

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<!-- Enable log aggregation -->

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<property>

<!-- Keep aggregated logs for 7 days -->

<name>yarn.log-aggregation.retain-seconds</name>

<value>604800</value>

</property>

</configuration>

9.7 master

[hadoopenv@hadoop01 hadoop]$ vim master

hadoop01

9.8 slaves

hadoop01

hadoop02

hadoop03

10. Distribute Hadoop 2.7.7 to the slave nodes

Note: after distribution, configure the Hadoop environment variables on each slave node as well.

10.1 Manually

# Copy the installation to hadoop02 (no sudo: the directory belongs to hadoopenv, and running scp as root would bypass the SSH keys set up in step 6)

[hadoopenv@hadoop01 hadoop]$ scp -r /opt/model/hadoop-2.7.7/ hadoopenv@hadoop02:/opt/model

or

[hadoopenv@hadoop01 hadoop]$ scp -r /opt/model/hadoop-2.7.7/ hadoop02:/opt/model

# Copy the installation to hadoop03

[hadoopenv@hadoop01 hadoop]$ scp -r /opt/model/hadoop-2.7.7/ hadoopenv@hadoop03:/opt/model

or

[hadoopenv@hadoop01 hadoop]$ scp -r /opt/model/hadoop-2.7.7/ hadoop03:/opt/model

10.2 With a shell script

cp-config.sh

#!/bin/bash

# cp-config.sh: copy a file or directory to the same path on the other nodes

# Make sure an argument was given

if [ $# -eq 0 ]; then

echo "no args"

exit 1

fi

# File or directory name

p1=$1

fname=$(basename "$p1")

echo "fname=$fname"

# Absolute path of the parent directory

pdir=$(cd "$(dirname "$p1")" && pwd -P)

echo "pdir=$pdir"

# Current user name

user=$(whoami)

# Copy to hadoop02 and hadoop03

for (( host = 2; host < 4; host++ )); do

echo "------hadoop0$host------"

scp -r "$pdir/$fname" "$user@hadoop0$host:$pdir"

done

echo "------Distribution complete------"

Run it: [hadoopenv@hadoop01 model]$ ./cp-config.sh /opt/model/hadoop-2.7.7/

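IV. Start the Cluster (run on the master node)

Start the Hadoop cluster on hadoop01. The related services on hadoop02 and hadoop03 are started as well.

1. Initialize

# Format the file system before the very first start, and only then; do not run this command again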

[hadoopenv@hadoop01 ~]$ hdfs namenode -format

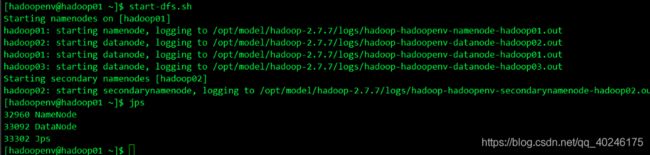
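Formatting writes a `current/VERSION` file into the NameNode metadata directory, so the presence of that file is a simple signal that the step has already been done. The sketch below wraps the format command in such a check. `safe_format` is a hypothetical wrapper, and the default path is the `namenode_data` directory created in step 9.1.

```shell
# Sketch: refuse to format when the NameNode directory already holds metadata.
# safe_format is a hypothetical wrapper; the default path is the
# namenode_data directory created earlier in this article.
safe_format() {
  local name_dir="${1:-/opt/model/hadoop-2.7.7/dfs/namenode_data}"
  if [ -f "$name_dir/current/VERSION" ]; then
    echo "already formatted: $name_dir (skipping)"
    return 1
  fi
  hdfs namenode -format
}

# Example (not executed here): safe_format
```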

2. Start the HDFS services

[hadoopenv@hadoop01 ~]$ start-dfs.sh

[hadoopenv@hadoop01 ~]$ jps

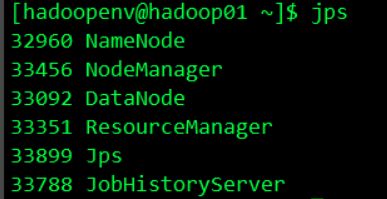

3. Start the YARN services

[hadoopenv@hadoop01 ~]$ start-yarn.sh

[hadoopenv@hadoop01 ~]$ jps

4. Start the JobHistoryServer

[hadoopenv@hadoop01 ~]$ mr-jobhistory-daemon.sh start historyserver

[hadoopenv@hadoop01 ~]$ jps

5. Check the processes on each machine
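With passwordless SSH in place, the `jps` check can be run on every node from the master in one go. This is a sketch under a few assumptions: `jps_all` is a made-up name, the default host list is the one used in this article, and `/etc/profile` is sourced explicitly because a non-interactive SSH command does not load it.

```shell
# Sketch: list the Java processes on every node from the master.
# jps_all and the default host list are assumptions for illustration.
jps_all() {
  local h
  [ $# -eq 0 ] && set -- hadoop01 hadoop02 hadoop03
  for h in "$@"; do
    echo "------ $h ------"
    # Load /etc/profile so that jps (from $JAVA_HOME/bin) is on the PATH
    ssh "$h" 'source /etc/profile; jps'
  done
}

# Example (not executed here): jps_all
```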

5.1、hadoop01

5.2、hadoop02

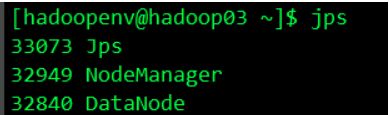

5.3、hadoop03

6、查看结果

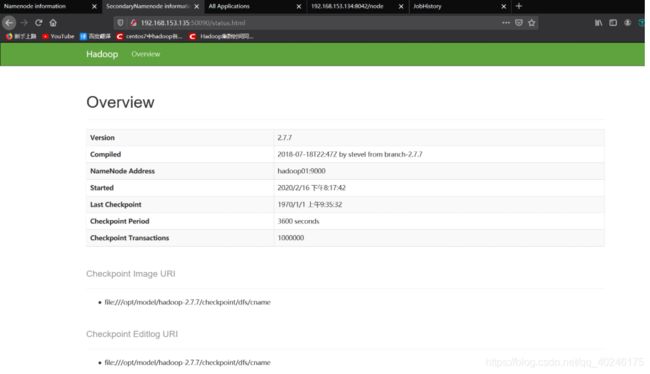

6.1、NameNode

http://192.168.153.134:50070

6.2、SecondaryNameNode

http://192.168.153.135:50090

6.3、ResourceManager

http://192.168.153.134:8088

6.4、NodeManager

http://192.168.153.134:8042

6.5、JobHistoryServer

http://192.168.153.134:19888
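For a quick check from the command line, the same list of addresses can be printed and a port probed before opening a browser. This is a sketch only: `web_ui_urls` and `port_open` are hypothetical helpers, the ports are the ones listed above, and `port_open` relies on bash's `/dev/tcp` pseudo-device together with `timeout` from coreutils.

```shell
# Sketch: print the web UI URLs used in this article.
# web_ui_urls and port_open are hypothetical helpers.
# Usage: web_ui_urls <master IP> <SecondaryNameNode IP>
web_ui_urls() {
  local master="$1" secondary="$2"
  printf '%s %s\n' NameNode "http://$master:50070"
  printf '%s %s\n' SecondaryNameNode "http://$secondary:50090"
  printf '%s %s\n' ResourceManager "http://$master:8088"
  printf '%s %s\n' NodeManager "http://$master:8042"
  printf '%s %s\n' JobHistoryServer "http://$master:19888"
}

# Succeeds if a TCP connection to host $1, port $2 opens within 3 seconds
port_open() {
  timeout 3 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

web_ui_urls 192.168.153.134 192.168.153.135
# Example probe (not executed here): port_open 192.168.153.134 50070 && echo up
```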

V. Benchmarking

1. Write test

[hadoopenv@hadoop01 mapreduce]$ hadoop jar /opt/model/hadoop-2.7.7/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.7.7-tests.jar TestDFSIO -write -nrFiles 10 -size 100MB

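The results can be viewed directly in the web UIs.

Port 50070 shows the newly created directory, and port 19888 shows the corresponding job logs.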
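On small demo VMs it helps to estimate how much disk the write test will occupy before running it. With 10 files of 100 MB and the replication factor of 3 set in hdfs-site.xml, the arithmetic is sketched below; `dfsio_footprint_mb` is a hypothetical helper and works in whole megabytes.

```shell
# Sketch: estimate the data volume of a TestDFSIO write run.
# dfsio_footprint_mb is a hypothetical helper; sizes are in MB.
# Usage: dfsio_footprint_mb <nrFiles> <size per file in MB> [replication]
dfsio_footprint_mb() {
  local nr_files="$1" size_mb="$2" replication="${3:-3}"
  local logical=$(( nr_files * size_mb ))
  echo "logical=${logical}MB raw=$(( logical * replication ))MB"
}

dfsio_footprint_mb 10 100 3   # prints: logical=1000MB raw=3000MB
```

The raw figure is spread across all DataNodes, so each of the three nodes should have roughly a third of it free.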

2. Read test

[hadoopenv@hadoop01 mapreduce]$ hadoop jar /opt/model/hadoop-2.7.7/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.7.7-tests.jar TestDFSIO -read -nrFiles 10 -size 100MB

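The results can be viewed directly in the web UIs.

Port 50070 shows the newly created directory, and port 19888 shows the corresponding job logs.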

3. Clean up the test data

[hadoopenv@hadoop01 mapreduce]$ hadoop jar /opt/model/hadoop-2.7.7/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.7.7-tests.jar TestDFSIO -clean
