Error when converting an ONNX model to a TensorRT .trt engine: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64

Converting an ONNX model to a TensorRT .trt engine fails with: [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

Contents:

- 1 Cause of the error

- 2 Fixes

- 2.1 Fix 1 (tested, works)

- 2.2 Fix 2: re-export the ONNX model with INT32 precision (not yet tried)

My environment:

- OS: Ubuntu 18.04.1

- CUDA: 10.2.89

- cuDNN: 7.6.5

- torch: 1.5.0

- torchvision: 0.6.0

- mmcv: 0.5.5

- Project code: mmdetection v2.0.0 (v2.0.0 was officially released on 2020-05-06)

- TensorRT: 7.0.0.11

- uff: 0.6.5

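1 Cause of the error

I ran into this error after converting an mmdetection model to ONNX and then converting that ONNX model to a TensorRT engine. The message "Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32." tells us the following:

- The exported ONNX model contains INT64 weights.

- TensorRT does not natively support INT64.

- TensorRT does support INT32, so one option is to change the ONNX model's integer type to INT32 and then convert again.

Note that in the log below these messages are tagged [W] (warnings). The lines that actually stop the conversion are the assertion on the Upsample node (scales_input.is_weights()) and the [E] entries that follow it.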
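To see which tensors are behind the warning, one can list the INT64 initializers in the exported model and check whether their values would survive a cast down to INT32. The following is a minimal sketch, assuming the `onnx` Python package is installed. The helper names `fits_in_int32` and `list_int64_initializers` are made up for illustration and are not part of any library.

```python
INT32_MIN = -2**31
INT32_MAX = 2**31 - 1


def fits_in_int32(values):
    """Return True if every integer in `values` is representable as INT32."""
    return all(INT32_MIN <= int(v) <= INT32_MAX for v in values)


def list_int64_initializers(model_path):
    """Yield (name, fits) for each INT64 initializer stored in an ONNX file.

    The `onnx` package is imported lazily so that the pure-Python range
    check above can be used without it.
    """
    import onnx
    from onnx import numpy_helper

    model = onnx.load(model_path)
    for init in model.graph.initializer:
        if init.data_type == onnx.TensorProto.INT64:
            values = numpy_helper.to_array(init).ravel().tolist()
            yield init.name, fits_in_int32(values)


# Example call (file name taken from this post):
# for name, fits in list_int64_initializers("retinate_hat_hair_beard.onnx"):
#     print(name, "fits in INT32" if fits else "out of INT32 range")
```

This sketch only inspects graph initializers. INT64 values that the exporter stored inside Constant nodes are not covered, so an empty result does not prove the model is free of INT64 data.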

Error output:

(mmdetection) shl@zfcv:~/TensorRT-7.0.0.11/bin$ ./trtexec --onnx=retinate_hat_hair_beard.onnx --saveEngine=retinate_hat_hair_beard.trt --device=1

&&&& RUNNING TensorRT.trtexec # ./trtexec --onnx=retinate_hat_hair_beard.onnx --saveEngine=retinate_hat_hair_beard.trt --device=1

[07/31/2020-13:56:39] [I] === Model Options ===

[07/31/2020-13:56:39] [I] Format: ONNX

[07/31/2020-13:56:39] [I] Model: retinate_hat_hair_beard.onnx

[07/31/2020-13:56:39] [I] Output:

[07/31/2020-13:56:39] [I] === Build Options ===

[07/31/2020-13:56:39] [I] Max batch: 1

[07/31/2020-13:56:39] [I] Workspace: 16 MB

[07/31/2020-13:56:39] [I] minTiming: 1

[07/31/2020-13:56:39] [I] avgTiming: 8

[07/31/2020-13:56:39] [I] Precision: FP32

[07/31/2020-13:56:39] [I] Calibration:

[07/31/2020-13:56:39] [I] Safe mode: Disabled

[07/31/2020-13:56:39] [I] Save engine: retinate_hat_hair_beard.trt

[07/31/2020-13:56:39] [I] Load engine:

[07/31/2020-13:56:39] [I] Inputs format: fp32:CHW

[07/31/2020-13:56:39] [I] Outputs format: fp32:CHW

[07/31/2020-13:56:39] [I] Input build shapes: model

[07/31/2020-13:56:39] [I] === System Options ===

[07/31/2020-13:56:39] [I] Device: 1

[07/31/2020-13:56:39] [I] DLACore:

[07/31/2020-13:56:39] [I] Plugins:

[07/31/2020-13:56:39] [I] === Inference Options ===

[07/31/2020-13:56:39] [I] Batch: 1

[07/31/2020-13:56:39] [I] Iterations: 10

[07/31/2020-13:56:39] [I] Duration: 3s (+ 200ms warm up)

[07/31/2020-13:56:39] [I] Sleep time: 0ms

[07/31/2020-13:56:39] [I] Streams: 1

[07/31/2020-13:56:39] [I] ExposeDMA: Disabled

[07/31/2020-13:56:39] [I] Spin-wait: Disabled

[07/31/2020-13:56:39] [I] Multithreading: Disabled

[07/31/2020-13:56:39] [I] CUDA Graph: Disabled

[07/31/2020-13:56:39] [I] Skip inference: Disabled

[07/31/2020-13:56:39] [I] Input inference shapes: model

[07/31/2020-13:56:39] [I] Inputs:

[07/31/2020-13:56:39] [I] === Reporting Options ===

[07/31/2020-13:56:39] [I] Verbose: Disabled

[07/31/2020-13:56:39] [I] Averages: 10 inferences

[07/31/2020-13:56:39] [I] Percentile: 99

[07/31/2020-13:56:39] [I] Dump output: Disabled

[07/31/2020-13:56:39] [I] Profile: Disabled

[07/31/2020-13:56:39] [I] Export timing to JSON file:

[07/31/2020-13:56:39] [I] Export output to JSON file:

[07/31/2020-13:56:39] [I] Export profile to JSON file:

[07/31/2020-13:56:39] [I]

----------------------------------------------------------------

Input filename: retinate_hat_hair_beard.onnx

ONNX IR version: 0.0.6

Opset version: 9

Producer name: pytorch

Producer version: 1.5

Domain:

Model version: 0

Doc string:

----------------------------------------------------------------

[07/31/2020-13:56:40] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[... the same warning repeated 54 more times ...]

While parsing node number 191 [Upsample]:

ERROR: builtin_op_importers.cpp:3240 In function importUpsample:

[8] Assertion failed: scales_input.is_weights()

[07/31/2020-13:56:40] [E] Failed to parse onnx file

[07/31/2020-13:56:40] [E] Parsing model failed

[07/31/2020-13:56:40] [E] Engine creation failed

[07/31/2020-13:56:40] [E] Engine set up failed

&&&& FAILED TensorRT.trtexec # ./trtexec --onnx=retinate_hat_hair_beard.onnx --saveEngine=retinate_hat_hair_beard.trt --device=1

(mmdetection) shl@zfcv:~/TensorRT-7.0.0.11/bin$ ls
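With this many repeated warnings it is easy to miss the lines that matter. As a rough aid, the sketch below groups timestamped trtexec log lines by severity, so that repeated warnings collapse into a count and the [E] lines stand out. The function name `summarize_trtexec_log` is hypothetical, and the line format is inferred only from the log shown above.

```python
import re
from collections import Counter

# Matches lines such as "[07/31/2020-13:56:40] [W] [TRT] some message".
LINE_RE = re.compile(r"^\[(?P<ts>[^\]]+)\] \[(?P<level>[IWE])\]\s*(?P<msg>.*)$")


def summarize_trtexec_log(text):
    """Count messages per severity and collect distinct warnings and all errors."""
    levels = Counter()    # severity letter -> number of lines
    warnings = Counter()  # warning text -> number of repetitions
    errors = []           # error messages in order of appearance
    for line in text.splitlines():
        match = LINE_RE.match(line.strip())
        if not match:
            continue  # lines without a timestamp prefix are skipped
        level, msg = match.group("level"), match.group("msg")
        levels[level] += 1
        if level == "W":
            warnings[msg] += 1
        elif level == "E":
            errors.append(msg)
    return levels, warnings, errors


sample = """\
[07/31/2020-13:56:40] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.
[07/31/2020-13:56:40] [W] [TRT] onnx2trt_utils.cpp:198: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.
[07/31/2020-13:56:40] [E] Failed to parse onnx file
"""

levels, warnings, errors = summarize_trtexec_log(sample)
for message, count in warnings.items():
    print(count, "x", message[:60])
print("errors:", errors)
```

Lines without a timestamp, such as "While parsing node number 191 [Upsample]:" and the assertion that follows it, are skipped by this sketch and still need to be read directly in the log.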

2 Fixes

2.1 Fix 1 (tested, works)

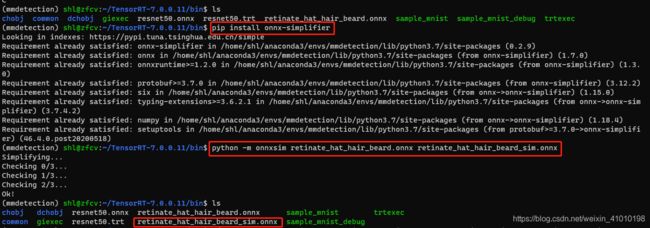

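The graph of the exported .onnx model may be too complex, so we simplify it first.

1. Install onnx-simplifier

pip install onnx-simplifier

2. Convert the previously exported ONNX model into a simpler ONNX model

python -m onnxsim retinate_hat_hair_beard.onnx retinate_hat_hair_beard_sim.onnx

3. Then convert the simplified ONNX model to a TensorRT .trt engine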

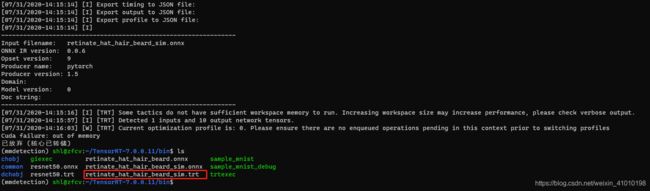

./trtexec --onnx=retinate_hat_hair_beard_sim.onnx --saveEngine=retinate_hat_hair_beard_sim.trt --device=1

(mmdetection) shl@zfcv:~/TensorRT-7.0.0.11/bin$ ./trtexec --onnx=retinate_hat_hair_beard_sim.onnx --saveEngine=retinate_hat_hair_beard_sim.trt --device=1

&&&& RUNNING TensorRT.trtexec # ./trtexec --onnx=retinate_hat_hair_beard_sim.onnx --saveEngine=retinate_hat_hair_beard_sim.trt --device=1

[07/31/2020-14:15:14] [I] === Model Options ===

[07/31/2020-14:15:14] [I] Format: ONNX

[07/31/2020-14:15:14] [I] Model: retinate_hat_hair_beard_sim.onnx

[07/31/2020-14:15:14] [I] Output:

[07/31/2020-14:15:14] [I] === Build Options ===

[07/31/2020-14:15:14] [I] Max batch: 1

[07/31/2020-14:15:14] [I] Workspace: 16 MB

[07/31/2020-14:15:14] [I] minTiming: 1

[07/31/2020-14:15:14] [I] avgTiming: 8

[07/31/2020-14:15:14] [I] Precision: FP32

[07/31/2020-14:15:14] [I] Calibration:

[07/31/2020-14:15:14] [I] Safe mode: Disabled

[07/31/2020-14:15:14] [I] Save engine: retinate_hat_hair_beard_sim.trt

[07/31/2020-14:15:14] [I] Load engine:

[07/31/2020-14:15:14] [I] Inputs format: fp32:CHW

[07/31/2020-14:15:14] [I] Outputs format: fp32:CHW

[07/31/2020-14:15:14] [I] Input build shapes: model

[07/31/2020-14:15:14] [I] === System Options ===

[07/31/2020-14:15:14] [I] Device: 1

[07/31/2020-14:15:14] [I] DLACore:

[07/31/2020-14:15:14] [I] Plugins:

[07/31/2020-14:15:14] [I] === Inference Options ===

[07/31/2020-14:15:14] [I] Batch: 1

[07/31/2020-14:15:14] [I] Iterations: 10

[07/31/2020-14:15:14] [I] Duration: 3s (+ 200ms warm up)

[07/31/2020-14:15:14] [I] Sleep time: 0ms

[07/31/2020-14:15:14] [I] Streams: 1

[07/31/2020-14:15:14] [I] ExposeDMA: Disabled

[07/31/2020-14:15:14] [I] Spin-wait: Disabled

[07/31/2020-14:15:14] [I] Multithreading: Disabled

[07/31/2020-14:15:14] [I] CUDA Graph: Disabled

[07/31/2020-14:15:14] [I] Skip inference: Disabled

[07/31/2020-14:15:14] [I] Input inference shapes: model

[07/31/2020-14:15:14] [I] Inputs:

[07/31/2020-14:15:14] [I] === Reporting Options ===

[07/31/2020-14:15:14] [I] Verbose: Disabled

[07/31/2020-14:15:14] [I] Averages: 10 inferences

[07/31/2020-14:15:14] [I] Percentile: 99

[07/31/2020-14:15:14] [I] Dump output: Disabled

[07/31/2020-14:15:14] [I] Profile: Disabled

[07/31/2020-14:15:14] [I] Export timing to JSON file:

[07/31/2020-14:15:14] [I] Export output to JSON file:

[07/31/2020-14:15:14] [I] Export profile to JSON file:

[07/31/2020-14:15:14] [I]

----------------------------------------------------------------

Input filename: retinate_hat_hair_beard_sim.onnx

ONNX IR version: 0.0.6

Opset version: 9

Producer name: pytorch

Producer version: 1.5

Domain:

Model version: 0

Doc string:

----------------------------------------------------------------

[07/31/2020-14:15:16] [I] [TRT] Some tactics do not have sufficient workspace memory to run. Increasing workspace size may increase performance, please check verbose output.

[07/31/2020-14:15:57] [I] [TRT] Detected 1 inputs and 10 output network tensors.

[07/31/2020-14:16:03] [W] [TRT] Current optimization profile is: 0. Please ensure there are no enqueued operations pending in this context prior to switching profiles

Cuda failure: out of memory

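Aborted (core dumped)

With the simplified model, the INT64 warnings and the Upsample assertion no longer appear and parsing succeeds. The run shown here then stops with "Cuda failure: out of memory", which is a different problem from the parse error discussed in this post.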
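Steps 2 and 3 above can also be chained from a small script so that a failure in either one stops the run. The following is a sketch only: it reuses the exact commands from this post, the function names `build_commands` and `run_pipeline` are illustrative, and it assumes the script is started from the TensorRT `bin` directory with onnx-simplifier already installed.

```python
import subprocess


def build_commands(onnx_path, device=1, trtexec="./trtexec"):
    """Return the onnxsim and trtexec command lines from this post as argv lists."""
    suffix = ".onnx"
    stem = onnx_path[:-len(suffix)] if onnx_path.endswith(suffix) else onnx_path
    sim_path = stem + "_sim.onnx"   # simplified model written by onnxsim
    engine_path = stem + "_sim.trt"  # engine file written by trtexec
    simplify_cmd = ["python", "-m", "onnxsim", onnx_path, sim_path]
    trtexec_cmd = [
        trtexec,
        "--onnx=" + sim_path,
        "--saveEngine=" + engine_path,
        "--device=" + str(device),
    ]
    return [simplify_cmd, trtexec_cmd]


def run_pipeline(onnx_path, device=1):
    """Run both steps in order and stop at the first one that fails."""
    for cmd in build_commands(onnx_path, device):
        print("running:", " ".join(cmd))
        # check=True raises CalledProcessError on any non-zero exit status.
        subprocess.run(cmd, check=True)


# Example call (file name taken from this post):
# run_pipeline("retinate_hat_hair_beard.onnx", device=1)
```

Because `check=True` is used, a trtexec run that aborts, as in the log above, surfaces as a `CalledProcessError` instead of passing silently.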

2.2 Fix 2: re-export the ONNX model with INT32 precision (not yet tried)
