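YOLOv3 source code: module.py

This post explains how the program parses the cfg file and instantiates the network modules from it.

It also shows how each layer type in the network cfg maps to the corresponding module.

Source code: https://github.com/ultralytics/yolov3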

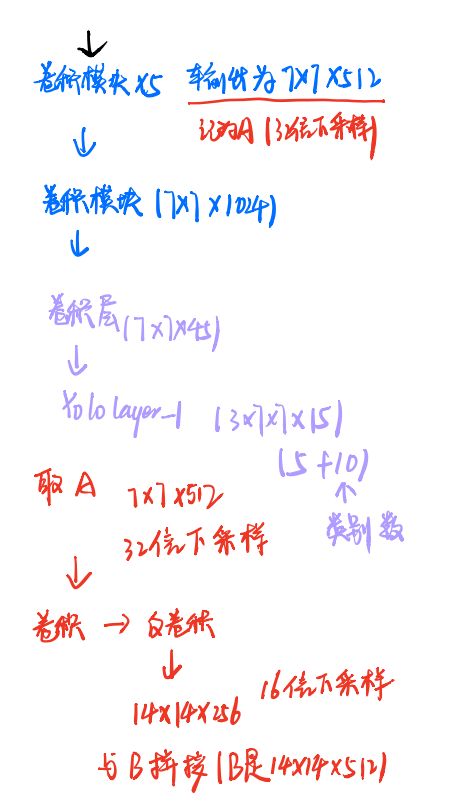

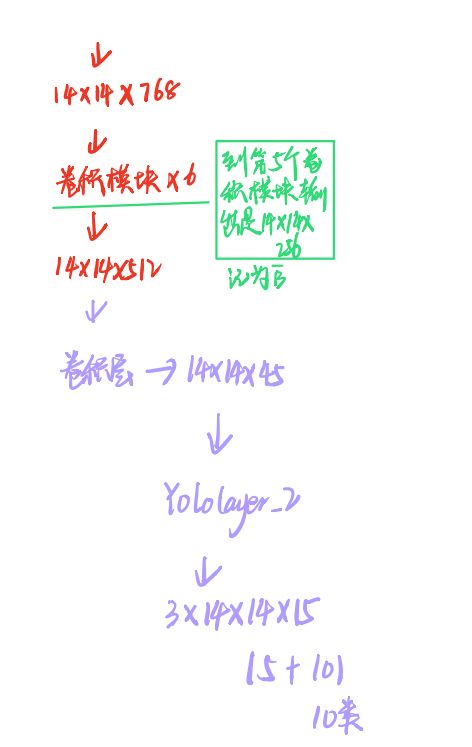

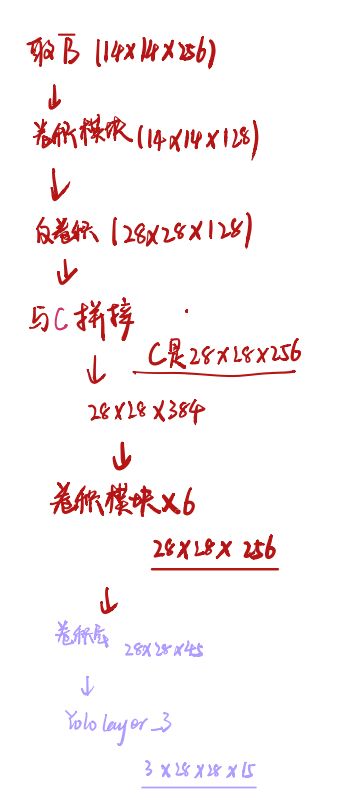

I. YOLOv3 flowchart

Drawn entirely by hand...

Here the input shape is 224 × 224 × 3.

The number of classes to detect is 10.

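II. The cfg file

1. General network settings

The coefficient used by the momentum optimizer.

The decay coefficient for the learning rate.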

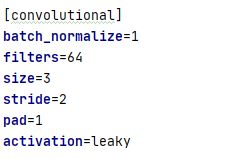
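To make the parsing step concrete, here is a minimal sketch of turning Darknet-style cfg text into a list of dictionaries, one per `[section]`. It is not the repository's own parser. The function name `parse_cfg_text` and the sample values (for example `momentum=0.9`) are assumptions chosen for illustration; only the 224 × 224 × 3 input and the 32-filter first convolution come from this post.

```python
def parse_cfg_text(text):
    """Parse Darknet-style cfg text into a list of dicts, one per [section]."""
    blocks = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        if line.startswith("[") and line.endswith("]"):
            # A new section starts: remember its type, e.g. "net" or "convolutional".
            blocks.append({"type": line[1:-1].strip()})
        else:
            # A key=value line belongs to the most recent section.
            key, value = line.split("=", 1)
            blocks[-1][key.strip()] = value.strip()
    return blocks


# Hypothetical sample; the values are illustrative, not copied from the real cfg.
SAMPLE_CFG = """
[net]
width=224
height=224
channels=3
momentum=0.9

[convolutional]
batch_normalize=1
filters=32
size=3
stride=1
pad=1
activation=leaky
"""

blocks = parse_cfg_text(SAMPLE_CFG)
net, layers = blocks[0], blocks[1:]
print(net["type"], net["momentum"])            # net 0.9
print(layers[0]["type"], layers[0]["filters"])  # convolutional 32
```

All values stay as strings in this sketch; a builder that instantiates modules would convert them to `int` or `float` as needed.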

2. Convolutional layer

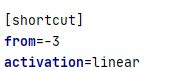

3. Shortcut layer

This corresponds to the shortcut (skip connection) in a residual block.

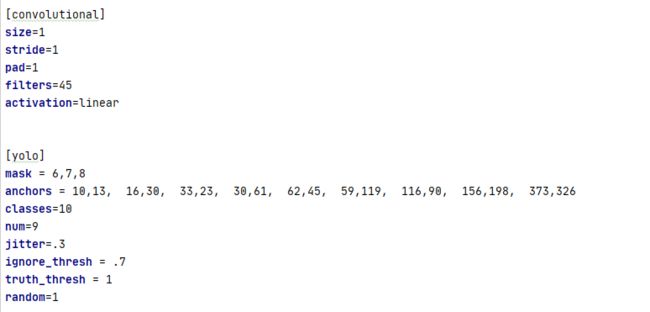

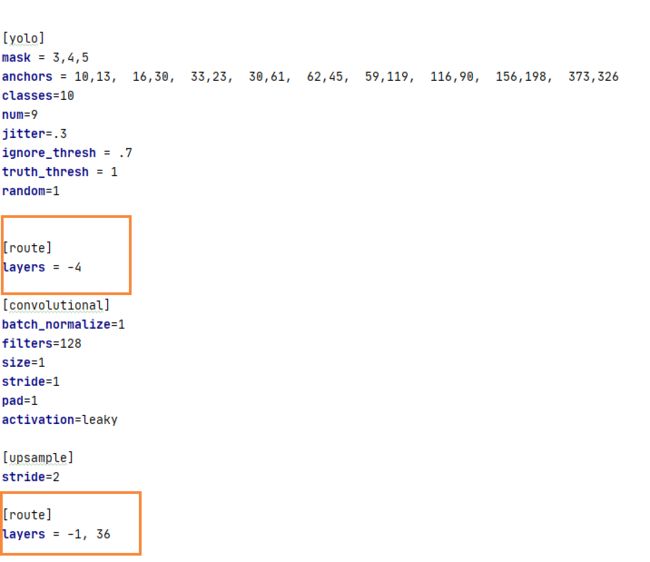

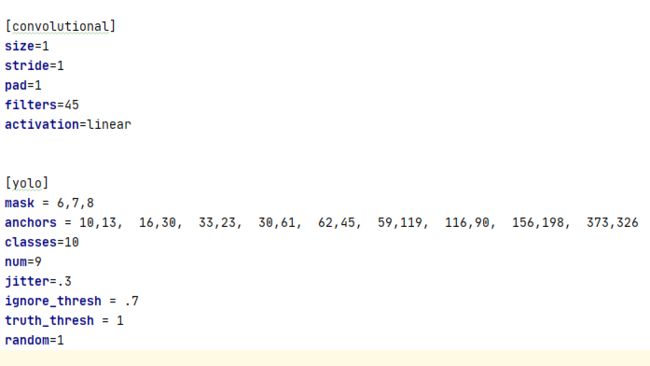

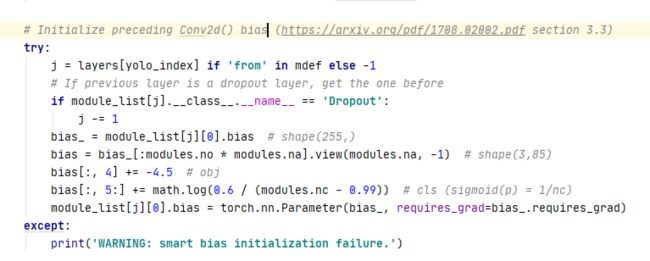

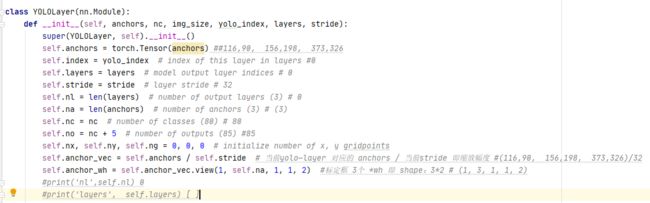

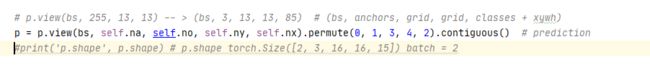
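The idea behind a shortcut can be sketched in a few lines: the output of a block is added element-wise to the block's input. The sketch below uses flat Python lists in place of tensors, and the names `shortcut` and `residual_block` are hypothetical; the repository itself works on PyTorch tensors.

```python
def shortcut(current, earlier):
    """Element-wise sum of two feature maps given as flat lists of equal length."""
    if len(current) != len(earlier):
        raise ValueError("shortcut needs feature maps of the same shape")
    return [a + b for a, b in zip(current, earlier)]


def residual_block(x, transform):
    """Apply `transform` to x, then add x back through the shortcut."""
    return shortcut(transform(x), x)


x = [1.0, 2.0, 3.0]
out = residual_block(x, lambda v: [0.5 * e for e in v])
print(out)  # [1.5, 3.0, 4.5]
```

Because the two inputs are summed, a shortcut never changes the shape of the feature map, which is why the layers on both sides of it must have matching channel counts.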

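4. The yolo layer and the conv layer before it

The convolutional layer just before a yolo layer effectively produces the detection output. I configured 10 classes here, so its 45 filters correspond to 3 × (5 + number of classes) = 3 × (5 + 10).

The 3 means that each grid cell predicts detections for three anchors.

The 5 is 4 + 1: the 4 is the box center coordinates plus its width and height, and the 1 indicates whether the box contains an object.

The mask indices in the yolo layer select which three anchors (that is, which scale) the layer uses.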
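The filter arithmetic and the role of `mask` can be checked with a short sketch. The anchor list below is the stock nine-anchor set shipped with the standard COCO YOLOv3 cfg, and the mask `[6, 7, 8]` is the one that cfg uses for its coarsest head; both are assumptions here, since the cfg in this post may define different values. The helper names are hypothetical.

```python
# Assumed: stock YOLOv3 COCO anchors as (width, height) pairs in pixels.
ANCHORS = [(10, 13), (16, 30), (33, 23), (30, 61), (62, 45),
           (59, 119), (116, 90), (156, 198), (373, 326)]


def head_filters(num_classes, anchors_per_cell=3):
    """Channels needed by the conv layer that feeds a yolo layer."""
    # Per anchor: 4 box values + 1 objectness score + one score per class.
    return anchors_per_cell * (5 + num_classes)


def select_anchors(anchors, mask):
    """Pick the anchors a yolo layer uses, given its mask indices."""
    return [anchors[i] for i in mask]


filters = head_filters(10)
coarse_anchors = select_anchors(ANCHORS, [6, 7, 8])
print(filters)         # 45
print(coarse_anchors)  # [(116, 90), (156, 198), (373, 326)]
```

With 10 classes each anchor carries 5 + 10 = 15 values, which matches the last dimension of the `YOLOLayer` output shapes in the model summary.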

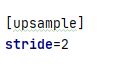

5. Upsample layer

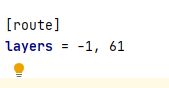
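As a rough illustration of this step, the sketch below doubles the spatial size of a 2-D grid by repeating each value, i.e. nearest-neighbor upsampling. That the layer uses nearest-neighbor interpolation with a factor of 2 is an assumption; it is consistent with the model summary, where `Upsample` has zero parameters and turns 7 × 7 maps into 14 × 14. The function name is hypothetical.

```python
def upsample_nearest(grid, scale=2):
    """Repeat every element `scale` times along both axes of a 2-D list."""
    out = []
    for row in grid:
        # Stretch the row horizontally, then emit it `scale` times vertically.
        wide = [value for value in row for _ in range(scale)]
        out.extend(list(wide) for _ in range(scale))
    return out


small = [[1, 2],
         [3, 4]]
big = upsample_nearest(small)
for row in big:
    print(row)
# [1, 1, 2, 2]
# [1, 1, 2, 2]
# [3, 3, 4, 4]
# [3, 3, 4, 4]
```

No weights are learned in this operation; it only changes the resolution so the result can be combined with an earlier, higher-resolution feature map.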

6. Route layer

This layer concatenates feature maps.
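The effect of a route on tensor shapes can be sketched without any tensors at all: the channel counts add up while height and width must agree. The helper `route_shape` is hypothetical, and the shapes used are taken from the model summary in this post.

```python
def route_shape(shapes):
    """Shape (C, H, W) after concatenating feature maps along the channel axis."""
    if len({(h, w) for _, h, w in shapes}) != 1:
        raise ValueError("route needs feature maps with the same height and width")
    channels = sum(c for c, _, _ in shapes)
    _, height, width = shapes[0]
    return (channels, height, width)


# Upsampled 256-channel map joined with a 512-channel backbone map.
print(route_shape([(256, 14, 14), (512, 14, 14)]))  # (768, 14, 14)
# A route with a single source simply forwards that feature map.
print(route_shape([(512, 7, 7)]))                   # (512, 7, 7)
```

This is the key difference from a shortcut: a shortcut adds values and keeps the channel count, whereas a route stacks channels.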

III. Printing the model summary

Model Summary: 222 layers, 6.15722e+07 parameters, 6.15722e+07 gradients

----------------------------------------------------------------

Layer (type) Output Shape Param #

================================================================

Conv2d-1 [-1, 32, 224, 224] 864

BatchNorm2d-2 [-1, 32, 224, 224] 64

LeakyReLU-3 [-1, 32, 224, 224] 0

Conv2d-4 [-1, 64, 112, 112] 18,432

BatchNorm2d-5 [-1, 64, 112, 112] 128

LeakyReLU-6 [-1, 64, 112, 112] 0

Conv2d-7 [-1, 32, 112, 112] 2,048

BatchNorm2d-8 [-1, 32, 112, 112] 64

LeakyReLU-9 [-1, 32, 112, 112] 0

Conv2d-10 [-1, 64, 112, 112] 18,432

BatchNorm2d-11 [-1, 64, 112, 112] 128

LeakyReLU-12 [-1, 64, 112, 112] 0

WeightedFeatureFusion-13 [-1, 64, 112, 112] 0

Conv2d-14 [-1, 128, 56, 56] 73,728

BatchNorm2d-15 [-1, 128, 56, 56] 256

LeakyReLU-16 [-1, 128, 56, 56] 0

Conv2d-17 [-1, 64, 56, 56] 8,192

BatchNorm2d-18 [-1, 64, 56, 56] 128

LeakyReLU-19 [-1, 64, 56, 56] 0

Conv2d-20 [-1, 128, 56, 56] 73,728

BatchNorm2d-21 [-1, 128, 56, 56] 256

LeakyReLU-22 [-1, 128, 56, 56] 0

WeightedFeatureFusion-23 [-1, 128, 56, 56] 0

Conv2d-24 [-1, 64, 56, 56] 8,192

BatchNorm2d-25 [-1, 64, 56, 56] 128

LeakyReLU-26 [-1, 64, 56, 56] 0

Conv2d-27 [-1, 128, 56, 56] 73,728

BatchNorm2d-28 [-1, 128, 56, 56] 256

LeakyReLU-29 [-1, 128, 56, 56] 0

WeightedFeatureFusion-30 [-1, 128, 56, 56] 0

Conv2d-31 [-1, 256, 28, 28] 294,912

BatchNorm2d-32 [-1, 256, 28, 28] 512

LeakyReLU-33 [-1, 256, 28, 28] 0

Conv2d-34 [-1, 128, 28, 28] 32,768

BatchNorm2d-35 [-1, 128, 28, 28] 256

LeakyReLU-36 [-1, 128, 28, 28] 0

Conv2d-37 [-1, 256, 28, 28] 294,912

BatchNorm2d-38 [-1, 256, 28, 28] 512

LeakyReLU-39 [-1, 256, 28, 28] 0

WeightedFeatureFusion-40 [-1, 256, 28, 28] 0

Conv2d-41 [-1, 128, 28, 28] 32,768

BatchNorm2d-42 [-1, 128, 28, 28] 256

LeakyReLU-43 [-1, 128, 28, 28] 0

Conv2d-44 [-1, 256, 28, 28] 294,912

BatchNorm2d-45 [-1, 256, 28, 28] 512

LeakyReLU-46 [-1, 256, 28, 28] 0

WeightedFeatureFusion-47 [-1, 256, 28, 28] 0

Conv2d-48 [-1, 128, 28, 28] 32,768

BatchNorm2d-49 [-1, 128, 28, 28] 256

LeakyReLU-50 [-1, 128, 28, 28] 0

Conv2d-51 [-1, 256, 28, 28] 294,912

BatchNorm2d-52 [-1, 256, 28, 28] 512

LeakyReLU-53 [-1, 256, 28, 28] 0

WeightedFeatureFusion-54 [-1, 256, 28, 28] 0

Conv2d-55 [-1, 128, 28, 28] 32,768

BatchNorm2d-56 [-1, 128, 28, 28] 256

LeakyReLU-57 [-1, 128, 28, 28] 0

Conv2d-58 [-1, 256, 28, 28] 294,912

BatchNorm2d-59 [-1, 256, 28, 28] 512

LeakyReLU-60 [-1, 256, 28, 28] 0

WeightedFeatureFusion-61 [-1, 256, 28, 28] 0

Conv2d-62 [-1, 128, 28, 28] 32,768

BatchNorm2d-63 [-1, 128, 28, 28] 256

LeakyReLU-64 [-1, 128, 28, 28] 0

Conv2d-65 [-1, 256, 28, 28] 294,912

BatchNorm2d-66 [-1, 256, 28, 28] 512

LeakyReLU-67 [-1, 256, 28, 28] 0

WeightedFeatureFusion-68 [-1, 256, 28, 28] 0

Conv2d-69 [-1, 128, 28, 28] 32,768

BatchNorm2d-70 [-1, 128, 28, 28] 256

LeakyReLU-71 [-1, 128, 28, 28] 0

Conv2d-72 [-1, 256, 28, 28] 294,912

BatchNorm2d-73 [-1, 256, 28, 28] 512

LeakyReLU-74 [-1, 256, 28, 28] 0

WeightedFeatureFusion-75 [-1, 256, 28, 28] 0

Conv2d-76 [-1, 128, 28, 28] 32,768

BatchNorm2d-77 [-1, 128, 28, 28] 256

LeakyReLU-78 [-1, 128, 28, 28] 0

Conv2d-79 [-1, 256, 28, 28] 294,912

BatchNorm2d-80 [-1, 256, 28, 28] 512

LeakyReLU-81 [-1, 256, 28, 28] 0

WeightedFeatureFusion-82 [-1, 256, 28, 28] 0

Conv2d-83 [-1, 128, 28, 28] 32,768

BatchNorm2d-84 [-1, 128, 28, 28] 256

LeakyReLU-85 [-1, 128, 28, 28] 0

Conv2d-86 [-1, 256, 28, 28] 294,912

BatchNorm2d-87 [-1, 256, 28, 28] 512

LeakyReLU-88 [-1, 256, 28, 28] 0

WeightedFeatureFusion-89 [-1, 256, 28, 28] 0

Conv2d-90 [-1, 512, 14, 14] 1,179,648

BatchNorm2d-91 [-1, 512, 14, 14] 1,024

LeakyReLU-92 [-1, 512, 14, 14] 0

Conv2d-93 [-1, 256, 14, 14] 131,072

BatchNorm2d-94 [-1, 256, 14, 14] 512

LeakyReLU-95 [-1, 256, 14, 14] 0

Conv2d-96 [-1, 512, 14, 14] 1,179,648

BatchNorm2d-97 [-1, 512, 14, 14] 1,024

LeakyReLU-98 [-1, 512, 14, 14] 0

WeightedFeatureFusion-99 [-1, 512, 14, 14] 0

Conv2d-100 [-1, 256, 14, 14] 131,072

BatchNorm2d-101 [-1, 256, 14, 14] 512

LeakyReLU-102 [-1, 256, 14, 14] 0

Conv2d-103 [-1, 512, 14, 14] 1,179,648

BatchNorm2d-104 [-1, 512, 14, 14] 1,024

LeakyReLU-105 [-1, 512, 14, 14] 0

WeightedFeatureFusion-106 [-1, 512, 14, 14] 0

Conv2d-107 [-1, 256, 14, 14] 131,072

BatchNorm2d-108 [-1, 256, 14, 14] 512

LeakyReLU-109 [-1, 256, 14, 14] 0

Conv2d-110 [-1, 512, 14, 14] 1,179,648

BatchNorm2d-111 [-1, 512, 14, 14] 1,024

LeakyReLU-112 [-1, 512, 14, 14] 0

WeightedFeatureFusion-113 [-1, 512, 14, 14] 0

Conv2d-114 [-1, 256, 14, 14] 131,072

BatchNorm2d-115 [-1, 256, 14, 14] 512

LeakyReLU-116 [-1, 256, 14, 14] 0

Conv2d-117 [-1, 512, 14, 14] 1,179,648

BatchNorm2d-118 [-1, 512, 14, 14] 1,024

LeakyReLU-119 [-1, 512, 14, 14] 0

WeightedFeatureFusion-120 [-1, 512, 14, 14] 0

Conv2d-121 [-1, 256, 14, 14] 131,072

BatchNorm2d-122 [-1, 256, 14, 14] 512

LeakyReLU-123 [-1, 256, 14, 14] 0

Conv2d-124 [-1, 512, 14, 14] 1,179,648

BatchNorm2d-125 [-1, 512, 14, 14] 1,024

LeakyReLU-126 [-1, 512, 14, 14] 0

WeightedFeatureFusion-127 [-1, 512, 14, 14] 0

Conv2d-128 [-1, 256, 14, 14] 131,072

BatchNorm2d-129 [-1, 256, 14, 14] 512

LeakyReLU-130 [-1, 256, 14, 14] 0

Conv2d-131 [-1, 512, 14, 14] 1,179,648

BatchNorm2d-132 [-1, 512, 14, 14] 1,024

LeakyReLU-133 [-1, 512, 14, 14] 0

WeightedFeatureFusion-134 [-1, 512, 14, 14] 0

Conv2d-135 [-1, 256, 14, 14] 131,072

BatchNorm2d-136 [-1, 256, 14, 14] 512

LeakyReLU-137 [-1, 256, 14, 14] 0

Conv2d-138 [-1, 512, 14, 14] 1,179,648

BatchNorm2d-139 [-1, 512, 14, 14] 1,024

LeakyReLU-140 [-1, 512, 14, 14] 0

WeightedFeatureFusion-141 [-1, 512, 14, 14] 0

Conv2d-142 [-1, 256, 14, 14] 131,072

BatchNorm2d-143 [-1, 256, 14, 14] 512

LeakyReLU-144 [-1, 256, 14, 14] 0

Conv2d-145 [-1, 512, 14, 14] 1,179,648

BatchNorm2d-146 [-1, 512, 14, 14] 1,024

LeakyReLU-147 [-1, 512, 14, 14] 0

WeightedFeatureFusion-148 [-1, 512, 14, 14] 0

Conv2d-149 [-1, 1024, 7, 7] 4,718,592

BatchNorm2d-150 [-1, 1024, 7, 7] 2,048

LeakyReLU-151 [-1, 1024, 7, 7] 0

Conv2d-152 [-1, 512, 7, 7] 524,288

BatchNorm2d-153 [-1, 512, 7, 7] 1,024

LeakyReLU-154 [-1, 512, 7, 7] 0

Conv2d-155 [-1, 1024, 7, 7] 4,718,592

BatchNorm2d-156 [-1, 1024, 7, 7] 2,048

LeakyReLU-157 [-1, 1024, 7, 7] 0

WeightedFeatureFusion-158 [-1, 1024, 7, 7] 0

Conv2d-159 [-1, 512, 7, 7] 524,288

BatchNorm2d-160 [-1, 512, 7, 7] 1,024

LeakyReLU-161 [-1, 512, 7, 7] 0

Conv2d-162 [-1, 1024, 7, 7] 4,718,592

BatchNorm2d-163 [-1, 1024, 7, 7] 2,048

LeakyReLU-164 [-1, 1024, 7, 7] 0

WeightedFeatureFusion-165 [-1, 1024, 7, 7] 0

Conv2d-166 [-1, 512, 7, 7] 524,288

BatchNorm2d-167 [-1, 512, 7, 7] 1,024

LeakyReLU-168 [-1, 512, 7, 7] 0

Conv2d-169 [-1, 1024, 7, 7] 4,718,592

BatchNorm2d-170 [-1, 1024, 7, 7] 2,048

LeakyReLU-171 [-1, 1024, 7, 7] 0

WeightedFeatureFusion-172 [-1, 1024, 7, 7] 0

Conv2d-173 [-1, 512, 7, 7] 524,288

BatchNorm2d-174 [-1, 512, 7, 7] 1,024

LeakyReLU-175 [-1, 512, 7, 7] 0

Conv2d-176 [-1, 1024, 7, 7] 4,718,592

BatchNorm2d-177 [-1, 1024, 7, 7] 2,048

LeakyReLU-178 [-1, 1024, 7, 7] 0

WeightedFeatureFusion-179 [-1, 1024, 7, 7] 0

Conv2d-180 [-1, 512, 7, 7] 524,288

BatchNorm2d-181 [-1, 512, 7, 7] 1,024

LeakyReLU-182 [-1, 512, 7, 7] 0

Conv2d-183 [-1, 1024, 7, 7] 4,718,592

BatchNorm2d-184 [-1, 1024, 7, 7] 2,048

LeakyReLU-185 [-1, 1024, 7, 7] 0

Conv2d-186 [-1, 512, 7, 7] 524,288

BatchNorm2d-187 [-1, 512, 7, 7] 1,024

LeakyReLU-188 [-1, 512, 7, 7] 0

Conv2d-189 [-1, 1024, 7, 7] 4,718,592

BatchNorm2d-190 [-1, 1024, 7, 7] 2,048

LeakyReLU-191 [-1, 1024, 7, 7] 0

Conv2d-192 [-1, 512, 7, 7] 524,288

BatchNorm2d-193 [-1, 512, 7, 7] 1,024

LeakyReLU-194 [-1, 512, 7, 7] 0

Conv2d-195 [-1, 1024, 7, 7] 4,718,592

BatchNorm2d-196 [-1, 1024, 7, 7] 2,048

LeakyReLU-197 [-1, 1024, 7, 7] 0

Conv2d-198 [-1, 45, 7, 7] 46,125

YOLOLayer-199 [-1, 3, 7, 7, 15] 0

FeatureConcat-200 [-1, 512, 7, 7] 0

Conv2d-201 [-1, 256, 7, 7] 131,072

BatchNorm2d-202 [-1, 256, 7, 7] 512

LeakyReLU-203 [-1, 256, 7, 7] 0

Upsample-204 [-1, 256, 14, 14] 0

FeatureConcat-205 [-1, 768, 14, 14] 0

Conv2d-206 [-1, 256, 14, 14] 196,608

BatchNorm2d-207 [-1, 256, 14, 14] 512

LeakyReLU-208 [-1, 256, 14, 14] 0

Conv2d-209 [-1, 512, 14, 14] 1,179,648

BatchNorm2d-210 [-1, 512, 14, 14] 1,024

LeakyReLU-211 [-1, 512, 14, 14] 0

Conv2d-212 [-1, 256, 14, 14] 131,072

BatchNorm2d-213 [-1, 256, 14, 14] 512

LeakyReLU-214 [-1, 256, 14, 14] 0

Conv2d-215 [-1, 512, 14, 14] 1,179,648

BatchNorm2d-216 [-1, 512, 14, 14] 1,024

LeakyReLU-217 [-1, 512, 14, 14] 0

Conv2d-218 [-1, 256, 14, 14] 131,072

BatchNorm2d-219 [-1, 256, 14, 14] 512

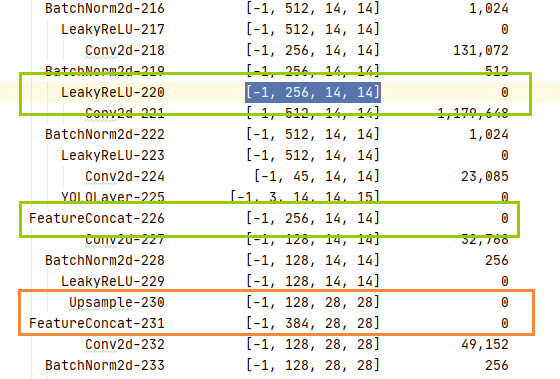

LeakyReLU-220 [-1, 256, 14, 14] 0

Conv2d-221 [-1, 512, 14, 14] 1,179,648

BatchNorm2d-222 [-1, 512, 14, 14] 1,024

LeakyReLU-223 [-1, 512, 14, 14] 0

Conv2d-224 [-1, 45, 14, 14] 23,085

YOLOLayer-225 [-1, 3, 14, 14, 15] 0

FeatureConcat-226 [-1, 256, 14, 14] 0

Conv2d-227 [-1, 128, 14, 14] 32,768

BatchNorm2d-228 [-1, 128, 14, 14] 256

LeakyReLU-229 [-1, 128, 14, 14] 0

Upsample-230 [-1, 128, 28, 28] 0

FeatureConcat-231 [-1, 384, 28, 28] 0

Conv2d-232 [-1, 128, 28, 28] 49,152

BatchNorm2d-233 [-1, 128, 28, 28] 256

LeakyReLU-234 [-1, 128, 28, 28] 0

Conv2d-235 [-1, 256, 28, 28] 294,912

BatchNorm2d-236 [-1, 256, 28, 28] 512

LeakyReLU-237 [-1, 256, 28, 28] 0

Conv2d-238 [-1, 128, 28, 28] 32,768

BatchNorm2d-239 [-1, 128, 28, 28] 256

LeakyReLU-240 [-1, 128, 28, 28] 0

Conv2d-241 [-1, 256, 28, 28] 294,912

BatchNorm2d-242 [-1, 256, 28, 28] 512

LeakyReLU-243 [-1, 256, 28, 28] 0

Conv2d-244 [-1, 128, 28, 28] 32,768

BatchNorm2d-245 [-1, 128, 28, 28] 256

LeakyReLU-246 [-1, 128, 28, 28] 0

Conv2d-247 [-1, 256, 28, 28] 294,912

BatchNorm2d-248 [-1, 256, 28, 28] 512

LeakyReLU-249 [-1, 256, 28, 28] 0

Conv2d-250 [-1, 45, 28, 28] 11,565

YOLOLayer-251 [-1, 3, 28, 28, 15] 0

================================================================

Total params: 61,572,199

Trainable params: 61,572,199

Non-trainable params: 0

----------------------------------------------------------------

Input size (MB): 0.57

Forward/backward pass size (MB): 292.12

Params size (MB): 234.88

Estimated Total Size (MB): 527.58
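The parameter counts in the summary can be reproduced by hand, which is a useful sanity check on how the cfg was translated into modules. The sketch below assumes that convolutions followed by batch normalization have no bias, and that the 1 × 1 convolutions feeding the YOLO layers do have one; both assumptions are inferred from the numbers above rather than read from the code. The helper names are hypothetical.

```python
def conv_params(c_in, c_out, kernel, bias=False):
    """Weights of a kernel x kernel convolution, plus one bias per output channel."""
    return c_in * c_out * kernel * kernel + (c_out if bias else 0)


def bn_params(channels):
    """Learnable scale and shift per channel in batch normalization."""
    return 2 * channels


print(conv_params(3, 32, 3))                 # 864     (Conv2d-1)
print(bn_params(32))                         # 64      (BatchNorm2d-2)
print(conv_params(32, 64, 3))                # 18432   (Conv2d-4)
print(conv_params(64, 32, 1))                # 2048    (Conv2d-7)
print(conv_params(1024, 45, 1, bias=True))   # 46125   (Conv2d-198)
print(conv_params(512, 45, 1, bias=True))    # 23085   (Conv2d-224)
print(conv_params(256, 45, 1, bias=True))    # 11565   (Conv2d-250)
```

The three detection convolutions differ only in their input channels (1024, 512, 256); each outputs the same 45 channels because every scale predicts 3 anchors × 15 values.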

WeightedFeatureFusion corresponds to the shortcut operation.

FeatureConcat corresponds to the route operation.

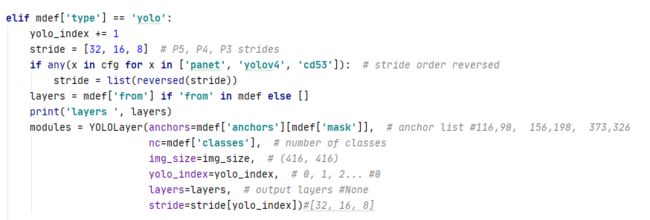

IV. Implementation of the YOLO layer