Recommender Systems: The NCF (Neural Collaborative Filtering) Recommendation Model and a Python Implementation

Introduction:

This article addresses collaborative filtering, the key problem in recommendation algorithms, on the basis of implicit feedback.

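Although some recent work has applied deep learning to recommender systems, it has mainly been used to model auxiliary information, such as textual descriptions of items and acoustic features of music. When it comes to the key factor in collaborative filtering, namely the interaction between user and item features, these methods still resort to matrix factorization and apply an inner product to the latent features of users and items.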

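By replacing the inner product with a neural architecture that can learn an arbitrary function from data, the paper proposes a general framework named NCF (Neural network based Collaborative Filtering). NCF is generic and can express and generalize matrix factorization under its framework. To give NCF modeling non-linearity, we propose using a multi-layer perceptron to learn the user-item interaction function. Extensive experiments on two real-world datasets show that the proposed NCF framework significantly improves on existing methods. Empirical evidence shows that using deeper neural networks yields better recommendation performance.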

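The key to a personalized recommender system is modeling users' preference for items based on their past interactions (e.g., ratings and clicks), which is known as collaborative filtering. Among the various collaborative filtering techniques, matrix factorization (MF) is the most popular: it represents each user or item by a vector of latent features, projecting users and items into a shared latent space. A user's interaction with an item is then modeled as the inner product of their latent vectors.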
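As a rough illustration of the inner-product scoring just described, the sketch below uses NumPy with randomly initialized latent factors. The sizes and the names `P`, `Q`, and `mf_score` are hypothetical and chosen only for this example; they do not come from the implementation later in this article.

```python
import numpy as np

rng = np.random.default_rng(0)
num_users, num_items, num_factors = 4, 5, 3  # hypothetical toy sizes

# Latent factor matrices: one row per user / item, in a shared latent space.
P = rng.normal(size=(num_users, num_factors))
Q = rng.normal(size=(num_items, num_factors))

def mf_score(u, i):
    """Predicted interaction of user u with item i: inner product of latent vectors."""
    return float(P[u] @ Q[i])

# Scores for all user-item pairs at once are the matrix product P Q^T.
scores = P @ Q.T
print(scores.shape, mf_score(1, 2))
```

In a trained MF model, `P` and `Q` would be learned from the interaction data rather than sampled at random.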

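Neural networks have been proven capable of approximating any continuous function, and deep neural networks (DNNs) have recently been found effective in several domains, ranging from computer vision and speech recognition to text processing. However, compared with the vast literature on matrix factorization methods, there is relatively little work on applying DNNs to recommender systems. Although some recently proposed methods have applied DNNs to recommendation tasks and shown notable results, they mostly use DNNs to model auxiliary information, such as textual descriptions of items, acoustic features of music, and visual content of images. When modeling the key collaborative filtering effect, they still resort to MF, combining user and item latent features with an inner product.

This paper addresses these research problems with a neural network modeling approach to collaborative filtering. We focus on implicit feedback, which indirectly reflects users' preferences through behaviors such as watching videos, purchasing products, and clicking items. Compared with explicit feedback (e.g., ratings and reviews), implicit feedback can be tracked automatically and is therefore easier for content providers to collect. However, it is more challenging to use, because user satisfaction is not observed and negative feedback is naturally scarce. The central theme explored in the paper is how to use DNNs to model noisy implicit feedback signals.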

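Contributions of the paper

We present a neural network architecture for modeling the latent features of users and items, and design NCF, a general framework for collaborative filtering based on neural networks.

We show that MF can be interpreted as a specialization of NCF, and use a multi-layer perceptron to endow NCF modeling with a high level of non-linearity.

We perform extensive experiments on two real-world datasets to demonstrate the effectiveness of the NCF approaches and the promise of deep learning for collaborative filtering.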

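Preliminaries

Let M and N denote the number of users and items, respectively. The user-item interaction matrix $\mathbf{Y} \in \mathbb{R}^{M \times N}$ is defined from users' implicit feedback: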

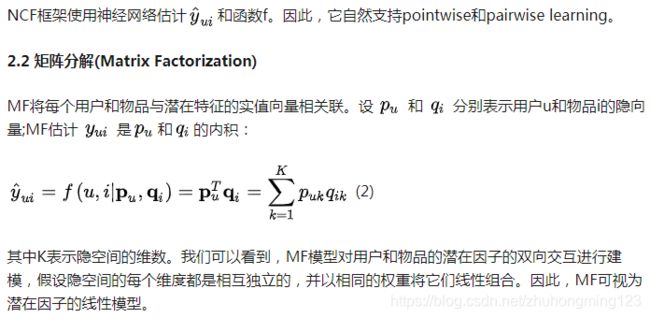
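To make the interaction matrix concrete, here is a minimal sketch that builds a binary matrix from a list of observed (user, item) pairs. It assumes the usual convention for implicit feedback, in which an entry is 1 when an interaction is observed and 0 otherwise; the `interactions` list and the sizes are made up for illustration.

```python
import numpy as np

# Hypothetical observed (user, item) interactions, e.g. clicks or purchases.
interactions = [(0, 1), (0, 3), (1, 0), (2, 1), (2, 2)]
M, N = 3, 4  # number of users and items

Y = np.zeros((M, N), dtype=np.int8)
for u, i in interactions:
    Y[u, i] = 1  # observed interaction

print(Y)
```

Note that a 0 entry only means that no interaction was observed. It does not necessarily mean the user dislikes the item, which is why implicit feedback lacks true negative signals.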

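Figure 1 illustrates how the inner product function can limit the expressiveness of MF. Two settings need to be stated clearly beforehand to understand the example. First, since MF maps users and items to the same latent space, the similarity between two users can also be measured with an inner product or, equivalently, the cosine of the angle between their latent vectors. Second, without loss of generality, we use the Jaccard coefficient as the ground-truth similarity of two users that MF needs to recover.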
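The Jaccard coefficient mentioned above can be computed directly from rows of the interaction matrix. The sketch below uses a small hypothetical matrix for three users (not necessarily the one shown in Figure 1) and the helper name `jaccard` is an assumption of this example.

```python
import numpy as np

def jaccard(a, b):
    """Jaccard coefficient |A ∩ B| / |A ∪ B| of two binary interaction rows."""
    a, b = np.asarray(a, dtype=bool), np.asarray(b, dtype=bool)
    union = np.logical_or(a, b).sum()
    return float(np.logical_and(a, b).sum() / union) if union else 0.0

# Hypothetical interaction rows for three users over five items.
Y = np.array([[1, 1, 1, 0, 1],
              [0, 1, 1, 0, 0],
              [0, 1, 1, 1, 0]])

s12 = jaccard(Y[0], Y[1])  # 2 shared items out of 4 in the union
s13 = jaccard(Y[0], Y[2])  # 2 shared items out of 5 in the union
s23 = jaccard(Y[1], Y[2])  # 2 shared items out of 3 in the union
print(s12, s13, s23)
```

For MF to recover these similarities, the users' latent vectors have to be arranged so that the second and third users are closest, followed by the first and second. With a low-dimensional latent space, adding more users can make such an arrangement impossible, which is the limitation the figure is meant to convey.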

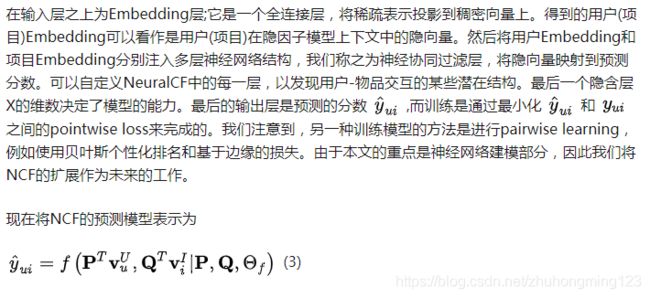

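The NeuralCF Model

We first present the general NCF framework and explain in detail how NCF is learned with a probabilistic model that emphasizes the binary property of implicit data. We then show that MF can be expressed and generalized under NCF. To explore DNNs for collaborative filtering, we propose an instantiation of NCF that uses a multi-layer perceptron (MLP) to learn the user-item interaction function. Finally, we present a new neural matrix factorization model that combines MF and MLP under the NCF framework; it unifies the strengths of the linearity of MF and the non-linearity of MLP in modeling the user-item latent structure, which makes the model more expressive.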

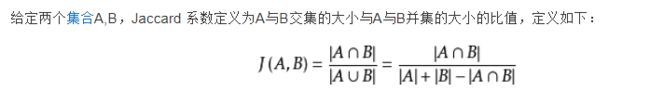

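General Framework

To permit a full neural treatment of collaborative filtering, we adopt a multi-layer representation to model a user-item interaction $y_{ui}$, as shown in Figure 2, where the output of one layer serves as the input of the next. The bottom input layer consists of two feature vectors $\mathbf{v}_u^U$ and $\mathbf{v}_i^I$ that describe user u and item i, respectively; they can be customized to support different kinds of user and item modeling. Since this work focuses on the pure collaborative filtering setting, we use only the identity of a user and of an item as the input feature, transforming it into a binarized sparse vector with one-hot encoding. Note that with such a generic feature representation for the input, the method can easily be adjusted to address the cold-start problem by using content features to represent users and items.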
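The one-hot input described above has a useful consequence: multiplying a one-hot vector by a weight matrix simply selects one row of that matrix, so the first fully connected layer acts as an embedding lookup. The following NumPy sketch illustrates this under assumed toy sizes; the names `P` and `one_hot` are hypothetical.

```python
import numpy as np

num_users, embed_size = 5, 3  # hypothetical sizes
rng = np.random.default_rng(42)
P = rng.normal(size=(num_users, embed_size))  # weights of the embedding layer

def one_hot(index, depth):
    """Binarized sparse vector with a single 1 at position `index`."""
    v = np.zeros(depth)
    v[index] = 1.0
    return v

u = 2
v_u = one_hot(u, num_users)
p_u = v_u @ P  # dense layer without bias or activation

# The result equals row u of P, i.e. the latent vector of user u.
print(np.allclose(p_u, P[u]))
```

This is why many implementations replace the explicit one-hot multiplication with a direct row lookup, which avoids materializing large sparse vectors.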

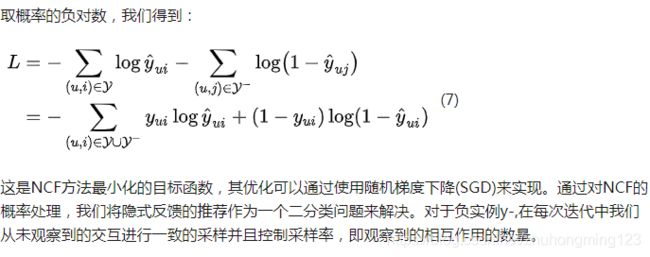

Learning NCF

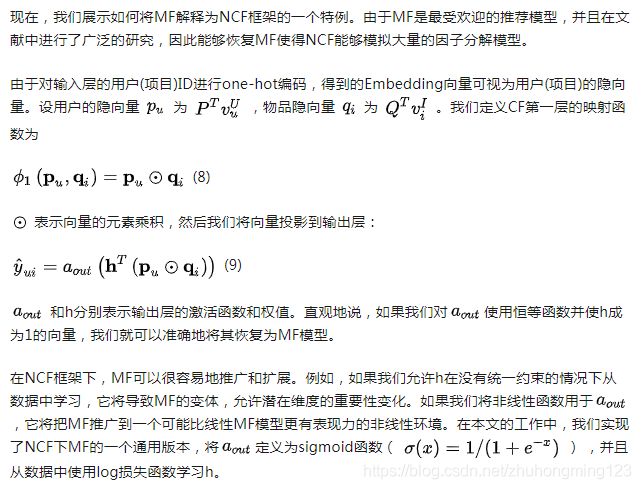
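Because the target is binary (1 for an observed interaction, 0 for a sampled negative), a natural objective is the binary cross-entropy, also called log loss. The sketch below is a plain NumPy version under that assumption; it averages over samples, as the TensorFlow implementation later in this article does with `tf.reduce_mean`. The example predictions are made up.

```python
import numpy as np

def binary_cross_entropy(y_true, y_pred, eps=1e-7):
    """Mean log loss between binary labels and predicted probabilities."""
    y_true = np.asarray(y_true, dtype=float)
    # Clip predictions to avoid log(0).
    y_pred = np.clip(np.asarray(y_pred, dtype=float), eps, 1.0 - eps)
    return float(-np.mean(y_true * np.log(y_pred)
                          + (1.0 - y_true) * np.log(1.0 - y_pred)))

labels = [1, 0, 0, 0, 0]             # one observed item plus four sampled negatives
good = [0.9, 0.1, 0.2, 0.1, 0.1]     # hypothetical predictions that rank the positive first
bad = [0.2, 0.8, 0.7, 0.9, 0.6]      # hypothetical predictions that rank it last

print(binary_cross_entropy(labels, good), binary_cross_entropy(labels, bad))
```

Predictions that assign a high probability to the observed item and low probabilities to the negatives yield the smaller loss.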

Generalized Matrix Factorization (GMF)
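A minimal sketch of the GMF idea, assuming the standard formulation in which the user and item latent vectors are combined by an element-wise product and then passed through a weighted output layer. With uniform output weights and an identity activation, the score reduces to the plain MF inner product. The function name `gmf_score` and the example vectors are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gmf_score(p_u, q_i, h, activation=sigmoid):
    """GMF prediction: activation(h^T (p_u * q_i)), with * the element-wise product."""
    return float(activation(h @ (p_u * q_i)))

p_u = np.array([0.5, -1.0, 2.0])
q_i = np.array([1.0, 0.5, 0.25])

# Special case: h = all ones and identity activation recovers the MF inner product.
mf_like = gmf_score(p_u, q_i, h=np.ones(3), activation=lambda x: x)
print(mf_like, float(p_u @ q_i))

# General case: learned weights h and a sigmoid output give a probability-like score.
print(gmf_score(p_u, q_i, h=np.array([0.3, 1.2, -0.7])))
```

Letting `h` be learned allows the latent dimensions to carry different importance, and the non-linear activation makes the model more expressive than the linear MF model.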

Multi-Layer Perceptron (MLP)

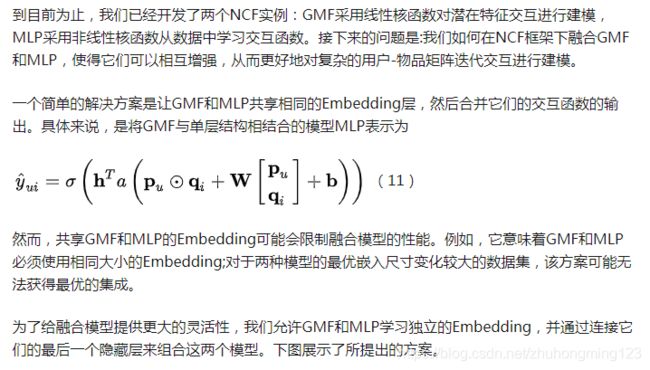

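Since NCF adopts two pathways to model users and items, it is intuitive to combine the features of the two pathways by concatenating them. However, simple vector concatenation does not account for any interaction between user and item latent features, which is insufficient for modeling the collaborative filtering effect. To address this issue, we propose adding hidden layers on the concatenated vector, using a standard MLP to learn the interaction between user and item latent features. In this sense, we can endow the model with a large degree of flexibility and non-linearity in learning these interactions.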

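For the design of the network structure, a common solution is a tower pattern, in which the bottom layer is the widest and each successive layer has fewer neurons (as in Figure 2). The paper implements the tower structure empirically, halving the layer size at each successive layer.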
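The halving rule can be written down in a couple of lines. The helper `tower_layer_sizes` below is a hypothetical name used only for this sketch; it lists hidden-layer widths from the bottom (widest) layer to the top.

```python
def tower_layer_sizes(top_size, num_layers):
    """Hidden layer widths from bottom (widest) to top, halving at each layer."""
    return [top_size * 2 ** k for k in range(num_layers - 1, -1, -1)]

sizes = tower_layer_sizes(top_size=8, num_layers=3)
print(sizes)  # [32, 16, 8]
```

With an embedding size of 16, the implementation later in this article uses the same widths: `embed_size * 2`, `embed_size`, and `embed_size // 2`.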

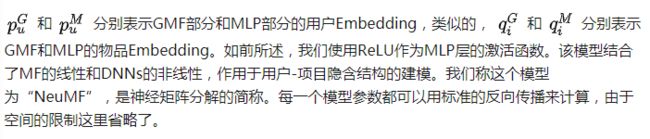

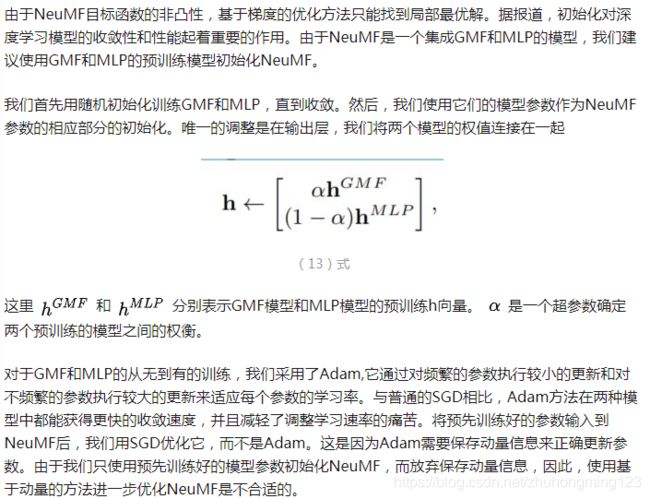

Fusion of GMF and MLP
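The fused model can be sketched as a single forward pass in NumPy, assuming (as in the TensorFlow code later in this article) that GMF and MLP use separate embeddings and that the last layers of the two branches are concatenated before the output layer. All sizes, weights, and the function name `neumf_forward` are hypothetical and randomly initialized here.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def neumf_forward(p_gmf, q_gmf, p_mlp, q_mlp, mlp_layers, h):
    """Fuse a GMF branch and an MLP branch into one prediction."""
    phi_gmf = p_gmf * q_gmf                 # GMF branch: element-wise product
    z = np.concatenate([p_mlp, q_mlp])      # MLP branch: start from the concatenation
    for W, b in mlp_layers:
        z = relu(W @ z + b)                 # hidden layers with ReLU
    fused = np.concatenate([phi_gmf, z])    # join the last layers of both branches
    return float(sigmoid(h @ fused))        # output layer

rng = np.random.default_rng(0)
embed = 4  # hypothetical embedding size for both branches
p_gmf, q_gmf, p_mlp, q_mlp = (rng.normal(size=embed) for _ in range(4))
mlp_layers = [(rng.normal(size=(4, 8)), np.zeros(4)),   # 8 -> 4
              (rng.normal(size=(2, 4)), np.zeros(2))]   # 4 -> 2
h = rng.normal(size=embed + 2)

score = neumf_forward(p_gmf, q_gmf, p_mlp, q_mlp, mlp_layers, h)
print(score)
```

Keeping separate embeddings for the two branches lets each branch choose its own embedding size, at the cost of more parameters.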

Pre-training

Python implementation:

input:

import os

import numpy as np
import pandas as pd
import tensorflow as tf

DATA_DIR = 'data/ratings.dat'   # '::'-separated ratings file
DATA_PATH = 'data/'             # directory for the cached .npy datasets
COLUMN_NAMES = ['user', 'item']

def re_index(s):
    """ Map each element of s to a consecutive integer index starting at 0. """
    i = 0
    s_map = {}
    for key in s:
        s_map[key] = i
        i += 1

    return s_map

def load_data():
    full_data = pd.read_csv(DATA_DIR, sep='::', header=None, names=COLUMN_NAMES,
                            usecols=[0, 1], dtype={0: np.int32, 1: np.int32}, engine='python')

    # Shift user ids down by one (zero-based if the raw ids start at 1).
    full_data.user = full_data['user'] - 1
    user_set = set(full_data['user'].unique())
    item_set = set(full_data['item'].unique())

    user_size = len(user_set)
    item_size = len(item_set)

    # Re-index items to consecutive ids 0 .. item_size - 1.
    item_map = re_index(item_set)
    full_data['item'] = full_data['item'].map(lambda x: item_map[x])
    item_set = set(full_data.item.unique())

    # Items each user has interacted with.
    user_bought = {}
    for i in range(len(full_data)):
        u = full_data['user'][i]
        t = full_data['item'][i]
        if u not in user_bought:
            user_bought[u] = []
        user_bought[u].append(t)

    # Items each user has not interacted with (candidates for negative sampling).
    user_negative = {}
    for key in user_bought:
        user_negative[key] = list(item_set - set(user_bought[key]))

    # Leave-one-out split: the last row of each user goes to the test set.
    # This assumes the rows are grouped by user in ascending user-id order.
    user_length = full_data.groupby('user').size().tolist()
    split_train_test = []
    for i in range(len(user_set)):
        for _ in range(user_length[i] - 1):
            split_train_test.append('train')
        split_train_test.append('test')

    full_data['split'] = split_train_test

    train_data = full_data[full_data['split'] == 'train'].reset_index(drop=True)
    test_data = full_data[full_data['split'] == 'test'].reset_index(drop=True)
    del train_data['split']
    del test_data['split']

    # Every observed training interaction is a positive example (label 1).
    labels = np.ones(len(train_data), dtype=np.int32)

    train_features = train_data
    train_labels = labels.tolist()

    # For testing, the "label" is the id of the held-out item.
    test_features = test_data
    test_labels = test_data['item'].tolist()

    return ((train_features, train_labels),
            (test_features, test_labels),
            (user_size, item_size),
            (user_bought, user_negative))

def add_negative(features, user_negative, labels, numbers, is_training):
    """ Append `numbers` sampled negative items after each positive (user, item) row. """
    feature_user, feature_item, labels_add, feature_dict = [], [], [], {}

    for i in range(len(features)):
        user = features['user'][i]
        item = features['item'][i]
        label = labels[i]

        feature_user.append(user)
        feature_item.append(item)
        labels_add.append(label)

        # Sample items this user has never interacted with.
        neg_samples = np.random.choice(user_negative[user], size=numbers, replace=False).tolist()

        if is_training:
            # Training: negatives get label 0.
            for k in neg_samples:
                feature_user.append(user)
                feature_item.append(k)
                labels_add.append(0)
        else:
            # Testing: the label column stores the item id itself.
            for k in neg_samples:
                feature_user.append(user)
                feature_item.append(k)
                labels_add.append(k)

    feature_dict['user'] = feature_user
    feature_dict['item'] = feature_item

    return feature_dict, labels_add

def dump_data(features, labels, user_negative, num_neg, is_training):
    """ Add negative samples and cache the resulting dataset as a .npy file. """
    if not os.path.exists(DATA_PATH):
        os.makedirs(DATA_PATH)

    features, labels = add_negative(features, user_negative, labels, num_neg, is_training)

    data_dict = dict([('user', features['user']),
                      ('item', features['item']), ('label', labels)])

    # np.save pickles the dict, so it must be loaded with allow_pickle=True.
    if is_training:
        np.save(os.path.join(DATA_PATH, 'train_data.npy'), data_dict)
    else:
        np.save(os.path.join(DATA_PATH, 'test_data.npy'), data_dict)

def train_input_fn(features, labels, batch_size, user_negative, num_neg):
    """ Construct training dataset. """
    data_path = os.path.join(DATA_PATH, 'train_data.npy')
    if not os.path.exists(data_path):
        dump_data(features, labels, user_negative, num_neg, True)

    data = np.load(data_path, allow_pickle=True).item()

    dataset = tf.data.Dataset.from_tensor_slices(data)
    dataset = dataset.shuffle(100000).batch(batch_size)

    return dataset

def eval_input_fn(features, labels, user_negative, test_neg):
    """ Construct testing dataset. """
    data_path = os.path.join(DATA_PATH, 'test_data.npy')
    if not os.path.exists(data_path):
        dump_data(features, labels, user_negative, test_neg, False)

    # The cached file holds a pickled dict, so allow_pickle=True is required.
    data = np.load(data_path, allow_pickle=True).item()
    print("Loading testing data finished!")

    dataset = tf.data.Dataset.from_tensor_slices(data)
    # One batch per user: the held-out item followed by its test_neg negatives.
    dataset = dataset.batch(test_neg + 1)

    return dataset
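The negative-sampling step used by the input pipeline above can be tried in isolation on toy data. The following sketch is a simplified, standalone variant rather than the article's `add_negative` function: the dictionaries, sizes, and the helper `sample_training_rows` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
num_items = 10
user_bought = {0: [1, 4], 1: [2]}  # hypothetical observed interactions

# Items each user has not interacted with.
user_negative = {u: sorted(set(range(num_items)) - set(items))
                 for u, items in user_bought.items()}

def sample_training_rows(user, pos_item, num_neg):
    """Return one positive row followed by num_neg sampled negative rows."""
    negs = rng.choice(user_negative[user], size=num_neg, replace=False)
    rows = [(user, pos_item, 1)]                    # observed interaction, label 1
    rows += [(user, int(k), 0) for k in negs]       # sampled negatives, label 0
    return rows

rows = sample_training_rows(user=0, pos_item=1, num_neg=4)
print(rows)
```

With four negatives per positive, as in the default `negative_num` flag of the main script, each observed interaction contributes five training rows.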

Model

import numpy as np
import tensorflow as tf


class NCF(object):
    def __init__(self, embed_size, user_size, item_size, lr,
                 optim, initializer, loss_func, activation_func,
                 regularizer_rate, iterator, topk, dropout, is_training):
        """
        Important Arguments.

        embed_size: The final embedding size for users and items.
        optim: The optimization method chosen in this model.
        initializer: The initialization method.
        loss_func: Loss function, we choose the cross entropy.
        regularizer_rate: L2 is chosen, this represents the L2 rate.
        iterator: Input dataset.
        topk: For evaluation, computing the topk items.
        """
        self.embed_size = embed_size
        self.user_size = user_size
        self.item_size = item_size
        self.lr = lr
        self.initializer = initializer
        self.loss_func = loss_func
        self.activation_func = activation_func
        self.regularizer_rate = regularizer_rate
        self.optim = optim
        self.topk = topk
        self.dropout = dropout
        self.is_training = is_training
        self.iterator = iterator

    def get_data(self):
        sample = self.iterator.get_next()
        self.user = sample['user']
        self.item = sample['item']
        self.label = tf.cast(sample['label'], tf.float32)

    def inference(self):
        """ Initialize important settings """
        self.regularizer = tf.contrib.layers.l2_regularizer(self.regularizer_rate)

        if self.initializer == 'Normal':
            self.initializer = tf.truncated_normal_initializer(stddev=0.01)
        elif self.initializer == 'Xavier_Normal':
            self.initializer = tf.contrib.layers.xavier_initializer()
        else:
            self.initializer = tf.glorot_uniform_initializer()

        if self.activation_func == 'ReLU':
            self.activation_func = tf.nn.relu
        elif self.activation_func == 'Leaky_ReLU':
            self.activation_func = tf.nn.leaky_relu
        elif self.activation_func == 'ELU':
            self.activation_func = tf.nn.elu

        if self.loss_func == 'cross_entropy':
            # self.loss_func = lambda labels, logits: -tf.reduce_sum(
            #     (labels * tf.log(logits) + (
            #     tf.ones_like(labels, dtype=tf.float32) - labels) *
            #     tf.log(tf.ones_like(logits, dtype=tf.float32) - logits)), 1)
            self.loss_func = tf.nn.sigmoid_cross_entropy_with_logits

        if self.optim == 'SGD':
            self.optim = tf.train.GradientDescentOptimizer(self.lr,
                                                           name='SGD')
        elif self.optim == 'RMSProp':
            self.optim = tf.train.RMSPropOptimizer(self.lr, decay=0.9,
                                                   momentum=0.0, name='RMSProp')
        elif self.optim == 'Adam':
            self.optim = tf.train.AdamOptimizer(self.lr, name='Adam')

    def create_model(self):
        with tf.name_scope('input'):
            self.user_onehot = tf.one_hot(self.user, self.user_size, name='user_onehot')
            self.item_onehot = tf.one_hot(self.item, self.item_size, name='item_onehot')

        # Separate embeddings for the GMF branch and the MLP branch.
        with tf.name_scope('embed'):
            self.user_embed_GMF = tf.layers.dense(inputs=self.user_onehot,
                                                  units=self.embed_size,
                                                  activation=self.activation_func,
                                                  kernel_initializer=self.initializer,
                                                  kernel_regularizer=self.regularizer,
                                                  name='user_embed_GMF')

            self.item_embed_GMF = tf.layers.dense(inputs=self.item_onehot,
                                                  units=self.embed_size,
                                                  activation=self.activation_func,
                                                  kernel_initializer=self.initializer,
                                                  kernel_regularizer=self.regularizer,
                                                  name='item_embed_GMF')

            self.user_embed_MLP = tf.layers.dense(inputs=self.user_onehot,
                                                  units=self.embed_size,
                                                  activation=self.activation_func,
                                                  kernel_initializer=self.initializer,
                                                  kernel_regularizer=self.regularizer,
                                                  name='user_embed_MLP')

            self.item_embed_MLP = tf.layers.dense(inputs=self.item_onehot,
                                                  units=self.embed_size,
                                                  activation=self.activation_func,
                                                  kernel_initializer=self.initializer,
                                                  kernel_regularizer=self.regularizer,
                                                  name='item_embed_MLP')

        # GMF branch: element-wise product of user and item embeddings.
        with tf.name_scope("GMF"):
            self.GMF = tf.multiply(self.user_embed_GMF, self.item_embed_GMF, name='GMF')

        # MLP branch: concatenated embeddings followed by a tower of dense layers.
        with tf.name_scope("MLP"):
            self.interaction = tf.concat([self.user_embed_MLP, self.item_embed_MLP],
                                         axis=-1, name='interaction')

            self.layer1_MLP = tf.layers.dense(inputs=self.interaction,
                                              units=self.embed_size * 2,
                                              activation=self.activation_func,
                                              kernel_initializer=self.initializer,
                                              kernel_regularizer=self.regularizer,
                                              name='layer1_MLP')
            # Note: tf.layers.dropout only drops units when called with training=True;
            # as written, these dropout calls pass their inputs through unchanged.
            self.layer1_MLP = tf.layers.dropout(self.layer1_MLP, rate=self.dropout)

            self.layer2_MLP = tf.layers.dense(inputs=self.layer1_MLP,
                                              units=self.embed_size,
                                              activation=self.activation_func,
                                              kernel_initializer=self.initializer,
                                              kernel_regularizer=self.regularizer,
                                              name='layer2_MLP')
            self.layer2_MLP = tf.layers.dropout(self.layer2_MLP, rate=self.dropout)

            self.layer3_MLP = tf.layers.dense(inputs=self.layer2_MLP,
                                              units=self.embed_size // 2,
                                              activation=self.activation_func,
                                              kernel_initializer=self.initializer,
                                              kernel_regularizer=self.regularizer,
                                              name='layer3_MLP')
            self.layer3_MLP = tf.layers.dropout(self.layer3_MLP, rate=self.dropout)

        # Fuse the two branches and predict a single logit per (user, item) pair.
        with tf.name_scope('concatenation'):
            self.concatenation = tf.concat([self.GMF, self.layer3_MLP], axis=-1, name='concatenation')

            self.logits = tf.layers.dense(inputs=self.concatenation,
                                          units=1,
                                          activation=None,
                                          kernel_initializer=self.initializer,
                                          kernel_regularizer=self.regularizer,
                                          name='predict')

            self.logits_dense = tf.reshape(self.logits, [-1])

        with tf.name_scope("loss"):
            self.loss = tf.reduce_mean(self.loss_func(
                labels=self.label, logits=self.logits_dense, name='loss'))

            # self.loss = tf.reduce_mean(self.loss_func(self.label, self.logits),
            #                            name='loss')

        with tf.name_scope("optimzation"):
            self.optimzer = self.optim.minimize(self.loss)

    def eval(self):
        with tf.name_scope("evaluation"):
            self.item_replica = self.item
            _, self.indice = tf.nn.top_k(tf.sigmoid(self.logits_dense), self.topk)

    def summary(self):
        """ Create summaries to write on tensorboard. """
        self.writer = tf.summary.FileWriter('./graphs/NCF', tf.get_default_graph())
        with tf.name_scope("summaries"):
            tf.summary.scalar('loss', self.loss)
            tf.summary.histogram('histogram loss', self.loss)
            self.summary_op = tf.summary.merge_all()

    def build(self):
        self.get_data()
        self.inference()
        self.create_model()
        self.eval()
        self.summary()
        self.saver = tf.train.Saver(tf.global_variables())

    def step(self, session, step):
        """ Train the model step by step. """
        if self.is_training:
            loss, optim, summaries = session.run(
                [self.loss, self.optimzer, self.summary_op])
            self.writer.add_summary(summaries, global_step=step)
        else:
            # Evaluation: map the top-k positions in the batch back to item ids.
            indice, item = session.run([self.indice, self.item_replica])
            prediction = np.take(item, indice)

            return prediction, item

metrics:

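import numpy as np


def mrr(gt_item, pred_items):
    """ Reciprocal rank of the ground-truth item in the top-k list, 0 if absent. """
    if gt_item in pred_items:
        index = np.where(pred_items == gt_item)[0][0]
        return np.reciprocal(float(index + 1))
    else:
        return 0


def hit(gt_item, pred_items):
    """ 1 if the ground-truth item appears in the top-k list, otherwise 0. """
    if gt_item in pred_items:
        return 1
    return 0


def ndcg(gt_item, pred_items):
    """ NDCG with a single relevant item: 1 / log2(rank + 1), 0 if absent. """
    if gt_item in pred_items:
        index = np.where(pred_items == gt_item)[0][0]
        return np.reciprocal(np.log2(index + 2))
    return 0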
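As a worked example of the three metrics above, the standalone sketch below evaluates one hypothetical top-5 list in which the held-out item sits at rank 3. The item ids are made up, and the computation is written inline rather than calling the functions above so that it runs on its own.

```python
import numpy as np

ranked = np.array([42, 7, 13, 99, 5])  # hypothetical top-5 item ids, best first
gt_item = 13                           # the held-out (ground-truth) item

in_list = gt_item in ranked
rank = int(np.where(ranked == gt_item)[0][0]) + 1  # 1-based rank

hit = 1 if in_list else 0              # hit ratio contribution
rr = 1.0 / rank                        # reciprocal rank
ndcg = 1.0 / np.log2(rank + 1)         # NDCG with a single relevant item

print(hit, rr, ndcg)
```

Here the hit is 1, the reciprocal rank is 1/3, and the NDCG is 1/log2(4) = 0.5. Averaging these values over all test users gives the HR, MRR, and NDCG figures reported during evaluation.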

main

import os, sys, time

import numpy as np
import tensorflow as tf

import recommend.ncf.NCF_input as NCF_input
import recommend.ncf.NCF as NCF
import recommend.ncf.metrics as metrics

FLAGS = tf.app.flags.FLAGS

tf.app.flags.DEFINE_integer('batch_size', 128, 'size of mini-batch.')
tf.app.flags.DEFINE_integer('negative_num', 4, 'number of negative samples.')
tf.app.flags.DEFINE_integer('test_neg', 99, 'number of negative samples for test.')
tf.app.flags.DEFINE_integer('embedding_size', 16, 'the size for embedding user and item.')
tf.app.flags.DEFINE_integer('epochs', 20, 'the number of epochs.')
tf.app.flags.DEFINE_integer('topK', 10, 'topk for evaluation.')
tf.app.flags.DEFINE_string('optim', 'Adam', 'the optimization method.')
tf.app.flags.DEFINE_string('initializer', 'Xavier', 'the initializer method.')
tf.app.flags.DEFINE_string('loss_func', 'cross_entropy', 'the loss function.')
tf.app.flags.DEFINE_string('activation', 'ReLU', 'the activation function.')
tf.app.flags.DEFINE_string('model_dir', 'model/', 'the dir for saving model.')
tf.app.flags.DEFINE_float('regularizer', 0.0, 'the regularizer rate.')
tf.app.flags.DEFINE_float('lr', 0.001, 'learning rate.')
tf.app.flags.DEFINE_float('dropout', 0.0, 'dropout rate.')

def train(train_data, test_data, user_size, item_size):
    with tf.Session() as sess:
        iterator = tf.data.Iterator.from_structure(train_data.output_types,
                                                   train_data.output_shapes)

        model = NCF.NCF(FLAGS.embedding_size, user_size, item_size, FLAGS.lr,
                        FLAGS.optim, FLAGS.initializer, FLAGS.loss_func, FLAGS.activation,
                        FLAGS.regularizer, iterator, FLAGS.topK, FLAGS.dropout, is_training=True)

        model.build()

        ckpt = tf.train.get_checkpoint_state(FLAGS.model_dir)
        if ckpt:
            print("Reading model parameters from %s" % ckpt.model_checkpoint_path)
            model.saver.restore(sess, ckpt.model_checkpoint_path)
        else:
            print("Creating model with fresh parameters.")
            sess.run(tf.global_variables_initializer())

        count = 0
        for epoch in range(FLAGS.epochs):
            # Training pass over the whole training set.
            sess.run(model.iterator.make_initializer(train_data))
            model.is_training = True
            model.get_data()
            start_time = time.time()

            try:
                while True:
                    model.step(sess, count)
                    count += 1
            except tf.errors.OutOfRangeError:
                print("Epoch %d training " % epoch + "Took: " + time.strftime("%H: %M: %S",
                                                                              time.gmtime(time.time() - start_time)))

            # Evaluation pass: one batch per user (held-out item plus sampled negatives).
            sess.run(model.iterator.make_initializer(test_data))
            model.is_training = False
            model.get_data()
            start_time = time.time()
            HR, MRR, NDCG = [], [], []

            try:
                while True:
                    prediction, label = model.step(sess, None)

                    # The first item of each test batch is the held-out ground truth.
                    label = int(label[0])
                    HR.append(metrics.hit(label, prediction))
                    MRR.append(metrics.mrr(label, prediction))
                    NDCG.append(metrics.ndcg(label, prediction))
            except tf.errors.OutOfRangeError:
                hr = np.array(HR).mean()
                mrr = np.array(MRR).mean()
                ndcg = np.array(NDCG).mean()
                print("Epoch %d testing " % epoch + "Took: " + time.strftime("%H: %M: %S",
                                                                             time.gmtime(time.time() - start_time)))
                print("HR is %.3f, MRR is %.3f, NDCG is %.3f" % (hr, mrr, ndcg))

        ################################## SAVE MODEL ################################
        checkpoint_path = os.path.join(FLAGS.model_dir, "NCF.ckpt")
        model.saver.save(sess, checkpoint_path)

def main():
    ((train_features, train_labels),
     (test_features, test_labels),
     (user_size, item_size),
     (user_bought, user_negative)) = NCF_input.load_data()

    print(train_features[:10])

    train_data = NCF_input.train_input_fn(train_features, train_labels, FLAGS.batch_size, user_negative,
                                          FLAGS.negative_num)
    # print(train_data)

    test_data = NCF_input.eval_input_fn(test_features, test_labels,
                                        user_negative, FLAGS.test_neg)

    train(train_data, test_data, user_size, item_size)


if __name__ == '__main__':
    main()