[cs231n Study Notes (2017)] Course Assignment 1 and Extensions (KNN)

Building the Models

L1 Model

Code implementation:

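import numpy as np


class KNN_L1:
    """Nearest-neighbor classifier using the L1 (Manhattan) distance."""

    def __init__(self):
        pass

    def train(self, X, y):
        # KNN "training" only memorizes the data.
        self.X_train = X
        self.y_train = y

    def predict(self, x):
        num_test = x.shape[0]
        y_pred = np.zeros(num_test, dtype=self.y_train.dtype)
        for i in range(num_test):
            # L1 distance from test sample i to every training sample.
            distances = np.sum(np.abs(self.X_train - x[i, :]), axis=1)
            # Take the label of the single nearest neighbor (k = 1).
            min_index = np.argmin(distances)
            y_pred[i] = self.y_train[min_index]
        return y_pred

Save this model as cs231n_KNN_L1.py.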
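To make the L1 distance concrete, here is a minimal sketch on made-up toy data (the arrays are illustrative, not CIFAR-10). It mirrors the broadcasting step inside predict: one test row is subtracted from the whole training matrix, and the absolute differences are summed per row.

```python
import numpy as np

# Toy data (hypothetical): three training points with labels, one test point.
X_train = np.array([[0.0, 0.0], [5.0, 5.0], [10.0, 0.0]])
y_train = np.array([0, 1, 2])
x = np.array([4.0, 3.0])

# Broadcasting subtracts x from every row; axis=1 sums over the features.
distances = np.sum(np.abs(X_train - x), axis=1)
nearest = np.argmin(distances)
prediction = y_train[nearest]

print(distances.tolist())  # [7.0, 3.0, 9.0]
print(prediction)          # 1
```

The second training point is closest (distance 3), so its label is returned.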

L2 Model

Code implementation:

import numpy as np


class KNN_L2:
    """k-nearest-neighbor classifier using the L2 (Euclidean) distance."""

    def __init__(self):
        pass

    def train(self, X, y):
        self.X_train = X
        self.y_train = y

    def predict(self, X, k=1, num_loops=0):
        # num_loops selects which distance implementation to use.
        if num_loops == 0:
            dists = self.compute_distances_no_loops(X)
        elif num_loops == 1:
            dists = self.compute_distances_one_loop(X)
        elif num_loops == 2:
            dists = self.compute_distances_two_loops(X)
        else:
            raise ValueError('Invalid value %d for num_loops' % num_loops)
        return self.predict_labels(dists, k=k)

    # Two nested loops
    def compute_distances_two_loops(self, X):
        num_test = X.shape[0]
        num_train = self.X_train.shape[0]
        dists = np.zeros((num_test, num_train))
        for i in range(num_test):
            for j in range(num_train):
                dists[i, j] = np.sqrt(np.sum((X[i, :] - self.X_train[j, :]) ** 2))
        return dists

    # One loop
    def compute_distances_one_loop(self, X):
        num_test = X.shape[0]
        num_train = self.X_train.shape[0]
        dists = np.zeros((num_test, num_train))
        for i in range(num_test):
            dists[i, :] = np.sqrt(np.sum(np.square(self.X_train - X[i, :]), axis=1))
        return dists

    # No loops
    def compute_distances_no_loops(self, X):
        # Uses ||a - b||^2 = ||a||^2 - 2 a.b + ||b||^2.
        test_sum = np.sum(np.square(X), axis=1)
        train_sum = np.sum(np.square(self.X_train), axis=1)
        inner_product = np.dot(X, self.X_train.T)
        sq_dists = -2 * inner_product + test_sum.reshape(-1, 1) + train_sum
        # Rounding error can push tiny values below zero, so clamp before the root.
        dists = np.sqrt(np.maximum(sq_dists, 0))
        return dists

    def predict_labels(self, dists, k=1):
        num_test = dists.shape[0]
        y_pred = np.zeros(num_test, dtype=self.y_train.dtype)
        for i in range(num_test):
            # Training indices sorted by distance to test sample i.
            y_indices = np.argsort(dists[i, :])
            closest_y = self.y_train[y_indices[:k]]
            # Majority vote; np.argmax breaks ties in favor of the smaller label.
            y_pred[i] = np.argmax(np.bincount(closest_y))
        return y_pred

Save this file as cs231n_KNN_L2.py.
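The no-loop method depends on the expansion ||a - b||^2 = ||a||^2 - 2 a.b + ||b||^2, which turns all pairwise distances into one matrix product plus two broadcast sums. The sketch below checks, on random toy data, that this formula agrees with a direct double loop. The function names l2_loops and l2_vectorized are hypothetical stand-ins for the class methods, and the np.maximum clamp is a precaution added in this sketch against small negative values from rounding.

```python
import numpy as np

def l2_loops(X, X_train):
    """Reference implementation: one distance at a time."""
    dists = np.zeros((X.shape[0], X_train.shape[0]))
    for i in range(X.shape[0]):
        for j in range(X_train.shape[0]):
            dists[i, j] = np.sqrt(np.sum((X[i] - X_train[j]) ** 2))
    return dists

def l2_vectorized(X, X_train):
    """Same distances via ||a||^2 - 2 a.b + ||b||^2 and broadcasting."""
    test_sq = np.sum(X ** 2, axis=1).reshape(-1, 1)  # shape (num_test, 1)
    train_sq = np.sum(X_train ** 2, axis=1)          # shape (num_train,)
    sq = test_sq - 2 * X.dot(X_train.T) + train_sq   # shape (num_test, num_train)
    return np.sqrt(np.maximum(sq, 0))                # clamp tiny negatives

# Random toy data (not CIFAR-10): 20 training rows, 5 test rows, 8 features.
rng = np.random.default_rng(0)
X_train = rng.normal(size=(20, 8))
X = rng.normal(size=(5, 8))

max_diff = np.max(np.abs(l2_loops(X, X_train) - l2_vectorized(X, X_train)))
print('largest difference between the two versions:', max_diff)
```

The difference should be at the level of floating-point rounding, which is why the three methods in the class can be used interchangeably.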

That completes the model definitions.

Loading the Data

Download link

CIFAR-10 is read with pickle, the module described on the official site.

import os
import pickle

import numpy as np


def load_cifar_batch(filename):
    """Load a single CIFAR-10 batch file."""
    with open(filename, 'rb') as f:
        datadict = pickle.load(f, encoding='bytes')
    x = datadict[b'data']
    y = datadict[b'labels']
    # Each row holds 3072 values (3 channels x 32 x 32); convert to N x 32 x 32 x 3.
    x = x.reshape(10000, 3, 32, 32).transpose(0, 2, 3, 1).astype('float')
    y = np.array(y)
    return x, y


# Convert the images into arrays
def load_cifar10(root):
    """Load the five training batches and the test batch."""
    xs = []
    ys = []
    for b in range(1, 6):
        f = os.path.join(root, 'data_batch_%d' % (b,))
        x, y = load_cifar_batch(f)
        xs.append(x)
        ys.append(y)
    Xtrain = np.concatenate(xs)
    Ytrain = np.concatenate(ys)
    del x, y
    Xtest, Ytest = load_cifar_batch(os.path.join(root, 'test_batch'))
    return Xtrain, Ytrain, Xtest, Ytest

Save this file as cs231n_data_utils.py.
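The reshape and transpose in load_cifar_batch are easy to misread, so here is a small sketch on a fake batch (the values are just a running counter, not real pixels). In the CIFAR-10 Python format each row stores the 1024 red values first, then 1024 green, then 1024 blue, each plane in row-major order. Reshaping to (N, 3, 32, 32) recovers the planes, and the transpose moves the channel axis last, which is the layout image display functions expect.

```python
import numpy as np

# A fake "batch" of 2 images with 3072 values each, in CIFAR-10 row layout.
num_images = 2
flat = np.arange(num_images * 3072).reshape(num_images, 3072)

# (N, 3072) -> (N, 3, 32, 32) -> (N, 32, 32, 3)
images = flat.reshape(num_images, 3, 32, 32).transpose(0, 2, 3, 1)
print(images.shape)  # (2, 32, 32, 3)

# Top-left pixel of image 0: the first value of each channel plane.
print(images[0, 0, 0].tolist())  # [0, 1024, 2048]

# Flattening again for KNN gives one row vector per image.
rows = images.reshape(num_images, -1)
print(rows.shape)  # (2, 3072)
```

Note that the flattened rows are in channel-last order, so they are not identical to the original file rows. That does not matter for L1 or L2 distance, as long as the training and test sets are flattened the same way.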

Training and Prediction

Load the dataset for the models:

import numpy as np
from cs231n_data_utils import load_cifar10

cifar10_path = 'C://Users//Nicht_sehen//Desktop//assignment1//cs231n//datasets//cifar-10-batches-py'
x_train, y_train, x_test, y_test = load_cifar10(cifar10_path)

To get a rough feel for the data, let's look at the size of the dataset:

print('training data shape:', x_train.shape)
print('training labels shape:', y_train.shape)
print('test data shape:', x_test.shape)
print('test labels shape:', y_test.shape)

The output is:

training data shape: (50000, 32, 32, 3)

training labels shape: (50000,)

test data shape: (10000, 32, 32, 3)

test labels shape: (10000,)

The dataset contains 50000 training images and 10000 test images. Here we keep the first 10000 training images and the first 1000 test images.

num_training=10000

mask=range(num_training)

x_train=x_train[mask]

y_train=y_train[mask]

num_test=1000

mask=range(num_test)

x_test=x_test[mask]

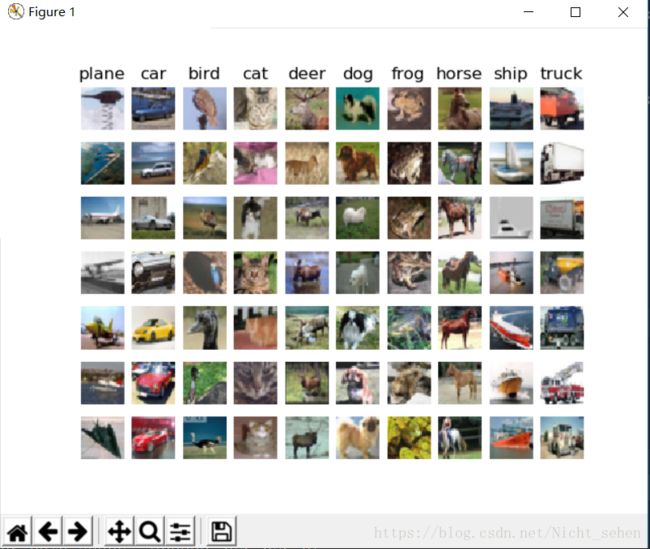

y_test=y_test[mask] 挑选几张图片看看:

classes=['plane','car','bird','cat','deer','dog','frog','horse','ship','truck']

num_claesses=len(classes)

samples_per_class=7

for y ,cls in enumerate(classes):

idxs=np.flatnonzero(y_train==y)

idxs=np.random.choice(idxs,samples_per_class,replace=False)

for i ,idx in enumerate(idxs):

plt_idx=i*num_claesses+y+1

plt.subplot(samples_per_class,num_claesses,plt_idx)

plt.imshow(x_train[idx].astype('uint8'))

plt.axis('off')

if i ==0:

plt.title(cls)

plt.show() x_train=np.reshape(x_train,(x_train.shape[0],-1))

x_test=np.reshape(x_test,(x_test.shape[0],-1))

print(x_train.shape,x_test.shape) 利用L1模型的完整代码

import numpy as np
from cs231n_data_utils import load_cifar10
from cs231n_KNN_L1 import KNN_L1

# Load the data
cifar10_path = 'C://Users//Nicht_sehen//Desktop//assignment1//cs231n//datasets//cifar-10-batches-py'
x_train, y_train, x_test, y_test = load_cifar10(cifar10_path)

# Keep 10000 training images and 1000 test images
num_training = 10000
mask = range(num_training)
x_train = x_train[mask]
y_train = y_train[mask]
num_test = 1000
mask = range(num_test)
x_test = x_test[mask]
y_test = y_test[mask]

# Flatten each image into a row vector
x_train = np.reshape(x_train, (x_train.shape[0], -1))
x_test = np.reshape(x_test, (x_test.shape[0], -1))
print(x_train.shape, x_test.shape)

# Create the classifier and store the training data
classifier = KNN_L1()
classifier.train(x_train, y_train)

# Predict on the test set
y_test_pred = classifier.predict(x_test)

# Evaluate the model
num_correct = np.sum(y_test_pred == y_test)
accuracy = float(num_correct) / num_test
print('got %d / %d correct => accuracy: %f' % (num_correct, num_test, accuracy))

The output is:

(10000, 3072) (1000, 3072)

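got 316 / 1000 correct => accuracy: 0.316000

Using the L2 Model

The L2 model needs a value for k, which is chosen here by cross-validation.

Split the training set evenly into 5 folds. For each value of k, hold out one fold for validation, train on the other four, and compute the accuracy.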
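The fold bookkeeping is the part of cross-validation that is easiest to get wrong, so here is a minimal sketch of just the splitting step on toy arrays (10 made-up samples, not CIFAR-10). It uses np.array_split to cut the data into folds, then rebuilds the training set for each round from every fold except the held-out one.

```python
import numpy as np

# Toy stand-ins (hypothetical): 10 samples with 2 features each.
x = np.arange(20).reshape(10, 2)
y = np.arange(10)
num_folds = 5

# np.array_split returns a list of num_folds arrays.
x_folds = np.array_split(x, num_folds)
y_folds = np.array_split(y, num_folds)

for i in range(num_folds):
    # Fold i is held out; the other folds are joined into the training set.
    x_val, y_val = x_folds[i], y_folds[i]
    x_tr = np.concatenate(x_folds[:i] + x_folds[i + 1:])
    y_tr = np.concatenate(y_folds[:i] + y_folds[i + 1:])
    print('fold', i, 'validation:', y_val.tolist(), 'training:', y_tr.tolist())
```

Every sample lands in the validation set exactly once, so each value of k is scored on all of the training data without ever being tested on a sample it was trained on.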

num_folds = 5
k_choices = [1, 3, 5, 8, 10, 12, 15, 20, 50, 100]

# Reshape the labels to a column so they can be split and stacked like the data.
y_train = y_train.reshape(-1, 1)
x_train_folds = np.array_split(x_train, num_folds)
y_train_folds = np.array_split(y_train, num_folds)

k_to_accuracies = {}
for k in k_choices:
    k_to_accuracies.setdefault(k, [])

for i in range(num_folds):
    classifier = KNN_L2()
    # Train on every fold except fold i.
    x_val_train = np.vstack(x_train_folds[0:i] + x_train_folds[i + 1:])
    y_val_train = np.vstack(y_train_folds[0:i] + y_train_folds[i + 1:])
    y_val_train = y_val_train[:, 0]
    classifier.train(x_val_train, y_val_train)
    # Compute the distances once per fold and reuse them for every k.
    dists = classifier.compute_distances_no_loops(x_train_folds[i])
    for k in k_choices:
        y_val_pred = classifier.predict_labels(dists, k=k)
        num_correct = np.sum(y_val_pred == y_train_folds[i][:, 0])
        accuracy = float(num_correct) / len(y_val_pred)
        k_to_accuracies[k] = k_to_accuracies[k] + [accuracy]

for k in sorted(k_to_accuracies):
    sum_accuracy = 0
    for accuracy in k_to_accuracies[k]:
        print('k=%d, accuracy=%f' % (k, accuracy))
        sum_accuracy += accuracy
    print('the average accuracy is :%f' % (sum_accuracy / num_folds))

The output is:

k=1, accuracy=0.288500

k=1, accuracy=0.284000

k=1, accuracy=0.282500

k=1, accuracy=0.274500

k=1, accuracy=0.277000

the average accuracy is :0.281300

k=3, accuracy=0.287500

k=3, accuracy=0.274000

k=3, accuracy=0.278500

k=3, accuracy=0.267500

k=3, accuracy=0.265500

the average accuracy is :0.274600

k=5, accuracy=0.294500

k=5, accuracy=0.284000

k=5, accuracy=0.297500

k=5, accuracy=0.275000

k=5, accuracy=0.278500

the average accuracy is :0.285900

k=8, accuracy=0.298500

k=8, accuracy=0.296000

k=8, accuracy=0.284000

k=8, accuracy=0.274000

k=8, accuracy=0.286000

the average accuracy is :0.287700

k=10, accuracy=0.302500

k=10, accuracy=0.287000

k=10, accuracy=0.284000

k=10, accuracy=0.266000

k=10, accuracy=0.285000

the average accuracy is :0.284900

k=12, accuracy=0.304500

k=12, accuracy=0.293500

k=12, accuracy=0.285500

k=12, accuracy=0.265000

k=12, accuracy=0.275500

the average accuracy is :0.284800

k=15, accuracy=0.294000

k=15, accuracy=0.297000

k=15, accuracy=0.275000

k=15, accuracy=0.274000

k=15, accuracy=0.276000

the average accuracy is :0.283200

k=20, accuracy=0.294500

k=20, accuracy=0.299000

k=20, accuracy=0.284000

k=20, accuracy=0.272000

k=20, accuracy=0.279500

the average accuracy is :0.285800

k=50, accuracy=0.273500

k=50, accuracy=0.289000

k=50, accuracy=0.284000

k=50, accuracy=0.256000

k=50, accuracy=0.262000

the average accuracy is :0.272900

k=100, accuracy=0.267000

k=100, accuracy=0.269500

k=100, accuracy=0.269000

k=100, accuracy=0.246500

k=100, accuracy=0.257500

the average accuracy is :0.261900

The highest average accuracy (0.287700) is at k=8, so we use k=8.
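Picking k by eye from a long printout is error-prone, so the selection can also be done in code. The sketch below copies the ten average accuracies from the output above into a dictionary (the name avg_accuracy is introduced here for illustration) and takes the key with the largest value.

```python
# Average accuracies per k, copied from the cross-validation output.
avg_accuracy = {
    1: 0.281300, 3: 0.274600, 5: 0.285900, 8: 0.287700, 10: 0.284900,
    12: 0.284800, 15: 0.283200, 20: 0.285800, 50: 0.272900, 100: 0.261900,
}

# The best k is the key whose average accuracy is largest.
best_k = max(avg_accuracy, key=avg_accuracy.get)
print(best_k, avg_accuracy[best_k])

# Ranking all candidates shows how close the top few are.
ranked = sorted(avg_accuracy, key=avg_accuracy.get, reverse=True)
print(ranked[:3])
```

The margin of k=8 over k=5 and k=20 is under 0.002, which is smaller than the spread between folds for a single k, so the choice is not sharply determined by this data.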

The complete code for the L2 model is as follows:

import numpy as np
from cs231n_data_utils import load_cifar10
from cs231n_KNN_L2 import KNN_L2

# Load the data
cifar10_path = 'C://Users//Nicht_sehen//Desktop//assignment1//cs231n//datasets//cifar-10-batches-py'
x_train, y_train, x_test, y_test = load_cifar10(cifar10_path)

# Keep 10000 training images and 1000 test images
num_training = 10000
mask = range(num_training)
x_train = x_train[mask]
y_train = y_train[mask]
num_test = 1000
mask = range(num_test)
x_test = x_test[mask]
y_test = y_test[mask]

# Flatten each image into a row vector
x_train = np.reshape(x_train, (x_train.shape[0], -1))
x_test = np.reshape(x_test, (x_test.shape[0], -1))
print(x_train.shape, x_test.shape)

# Compute the Euclidean distances
# (compute_distances_no_loops returns the same distances much faster)
classifier = KNN_L2()
classifier.train(x_train, y_train)
dists = classifier.compute_distances_two_loops(x_test)

# Predict on the test set with k=8
y_test_pred = classifier.predict_labels(dists, k=8)

# Evaluate the model
num_correct = np.sum(y_test_pred == y_test)
accuracy = float(num_correct) / num_test
print('got %d / %d correct => accuracy: %f' % (num_correct, num_test, accuracy))

The output is:

(10000, 3072) (1000, 3072)

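got 276 / 1000 correct => accuracy: 0.276000

On this subset the L1 model (nearest neighbor, k=1) scores slightly higher: 0.316 versus 0.276 for the L2 model with k=8.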
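The two runs differ in both the distance metric and the value of k, so the gap cannot be attributed to the metric alone. One way to separate the two effects is a single function that takes both as parameters. The sketch below is a hypothetical helper (knn_predict is not part of the files above) run on made-up, well-separated toy clusters, so its accuracies say nothing about CIFAR-10; it only shows the shape of a like-for-like comparison.

```python
import numpy as np

def knn_predict(X_train, y_train, X_test, k=1, metric='l2'):
    """Hypothetical helper: KNN with a selectable distance metric."""
    y_pred = np.zeros(X_test.shape[0], dtype=y_train.dtype)
    for i in range(X_test.shape[0]):
        diff = X_train - X_test[i]
        if metric == 'l1':
            dists = np.sum(np.abs(diff), axis=1)
        elif metric == 'l2':
            dists = np.sqrt(np.sum(diff ** 2, axis=1))
        else:
            raise ValueError('unknown metric: %s' % metric)
        # Majority vote among the k nearest training labels.
        nearest = np.argsort(dists)[:k]
        y_pred[i] = np.argmax(np.bincount(y_train[nearest]))
    return y_pred

# Toy data (made up): two clusters centered at 0 and 6 in 4 dimensions.
rng = np.random.default_rng(0)
X_train = np.vstack([rng.normal(0, 1, (30, 4)), rng.normal(6, 1, (30, 4))])
y_train = np.array([0] * 30 + [1] * 30)
X_test = np.vstack([rng.normal(0, 1, (10, 4)), rng.normal(6, 1, (10, 4))])
y_test = np.array([0] * 10 + [1] * 10)

# Same data, same k values, both metrics.
results = {}
for metric in ('l1', 'l2'):
    for k in (1, 8):
        y_pred = knn_predict(X_train, y_train, X_test, k=k, metric=metric)
        results[(metric, k)] = float(np.mean(y_pred == y_test))
        print(metric, k, results[(metric, k)])
```

Running the same grid on the flattened CIFAR-10 arrays would show whether L1 still leads once both models use the same k.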