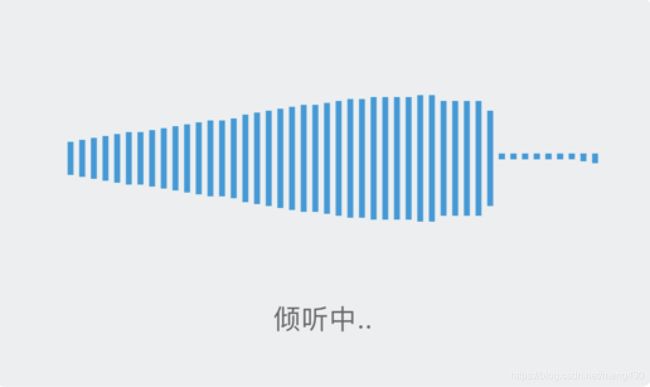

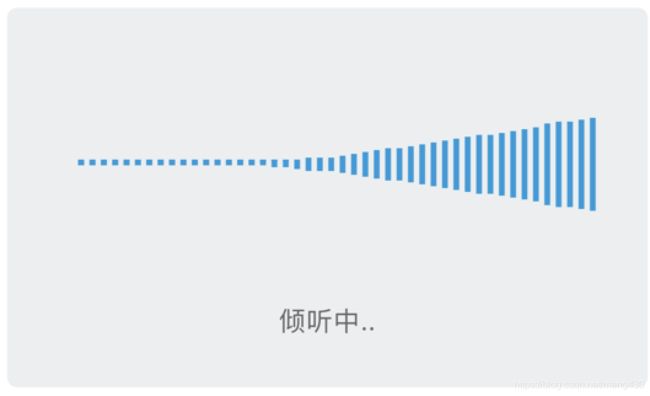

A fun voice waveform view

import UIKit

import AVFoundation

class VoiceWaveView: UIView {

var audioRecorder:AVAudioRecorder!

lazy var audioRecorderDict = Dictionary<String, Any>()

var filePath = ""

var audioRecorderTimer : Timer!

let path1 = UIBezierPath()

let layer1 = CAShapeLayer()

let path2 = UIBezierPath()

let layer2 = CAShapeLayer()

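/// Waveform update interval, in seconds

private let updateFequency = 0.05

/// Array of sound level readings

private var soundMeters = [Float]()

/// Capacity of the sound level array

private let soundMeterCount = Int((ScreenWidth - 76 - 60)/6)

/// Elapsed recording time

private var recordTime = 0.00

var tip:UILabel! // "Listening" hint label

var timer_remove:Timer! // removes the view after 3 seconds without voice input

var timeSecond = 0 // seconds counted toward the 3-second limit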

override init(frame: CGRect) {

super.init(frame: frame)

}

init(frame: CGRect,froLan:String,Tolan:String) {

super.init(frame: frame)

self.timer_remove = Timer.scheduledTimer(withTimeInterval: 1.0, repeats: true) { [weak self] _ in

// Count seconds of silence; the view removes itself after 3

self?.timerReduce()

}

self.backgroundColor = UIColor.init(hexString: "EBEEF0") // background color

self.layer.cornerRadius = 5

self.layer.masksToBounds = true

// Refresh the curve in step with the display refresh rate

let display = CADisplayLink(target: self, selector: #selector(drawWaveLine))

display.add(to: RunLoop.current, forMode: RunLoop.Mode.common)

layer1.strokeColor = ThemeColor?.cgColor

layer1.fillColor = UIColor.clear.cgColor

layer1.lineWidth = 3

layer2.strokeColor = ThemeColor?.cgColor

layer2.fillColor = UIColor.clear.cgColor

layer2.lineWidth = 3

self.layer.addSublayer(layer1)

self.layer.addSublayer(layer2)

// Observe meter updates and draw the waveform

NotificationCenter.default.addObserver(self, selector: #selector(updateView(notice:)), name: NSNotification.Name.init("updateMeters"), object: nil)

// Start recording

goRecoder()

// Hint label

tip = UILabel.init(frame: CGRect.init(x: 0, y: 150, width: ScreenWidth - 76, height: 30))

tip.text = "Listening.."

tip.textAlignment = .center

tip.textColor = UIColor.init(hexString: "666666")

tip.font = getFont(14)

self.addSubview(tip)

}

func timerReduce()

{

timeSecond = timeSecond + 1

if timeSecond == 3 {

self.removeSelf()

}

}

func removeSelf(){

killtime()

self.timer_remove.invalidate()

Haw.stopDictating()

UIView.animate(withDuration: 0.3, animations: {

self.alpha = 0

}) { (over: Bool) in

if over{

self.removeFromSuperview()

}

}

}

@objc private func updateView(notice: Notification) {

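// Ignore notifications that do not carry a [Float] payload instead of crashing

guard let meters = notice.object as? [Float] else { return }

soundMeters = meters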

path1.removeAllPoints()

path2.removeAllPoints()

if soundMeters.count > 0 {

for i in soundMeters.indices.reversed() {

let item = soundMeters[i]

let index = soundMeters.count - i

// Bar height derived from the current meter reading (dB).

// averagePower(forChannel:) reports dBFS (normally -160...0); readings below -46 are treated as silence.

var barHeight = 0.0

if item >= -46.0 {

barHeight = min(Double(item) + 46.0, 55.0)

timeSecond = 0 // voice detected, so restart the 3-second auto-remove countdown

}

path1.move(to: CGPoint(x: index * 6 + 3 + 30, y: 80))

path1.addLine(to: CGPoint(x: index * 6 + 3 + 30, y: Int(83 - barHeight)))

path2.move(to: CGPoint(x: index * 6 + 3 + 30, y: 80))

path2.addLine(to: CGPoint(x: index * 6 + 3 + 30, y: Int( barHeight + 83)))

}

layer1.path = path1.cgPath

layer2.path = path2.cgPath

}

}

@objc func drawWaveLine() {

path1.removeAllPoints()

path1.move(to: CGPoint(x: 10, y: self.bounds.height / 2))

}

func goRecoder()

{

self.filePath = SYAudioGetFilePathWithDate()

audioRecorderStartWithFilePath(filepatch: filePath)

}

func timerStart()

{

self.audioRecorderTimer = Timer.scheduledTimer(timeInterval: updateFequency, target: self, selector: #selector(self.device), userInfo: nil, repeats: true)

}

@objc func device()

{

self.audioRecorder.updateMeters()

recordTime += updateFequency

// Read the current sound level

addSoundMeter(item: audioRecorder.averagePower(forChannel: 0))

}

// Store the sound level in the array

private func addSoundMeter(item: Float) {

if soundMeters.count < soundMeterCount {

soundMeters.append(item)

} else {

// Drop the oldest reading and append the new one

soundMeters.removeFirst()

soundMeters.append(item)

NotificationCenter.default.post(name: NSNotification.Name.init("updateMeters"), object: soundMeters)

}

}

// Recording settings

func setaudioRecorderDict() -> Dictionary<String, Any>

{

self.audioRecorderDict.updateValue(NSNumber.init(value: kAudioFormatMPEG4AAC), forKey: AVFormatIDKey)

self.audioRecorderDict.updateValue(NSNumber.init(value: 11025), forKey: AVSampleRateKey)

self.audioRecorderDict.updateValue(NSNumber.init(value: 2), forKey: AVNumberOfChannelsKey)

self.audioRecorderDict.updateValue(NSNumber.init(value: 16), forKey: AVLinearPCMBitDepthKey)

self.audioRecorderDict.updateValue(NSNumber.init(value: AVAudioQuality.high.rawValue), forKey: AVEncoderAudioQualityKey)

return self.audioRecorderDict

}

func killtime()

{

self.audioRecorderTimer?.invalidate() // the timer is nil if recording never started

}

func audioRecorderStartWithFilePath(filepatch:String)

{

let urlAudioRecorder = NSURL.fileURL(withPath: filepatch)

print(urlAudioRecorder)

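do {

self.audioRecorder = try AVAudioRecorder(url: urlAudioRecorder, settings: setaudioRecorderDict())

} catch {

// Without a recorder there is nothing to meter, so stop here

print("Failed to create AVAudioRecorder: \(error)")

return

}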

self.audioRecorder.isMeteringEnabled = true

self.audioRecorder.delegate = self

if (self.audioRecorder != nil) {

let recordSession = AVAudioSession.sharedInstance()

do{

try recordSession.setCategory(.playAndRecord, mode: .default, options: [])

try recordSession.setMode(AVAudioSession.Mode.measurement)

// Activate the session

try recordSession.setActive(true, options: .notifyOthersOnDeactivation)

}catch{

print("Throws:\(error)")

}

}

if self.audioRecorder.prepareToRecord() {

self.audioRecorder.record()

timerStart()

print("Recording started")

}

}

required init?(coder aDecoder: NSCoder) {

fatalError("init(coder:) has not been implemented")

}

}

extension VoiceWaveView:AVAudioRecorderDelegate

{

func audioRecorderEncodeErrorDidOccur(_ recorder: AVAudioRecorder, error: Error?) {

}

func audioRecorderDidFinishRecording(_ recorder: AVAudioRecorder, successfully flag: Bool) {

}

}
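
The two pieces of logic that drive the waveform, turning a meter reading into a bar height and keeping a sliding window of recent readings, can be tried on their own without any UIKit or recording setup. The following is only a minimal sketch, not the class's actual code: `barHeight(forPower:floor:maxHeight:)` and `MeterWindow` are hypothetical names introduced for illustration, and the -46 dB floor and the cap of 55 are simply the constants used in `updateView`.

```swift
import Foundation

/// Hypothetical helper: maps an average power reading (dBFS) to a bar height.
/// Readings below `floor` count as silence and produce a height of 0.
func barHeight(forPower power: Float, floor: Float = -46, maxHeight: Double = 55) -> Double {
    guard power >= floor else { return 0 }
    return min(Double(power - floor), maxHeight)
}

/// Hypothetical fixed-capacity window, mirroring what `addSoundMeter` does with `soundMeters`.
struct MeterWindow {
    let capacity: Int
    private(set) var values: [Float] = []

    init(capacity: Int) {
        // Assumption: clamp to at least 1 so the window can always hold a reading.
        self.capacity = max(capacity, 1)
    }

    /// Adds a reading. Returns true only when the window was already full,
    /// which is the point where the class posts "updateMeters".
    mutating func push(_ value: Float) -> Bool {
        if values.count < capacity {
            values.append(value)
            return false
        }
        values.removeFirst()   // drop the oldest reading
        values.append(value)   // append the newest one
        return true
    }
}

// Usage: feed a few readings and print bar heights once the window slides.
var window = MeterWindow(capacity: 3)
for power in [-60, -30, -10, 0] as [Float] {
    if window.push(power) {
        print(window.values.map { barHeight(forPower: $0) })  // prints [16.0, 36.0, 46.0]
    }
}
```

Keeping these as plain value types makes the thresholds easy to tune and check before wiring them into the `CAShapeLayer` drawing.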