Python crawler: downloading a novel

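I've recently been reading the original English edition of The Wonderful Wizard of Oz (《绿野仙踪》) on Shuidi Reading (水滴阅读), so I downloaded the Chinese translation from PP Zuowen (ppzuowen.com).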

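When the download reached Chapter 12, the page's HTML seemed to be broken and the script could not find the URL of the next chapter. Skipping Chapter 12 by changing the start URL to Chapter 13 let the download continue.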
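If the Chapter 12 problem is that the bottom navigation bar (`div.www3` / `span.www4`) is malformed while a "下一..." ("next ...") link still exists somewhere on the page (an assumption; the actual cause wasn't investigated), a more tolerant fallback is to scan every `<a>` tag instead of only the navigation spans. The sketch below does this with the standard library's `html.parser`, so it doesn't depend on how `lxml` repairs broken markup. `NextLinkFinder` and `find_next_url` are hypothetical helper names, not part of the script.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class NextLinkFinder(HTMLParser):
    """Collect the href of every <a> whose text contains the marker ("下一" = "next")."""

    def __init__(self, marker="下一"):
        super().__init__()
        self.marker = marker
        self.links = []    # hrefs of matching anchors, in page order
        self._href = None  # href of the <a> we are currently inside, if any
        self._text = []    # text fragments seen inside that <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            if self.marker in "".join(self._text):
                self.links.append(self._href)
            self._href = None


def find_next_url(html, page_url, marker="下一"):
    """Return the absolute URL of the first 'next' link on the page, or None."""
    finder = NextLinkFinder(marker)
    finder.feed(html)
    finder.close()
    # Resolve a relative href against the URL of the page it came from
    return urljoin(page_url, finder.links[0]) if finder.links else None
```

`parseURL` could fall back to something like this when its `div.www3` lookup finds nothing, rather than requiring a manual restart from Chapter 13.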

```python
#coding=utf-8
import io
import os
import sys
import urllib.request

from bs4 import BeautifulSoup

# Re-wrap stdout as UTF-8 so Chinese chapter titles can be printed
sys.stdout = io.TextIOWrapper(sys.stdout.buffer, encoding='utf-8')

base_dir = "D:/python/src/lvyexianzong/"
base_url = "https://www.ppzuowen.com/"


def parseURL(url):
    """Download one chapter, save it as a .txt file, and return the href of the next chapter (or None)."""
    if not url.startswith("http"):
        url = base_url + url
    req0 = urllib.request.Request(url)
    req0.add_header("User-Agent", "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/59.0.3071.115 Safari/537.36")  # pretend to be a browser
    html0 = urllib.request.urlopen(req0).read()
    soup0 = BeautifulSoup(html0, 'lxml')

    # Save the chapter: the title is in <h2>, the text in the first <p> of the article body
    try:
        div_top = soup0.find('div', class_="articleBody articleContent1")
        h2_string = div_top.find('h2').string
        fileName = h2_string if h2_string is not None else "unknown"
        # Strip line breaks and double quotes from the chapter text
        content = div_top.find('p').get_text().replace("\n", "").replace('"', "")
        with open(base_dir + fileName + ".txt", 'w', encoding='utf-8-sig') as f:
            f.write(" " * 10 + fileName + "\n" * 2)
            f.write(" " * 4 + content)
        print("downloading " + fileName + " finished")
    except Exception:
        print("parse " + url + " error")

    # Look for the "下一..." ("next ...") link in the navigation bar at the bottom of the page
    try:
        bottom = soup0.find('div', class_="www3")
        for item in bottom.find_all('span', class_="www4"):
            a = item.find('a')
            if a is not None and a.string and "下一" in a.string and a.get('href'):
                return a['href']
    except Exception:
        print("Parse error")
    return None  # no next link found: main() stops here


def main():
    url = "https://www.ppzuowen.com/book/lvyexianzong/9419.html"
    while url is not None:
        url = parseURL(url)


if __name__ == "__main__":
    os.makedirs(base_dir, exist_ok=True)
    main()
    print("Exit!!")
```
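The `while` loop in `main()` stops only when `parseURL` returns `None`, so a "next" link that ever pointed back to an earlier chapter would make it run forever. Below is a hedged sketch of a more defensive loop. It assumes a hypothetical callback `fetch_next(url)` that does what `parseURL` does (download and save one chapter) and returns the raw next-chapter href or `None`. The loop remembers visited URLs, caps the number of pages, and resolves relative hrefs with `urllib.parse.urljoin` against the current page URL.

```python
from urllib.parse import urljoin


def crawl(start_url, fetch_next, max_pages=500):
    """Follow 'next chapter' links starting at start_url.

    fetch_next(url) (hypothetical callback) should save one chapter and return
    the href of the next chapter (possibly relative), or None at the end.
    Returns the absolute URLs visited, in order.
    """
    visited = []
    seen = set()
    url = start_url
    while url is not None and len(visited) < max_pages:
        if url in seen:  # link points back to an earlier page: stop instead of looping
            break
        seen.add(url)
        visited.append(url)
        href = fetch_next(url)
        # urljoin handles "9420.html", "/book/...", and full "https://..." hrefs alike
        url = urljoin(url, href) if href else None
    return visited
```

For example, with a fake site where 9419.html (the script's start URL) links to made-up IDs 9420 and 9421, `crawl(start, pages.get)` visits the three pages in order and stops when the last one returns `None`. Note that `urljoin` resolves a relative href against the current page rather than against `base_url`, so it's worth checking which one matches the site's actual links.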

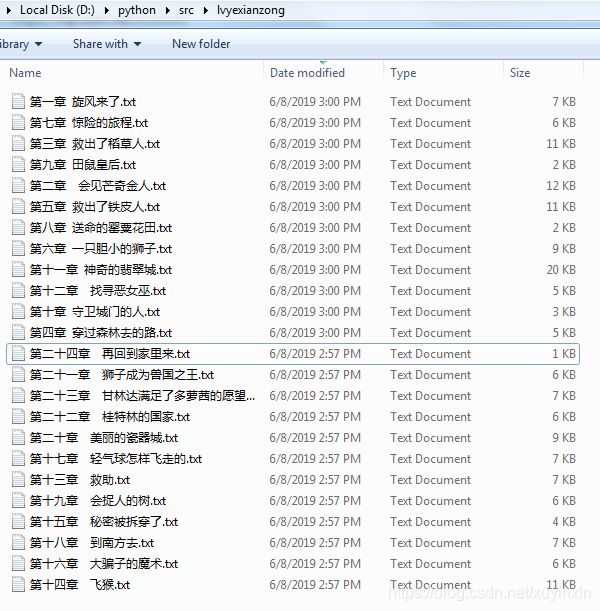

Result: