The Most Detailed Walkthrough of the SSD Keras Source Code: Training the Model


Training

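Last time I covered how to get testing running; this post covers how to get training running. Without further ado, let's look at the code in ssd300_training.ipynb. It needs a few modifications, so I'll go through it section by section. It starts with the parameter settings: the input image height, width and number of channels, the number of classes, the anchor scaling factors, and so on. See the paper for the details, which will also be analyzed later. For now the goal is just to understand the training flow: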

```python
img_height = 300 # Height of the model input images
img_width = 300 # Width of the model input images
img_channels = 3 # Number of color channels of the model input images
mean_color = [123, 117, 104] # The per-channel mean of the images in the dataset. Do not change this value if you're using any of the pre-trained weights.
swap_channels = [2, 1, 0] # The color channel order in the original SSD is BGR, so we'll have the model reverse the color channel order of the input images.
n_classes = 20 # Number of positive classes, e.g. 20 for Pascal VOC, 80 for MS COCO
scales_pascal = [0.1, 0.2, 0.37, 0.54, 0.71, 0.88, 1.05] # The anchor box scaling factors used in the original SSD300 for the Pascal VOC datasets
scales_coco = [0.07, 0.15, 0.33, 0.51, 0.69, 0.87, 1.05] # The anchor box scaling factors used in the original SSD300 for the MS COCO datasets
scales = scales_pascal
aspect_ratios = [[1.0, 2.0, 0.5],
                 [1.0, 2.0, 0.5, 3.0, 1.0/3.0],
                 [1.0, 2.0, 0.5, 3.0, 1.0/3.0],
                 [1.0, 2.0, 0.5, 3.0, 1.0/3.0],
                 [1.0, 2.0, 0.5],
                 [1.0, 2.0, 0.5]] # The anchor box aspect ratios used in the original SSD300; the order matters
two_boxes_for_ar1 = True
steps = [8, 16, 32, 64, 100, 300] # The space between two adjacent anchor box center points for each predictor layer.
offsets = [0.5, 0.5, 0.5, 0.5, 0.5, 0.5] # The offsets of the first anchor box center points from the top and left borders of the image as a fraction of the step size for each predictor layer.
clip_boxes = False # Whether or not to clip the anchor boxes to lie entirely within the image boundaries
variances = [0.1, 0.1, 0.2, 0.2] # The variances by which the encoded target coordinates are divided as in the original implementation
normalize_coords = True
```
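The sketch below shows how these parameters fit together. It is my own code, not code from ssd_keras, and it re-derives the anchor boxes from the parameters.

- **Boxes per cell:** each aspect ratio gives one box per feature-map cell. `two_boxes_for_ar1=True` adds a second square box for ratio 1, with scale sqrt(s_k * s_{k+1}) as in the SSD paper. This is why `scales` has 7 entries for 6 predictor layers.
- **Anchor centers:** these lie at (offset + i) * step along each axis.
- **Assumptions:** the feature-map sizes (38, 19, 10, 5, 3, 1) are read off the `model.summary()` log further down. The box-size rule (scale times the shorter image side, stretched by sqrt(ar)) is an assumption based on the paper.
- **Hypothetical names:** `boxes_per_cell`, `box_sizes` and `centers` are hypothetical helpers.

```python
import math

# Values copied from the parameter block above.
img_height, img_width = 300, 300
scales = [0.1, 0.2, 0.37, 0.54, 0.71, 0.88, 1.05]
aspect_ratios = [[1.0, 2.0, 0.5],
                 [1.0, 2.0, 0.5, 3.0, 1.0/3.0],
                 [1.0, 2.0, 0.5, 3.0, 1.0/3.0],
                 [1.0, 2.0, 0.5, 3.0, 1.0/3.0],
                 [1.0, 2.0, 0.5],
                 [1.0, 2.0, 0.5]]
two_boxes_for_ar1 = True
steps = [8, 16, 32, 64, 100, 300]
offsets = [0.5] * 6
# Assumption: feature-map sizes of the six predictor layers, taken from model.summary().
feature_map_sizes = [38, 19, 10, 5, 3, 1]


def boxes_per_cell(ars, two_boxes_for_ar1=True):
    """One box per aspect ratio, plus an extra square box for ratio 1 if requested."""
    n = len(ars)
    if two_boxes_for_ar1 and 1.0 in ars:
        n += 1
    return n


def box_sizes(layer, size=min(img_height, img_width)):
    """(width, height) in pixels of each anchor of one layer (assumed paper-style formula)."""
    s, s_next = scales[layer], scales[layer + 1]
    sizes = []
    for ar in aspect_ratios[layer]:
        sizes.append((s * size * math.sqrt(ar), s * size / math.sqrt(ar)))
        if ar == 1.0 and two_boxes_for_ar1:
            # Extra square box with scale sqrt(s_k * s_{k+1}): this is why scales has 7 entries.
            s_prime = math.sqrt(s * s_next)
            sizes.append((s_prime * size, s_prime * size))
    return sizes


def centers(layer):
    """Anchor center coordinates along one axis: (offset + i) * step."""
    return [(offsets[layer] + i) * steps[layer] for i in range(feature_map_sizes[layer])]


n_boxes = [boxes_per_cell(ars, two_boxes_for_ar1) for ars in aspect_ratios]
total = sum(f * f * n for f, n in zip(feature_map_sizes, n_boxes))
print(n_boxes)  # boxes per feature-map cell for each predictor layer
print(total)    # total number of anchors per image
print([(round(w, 1), round(h, 1)) for w, h in box_sizes(0)])
print(centers(0)[:3], centers(0)[-1])
```

The per-layer counts are 4, 6, 6, 6, 4, 4. The total of 8,732 anchors is exactly the second dimension of `mbox_conf`, `mbox_loc` and `predictions` in the summary log.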

Next, create the model (what happens inside it will be covered in a later post):

```python
from keras import backend as K
from models.keras_ssd300 import ssd_300

K.clear_session() # Clear previous models from memory.

model = ssd_300(image_size=(img_height, img_width, img_channels),
                n_classes=n_classes,
                mode='training',
                l2_regularization=0.0005,
                scales=scales,
                aspect_ratios_per_layer=aspect_ratios,
                two_boxes_for_ar1=two_boxes_for_ar1,
                steps=steps,
                offsets=offsets,
                clip_boxes=clip_boxes,
                variances=variances,
                normalize_coords=normalize_coords,
                subtract_mean=mean_color,
                swap_channels=swap_channels)

model.summary()
```

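Next, load the pretrained VGG16 weights and compile the model. The loss function is a custom one (also covered later); it is essentially the loss from the SSD paper: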

```python
from keras.optimizers import Adam, SGD
from keras_loss_function.keras_ssd_loss import SSDLoss

# Pretrained VGG16 weights
# TODO: Set the path to the weights you want to load.
weights_path = 'VGG_ILSVRC_16_layers_fc_reduced.h5'

# Load the weights. by_name=True only fills layers whose names match those stored in the file (the VGG16 base)
model.load_weights(weights_path, by_name=True)

# 3: Instantiate an optimizer and the SSD loss function and compile the model.
# If you want to follow the original Caffe implementation, use the preset SGD
# optimizer, otherwise I'd recommend the commented-out Adam optimizer.
# The paper's original Caffe implementation uses SGD; Adam works too
#adam = Adam(lr=0.001, beta_1=0.9, beta_2=0.999, epsilon=1e-08, decay=0.0)
sgd = SGD(lr=0.001, momentum=0.9, decay=0.0, nesterov=False)

# Create the SSD loss function
ssd_loss = SSDLoss(neg_pos_ratio=3, alpha=1.0)

model.compile(optimizer=sgd, loss=ssd_loss.compute_loss)
```

Download link for the model weights (Baidu Pan): https://pan.baidu.com/s/1y19WX5FocB1xidL2GLF0qg&shfl=sharepset

Extraction code: t51o
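The `SSDLoss(neg_pos_ratio=3, alpha=1.0)` configured above follows the SSD paper's loss. That loss has three parts:

- a softmax confidence loss, plus `alpha` times a smooth L1 localization loss,
- divided by the number of positive (matched) anchors,
- with hard negative mining, which keeps only the highest-loss background anchors (at most `neg_pos_ratio` per positive).

The pure-Python sketch below illustrates that idea for a single image. It is a simplification written for this post, not the `SSDLoss` class itself, which works on batched tensors. The function names and the toy inputs are hypothetical.

```python
import math


def smooth_l1(pred, target):
    """Smooth L1 loss summed over the 4 encoded box offsets."""
    total = 0.0
    for p, t in zip(pred, target):
        d = abs(p - t)
        total += 0.5 * d * d if d < 1.0 else d - 0.5
    return total


def cross_entropy(probs, class_id, eps=1e-15):
    """Negative log-likelihood of the true class given softmax probabilities."""
    return -math.log(max(probs[class_id], eps))


def ssd_loss(conf_pred, loc_pred, labels, loc_true, neg_pos_ratio=3, alpha=1.0):
    """Simplified SSD loss for ONE image.

    conf_pred: per-anchor softmax probabilities (class 0 = background)
    loc_pred, loc_true: per-anchor encoded offsets (4 numbers each)
    labels: per-anchor matched class id, 0 for background anchors
    """
    pos = [i for i, c in enumerate(labels) if c > 0]
    neg = [i for i, c in enumerate(labels) if c == 0]

    pos_conf = sum(cross_entropy(conf_pred[i], labels[i]) for i in pos)
    # Hard negative mining: keep only the hardest negatives, at most neg_pos_ratio per positive.
    neg_losses = sorted((cross_entropy(conf_pred[i], 0) for i in neg), reverse=True)
    n_keep = min(len(neg_losses), neg_pos_ratio * len(pos))
    neg_conf = sum(neg_losses[:n_keep])

    # Localization loss only counts for positive anchors.
    loc = sum(smooth_l1(loc_pred[i], loc_true[i]) for i in pos)
    n_pos = max(1, len(pos))  # avoid dividing by zero when nothing was matched
    return (pos_conf + neg_conf + alpha * loc) / n_pos


# Toy example: 5 anchors, 2 classes (background + 1 object class), 1 positive anchor.
conf_pred = [[0.2, 0.8], [0.9, 0.1], [0.6, 0.4], [0.99, 0.01], [0.5, 0.5]]
labels = [1, 0, 0, 0, 0]
loc_pred = [[0.1, 0.0, 0.0, 0.0]] + [[0.0] * 4 for _ in range(4)]
loc_true = [[0.0] * 4 for _ in range(5)]
loss = ssd_loss(conf_pred, loc_pred, labels, loc_true)
print(loss)
```

With one positive anchor and `neg_pos_ratio=3`, only the three hardest of the four negatives contribute. The easy negative (background probability 0.99) is ignored, which keeps the many easy background anchors from dominating the loss.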

Next come the data generators and the parsing of the annotation files. Make sure the paths are filled in correctly:

```python
from data_generator.object_detection_2d_data_generator import DataGenerator

train_dataset = DataGenerator(load_images_into_memory=False, hdf5_dataset_path=None)
val_dataset = DataGenerator(load_images_into_memory=False, hdf5_dataset_path=None)

# 2: Parse the image and label lists for the training and validation datasets. This can take a while.
# TODO: Set the paths to the datasets here.
# Configure the dataset paths

# The directories that contain the images.
VOC_2007_images_dir = '../dataset/VOCtrainval_06-Nov-2007/VOCdevkit/VOC2007/JPEGImages/'
VOC_2012_images_dir = '../dataset/VOCtrainval_11-May-2012/VOCdevkit/VOC2012/JPEGImages/'
VOC_2007_test_images_dir = '../dataset/VOCtest_06-Nov-2007/VOCdevkit/VOC2007/JPEGImages/'

# The directories that contain the annotations.
VOC_2007_annotations_dir = '../dataset/VOCtrainval_06-Nov-2007/VOCdevkit/VOC2007/Annotations/'
VOC_2012_annotations_dir = '../dataset/VOCtrainval_11-May-2012/VOCdevkit/VOC2012/Annotations/'
VOC_2007_test_annotations_dir = '../dataset/VOCtest_06-Nov-2007/VOCdevkit/VOC2007/Annotations/'

# The paths to the image sets.
VOC_2007_train_image_set_filename = '../dataset/VOCtrainval_06-Nov-2007/VOCdevkit/VOC2007/ImageSets/Main/train.txt'
VOC_2012_train_image_set_filename = '../dataset/VOCtrainval_11-May-2012/VOCdevkit/VOC2012/ImageSets/Main/train.txt'
VOC_2007_val_image_set_filename = '../dataset/VOCtrainval_06-Nov-2007/VOCdevkit/VOC2007/ImageSets/Main/val.txt'
VOC_2012_val_image_set_filename = '../dataset/VOCtrainval_11-May-2012/VOCdevkit/VOC2012/ImageSets/Main/val.txt'
VOC_2007_trainval_image_set_filename = '../dataset/VOCtrainval_06-Nov-2007/VOCdevkit/VOC2007/ImageSets/Main/trainval.txt'
VOC_2012_trainval_image_set_filename = '../dataset/VOCtrainval_11-May-2012/VOCdevkit/VOC2012/ImageSets/Main/trainval.txt'
VOC_2007_test_image_set_filename = '../dataset/VOCtest_06-Nov-2007/VOCdevkit/VOC2007/ImageSets/Main/test.txt'

# The XML parser needs to know what object class names to look for and in which order to map them to integers.
classes = ['background',
           'aeroplane', 'bicycle', 'bird', 'boat',
           'bottle', 'bus', 'car', 'cat',
           'chair', 'cow', 'diningtable', 'dog',
           'horse', 'motorbike', 'person', 'pottedplant',
           'sheep', 'sofa', 'train', 'tvmonitor']

# Parse the XML annotation files to get the labels
# Training set: VOC2007 trainval + VOC2012 trainval
train_dataset.parse_xml(images_dirs=[VOC_2007_images_dir,
                                     VOC_2012_images_dir],
                        image_set_filenames=[VOC_2007_trainval_image_set_filename,
                                             VOC_2012_trainval_image_set_filename],
                        annotations_dirs=[VOC_2007_annotations_dir,
                                          VOC_2012_annotations_dir],
                        classes=classes,
                        include_classes='all',
                        exclude_truncated=False,
                        exclude_difficult=False,
                        ret=False)

# Validation set: VOC2007 test, without the objects marked "difficult"
val_dataset.parse_xml(images_dirs=[VOC_2007_test_images_dir],
                      image_set_filenames=[VOC_2007_test_image_set_filename],
                      annotations_dirs=[VOC_2007_test_annotations_dir],
                      classes=classes,
                      include_classes='all',
                      exclude_truncated=False,
                      exclude_difficult=True,
                      ret=False)
```
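`parse_xml` reads the Pascal VOC XML annotation files. The sketch below gives a rough idea of what that involves. It is not the `DataGenerator` code, which also tracks image paths, truncation and class filtering.

- **Tooling:** it uses the standard library's `xml.etree.ElementTree`. The helper name `parse_voc_annotation` and the sample XML are made up.
- **Class ids:** each `<object>` yields a class id, which is its index in `classes`, so 'background' is 0.
- **Coordinates:** each object also yields its four corner coordinates.
- **Difficult objects:** `exclude_difficult=True` (used for the validation set above) drops objects flagged `<difficult>1</difficult>`.

```python
import xml.etree.ElementTree as ET

classes = ['background',
           'aeroplane', 'bicycle', 'bird', 'boat',
           'bottle', 'bus', 'car', 'cat',
           'chair', 'cow', 'diningtable', 'dog',
           'horse', 'motorbike', 'person', 'pottedplant',
           'sheep', 'sofa', 'train', 'tvmonitor']


def parse_voc_annotation(xml_text, classes, exclude_difficult=False):
    """Hypothetical helper: [class_id, xmin, ymin, xmax, ymax] for each object in a VOC XML string."""
    root = ET.fromstring(xml_text)
    boxes = []
    for obj in root.iter('object'):
        name = obj.find('name').text
        difficult = obj.find('difficult')
        if exclude_difficult and difficult is not None and int(difficult.text) == 1:
            continue
        bndbox = obj.find('bndbox')
        coords = [int(float(bndbox.find(tag).text)) for tag in ('xmin', 'ymin', 'xmax', 'ymax')]
        # The class id is the position in `classes`, so 'background' gets id 0.
        boxes.append([classes.index(name)] + coords)
    return boxes


# Made-up annotation in the VOC layout, for illustration only.
sample = """<annotation>
  <filename>example.jpg</filename>
  <object>
    <name>dog</name>
    <difficult>0</difficult>
    <bndbox><xmin>48</xmin><ymin>240</ymin><xmax>195</xmax><ymax>371</ymax></bndbox>
  </object>
  <object>
    <name>person</name>
    <difficult>1</difficult>
    <bndbox><xmin>8</xmin><ymin>12</ymin><xmax>352</xmax><ymax>498</ymax></bndbox>
  </object>
</annotation>"""

print(parse_voc_annotation(sample, classes))
print(parse_voc_annotation(sample, classes, exclude_difficult=True))
```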

Then set up data augmentation and label encoding:

```python
from data_generator.data_augmentation_chain_original_ssd import SSDDataAugmentation
from data_generator.object_detection_2d_photometric_ops import ConvertTo3Channels
from data_generator.object_detection_2d_geometric_ops import Resize
from ssd_encoder_decoder.ssd_input_encoder import SSDInputEncoder

# 3: Set the batch size.
batch_size = 8 # Change the batch size if you like, or if you run into GPU memory issues.

# 4: Set the image transformations for pre-processing and data augmentation options.

# For the training generator:
# Data augmentation
ssd_data_augmentation = SSDDataAugmentation(img_height=img_height,
                                            img_width=img_width,
                                            background=mean_color)

# For the validation generator:
# Make sure every image has exactly 3 channels (e.g. grayscale or RGBA inputs)
convert_to_3_channels = ConvertTo3Channels()
# Resize to the model input size
resize = Resize(height=img_height, width=img_width)

# 5: Instantiate an encoder that can encode ground truth labels into the format needed by the SSD loss function.
# The encoder constructor needs the spatial dimensions of the model's predictor layers to create the anchor boxes.
predictor_sizes = [model.get_layer('conv4_3_norm_mbox_conf').output_shape[1:3],
                   model.get_layer('fc7_mbox_conf').output_shape[1:3],
                   model.get_layer('conv6_2_mbox_conf').output_shape[1:3],
                   model.get_layer('conv7_2_mbox_conf').output_shape[1:3],
                   model.get_layer('conv8_2_mbox_conf').output_shape[1:3],
                   model.get_layer('conv9_2_mbox_conf').output_shape[1:3]]

# Encode the ground-truth labels into the format the SSD loss expects
ssd_input_encoder = SSDInputEncoder(img_height=img_height,
                                    img_width=img_width,
                                    n_classes=n_classes,
                                    predictor_sizes=predictor_sizes,
                                    scales=scales,
                                    aspect_ratios_per_layer=aspect_ratios,
                                    two_boxes_for_ar1=two_boxes_for_ar1,
                                    steps=steps,
                                    offsets=offsets,
                                    clip_boxes=clip_boxes,
                                    variances=variances,
                                    matching_type='multi',
                                    pos_iou_threshold=0.5,
                                    neg_iou_limit=0.5,
                                    normalize_coords=normalize_coords)

# 6: Create the generator handles that will be passed to Keras' `fit_generator()` function.
train_generator = train_dataset.generate(batch_size=batch_size,
                                         shuffle=True,
                                         transformations=[ssd_data_augmentation],
                                         label_encoder=ssd_input_encoder,
                                         returns={'processed_images',
                                                  'encoded_labels'},
                                         keep_images_without_gt=False)

val_generator = val_dataset.generate(batch_size=batch_size,
                                     shuffle=False,
                                     transformations=[convert_to_3_channels,
                                                      resize],
                                     label_encoder=ssd_input_encoder,
                                     returns={'processed_images',
                                              'encoded_labels'},
                                     keep_images_without_gt=False)

# Get the number of samples in the training and validation datasets.
train_dataset_size = train_dataset.get_dataset_size()
val_dataset_size = val_dataset.get_dataset_size()

print("Number of images in the training dataset:\t{:>6}".format(train_dataset_size))
print("Number of images in the validation dataset:\t{:>6}".format(val_dataset_size))
```
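`SSDInputEncoder` does two jobs: it matches ground-truth boxes to anchors, and it turns each match into the offsets the network learns to regress. The sketch below illustrates both steps under simplifying assumptions; it is not the ssd_keras implementation.

- **Matching** follows the idea behind `matching_type='multi'`. First, every ground-truth box claims its best-overlapping anchor. Then every other anchor whose IoU with a box reaches `pos_iou_threshold` (0.5 here) is matched too. Everything else is background.
- **Encoding** uses the usual SSD centroid offsets divided by `variances`.
- **Simplification:** unlike the real encoder, this version does not resolve two boxes competing for the same best anchor.
- **Hypothetical pieces:** the helper names and the example boxes are made up.

```python
import math


def iou(a, b):
    """Intersection over union of two boxes given as (xmin, ymin, xmax, ymax)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def to_centroids(box):
    """(xmin, ymin, xmax, ymax) -> (cx, cy, w, h)."""
    xmin, ymin, xmax, ymax = box
    return (xmin + xmax) / 2, (ymin + ymax) / 2, xmax - xmin, ymax - ymin


def encode(gt, anchor, variances=(0.1, 0.1, 0.2, 0.2)):
    """Offsets of a ground-truth box relative to an anchor, divided by the variances."""
    gcx, gcy, gw, gh = to_centroids(gt)
    acx, acy, aw, ah = to_centroids(anchor)
    return ((gcx - acx) / aw / variances[0],
            (gcy - acy) / ah / variances[1],
            math.log(gw / aw) / variances[2],
            math.log(gh / ah) / variances[3])


def match_multi(gt_boxes, anchors, pos_iou_threshold=0.5):
    """Per anchor: index of the matched ground-truth box, or -1 for background."""
    matches = [-1] * len(anchors)
    # Step 1: each ground-truth box claims its best anchor, so no box goes unmatched.
    for g, gt in enumerate(gt_boxes):
        best = max(range(len(anchors)), key=lambda a: iou(gt, anchors[a]))
        matches[best] = g
    # Step 2: remaining anchors with enough overlap are matched to their best box.
    for a, anchor in enumerate(anchors):
        if matches[a] != -1 or not gt_boxes:
            continue
        ious = [iou(gt, anchor) for gt in gt_boxes]
        if max(ious) >= pos_iou_threshold:
            matches[a] = ious.index(max(ious))
    return matches


# Made-up example: one ground-truth box and three anchors, in pixel coordinates.
gt_boxes = [(10, 10, 110, 110)]
anchors = [(0, 0, 100, 100), (5, 5, 105, 105), (200, 200, 300, 300)]
matches = match_multi(gt_boxes, anchors)
targets = [encode(gt_boxes[m], anchors[i]) if m >= 0 else None
           for i, m in enumerate(matches)]
print(matches)
print(targets)
```

Here the second anchor is the best match (IoU about 0.82), and the first anchor (IoU about 0.68) is also matched in step 2. That is the point of "multi" matching: one object can supervise several anchors. The cx/cy and log-w/h offsets are ratios, so they come out the same whether coordinates are in pixels or normalized by the image size.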

Finally, define the learning-rate schedule, the checkpoints, and the other callbacks:

```python
from keras.callbacks import ModelCheckpoint, CSVLogger, LearningRateScheduler, TerminateOnNaN

# Define a learning rate schedule.
# Step schedule: lower the learning rate at epochs 80 and 100
def lr_schedule(epoch):
    if epoch < 80:
        return 0.001
    elif epoch < 100:
        return 0.0001
    else:
        return 0.00001

# Define model callbacks.
# TODO: Set the filepath under which you want to save the model.
# Checkpoint: monitor val_loss and only save the best model
model_checkpoint = ModelCheckpoint(filepath='ssd300_pascal_07+12_epoch-{epoch:02d}_loss-{loss:.4f}_val_loss-{val_loss:.4f}.h5',
                                   monitor='val_loss',
                                   verbose=1,
                                   save_best_only=True,
                                   save_weights_only=False,
                                   mode='auto',
                                   period=1)
#model_checkpoint.best =

# Write the training log to a CSV file
csv_logger = CSVLogger(filename='ssd300_pascal_07+12_training_log.csv',
                       separator=',',
                       append=True)

# Adjust the learning rate automatically according to the current epoch
learning_rate_scheduler = LearningRateScheduler(schedule=lr_schedule,
                                                verbose=1)

# Stop training if the loss becomes NaN
terminate_on_nan = TerminateOnNaN()

# Callbacks
callbacks = [model_checkpoint,
             csv_logger,
             learning_rate_scheduler,
             terminate_on_nan]
```
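With `fit_generator()`, an "epoch" here is simply `steps_per_epoch` batches, not a full pass over the dataset. The schedule above is therefore really a schedule over iterations. The sketch below converts the epoch-based schedule into iteration and image counts.

- **Inputs:** it uses the same `lr_schedule` and the values `final_epoch = 120`, `steps_per_epoch = 1000` and `batch_size = 8` set elsewhere in this post.
- **Hypothetical helper:** `lr_phases` is hypothetical, not part of Keras or ssd_keras.

```python
def lr_schedule(epoch):
    # Same step schedule as above; Keras passes zero-based epoch indices.
    if epoch < 80:
        return 0.001
    elif epoch < 100:
        return 0.0001
    else:
        return 0.00001


def lr_phases(final_epoch=120, steps_per_epoch=1000, batch_size=8):
    """Group consecutive epochs with the same learning rate and count iterations and images."""
    phases = []
    for epoch in range(final_epoch):
        lr = lr_schedule(epoch)
        if phases and phases[-1]['lr'] == lr:
            phases[-1]['epochs'] += 1
        else:
            phases.append({'lr': lr, 'epochs': 1})
    for p in phases:
        p['iterations'] = p['epochs'] * steps_per_epoch
        p['images'] = p['iterations'] * batch_size
    return phases


for phase in lr_phases():
    print(phase)
```

With these settings the learning rate drops after 80,000 and 100,000 iterations, and the full run is 120,000 iterations. If you change `steps_per_epoch` or `batch_size`, the same epoch boundaries mean a different amount of training.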

```python
# ## 5. Train
# In order to reproduce the training of the "07+12" model mentioned above, at 1,000 training steps per epoch you'd have to train for 120 epochs. That is going to take really long though, so you might not want to do all 120 epochs in one go and instead train only for a few epochs at a time. You can find a summary of a full training [here](https://github.com/pierluigiferrari/ssd_keras/blob/master/training_summaries/ssd300_pascal_07%2B12_training_summary.md).
#
# In order to only run a partial training and resume smoothly later on, there are a few things you should note:
# 1. Always load the full model if you can, rather than building a new model and loading previously saved weights into it. Optimizers like SGD or Adam keep running averages of past gradient moments internally. If you always save and load full models when resuming a training, then the state of the optimizer is maintained and the training picks up exactly where it left off. If you build a new model and load weights into it, the optimizer is being initialized from scratch, which, especially in the case of Adam, leads to small but unnecessary setbacks every time you resume the training with previously saved weights.
# 2. In order for the learning rate scheduler callback above to work properly, `fit_generator()` needs to know which epoch we're in, otherwise it will start with epoch 0 every time you resume the training. Set `initial_epoch` to be the next epoch of your training. Note that this parameter is zero-based, i.e. the first epoch is epoch 0. If you had trained for 10 epochs previously and now you'd want to resume the training from there, you'd set `initial_epoch = 10` (since epoch 10 is the eleventh epoch). Furthermore, set `final_epoch` to the last epoch you want to run. To stick with the previous example, if you had trained for 10 epochs previously and now you'd want to train for another 10 epochs, you'd set `initial_epoch = 10` and `final_epoch = 20`.
# 3. In order for the model checkpoint callback above to work correctly after a kernel restart, set `model_checkpoint.best` to the best validation loss from the previous training. If you don't do this and a new `ModelCheckpoint` object is created after a kernel restart, that object obviously won't know what the last best validation loss was, so it will always save the weights of the first epoch of your new training and record that loss as its new best loss. This isn't super-important, I just wanted to mention it.

# If you're resuming a previous training, set `initial_epoch` and `final_epoch` accordingly.
initial_epoch = 0
final_epoch = 120
steps_per_epoch = 1000
```
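Points 2 and 3 above require you to know the last finished epoch and the best validation loss so far. The `ModelCheckpoint` file name pattern already encodes both, so a small helper can recover them. This is a hypothetical sketch, not part of ssd_keras, and it rests on two assumptions:

- **1-based epoch numbers:** Keras fills `{epoch}` with a 1-based number. A file tagged `epoch-10` was therefore written after ten finished epochs, which matches `initial_epoch = 10` in the zero-based convention described above.
- **Latest file is the best file:** because of `save_best_only=True`, the file with the lowest `val_loss` is also the most recent one.

The file names in the usage example are made up.

```python
import os
import re

# Matches the tail of names produced by
# 'ssd300_pascal_07+12_epoch-{epoch:02d}_loss-{loss:.4f}_val_loss-{val_loss:.4f}.h5'
CKPT_PATTERN = re.compile(r'epoch-(\d+)_loss-([\d.]+)_val_loss-([\d.]+)\.h5$')


def resume_settings(checkpoint_files):
    """Hypothetical helper: (initial_epoch, best_val_loss, best_file) from checkpoint names.

    Assumes {epoch} holds a 1-based epoch number, so 'epoch-10' means ten finished
    epochs, i.e. initial_epoch = 10 in Keras' zero-based counting.
    """
    parsed = []
    for path in checkpoint_files:
        m = CKPT_PATTERN.search(os.path.basename(path))
        if m:
            parsed.append((int(m.group(1)), float(m.group(3)), path))
    if not parsed:
        return 0, None, None  # nothing saved yet: start from scratch
    # With save_best_only=True the lowest val_loss is also the most recent file.
    epoch, best_val_loss, best_file = min(parsed, key=lambda t: t[1])
    return epoch, best_val_loss, best_file


# Made-up file names, only to demonstrate the parsing.
files = ['ssd300_pascal_07+12_epoch-01_loss-9.8765_val_loss-8.1234.h5',
         'ssd300_pascal_07+12_epoch-03_loss-7.1111_val_loss-6.5432.h5']
initial_epoch, best_val_loss, best_file = resume_settings(files)
print(initial_epoch, best_val_loss, best_file)
# Then, following notes 1-3: load the full model from best_file and set
# model_checkpoint.best = best_val_loss before calling fit_generator().
```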

# 一批批训练

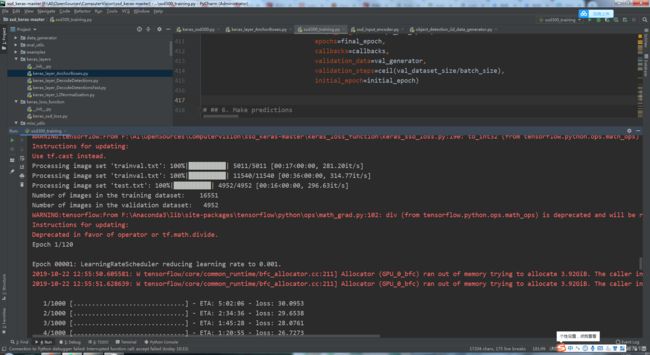

history = model.fit_generator(generator=train_generator,

steps_per_epoch=steps_per_epoch,

epochs=final_epoch,

callbacks=callbacks,

validation_data=val_generator,

validation_steps=ceil(val_dataset_size/batch_size),

initial_epoch=initial_epoch)

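None of the remaining notebook code is needed; with the code above, training will run. In later posts I'll gradually go through the details, such as the model class, the anchor box class and the data generator.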

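Let's look at some of the log output, for example the structure of each layer printed by model.summary(), which helps us understand the architecture better: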

```text
__________________________________________________________________________________________________
Layer (type)                    Output Shape         Param #     Connected to
==================================================================================================
input_1 (InputLayer)            (None, 300, 300, 3)  0
__________________________________________________________________________________________________
identity_layer (Lambda)         (None, 300, 300, 3)  0           input_1[0][0]
__________________________________________________________________________________________________
input_mean_normalization (Lambd (None, 300, 300, 3)  0           identity_layer[0][0]
__________________________________________________________________________________________________
input_channel_swap (Lambda)     (None, 300, 300, 3)  0           input_mean_normalization[0][0]
__________________________________________________________________________________________________
conv1_1 (Conv2D)                (None, 300, 300, 64) 1792        input_channel_swap[0][0]
__________________________________________________________________________________________________
conv1_2 (Conv2D)                (None, 300, 300, 64) 36928       conv1_1[0][0]
__________________________________________________________________________________________________
pool1 (MaxPooling2D)            (None, 150, 150, 64) 0           conv1_2[0][0]
__________________________________________________________________________________________________
conv2_1 (Conv2D)                (None, 150, 150, 128 73856       pool1[0][0]
__________________________________________________________________________________________________
conv2_2 (Conv2D)                (None, 150, 150, 128 147584      conv2_1[0][0]
__________________________________________________________________________________________________
pool2 (MaxPooling2D)            (None, 75, 75, 128)  0           conv2_2[0][0]
__________________________________________________________________________________________________
conv3_1 (Conv2D)                (None, 75, 75, 256)  295168      pool2[0][0]
__________________________________________________________________________________________________
conv3_2 (Conv2D)                (None, 75, 75, 256)  590080      conv3_1[0][0]
__________________________________________________________________________________________________
conv3_3 (Conv2D)                (None, 75, 75, 256)  590080      conv3_2[0][0]
__________________________________________________________________________________________________
pool3 (MaxPooling2D)            (None, 38, 38, 256)  0           conv3_3[0][0]
__________________________________________________________________________________________________
conv4_1 (Conv2D)                (None, 38, 38, 512)  1180160     pool3[0][0]
__________________________________________________________________________________________________
conv4_2 (Conv2D)                (None, 38, 38, 512)  2359808     conv4_1[0][0]
__________________________________________________________________________________________________
conv4_3 (Conv2D)                (None, 38, 38, 512)  2359808     conv4_2[0][0]
__________________________________________________________________________________________________
pool4 (MaxPooling2D)            (None, 19, 19, 512)  0           conv4_3[0][0]
__________________________________________________________________________________________________
conv5_1 (Conv2D)                (None, 19, 19, 512)  2359808     pool4[0][0]
__________________________________________________________________________________________________
conv5_2 (Conv2D)                (None, 19, 19, 512)  2359808     conv5_1[0][0]
__________________________________________________________________________________________________
conv5_3 (Conv2D)                (None, 19, 19, 512)  2359808     conv5_2[0][0]
__________________________________________________________________________________________________
pool5 (MaxPooling2D)            (None, 19, 19, 512)  0           conv5_3[0][0]
__________________________________________________________________________________________________
fc6 (Conv2D)                    (None, 19, 19, 1024) 4719616     pool5[0][0]
__________________________________________________________________________________________________
fc7 (Conv2D)                    (None, 19, 19, 1024) 1049600     fc6[0][0]
__________________________________________________________________________________________________
conv6_1 (Conv2D)                (None, 19, 19, 256)  262400      fc7[0][0]
__________________________________________________________________________________________________
conv6_padding (ZeroPadding2D)   (None, 21, 21, 256)  0           conv6_1[0][0]
__________________________________________________________________________________________________
conv6_2 (Conv2D)                (None, 10, 10, 512)  1180160     conv6_padding[0][0]
__________________________________________________________________________________________________
conv7_1 (Conv2D)                (None, 10, 10, 128)  65664       conv6_2[0][0]
__________________________________________________________________________________________________
conv7_padding (ZeroPadding2D)   (None, 12, 12, 128)  0           conv7_1[0][0]
__________________________________________________________________________________________________
conv7_2 (Conv2D)                (None, 5, 5, 256)    295168      conv7_padding[0][0]
__________________________________________________________________________________________________
conv8_1 (Conv2D)                (None, 5, 5, 128)    32896       conv7_2[0][0]
__________________________________________________________________________________________________
conv8_2 (Conv2D)                (None, 3, 3, 256)    295168      conv8_1[0][0]
__________________________________________________________________________________________________
conv9_1 (Conv2D)                (None, 3, 3, 128)    32896       conv8_2[0][0]
__________________________________________________________________________________________________
conv4_3_norm (L2Normalization)  (None, 38, 38, 512)  512         conv4_3[0][0]
__________________________________________________________________________________________________
conv9_2 (Conv2D)                (None, 1, 1, 256)    295168      conv9_1[0][0]
__________________________________________________________________________________________________
conv4_3_norm_mbox_conf (Conv2D) (None, 38, 38, 84)   387156      conv4_3_norm[0][0]
__________________________________________________________________________________________________
fc7_mbox_conf (Conv2D)          (None, 19, 19, 126)  1161342     fc7[0][0]
__________________________________________________________________________________________________
conv6_2_mbox_conf (Conv2D)      (None, 10, 10, 126)  580734      conv6_2[0][0]
__________________________________________________________________________________________________
conv7_2_mbox_conf (Conv2D)      (None, 5, 5, 126)    290430      conv7_2[0][0]
__________________________________________________________________________________________________
conv8_2_mbox_conf (Conv2D)      (None, 3, 3, 84)     193620      conv8_2[0][0]
__________________________________________________________________________________________________
conv9_2_mbox_conf (Conv2D)      (None, 1, 1, 84)     193620      conv9_2[0][0]
__________________________________________________________________________________________________
conv4_3_norm_mbox_loc (Conv2D)  (None, 38, 38, 16)   73744       conv4_3_norm[0][0]
__________________________________________________________________________________________________
fc7_mbox_loc (Conv2D)           (None, 19, 19, 24)   221208      fc7[0][0]
__________________________________________________________________________________________________
conv6_2_mbox_loc (Conv2D)       (None, 10, 10, 24)   110616      conv6_2[0][0]
__________________________________________________________________________________________________
conv7_2_mbox_loc (Conv2D)       (None, 5, 5, 24)     55320       conv7_2[0][0]
__________________________________________________________________________________________________
conv8_2_mbox_loc (Conv2D)       (None, 3, 3, 16)     36880       conv8_2[0][0]
__________________________________________________________________________________________________
conv9_2_mbox_loc (Conv2D)       (None, 1, 1, 16)     36880       conv9_2[0][0]
__________________________________________________________________________________________________
conv4_3_norm_mbox_conf_reshape  (None, 5776, 21)     0           conv4_3_norm_mbox_conf[0][0]
__________________________________________________________________________________________________
fc7_mbox_conf_reshape (Reshape) (None, 2166, 21)     0           fc7_mbox_conf[0][0]
__________________________________________________________________________________________________
conv6_2_mbox_conf_reshape (Resh (None, 600, 21)      0           conv6_2_mbox_conf[0][0]
__________________________________________________________________________________________________
conv7_2_mbox_conf_reshape (Resh (None, 150, 21)      0           conv7_2_mbox_conf[0][0]
__________________________________________________________________________________________________
conv8_2_mbox_conf_reshape (Resh (None, 36, 21)       0           conv8_2_mbox_conf[0][0]
__________________________________________________________________________________________________
conv9_2_mbox_conf_reshape (Resh (None, 4, 21)        0           conv9_2_mbox_conf[0][0]
__________________________________________________________________________________________________
conv4_3_norm_mbox_priorbox (Anc (None, 38, 38, 4, 8) 0           conv4_3_norm_mbox_loc[0][0]
__________________________________________________________________________________________________
fc7_mbox_priorbox (AnchorBoxes) (None, 19, 19, 6, 8) 0           fc7_mbox_loc[0][0]
__________________________________________________________________________________________________
conv6_2_mbox_priorbox (AnchorBo (None, 10, 10, 6, 8) 0           conv6_2_mbox_loc[0][0]
__________________________________________________________________________________________________
conv7_2_mbox_priorbox (AnchorBo (None, 5, 5, 6, 8)   0           conv7_2_mbox_loc[0][0]
__________________________________________________________________________________________________
conv8_2_mbox_priorbox (AnchorBo (None, 3, 3, 4, 8)   0           conv8_2_mbox_loc[0][0]
__________________________________________________________________________________________________
conv9_2_mbox_priorbox (AnchorBo (None, 1, 1, 4, 8)   0           conv9_2_mbox_loc[0][0]
__________________________________________________________________________________________________
mbox_conf (Concatenate)         (None, 8732, 21)     0           conv4_3_norm_mbox_conf_reshape[0]
                                                                 fc7_mbox_conf_reshape[0][0]
                                                                 conv6_2_mbox_conf_reshape[0][0]
                                                                 conv7_2_mbox_conf_reshape[0][0]
                                                                 conv8_2_mbox_conf_reshape[0][0]
                                                                 conv9_2_mbox_conf_reshape[0][0]
__________________________________________________________________________________________________
conv4_3_norm_mbox_loc_reshape ( (None, 5776, 4)      0           conv4_3_norm_mbox_loc[0][0]
__________________________________________________________________________________________________
fc7_mbox_loc_reshape (Reshape)  (None, 2166, 4)      0           fc7_mbox_loc[0][0]
__________________________________________________________________________________________________
conv6_2_mbox_loc_reshape (Resha (None, 600, 4)       0           conv6_2_mbox_loc[0][0]
__________________________________________________________________________________________________
conv7_2_mbox_loc_reshape (Resha (None, 150, 4)       0           conv7_2_mbox_loc[0][0]
__________________________________________________________________________________________________
conv8_2_mbox_loc_reshape (Resha (None, 36, 4)        0           conv8_2_mbox_loc[0][0]
__________________________________________________________________________________________________
conv9_2_mbox_loc_reshape (Resha (None, 4, 4)         0           conv9_2_mbox_loc[0][0]
__________________________________________________________________________________________________
conv4_3_norm_mbox_priorbox_resh (None, 5776, 8)      0           conv4_3_norm_mbox_priorbox[0][0]
__________________________________________________________________________________________________
fc7_mbox_priorbox_reshape (Resh (None, 2166, 8)      0           fc7_mbox_priorbox[0][0]
__________________________________________________________________________________________________
conv6_2_mbox_priorbox_reshape ( (None, 600, 8)       0           conv6_2_mbox_priorbox[0][0]
__________________________________________________________________________________________________
conv7_2_mbox_priorbox_reshape ( (None, 150, 8)       0           conv7_2_mbox_priorbox[0][0]
__________________________________________________________________________________________________
conv8_2_mbox_priorbox_reshape ( (None, 36, 8)        0           conv8_2_mbox_priorbox[0][0]
__________________________________________________________________________________________________
conv9_2_mbox_priorbox_reshape ( (None, 4, 8)         0           conv9_2_mbox_priorbox[0][0]
__________________________________________________________________________________________________
mbox_conf_softmax (Activation)  (None, 8732, 21)     0           mbox_conf[0][0]
__________________________________________________________________________________________________
mbox_loc (Concatenate)          (None, 8732, 4)      0           conv4_3_norm_mbox_loc_reshape[0][
                                                                 fc7_mbox_loc_reshape[0][0]
                                                                 conv6_2_mbox_loc_reshape[0][0]
                                                                 conv7_2_mbox_loc_reshape[0][0]
                                                                 conv8_2_mbox_loc_reshape[0][0]
                                                                 conv9_2_mbox_loc_reshape[0][0]
__________________________________________________________________________________________________
mbox_priorbox (Concatenate)     (None, 8732, 8)      0           conv4_3_norm_mbox_priorbox_reshap
                                                                 fc7_mbox_priorbox_reshape[0][0]
                                                                 conv6_2_mbox_priorbox_reshape[0][
                                                                 conv7_2_mbox_priorbox_reshape[0][
                                                                 conv8_2_mbox_priorbox_reshape[0][
                                                                 conv9_2_mbox_priorbox_reshape[0][
__________________________________________________________________________________________________
predictions (Concatenate)       (None, 8732, 33)     0           mbox_conf_softmax[0][0]
                                                                 mbox_loc[0][0]
                                                                 mbox_priorbox[0][0]
==================================================================================================
Total params: 26,285,486
Trainable params: 26,285,486
Non-trainable params: 0
__________________________________________________________________________________________________
```
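The predictor-layer numbers in this summary can be checked by hand.

- **Channel counts:** each confidence head outputs `n_boxes * (n_classes + 1)` channels, and each localization head outputs `n_boxes * 4`.
- **Parameter counts:** a k×k `Conv2D` layer has `k*k*c_in*c_out + c_out` parameters.
- **Assumptions:** the sketch below assumes 3×3 kernels for the predictor layers, which agrees with the Param # column. It reads the boxes per cell and the input channel counts off the summary.
- **Width of `predictions`:** the last axis of `predictions` is 21 class scores + 4 box offsets + 8 anchor values (4 anchor coordinates and 4 variances) = 33.
- **Hypothetical names:** `heads`, `conv_params` and `head_stats` are hypothetical names used only here.

```python
n_classes = 20
# (boxes per cell, input channels) for each predictor's source layer, read off the summary above
heads = {'conv4_3_norm': (4, 512), 'fc7': (6, 1024), 'conv6_2': (6, 512),
         'conv7_2': (6, 256), 'conv8_2': (4, 256), 'conv9_2': (4, 256)}


def conv_params(k, c_in, c_out):
    """Weights plus biases of a k x k Conv2D layer."""
    return k * k * c_in * c_out + c_out


def head_stats(kernel_size=3):
    """(conf channels, conf params, loc channels, loc params) for each predictor layer."""
    stats = {}
    for name, (n_boxes, c_in) in heads.items():
        conf_ch = n_boxes * (n_classes + 1)  # one score per class, background included
        loc_ch = n_boxes * 4                 # four box offsets per anchor
        stats[name] = (conf_ch, conv_params(kernel_size, c_in, conf_ch),
                       loc_ch, conv_params(kernel_size, c_in, loc_ch))
    return stats


for name, s in head_stats().items():
    print(name, s)

# Last axis of `predictions`: class scores + box offsets + (4 anchor coords + 4 variances)
print((n_classes + 1) + 4 + 8)
```

For example, `conv4_3_norm_mbox_conf` has 3 * 3 * 512 * 84 + 84 = 387,156 parameters, exactly as in the summary. The 512 parameters of `conv4_3_norm` are one learned scale per channel of the L2 normalization.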

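That's all for today. I hope this helps with learning and understanding. This is only my own understanding and my ability is limited, so experts, please go easy on me. Some images come from the internet and will be removed on request in case of infringement.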