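Table of Contents

- 1.Two ways to use activation functions

- 1.1.softmax activation function

- 1.2.sigmoid activation function

- 1.3.tanh activation function

- 1.4.relu activation function

- 1.5.leaky_relu activation function

- 2.Building a network with each way of using activation functions

- 2.1.The nn.ReLU() approach

- 2.2.The torch.relu() approach

- 3.Visualizing different activation functions

- 3.1.sigmoid

- 3.2.tanh

- 3.3.relu

- 3.4.leaky_relu

- 3.5.Step function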

1.Two ways to use activation functions

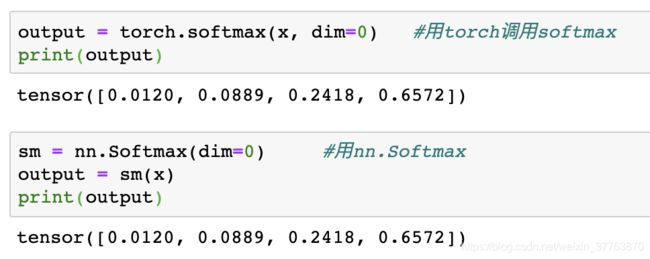

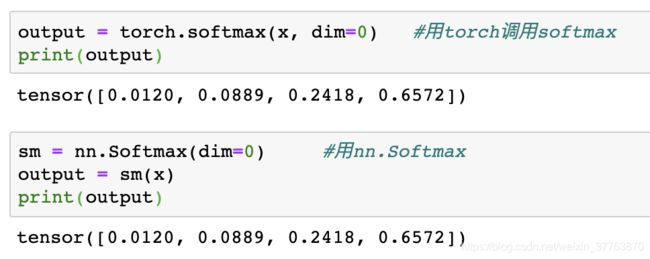

1.1.softmax activation function

import torch

import torch.nn as nn

import torch.nn.functional as F

x = torch.tensor([-1.0, 1.0, 2.0, 3.0])

output = torch.softmax(x, dim=0)

print(output)

sm = nn.Softmax(dim=0)

output = sm(x)

print(output)
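As a quick sanity check on the `dim` argument, here is a minimal sketch. The 2-D tensor `batch` and the other variable names are illustrative and not part of the original example. It shows that softmax outputs sum to 1 along the chosen dimension.

```python
import torch

x = torch.tensor([-1.0, 1.0, 2.0, 3.0])
probs = torch.softmax(x, dim=0)
total = probs.sum()  # equals 1 up to floating-point rounding

# Hypothetical 2-D input: each row holds the logits of one sample
batch = torch.tensor([[1.0, 2.0, 3.0],
                      [1.0, 1.0, 1.0]])
row_probs = torch.softmax(batch, dim=1)  # normalize within each row
row_sums = row_probs.sum(dim=1)

print(total)
print(row_sums)
```

With `dim=1` every row is normalized on its own, so a row of equal logits becomes a uniform distribution.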

1.2.sigmoid activation function

output = torch.sigmoid(x)

print(output)

s = nn.Sigmoid()

output = s(x)

print(output)

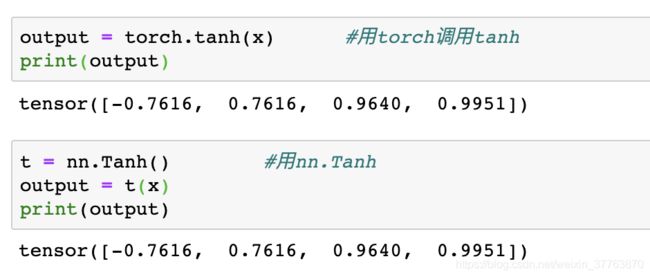

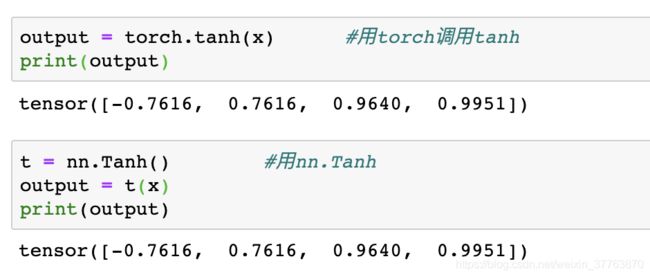
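Sigmoid maps each element x to 1 / (1 + e^(-x)). The sketch below (variable names are illustrative) compares a manual evaluation of that formula with `torch.sigmoid`.

```python
import torch

x = torch.tensor([-1.0, 0.0, 1.0, 2.0])

manual = 1 / (1 + torch.exp(-x))  # formula written out by hand
builtin = torch.sigmoid(x)        # library implementation

print(manual)
print(builtin)
```

Both tensors should agree up to rounding, and the entry for x = 0 sits at the midpoint 0.5.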

1.3.tanh activation function

output = torch.tanh(x)

print(output)

t = nn.Tanh()

output = t(x)

print(output)

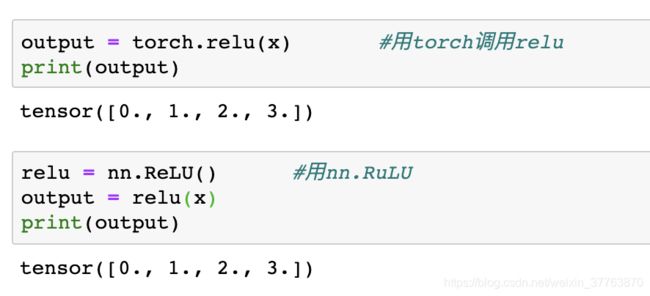

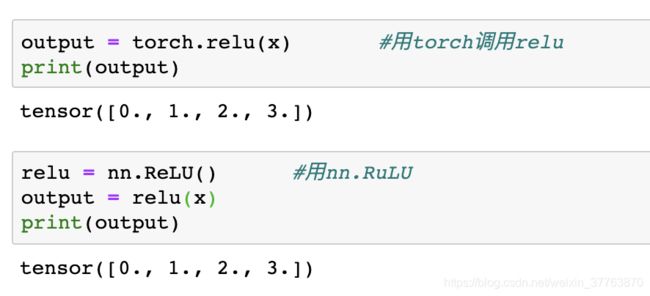
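tanh is a rescaled and shifted sigmoid: tanh(x) = 2 * sigmoid(2x) - 1. A minimal sketch of that identity, with illustrative variable names:

```python
import torch

x = torch.tensor([-1.0, 1.0, 2.0, 3.0])

via_sigmoid = 2 * torch.sigmoid(2 * x) - 1  # tanh expressed through sigmoid
builtin = torch.tanh(x)

print(via_sigmoid)
print(builtin)
```

The two results match up to floating-point rounding, which is why tanh outputs lie in (-1, 1) while sigmoid outputs lie in (0, 1).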

1.4.relu activation function

output = torch.relu(x)

print(output)

relu = nn.ReLU()

output = relu(x)

print(output)

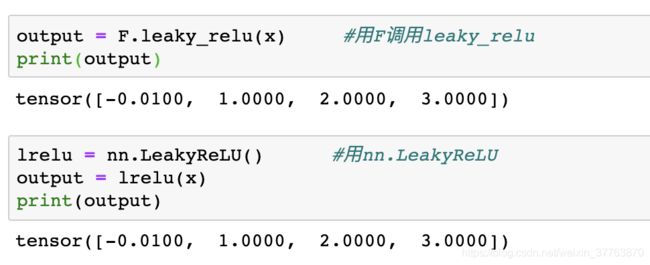

1.5.leaky_relu activation function

output = F.leaky_relu(x)

print(output)

lrelu = nn.LeakyReLU()

output = lrelu(x)

print(output)
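Both `F.leaky_relu` and `nn.LeakyReLU` take a `negative_slope` parameter, which defaults to 0.01. The sketch below shows how it changes the output for negative inputs; the value 0.1 is only an example.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

x = torch.tensor([-1.0, 1.0, 2.0, 3.0])

default_out = F.leaky_relu(x)                      # negative_slope defaults to 0.01
steeper_out = F.leaky_relu(x, negative_slope=0.1)  # example of a larger slope
module_out = nn.LeakyReLU(negative_slope=0.1)(x)   # module form, same parameter

print(default_out)
print(steeper_out)
print(module_out)
```

Positive inputs pass through unchanged in every case; only the negative entry is scaled by the slope.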

2.Building a network with each way of using activation functions

2.1.The nn.ReLU() approach

class NeuralNet(nn.Module):
    def __init__(self, input_size, hidden_size):
        super(NeuralNet, self).__init__()
        # Layers and activations are created once, as submodules
        self.linear1 = nn.Linear(input_size, hidden_size)
        self.relu = nn.ReLU()
        self.linear2 = nn.Linear(hidden_size, 1)
        self.sigmoid = nn.Sigmoid()

    def forward(self, x):
        out = self.linear1(x)
        out = self.relu(out)
        out = self.linear2(out)
        out = self.sigmoid(out)
        return out

2.2.The torch.relu() approach

class NeuralNet(nn.Module):
    def __init__(self, input_size, hidden_size):
        super(NeuralNet, self).__init__()
        # Only the layers with parameters are stored as submodules
        self.linear1 = nn.Linear(input_size, hidden_size)
        self.linear2 = nn.Linear(hidden_size, 1)

    def forward(self, x):
        # Activations are applied as plain function calls
        out = torch.relu(self.linear1(x))
        out = torch.sigmoid(self.linear2(out))
        return out
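The two approaches should behave identically, since the activation modules hold no parameters. The sketch below checks this by copying weights from one network to the other. The class names `ModuleStyleNet` and `FunctionalStyleNet`, the sizes, and the random input are assumptions made for illustration only.

```python
import torch
import torch.nn as nn

class ModuleStyleNet(nn.Module):
    """Activations stored as nn.Module attributes (section 2.1 style)."""
    def __init__(self, input_size, hidden_size):
        super().__init__()
        self.linear1 = nn.Linear(input_size, hidden_size)
        self.relu = nn.ReLU()
        self.linear2 = nn.Linear(hidden_size, 1)
        self.sigmoid = nn.Sigmoid()

    def forward(self, x):
        return self.sigmoid(self.linear2(self.relu(self.linear1(x))))

class FunctionalStyleNet(nn.Module):
    """Activations called as functions in forward (section 2.2 style)."""
    def __init__(self, input_size, hidden_size):
        super().__init__()
        self.linear1 = nn.Linear(input_size, hidden_size)
        self.linear2 = nn.Linear(hidden_size, 1)

    def forward(self, x):
        return torch.sigmoid(self.linear2(torch.relu(self.linear1(x))))

torch.manual_seed(0)
net_a = ModuleStyleNet(input_size=4, hidden_size=8)
net_b = FunctionalStyleNet(input_size=4, hidden_size=8)
# ReLU and Sigmoid have no parameters, so the state dict keys line up
net_b.load_state_dict(net_a.state_dict())

batch = torch.randn(5, 4)  # 5 hypothetical samples with 4 features each
out_a = net_a(batch)
out_b = net_b(batch)
print(out_a.shape, torch.allclose(out_a, out_b))
```

The module form is convenient when the network is assembled with `nn.Sequential` or inspected by printing it; the functional form keeps `__init__` shorter. Which one to use is largely a matter of taste.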

3.Visualizing different activation functions

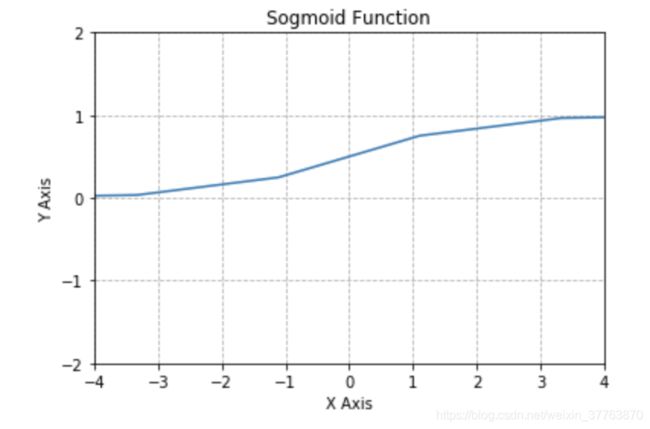

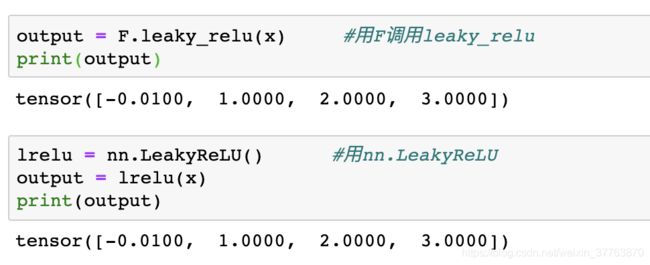

3.1.sigmoid

import numpy as np

import matplotlib.pyplot as plt

sigmoid = lambda x: 1 / (1 + np.exp(-x))

y = np.linspace(-10, 10, 1000)  # dense sampling so the curve is smooth

fig = plt.figure()

plt.plot(y, sigmoid(y))

plt.grid(linestyle='--')

plt.xlabel('X Axis')

plt.ylabel('Y Axis')

plt.title('Sigmoid Function')

plt.xticks([-4, -3, -2, -1, 0, 1, 2, 3, 4])

plt.yticks([-2, -1, 0, 1, 2])

plt.xlim(-4,4)

plt.ylim(-2,2)

plt.show()

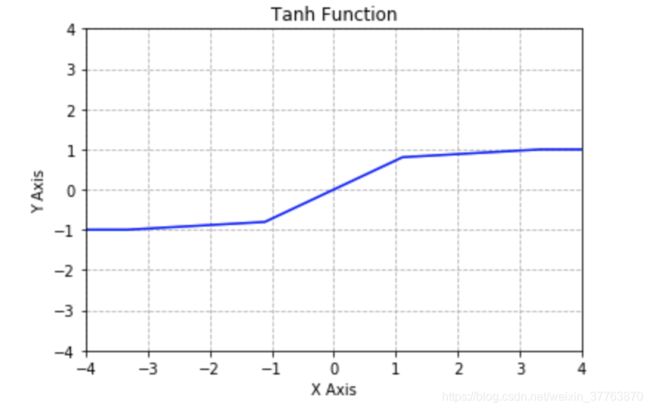

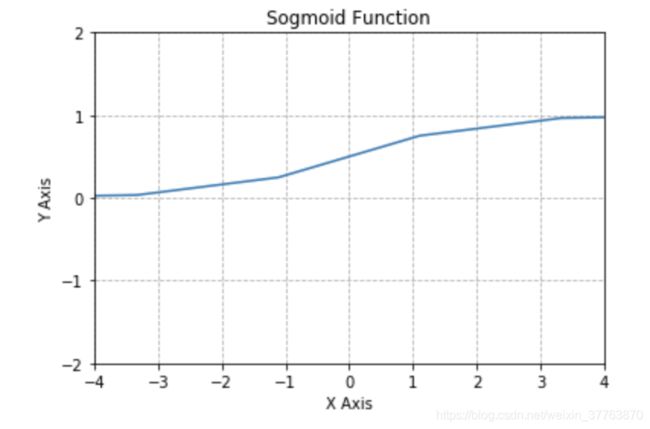

3.2.tanh

tanh = lambda x: 2*sigmoid(2*x)-1

y = np.linspace(-10, 10, 1000)  # dense sampling so the curve is smooth

plt.plot(y, tanh(y), 'b', label='linspace(-10, 10, 1000)')

plt.grid(linestyle='--')

plt.xlabel('X Axis')

plt.ylabel('Y Axis')

plt.title('Tanh Function')

plt.xticks([-4, -3, -2, -1, 0, 1, 2, 3, 4])

plt.yticks([-4, -3, -2, -1, 0, 1, 2, 3, 4])

plt.xlim(-4,4)

plt.ylim(-4,4)

plt.show()

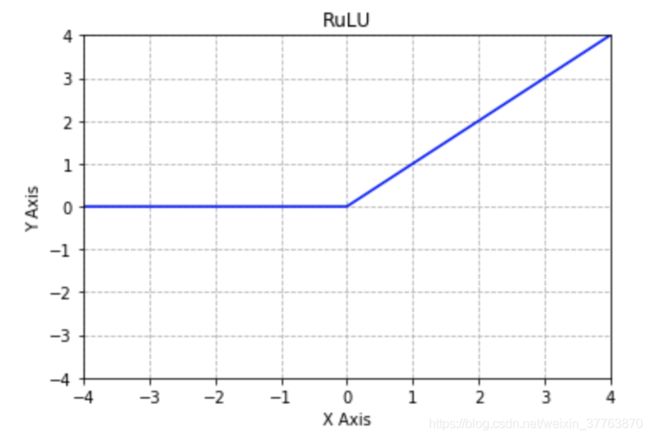

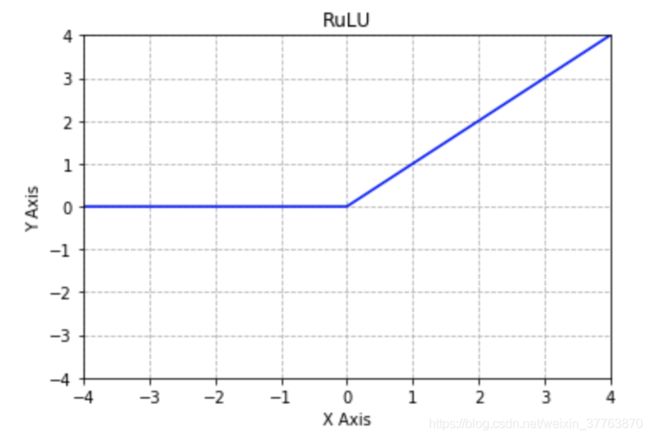

3.3.relu

relu = lambda x: np.where(x>=0, x, 0)

y = np.linspace(-10, 10, 1000)

plt.plot(y, relu(y), 'b', label='linspace(-10, 10, 1000)')

plt.grid(linestyle='--')

plt.xlabel('X Axis')

plt.ylabel('Y Axis')

plt.title('ReLU')

plt.xticks([-4, -3, -2, -1, 0, 1, 2, 3, 4])

plt.yticks([-4, -3, -2, -1, 0, 1, 2, 3, 4])

plt.xlim(-4,4)

plt.ylim(-4,4)

plt.show()

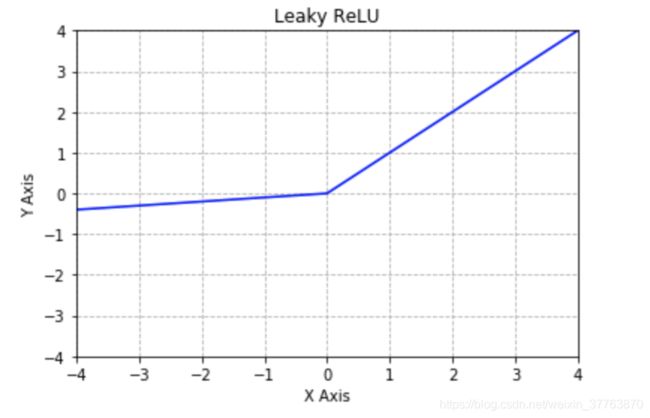

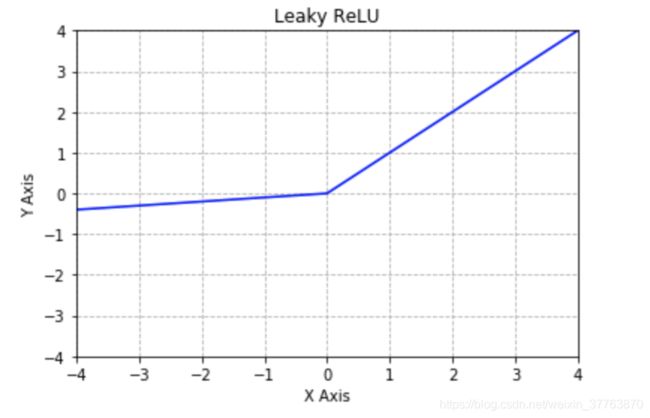

3.4.leaky_relu

leakyrelu = lambda x: np.where(x>=0, x, 0.1*x)

y = np.linspace(-10, 10, 1000)

plt.plot(y, leakyrelu(y), 'b', label='linspace(-10, 10, 1000)')

plt.grid(linestyle='--')

plt.xlabel('X Axis')

plt.ylabel('Y Axis')

plt.title('Leaky ReLU')

plt.xticks([-4, -3, -2, -1, 0, 1, 2, 3, 4])

plt.yticks([-4, -3, -2, -1, 0, 1, 2, 3, 4])

plt.xlim(-4, 4)

plt.ylim(-4, 4)

plt.show()

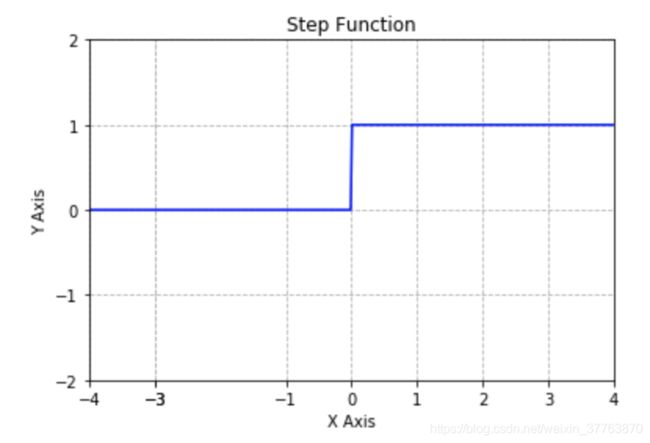

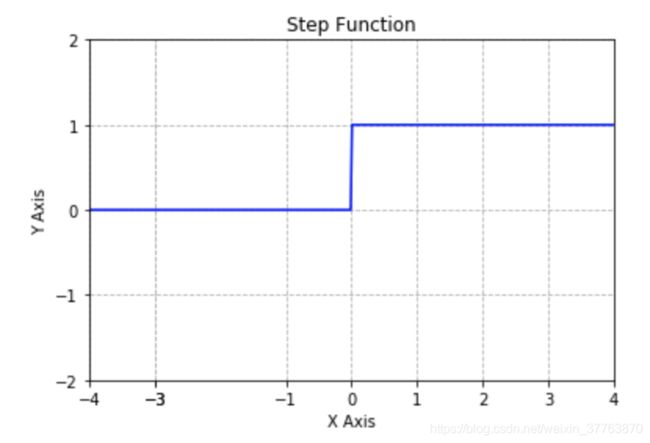

3.5.Step function

bstep = lambda x: np.where(x>=0, 1, 0)

y = np.linspace(-10, 10, 1000)

plt.plot(y, bstep(y), 'b', label='linspace(-10, 10, 1000)')

plt.grid(linestyle='--')

plt.xlabel('X Axis')

plt.ylabel('Y Axis')

plt.title('Step Function')

plt.xticks([-4, -3, -2, -1, 0, 1, 2, 3, 4])

plt.yticks([-2, -1, 0, 1, 2])

plt.xlim(-4, 4)

plt.ylim(-2, 2)

plt.show()
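To compare the curves directly, all five functions can be drawn on one set of axes. This is a minimal sketch, not part of the original walkthrough: the `activations` dict, the output file name `activations.png`, and the axis limits are assumptions, and `np.tanh` and `np.maximum` are swapped in for the hand-written tanh and relu lambdas.

```python
import numpy as np
import matplotlib.pyplot as plt

sigmoid = lambda x: 1 / (1 + np.exp(-x))

# NumPy versions of the five functions plotted in this section
activations = {
    "sigmoid": sigmoid,
    "tanh": np.tanh,                                       # same curve as 2*sigmoid(2x)-1
    "relu": lambda x: np.maximum(x, 0),
    "leaky_relu": lambda x: np.where(x >= 0, x, 0.1 * x),  # slope 0.1, as in 3.4
    "step": lambda x: np.where(x >= 0, 1, 0),
}

x = np.linspace(-4, 4, 1000)
fig, ax = plt.subplots()
for name, fn in activations.items():
    ax.plot(x, fn(x), label=name)

ax.grid(linestyle="--")
ax.set_xlabel("X Axis")
ax.set_ylabel("Y Axis")
ax.set_title("Activation Functions")
ax.set_ylim(-2, 4)
ax.legend()  # label= only shows up once legend() is called
fig.savefig("activations.png")  # use plt.show() instead in an interactive session
```

Calling `ax.legend()` is what makes each `label=` visible; a plot call with a label but no legend draws the curve without any caption.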