Configuring Snappy Compression for Hadoop 3.1.3 + HBase 2.1.7

1. Check whether Linux ships its own snappy library, and remove it if so

① Check for a bundled snappy library (and remove it if one is found)

ll /usr/lib64 | grep snappy

yum -y remove snappy
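The check above can be wrapped in a small helper so that only snappy libraries are listed before you decide to run `yum -y remove snappy`. This is a minimal sketch: the function name `find_snappy_libs` is hypothetical, and it assumes GNU `find` (standard on CentOS/RHEL) for `-printf`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the names of snappy libraries found directly in
# a directory (default /usr/lib64), one per line, sorted.
# Similar to `ll /usr/lib64 | grep snappy`, but only matches names starting
# with "libsnappy".
find_snappy_libs() {
  local dir="${1:-/usr/lib64}"
  # -mindepth/-maxdepth 1: entries of $dir only, no subdirectories
  # -printf '%f\n': print the base name (GNU find extension)
  find "$dir" -mindepth 1 -maxdepth 1 -name 'libsnappy*' -printf '%f\n' 2>/dev/null | sort
}
```

Called with no argument it inspects /usr/lib64; empty output means there is nothing to remove.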

2. Install the native snappy library

① Download snappy:

wget https://src.fedoraproject.org/repo/pkgs/snappy/snappy-1.1.4.tar.gz/sha512/873f655713611f4bdfc13ab2a6d09245681f427fbd4f6a7a880a49b8c526875dbdd623e203905450268f542be24a2dc9dae50e6acc1516af1d2ffff3f96553da/snappy-1.1.4.tar.gz
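Because the download URL embeds a SHA-512 digest after `/sha512/`, you can check the tarball before unpacking it. The sketch below assumes that path segment is the digest of snappy-1.1.4.tar.gz (the layout Fedora's lookaside cache uses); `SNAPPY_SHA512` and `verify_sha512` are hypothetical names.

```bash
#!/usr/bin/env bash
# Assumption: the path segment after /sha512/ in the download URL is the
# SHA-512 digest of snappy-1.1.4.tar.gz (Fedora lookaside-cache layout).
SNAPPY_SHA512=873f655713611f4bdfc13ab2a6d09245681f427fbd4f6a7a880a49b8c526875dbdd623e203905450268f542be24a2dc9dae50e6acc1516af1d2ffff3f96553da

# Hypothetical helper: exit 0 only if FILE's SHA-512 equals EXPECTED.
verify_sha512() {
  local file="$1" expected="$2" actual
  # sha512sum prints "<digest>  <file>"; keep only the digest.
  actual=$(sha512sum "$file" 2>/dev/null | awk '{print $1}')
  [ -n "$actual" ] && [ "$actual" = "$expected" ]
}
```

For example: `verify_sha512 snappy-1.1.4.tar.gz "$SNAPPY_SHA512" && echo "checksum OK"`.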

② Install

mkdir -p /usr/local/snappy

tar zxvf snappy-1.1.4.tar.gz -C /usr/local/snappy

cd /usr/local/snappy/snappy-1.1.4

./autogen.sh

./configure

make

make install

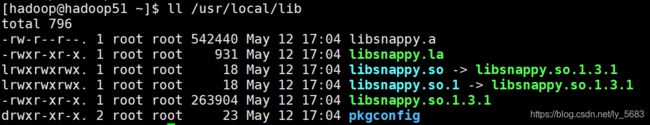

By default the library is installed to /usr/local/lib.

③ Add the snappy native library to /usr/lib64

cp -d /usr/local/lib/* /usr/lib64
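`cp -d /usr/local/lib/*` copies everything in /usr/local/lib, not only snappy. If other libraries live there, a narrower copy may be preferable. A sketch under that assumption, using the hypothetical helper `copy_snappy_libs` and keeping `cp -d` so the libsnappy.so symlink chain is preserved:

```bash
#!/usr/bin/env bash
# Hypothetical helper: copy only libsnappy* entries from SRC_DIR to DEST_DIR,
# keeping symlinks (libsnappy.so -> libsnappy.so.1 -> ...) as symlinks.
copy_snappy_libs() {
  local src="$1" dest="$2"
  if [ -z "$src" ] || [ ! -d "$dest" ]; then
    echo "usage: copy_snappy_libs SRC_DIR DEST_DIR" >&2
    return 1
  fi
  local matches=("$src"/libsnappy*)
  # Without nullglob an unmatched pattern stays literal, so test the first entry.
  if [ ! -e "${matches[0]}" ] && [ ! -L "${matches[0]}" ]; then
    echo "no libsnappy* found in $src" >&2
    return 1
  fi
  # -d = --no-dereference --preserve=links (GNU cp), as in the command above
  cp -d "${matches[@]}" "$dest"/
}
```

`copy_snappy_libs /usr/local/lib /usr/lib64` covers this step, and `copy_snappy_libs /usr/local/lib "$HADOOP_HOME/lib/native"` would cover the later copy into Hadoop's native directory.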

3. Install hadoop-snappy

① hadoop-snappy needs several build dependencies

sudo yum -y install gcc gcc-c++ autoconf automake libtool
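The build step also runs `git` and `mvn`, which the yum command above does not install (and Maven needs a JDK). A quick pre-flight check, sketched with the hypothetical helper `missing_tools`, lists whatever is not on `PATH`:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print each named command that is not found on PATH,
# one per line; return 1 if anything is missing, 0 otherwise.
missing_tools() {
  local tool missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      printf '%s\n' "$tool"
      missing=1
    fi
  done
  return "$missing"
}
```

`missing_tools gcc g++ autoconf automake libtool git mvn` prints nothing and returns 0 when every tool is available.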

② Build

git clone https://github.com/electrum/hadoop-snappy.git

cd hadoop-snappy/

mvn package
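The copy commands later in this guide assume `mvn package` produced a jar and a native-library directory under `target/`. A sketch that checks for both before copying; `hadoop_snappy_artifacts` is a hypothetical name, and the file names are the 0.0.1-SNAPSHOT / Linux-amd64-64 ones used in this guide, so adjust them if your build differs:

```bash
#!/usr/bin/env bash
# Hypothetical helper: given the hadoop-snappy checkout directory, print the
# built jar path (line 1) and the native-library directory (line 2).
# Names assume version 0.0.1-SNAPSHOT on Linux-amd64-64, as in this guide.
hadoop_snappy_artifacts() {
  local target="$1/target"
  local jar="$target/hadoop-snappy-0.0.1-SNAPSHOT.jar"
  local native="$target/hadoop-snappy-0.0.1-SNAPSHOT-tar/hadoop-snappy-0.0.1-SNAPSHOT/lib/native/Linux-amd64-64"
  if [ ! -f "$jar" ] || [ ! -d "$native" ]; then
    echo "build artifacts not found under $target (did 'mvn package' succeed?)" >&2
    return 1
  fi
  printf '%s\n%s\n' "$jar" "$native"
}
```

For example, `hadoop_snappy_artifacts /home/hadoop/snappy/hadoop-snappy` prints the two source paths used by the `cp` commands below.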

4. Configure snappy for Hadoop

① Add the snappy native library to $HADOOP_HOME/lib/native/

cp -d /usr/local/lib/* /usr/local/hadoop/hadoop-3.1.3/lib/native

② Copy hadoop-snappy-0.0.1-SNAPSHOT.jar to $HADOOP_HOME/lib and the hadoop-snappy native libraries to $HADOOP_HOME/lib/native/

cp /home/hadoop/snappy/hadoop-snappy/target/hadoop-snappy-0.0.1-SNAPSHOT.jar $HADOOP_HOME/lib

cp /home/hadoop/snappy/hadoop-snappy/target/hadoop-snappy-0.0.1-SNAPSHOT-tar/hadoop-snappy-0.0.1-SNAPSHOT/lib/native/Linux-amd64-64/* $HADOOP_HOME/lib/native/

③ Configure hadoop-env.sh, core-site.xml, and mapred-site.xml

vim hadoop-env.sh

export LD_LIBRARY_PATH=/usr/local/hadoop/hadoop-3.1.3/lib/native:/usr/local/lib/

vim core-site.xml

<property>
  <name>io.compression.codecs</name>
  <value>org.apache.hadoop.io.compress.GzipCodec,org.apache.hadoop.io.compress.DefaultCodec,org.apache.hadoop.io.compress.BZip2Codec,org.apache.hadoop.io.compress.SnappyCodec</value>
</property>

<property>
  <name>io.compression.codec.lzo.class</name>
  <value>org.apache.hadoop.io.compress.SnappyCodec</value>
</property>

vim mapred-site.xml

<property>
  <name>mapreduce.output.fileoutputformat.compress</name>
  <value>true</value>
</property>

<property>
  <name>mapreduce.map.output.compress</name>
  <value>true</value>
</property>

<property>
  <name>mapreduce.output.fileoutputformat.compress.codec</name>
  <value>org.apache.hadoop.io.compress.SnappyCodec</value>
</property>
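Before running a job, `hadoop checknative -a` reports whether each native library, snappy included, could be loaded. The sketch below filters its output; it assumes the `snappy:  true /path/to/libsnappy.so.1` line layout printed by Hadoop 3.x, and `snappy_native_loaded` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `hadoop checknative -a` output on stdin and
# succeed only if the snappy line reports "true".
# Assumption: lines look like "snappy:  true /usr/lib64/libsnappy.so.1".
snappy_native_loaded() {
  awk '$1 == "snappy:" { found = 1; ok = ($2 == "true") }
       END { exit !(found && ok) }'
}
```

For example: `hadoop checknative -a 2>/dev/null | snappy_native_loaded && echo "native snappy OK"`.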

④ Verify

hadoop jar /usr/local/hadoop/hadoop-3.1.3/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.3.jar wordcount /input /output
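With `mapreduce.output.fileoutputformat.compress` set to true and `SnappyCodec` as the output codec, the job's part files should carry the codec's default extension, `.snappy` (for example `part-r-00000.snappy`). A minimal check over an `hdfs dfs -ls` listing, using the hypothetical helper `has_snappy_output`:

```bash
#!/usr/bin/env bash
# Hypothetical helper: read an `hdfs dfs -ls <dir>` listing on stdin and
# succeed only if at least one part-* file name ends in ".snappy".
has_snappy_output() {
  grep -Eq '/part-[^/ ]*\.snappy$'
}
```

For example: `hdfs dfs -ls /output | has_snappy_output && echo "output is snappy-compressed"`.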

5. Configure snappy for HBase

① Copy hadoop-snappy-0.0.1-SNAPSHOT.jar and the snappy native libraries under $HBASE_HOME/lib

cp /home/hadoop/snappy/hadoop-snappy/target/hadoop-snappy-0.0.1-SNAPSHOT.jar $HBASE_HOME/lib

mkdir -p $HBASE_HOME/lib/native/Linux-amd64-64/

cp /home/hadoop/snappy/hadoop-snappy/target/hadoop-snappy-0.0.1-SNAPSHOT-tar/hadoop-snappy-0.0.1-SNAPSHOT/lib/native/Linux-amd64-64/* $HBASE_HOME/lib/native/Linux-amd64-64/

② Configure hbase-env.sh and hbase-site.xml

vim hbase-env.sh

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/hadoop/hadoop-3.1.3/lib/native/:/usr/local/lib

export HBASE_LIBRARY_PATH=$HBASE_LIBRARY_PATH:/usr/local/hbase/hbase-2.1.7/lib/native/Linux-amd64-64/:/usr/local/lib/

export CLASSPATH=$CLASSPATH:$HBASE_LIBRARY_PATH
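When `LD_LIBRARY_PATH` or `HBASE_LIBRARY_PATH` is unset, the `$VAR:...` pattern above leaves an empty first entry, which the dynamic loader typically interprets as the current directory, and sourcing the file twice duplicates entries. A sketch of a duplicate-aware append, using the hypothetical helper `path_append`:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print $1 with each further argument appended as a
# ':'-separated entry, skipping entries already present and avoiding a
# leading ':' when $1 is empty. Entries are compared as literal strings.
path_append() {
  local result="$1" dir
  shift
  for dir in "$@"; do
    case ":$result:" in
      *":$dir:"*) ;;                          # already present, skip
      *) result="${result:+$result:}$dir" ;;  # add ':' only if non-empty
    esac
  done
  printf '%s\n' "$result"
}
```

For example: `export LD_LIBRARY_PATH=$(path_append "$LD_LIBRARY_PATH" /usr/local/hadoop/hadoop-3.1.3/lib/native /usr/local/lib)`. Because the comparison is literal, `/usr/local/lib` and `/usr/local/lib/` count as different entries.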

vim hbase-site.xml

<property>
  <name>hbase.regionserver.codecs</name>
  <value>snappy</value>
</property>

③ Verify snappy

hbase org.apache.hadoop.hbase.util.CompressionTest file:///home/hadoop/ouput snappy

hbase shell

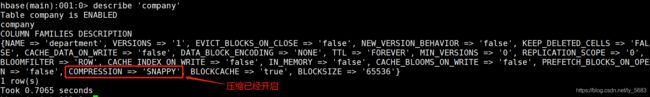

> create 'company', { NAME => 'department', COMPRESSION => 'snappy'}

> put 'company', '001', 'department:name', 'develop'

> put 'company', '001', 'department:address', 'sz'

> describe 'company'

The output shows COMPRESSION => 'SNAPPY' for the department column family, which confirms that snappy compression is enabled.
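To script the same check, the `describe` output can be piped through a filter. This sketch assumes HBase 2.x prints each column family's settings on a single `{NAME => '...', ..., COMPRESSION => '...'}` line, and `family_uses_snappy` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read HBase shell `describe` output on stdin and succeed
# only if FAMILY's descriptor line contains COMPRESSION => 'SNAPPY'.
# Assumption: each column family is printed on one line.
family_uses_snappy() {
  local family="$1"
  grep -F "NAME => '$family'" | grep -qF "COMPRESSION => 'SNAPPY'"
}
```

For example: `echo "describe 'company'" | hbase shell 2>/dev/null | family_uses_snappy department`.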

6. Errors encountered while installing snappy

Error

[exec] libtool: link: gcc -shared src/org/apache/hadoop/io/compress/snappy/.libs/SnappyCompressor.o src/org/apache/hadoop/io/compress/snappy/.libs/SnappyDecompressor.o -L/usr/local/lib -ljvm -ldl -m64 -Wl,-soname -Wl,libhadoopsnappy.so.0 -o .libs/libhadoopsnappy.so.0.0.1

[exec] /usr/bin/ld: cannot find -ljvm

[exec] collect2: ld returned 1 exit status

[exec] make: *** [libhadoopsnappy.la] Error 1

Or

[exec] /bin/sh ./libtool --tag=CC --mode=link gcc -g -Wall -fPIC -O2 -m64 -g -O2 -version-info 0:1:0 -L/usr/local/lib -o libhadoopsnappy.la -rpath /usr/local/lib src/org/apache/hadoop/io/compress/snappy/SnappyCompressor.lo src/org/apache/hadoop/io/compress/snappy/SnappyDecompressor.lo -ljvm -ldl

[exec] libtool: link: gcc -shared src/org/apache/hadoop/io/compress/snappy/.libs/SnappyCompressor.o src/org/apache/hadoop/io/compress/snappy/.libs/SnappyDecompressor.o -L/usr/local/lib -ljvm -ldl -m64 -Wl,-soname -Wl,libhadoopsnappy.so.0 -o .libs/libhadoopsnappy.so.0.0.1

[exec] /usr/bin/ld: cannot find -ljvm

[exec] collect2: ld returned 1 exit status

[exec] make: *** [libhadoopsnappy.la] Error 1

[ant] Exiting /home/hadoop/codes/hadoop-snappy/maven/build-compilenative.xml.

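The link step searches -L/usr/local/lib for libjvm, but the installed JVM's libjvm.so has not been symlinked into /usr/local/lib. On an amd64 system, look in /usr/local/tools/java-se-8u40-ri/jre/lib/amd64/server/ (adjust this to your own JDK location) to find libjvm.so, then create the link: ln -s /usr/local/tools/java-se-8u40-ri/jre/lib/amd64/server/libjvm.so /usr/local/lib/ . This resolves the error.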
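The JDK path above is specific to that machine. A sketch that locates libjvm.so under any JDK/JRE home instead; it assumes `JAVA_HOME` is set (or a home directory is passed) and that the library sits in a `server/` VM directory, as in jre/lib/amd64/server/ on JDK 8. `find_libjvm` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the first libjvm.so found in a server/ VM
# directory under a JDK/JRE home (default $JAVA_HOME); return 1 if none.
find_libjvm() {
  local home="${1:-$JAVA_HOME}" lib
  [ -n "$home" ] && [ -d "$home" ] || return 1
  # Matches e.g. jre/lib/amd64/server/libjvm.so (JDK 8) or lib/server/libjvm.so
  lib=$(find "$home" -path '*/server/libjvm.so' 2>/dev/null | head -n 1)
  [ -n "$lib" ] || return 1
  printf '%s\n' "$lib"
}
```

For example: `lib=$(find_libjvm) && ln -s "$lib" /usr/local/lib/`.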