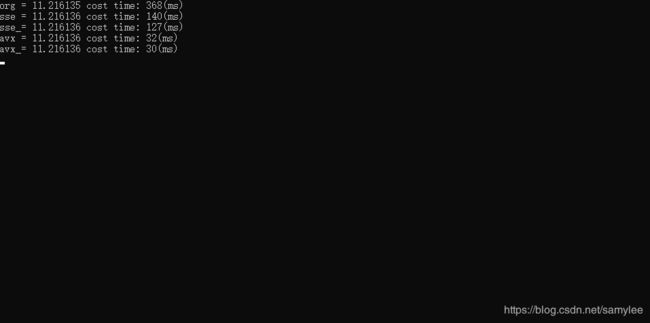

单线程、SSE、AVX运行效率对比——乘法累加运算

前言

_mm_fmadd_ps执行效率比_mm_mul_ps + _mm_add_ps快!

同样_mm256_fmadd_ps也是如此!

math_function.h

#pragma once

#include

#include

float MathMulAdd(const float *input1, const float *input2, int size);

float SSEMulAdd(const float *input1, const float *input2, int size);

float SSEFmAdd(const float *input1, const float *input2, int size);

float AVXMulAdd(const float *input1, const float *input2, int size);

float AVXFmAdd(const float *input1, const float *input2, int size); math_function.cpp

#include "math_function.h"

float MathMulAdd(const float *input1, const float *input2, int size)

{

float output = 0.0;

for (int i = 0; i < size; i++)

{

output += input1[i] * input2[i];

}

return output;

}

float SSEMulAdd(const float *input1, const float *input2, int size)

{

if (input1 == nullptr || input2 == nullptr)

{

printf("input data is null\n");

return -1;

}

int nBlockWidth = 4;

int cntBlock = size / nBlockWidth;

int cntRem = size % nBlockWidth;

float output = 0;

__m128 loadData1, loadData2;

__m128 mulData = _mm_setzero_ps();

__m128 sumData = _mm_setzero_ps();

const float *p1 = input1;

const float *p2 = input2;

for (int i = 0; i < cntBlock; i++)

{

loadData1 = _mm_load_ps(p1);

loadData2 = _mm_load_ps(p2);

mulData = _mm_mul_ps(loadData1, loadData2);

sumData = _mm_add_ps(sumData, mulData);

p1 += nBlockWidth;

p2 += nBlockWidth;

}

sumData = _mm_hadd_ps(sumData, sumData); // p[0] + p[1] + p[4] + p[5] + ...

sumData = _mm_hadd_ps(sumData, sumData); // p[2] + p[3] + p[6] + p[7] + ...

output += sumData.m128_f32[(0)]; // 前4组

for (int i = 0; i < cntRem; i++)

{

output += p1[i] * p2[i];

}

return output;

}

float SSEFmAdd(const float *input1, const float *input2, int size)

{

if (input1 == nullptr || input2 == nullptr)

{

printf("input data is null\n");

return -1;

}

int nBlockWidth = 4;

int cntBlock = size / nBlockWidth;

int cntRem = size % nBlockWidth;

float output = 0;

__m128 loadData1, loadData2;

//__m128 mulData = _mm_setzero_ps();

__m128 sumData = _mm_setzero_ps();

const float *p1 = input1;

const float *p2 = input2;

for (int i = 0; i < cntBlock; i++)

{

loadData1 = _mm_load_ps(p1);

loadData2 = _mm_load_ps(p2);

//mulData = _mm_mul_ps(loadData1, loadData2);

//sumData = _mm_add_ps(sumData, mulData);

sumData = _mm_fmadd_ps(loadData1, loadData2, sumData);

p1 += nBlockWidth;

p2 += nBlockWidth;

}

sumData = _mm_hadd_ps(sumData, sumData); // p[0] + p[1] + p[4] + p[5] + ...

sumData = _mm_hadd_ps(sumData, sumData); // p[2] + p[3] + p[6] + p[7] + ...

output += sumData.m128_f32[(0)]; // 前4组

for (int i = 0; i < cntRem; i++)

{

output += p1[i] * p2[i];

}

return output;

}

float AVXMulAdd(const float *input1, const float *input2, int size)

{

if (input1 == nullptr || input2 == nullptr)

{

printf("input data is null\n");

return -1;

}

int nBlockWidth = 8;

int cntBlock = size / nBlockWidth;

int cntRem = size % nBlockWidth;

float output = 0;

__m256 loadData1, loadData2;

__m256 mulData = _mm256_setzero_ps();

__m256 sumData = _mm256_setzero_ps();

const float *p1 = input1;

const float *p2 = input2;

for (int i = 0; i < cntBlock; i++)

{

loadData1 = _mm256_load_ps(p1);

loadData2 = _mm256_load_ps(p2);

mulData = _mm256_mul_ps(loadData1, loadData2);

sumData = _mm256_add_ps(sumData, mulData);

p1 += nBlockWidth;

p2 += nBlockWidth;

}

sumData = _mm256_hadd_ps(sumData, sumData); // p[0] + p[1] + p[4] + p[5] + p[8] + p[9] + p[12] + p[13] + ...

sumData = _mm256_hadd_ps(sumData, sumData); // p[2] + p[3] + p[6] + p[7] + p[10] + p[11] + p[14] + p[15] + ...

output += sumData.m256_f32[(0)]; // 前4组

output += sumData.m256_f32[(4)]; // 后4组

for (int i = 0; i < cntRem; i++)

{

output += p1[i] * p2[i];

}

return output;

}

float AVXFmAdd(const float *input1, const float *input2, int size)

{

if (input1 == nullptr || input2 == nullptr)

{

printf("input data is null\n");

return -1;

}

int nBlockWidth = 8;

int cntBlock = size / nBlockWidth;

int cntRem = size % nBlockWidth;

float output = 0;

__m256 loadData1, loadData2;

//__m256 mulData = _mm256_setzero_ps();

__m256 sumData = _mm256_setzero_ps();

const float *p1 = input1;

const float *p2 = input2;

for (int i = 0; i < cntBlock; i++)

{

loadData1 = _mm256_load_ps(p1);

loadData2 = _mm256_load_ps(p2);

//mulData = _mm256_mul_ps(loadData1, loadData2);

//sumData = _mm256_add_ps(sumData, mulData);

sumData = _mm256_fmadd_ps(loadData1, loadData2, sumData);

p1 += nBlockWidth;

p2 += nBlockWidth;

}

sumData = _mm256_hadd_ps(sumData, sumData); // p[0] + p[1] + p[4] + p[5] + p[8] + p[9] + p[12] + p[13] + ...

sumData = _mm256_hadd_ps(sumData, sumData); // p[2] + p[3] + p[6] + p[7] + p[10] + p[11] + p[14] + p[15] + ...

output += sumData.m256_f32[(0)]; // 前4组

output += sumData.m256_f32[(4)]; // 后4组

for (int i = 0; i < cntRem; i++)

{

output += p1[i] * p2[i];

}

return output;

}main.cpp

#include "math_function.h"

#include

#include

using std::default_random_engine;

using std::uniform_real_distribution;

int main(int argc, char* argv[])

{

int size = 33;

float *input1 = (float *)malloc(sizeof(float) * size);

float *input2 = (float *)malloc(sizeof(float) * size);

default_random_engine e;

uniform_real_distribution u(0, 1); //随机数分布对象

for (int i = 0; i < size; i++)

{

input1[i] = u(e);

input2[i] = u(e);

}

int cntLoop = 10000000;

clock_t start_t = clock();

float org = 0.0;

for (int i = 0; i < cntLoop; i++)

org = MathMulAdd(input1, input2, size);

printf("org = %f\t", org);

printf("cost time: %d(ms)\n", clock() - start_t);

start_t = clock();

float sse = 0.0;

for (int i = 0; i < cntLoop; i++)

sse = SSEMulAdd(input1, input2, size);

printf("sse = %f\t", sse);

printf("cost time: %d(ms)\n", clock() - start_t);

start_t = clock();

float sse_ = 0.0;

for (int i = 0; i < cntLoop; i++)

sse_ = SSEFmAdd(input1, input2, size);

printf("sse_= %f\t", sse_);

printf("cost time: %d(ms)\n", clock() - start_t);

start_t = clock();

float avx = 0.0;

for (int i = 0; i < cntLoop; i++)

avx = AVXMulAdd(input1, input2, size);

printf("avx = %f\t", avx);

printf("cost time: %d(ms)\n", clock() - start_t);

start_t = clock();

float avx_ = 0.0;

for (int i = 0; i < cntLoop; i++)

avx_ = AVXFmAdd(input1, input2, size);

printf("avx_= %f\t", avx_);

printf("cost time: %d(ms)\n", clock() - start_t);

getchar();

free(input1);

free(input2);

return 0;

} 运行结果

测试硬件:CPU-i7-9700K

预处理器:_WINDOWS

命令行:/arch:AVX

优化项:/O2

任何问题请加唯一QQ2258205918(名称samylee)!