VGGNet: making the network deeper still

Full code: complete VGGNet implementation

VGGNet

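VGGNet is a deep convolutional neural network developed by the Visual Geometry Group at the University of Oxford together with researchers at Google DeepMind. It took second place in the classification task and first place in the localization task at ILSVRC 2014, with a top-5 classification error of about 7.3%. Its main contribution is showing that network depth is a key factor in good performance. The authors also concluded that LRN (local response normalization) layers bring little benefit.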

Paper: Very Deep Convolutional Networks for Large-Scale Image Recognition

VGGNet architecture diagram

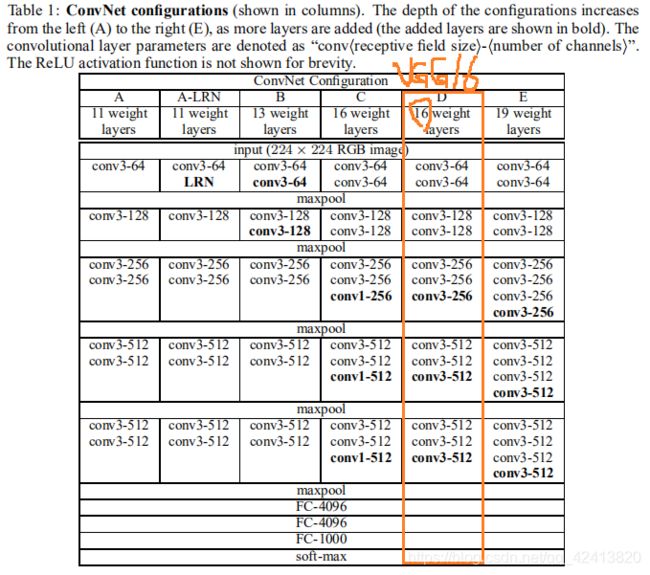

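The figure shows the VGGNet architecture. VGGNet comes in several configurations, with depths ranging from 11 to 19 weight layers; the most commonly used are VGG16 and VGG19.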
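To make the "11 to 19 layers" range concrete, here is a minimal sketch that counts the weight layers (convolutional plus fully connected) of four configurations. The channel lists follow configurations A, B, D and E as given in the paper; the compact list notation and the names `VGG_CONFIGS`, `NUM_FC_LAYERS` and `weight_layers` are assumptions made for this illustration, and the A-LRN and C variants are left out for brevity.

```python
# Channel widths per 3x3 conv layer; "M" marks a 2x2 max-pooling layer.
# Names and notation are illustrative, not taken from the original post.
VGG_CONFIGS = {
    "A": [64, "M", 128, "M", 256, 256, "M", 512, 512, "M", 512, 512, "M"],
    "B": [64, 64, "M", 128, 128, "M", 256, 256, "M",
          512, 512, "M", 512, 512, "M"],
    "D": [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
          512, 512, 512, "M", 512, 512, 512, "M"],
    "E": [64, 64, "M", 128, 128, "M", 256, 256, 256, 256, "M",
          512, 512, 512, 512, "M", 512, 512, 512, 512, "M"],
}

# Every configuration ends with the same three fully connected layers.
NUM_FC_LAYERS = 3


def weight_layers(config):
    """Count layers that carry weights: conv layers plus the FC layers."""
    conv_layers = sum(1 for item in config if item != "M")
    return conv_layers + NUM_FC_LAYERS


depths = {name: weight_layers(cfg) for name, cfg in VGG_CONFIGS.items()}
print(depths)  # {'A': 11, 'B': 13, 'D': 16, 'E': 19}
```

Pooling layers have no weights, which is why they do not count toward the depth in the names VGG16 and VGG19.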

VGGNet configuration table:

Next, we take VGG16 (configuration D in the figure above) as the example for walking through VGGNet.

| Operation | Input shape | Output shape | Kernel (h, w, filters) | Conv stride | Pool size | Pool stride | Padding | Activation |
|---|---|---|---|---|---|---|---|---|
| Input layer | - | (224,224,3) | - | - | - | - | - | - |
| Conv layer | (224,224,3) | (224,224,64) | (3,3,64) | 1 | - | - | same | relu |
| Conv layer | (224,224,64) | (224,224,64) | (3,3,64) | 1 | - | - | same | relu |
| Max pooling layer | (224,224,64) | (112,112,64) | - | - | (2,2) | 2 | valid | - |
| Conv layer | (112,112,64) | (112,112,128) | (3,3,128) | 1 | - | - | same | relu |
| Conv layer | (112,112,128) | (112,112,128) | (3,3,128) | 1 | - | - | same | relu |
| Max pooling layer | (112,112,128) | (56,56,128) | - | - | (2,2) | 2 | valid | - |
| Conv layer | (56,56,128) | (56,56,256) | (3,3,256) | 1 | - | - | same | relu |
| Conv layer | (56,56,256) | (56,56,256) | (3,3,256) | 1 | - | - | same | relu |
| Conv layer | (56,56,256) | (56,56,256) | (3,3,256) | 1 | - | - | same | relu |
| Max pooling layer | (56,56,256) | (28,28,256) | - | - | (2,2) | 2 | valid | - |
| Conv layer | (28,28,256) | (28,28,512) | (3,3,512) | 1 | - | - | same | relu |
| Conv layer | (28,28,512) | (28,28,512) | (3,3,512) | 1 | - | - | same | relu |
| Conv layer | (28,28,512) | (28,28,512) | (3,3,512) | 1 | - | - | same | relu |
| Max pooling layer | (28,28,512) | (14,14,512) | - | - | (2,2) | 2 | valid | - |
| Conv layer | (14,14,512) | (14,14,512) | (3,3,512) | 1 | - | - | same | relu |
| Conv layer | (14,14,512) | (14,14,512) | (3,3,512) | 1 | - | - | same | relu |
| Conv layer | (14,14,512) | (14,14,512) | (3,3,512) | 1 | - | - | same | relu |
| Max pooling layer | (14,14,512) | (7,7,512) | - | - | (2,2) | 2 | valid | - |
| Fully connected layer (after flattening) | (25088,) | (4096,) | - | - | - | - | - | relu |
| Fully connected layer | (4096,) | (4096,) | - | - | - | - | - | relu |
| Fully connected layer (output layer) | (4096,) | (1000,) | - | - | - | - | - | softmax |
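The shapes in the table can be reproduced with a few lines of arithmetic. The sketch below assumes what the table states: 3x3 convolutions with stride 1 and `same` padding (spatial size unchanged) and 2x2 max pooling with stride 2 (spatial size halved). The names `VGG16_CONFIG` and `trace_shapes` are made up for this example.

```python
# Configuration D: channel widths per conv layer, "M" = 2x2 max pooling.
VGG16_CONFIG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
                512, 512, 512, "M", 512, 512, 512, "M"]


def trace_shapes(config, input_shape=(224, 224, 3)):
    """Return the output shape (height, width, channels) after each layer."""
    height, width, channels = input_shape
    shapes = []
    for item in config:
        if item == "M":
            # 2x2 max pooling with stride 2 halves height and width.
            height, width = height // 2, width // 2
        else:
            # 3x3 conv, stride 1, 'same' padding: only the channels change.
            channels = item
        shapes.append((height, width, channels))
    return shapes


shapes = trace_shapes(VGG16_CONFIG)
final_h, final_w, final_c = shapes[-1]
flattened = final_h * final_w * final_c
print(shapes[-1], flattened)  # (7, 7, 512) 25088
```

The flattened size 7 × 7 × 512 = 25088 is exactly the input dimension of the first fully connected layer in the table.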

VGGNet characteristics

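- VGGNet uses only $(3,3)$ convolution kernels and $(2,2)$ pooling kernels, and improves performance by steadily increasing depth. The authors argue that two stacked $(3,3)$ convolutional layers have the same receptive field as one $(5,5)$ layer, and three stacked $(3,3)$ layers have the same receptive field as one $(7,7)$ layer. The three $(3,3)$ layers, however, need only about half the parameters of the $(7,7)$ layer ($27C^2$ versus $49C^2$ weights for $C$ input and output channels, about 55%). They also apply three nonlinearities where the single $(7,7)$ layer applies one, which gives the stack more capacity to learn features.

- A notable characteristic of VGGNet: the spatial resolution of the feature maps shrinks from block to block, while the number of channels grows (64, 128, 256, 512) and never decreases.

- Because the parameters are concentrated mainly in the last three fully connected (FC) layers, deepening the convolutional part does not cause the parameter count to explode.

- Because VGG has many layers and its three final fully connected layers hold so many parameters, the model is large and memory-hungry: VGG16 has roughly 138M parameters, usually rounded to 140M.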
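The numbers behind these points are easy to check by hand. The following sketch, written for this article rather than taken from the paper, computes the receptive field of stacked stride-1 convolutions, compares the weight count of three 3x3 layers against one 7x7 layer, and tallies the VGG16 parameters. The helper names are hypothetical, and the comparison assumes the same channel count `C` on input and output and ignores biases.

```python
def conv_params(kernel, in_ch, out_ch, bias=True):
    """Parameters of one square conv layer (weights plus optional biases)."""
    return kernel * kernel * in_ch * out_ch + (out_ch if bias else 0)


def stacked_receptive_field(kernel, num_layers):
    """Receptive field of num_layers stacked stride-1 convs of equal size."""
    return 1 + num_layers * (kernel - 1)


# Three 3x3 layers versus one 7x7 layer, C channels in and out, no bias.
C = 512
three_3x3 = 3 * conv_params(3, C, C, bias=False)
one_7x7 = conv_params(7, C, C, bias=False)
ratio = three_3x3 / one_7x7  # 27 / 49, independent of C

# Parameter tally for VGG16 (configuration D) on 224x224x3 input.
VGG16_CONFIG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
                512, 512, 512, "M", 512, 512, 512, "M"]

conv_total = 0
in_ch = 3
for item in VGG16_CONFIG:
    if item != "M":  # pooling layers have no parameters
        conv_total += conv_params(3, in_ch, item)
        in_ch = item

# Dense layers: (inputs * outputs) weights plus one bias per output.
fc_sizes = [(7 * 7 * 512, 4096), (4096, 4096), (4096, 1000)]
fc_total = sum(n_in * n_out + n_out for n_in, n_out in fc_sizes)
total = conv_total + fc_total

print(stacked_receptive_field(3, 2), stacked_receptive_field(3, 3))  # 5 7
print(round(ratio, 3))  # 0.551
print(conv_total, fc_total, total)  # 14714688 123642856 138357544
```

Under these assumptions the fully connected layers account for about 89% of all parameters, which is why adding convolutional layers changes the total so little. Stored as 32-bit floats, 138M parameters take a bit over 0.5 GB.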

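Another minor detail: during training, the authors first trained the simple configuration A, then reused its weights to initialize the more complex models that followed, which speeds up convergence.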

Keras implementation of VGG16

    from keras import layers
    from keras import models


    def VGG16(input_shape=(224, 224, 3), classes=1000):
        """Build VGG16 (configuration D) with the Keras functional API.

        The defaults match the table above (224x224 RGB input, 1000 classes);
        the original defaults of None would fail when the layers are built.
        """
        img_input = layers.Input(shape=input_shape)

        # Block 1: two 3x3 convs with 64 filters, then 2x2 max pooling
        x = layers.Conv2D(64, (3, 3), activation='relu', padding='same', name='block1_conv1')(img_input)
        x = layers.Conv2D(64, (3, 3), activation='relu', padding='same', name='block1_conv2')(x)
        x = layers.MaxPooling2D((2, 2), strides=(2, 2), name='block1_pool')(x)

        # Block 2: two 3x3 convs with 128 filters
        x = layers.Conv2D(128, (3, 3), activation='relu', padding='same', name='block2_conv1')(x)
        x = layers.Conv2D(128, (3, 3), activation='relu', padding='same', name='block2_conv2')(x)
        x = layers.MaxPooling2D((2, 2), strides=(2, 2), name='block2_pool')(x)

        # Block 3: three 3x3 convs with 256 filters
        x = layers.Conv2D(256, (3, 3), activation='relu', padding='same', name='block3_conv1')(x)
        x = layers.Conv2D(256, (3, 3), activation='relu', padding='same', name='block3_conv2')(x)
        x = layers.Conv2D(256, (3, 3), activation='relu', padding='same', name='block3_conv3')(x)
        x = layers.MaxPooling2D((2, 2), strides=(2, 2), name='block3_pool')(x)

        # Block 4: three 3x3 convs with 512 filters
        x = layers.Conv2D(512, (3, 3), activation='relu', padding='same', name='block4_conv1')(x)
        x = layers.Conv2D(512, (3, 3), activation='relu', padding='same', name='block4_conv2')(x)
        x = layers.Conv2D(512, (3, 3), activation='relu', padding='same', name='block4_conv3')(x)
        x = layers.MaxPooling2D((2, 2), strides=(2, 2), name='block4_pool')(x)

        # Block 5: three 3x3 convs with 512 filters
        x = layers.Conv2D(512, (3, 3), activation='relu', padding='same', name='block5_conv1')(x)
        x = layers.Conv2D(512, (3, 3), activation='relu', padding='same', name='block5_conv2')(x)
        x = layers.Conv2D(512, (3, 3), activation='relu', padding='same', name='block5_conv3')(x)
        x = layers.MaxPooling2D((2, 2), strides=(2, 2), name='block5_pool')(x)

        # Classifier: flatten, two 4096-unit FC layers, softmax output
        x = layers.Flatten(name='flatten')(x)
        x = layers.Dense(4096, activation='relu', name='fc1')(x)
        x = layers.Dense(4096, activation='relu', name='fc2')(x)
        x = layers.Dense(classes, activation='softmax', name='predictions')(x)

        # Create the model.
        model = models.Model(img_input, x, name='vgg16')
        return model