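Hadoop Delegation Tokens Explained

Reposted from: https://blog.cloudera.com/hadoop-delegation-tokens-explained/

A great article, reposted here to share with readers in China. If there is any problem with this, please contact me and it will be removed.

The first part is a Google-translated version; the English original follows below.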

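Apache Hadoop’s security was designed and implemented around 2009, and has been stabilizing since then. However, due to a lack of documentation around this area, it is hard to understand or debug when problems arise. Delegation tokens were designed as an authentication method and are widely used in the Hadoop ecosystem. This blog post introduces the concept of Hadoop Delegation Tokens in the context of the Hadoop Distributed File System (HDFS) and the Hadoop Key Management Server (KMS), and provides some basic code and troubleshooting examples. Note that many other services in the Hadoop ecosystem also use delegation tokens, but for brevity we only discuss HDFS and KMS.

This blog assumes the reader understands the basic concepts of authentication and Kerberos (to follow the authentication flow), as well as HDFS Architecture and HDFS Transparent Encryption (to understand what HDFS and KMS are). Readers who are not interested in HDFS Transparent Encryption can ignore the KMS portions of this post. A previous blog post about general authorization and authentication in Hadoop can be found here.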

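Quick Introduction to Hadoop Security

Hadoop was initially implemented without real authentication, which meant that data stored in Hadoop could easily be compromised. Security was added later, in 2010, via HADOOP-4487, with the following two fundamental goals:

- Preventing unauthorized access to the data stored in HDFS

- Not adding significant cost while achieving goal #1.

To achieve the first goal, we need to ensure that:

- Any client accessing the cluster is authenticated, to ensure it is who it claims to be.

- All servers in the cluster are authenticated as legitimate members of the cluster.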

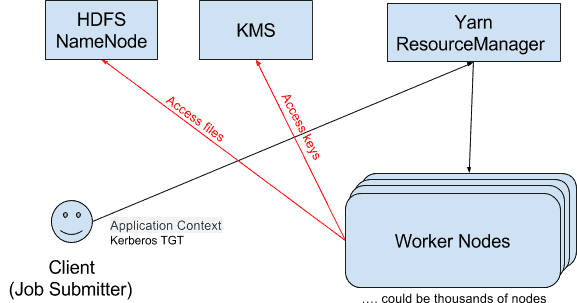

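For this goal, Kerberos was chosen as the underlying authentication service. Other mechanisms, such as Delegation Tokens, Block Access Tokens and Trust, were added to complement Kerberos. In particular, Delegation Tokens were introduced to achieve the second goal (see the next section for how). Below is a simplified diagram that illustrates where Kerberos and Delegation Tokens are used in the context of HDFS (other services are similar):

In the simple HDFS example above, several authentication mechanisms are in play:

- The end user (joe) can talk to the HDFS NameNode using Kerberos.

- The distributed tasks the end user (joe) submits can access the HDFS NameNode using joe’s Delegation Tokens. This is the focus of the rest of this blog post.

- The HDFS DataNodes talk to the HDFS NameNode using Kerberos.

- The end user and the distributed tasks can access HDFS DataNodes using Block Access Tokens.

We give a brief introduction to some of the above mechanisms in the “Other Ways Tokens Are Used” section at the end of this post. For more details on Hadoop’s security design, see the design document in HADOOP-4487 and the Hadoop Security Architecture presentation.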

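What is a Delegation Token?

While it is theoretically possible to use Kerberos alone for authentication, doing so has its own problems in a distributed system like Hadoop. Imagine that, for each MapReduce job, all the worker tasks had to authenticate via Kerberos using a delegated TGT (Ticket Granting Ticket): the Kerberos Key Distribution Center (KDC) would quickly become the bottleneck. The red lines in the figure below illustrate the issue: a job may involve thousands of node-to-node communications, resulting in the same magnitude of KDC traffic. In very large clusters, this would effectively be an unintentional distributed denial-of-service attack on the KDC.

Therefore, Delegation Tokens were introduced as a lightweight authentication method to complement Kerberos authentication. Kerberos is a three-party protocol; in contrast, Delegation Token authentication is a two-party authentication protocol.

Delegation Tokens work as follows:

- The client initially authenticates with each server via Kerberos, and obtains a Delegation Token from that server.

- The client uses the Delegation Tokens, instead of Kerberos, for subsequent authentications with the servers.

The client can, and very often does, pass Delegation Tokens to other services (such as YARN) so that those services (such as the mappers and reducers) can authenticate as the client and run jobs on its behalf. In other words, the client can “delegate” its credentials to those services. Delegation Tokens have an expiration time and must be renewed periodically to remain valid. However, they cannot be renewed indefinitely: there is a max lifetime. A Delegation Token can also be cancelled before it expires.

Delegation Tokens eliminate the need to distribute a Kerberos TGT or keytab, which, if compromised, would grant access to all services. A Delegation Token, on the other hand, is strictly tied to its associated service and eventually expires, so it causes less damage if exposed. Moreover, Delegation Tokens make credential renewal more lightweight, because renewal is designed so that only the renewer and the service are involved. The token itself stays the same, so all parties already using it do not have to be updated.

For availability, the servers persist the Delegation Tokens. The HDFS NameNode persists them in its metadata (aka fsimage and edit logs). KMS persists them in Apache ZooKeeper, in the form of ZNodes. This keeps the Delegation Tokens usable even if a server restarts or fails over.

The server and the client have different responsibilities for handling Delegation Tokens. The two sections below provide some details.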

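Delegation Tokens at the Server-side

The server (that is, the HDFS NN and KMS in Figure 2) is responsible for:

- Issuing Delegation Tokens, and storing them for verification.

- Renewing Delegation Tokens upon request.

- Removing Delegation Tokens either when the client cancels them or when they expire.

- Authenticating clients by verifying the provided Delegation Tokens against the stored Delegation Tokens.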

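Delegation Tokens in Hadoop are generated and verified following the HMAC mechanism. A Delegation Token carries two parts of information: a public part and a private part. The server stores Delegation Tokens in a hashmap, with the public information as the key and the private information as the value.

The public information is used to identify the token, in the form of an identifier object. It consists of: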

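| Field | Description |
|---|---|
| Kind | The kind of token (HDFS_DELEGATION_TOKEN, or kms-dt). The token also contains the kind, matching the identifier’s kind. |
| Owner | The user who owns the token. |
| Renewer | The user who can renew the token. |
| Real User | Only relevant if the owner is impersonated. If the token was created by an impersonating user, this identifies the impersonating user. For example, when oozie impersonates user joe, Owner will be joe and Real User will be oozie. |
| Issue Date | Epoch time when the token was issued. |
| Max Date | Epoch time until which the token can be renewed. |
| Sequence Number | Integer that uniquely identifies the token among those issued by the service (e.g. sequenceNumber=4 in the log excerpt below). |
| Master Key ID | ID of the master key used to create and verify the token. |

Table 1: Token Identifier (public part of a Delegation Token)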

The private information is represented by the class DelegationTokenInformation in AbstractDelegationTokenSecretManager. It is critical for security and contains the following fields:

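| Field | Description |
|---|---|
| renewDate | Epoch time when the token is expected to be renewed. If it is earlier than the current time, the token has expired. |
| password | The password, calculated as the HMAC of the Token Identifier using the master key as the HMAC key. It is used to validate the Delegation Token that the client presents to the server. |
| trackingId | A tracking identifier that can be used to associate usages of a token across multiple client sessions. It is computed as the MD5 of the token identifier. |

Table 2: Delegation Token Information (private part of a Delegation Token)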

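Note the master key ID in Table 1: it is the ID of the master key that lives on the server. The master key is used to generate every Delegation Token. It is rolled at a configured interval and never leaves the server.

The server also has a configurable renew interval (24 hours by default), which is also the expiration time of a Delegation Token: a token stays valid for one renew interval after it is issued or renewed (but never past its max date). Expired Delegation Tokens cannot be used to authenticate, and the server has a background thread that removes expired Delegation Tokens from its token store.

Only the renewer of a Delegation Token can renew it, and only before it expires. A successful renewal extends the Delegation Token’s expiration time by another renew interval, until the token reaches its max lifetime (7 days by default).

The table in Appendix A gives the configuration names and their default values for HDFS and KMS.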

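Delegation Tokens at the Client-side

The client is responsible for:

- Requesting new Delegation Tokens from the server. A renewer can be specified when requesting a token.

- Renewing Delegation Tokens (if the client specified itself as the “renewer”), or asking another party (the specified “renewer”) to renew them.

- Requesting the server to cancel Delegation Tokens.

- Presenting a Delegation Token to authenticate with the server.

The client-side-visible Token class is defined here. The following table describes what the Token contains.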

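| Field | Description |
|---|---|
| identifier | The token identifier, matching the public information part on the server side. |
| password | The password, matching the password on the server side. |
| kind | The kind of token (e.g. HDFS_DELEGATION_TOKEN, or kms-dt), matching the identifier’s kind. |
| service | The name of the service (e.g. ha-hdfs:… for HDFS). |
| renewer | The user who can renew the token (e.g. yarn). |

Table 3: Delegation Token at the Client Side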

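How authentication with Delegation Tokens works is explained in the next section.

Below is an example log excerpt from job submission time. The INFO logs print the tokens from all services. In the example below, we see an HDFS Delegation Token and a KMS Delegation Token.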

```text
$ hadoop jar /opt/cloudera/parcels/CDH/jars/hadoop-mapreduce-examples-2.6.0-cdh5.13.0.jar pi 2 3
Number of Maps = 2
Samples per Map = 3
Wrote input for Map #0
Wrote input for Map #1
Starting Job
17/10/22 20:50:03 INFO client.RMProxy: Connecting to ResourceManager at example.cloudera.com/172.31.113.88:8032
17/10/22 20:50:03 INFO hdfs.DFSClient: Created token for xiao: HDFS_DELEGATION_TOKEN owner=…, renewer=yarn, realUser=, issueDate=1508730603423, maxDate=1509335403423, sequenceNumber=4, masterKeyId=39 on ha-hdfs:ns1
17/10/22 20:50:03 INFO security.TokenCache: Got dt for hdfs://ns1; Kind: HDFS_DELEGATION_TOKEN, Service: ha-hdfs:ns1, Ident: (token for xiao: HDFS_DELEGATION_TOKEN owner=…, renewer=yarn, realUser=, issueDate=1508730603423, maxDate=1509335403423, sequenceNumber=4, masterKeyId=39)
17/10/22 20:50:03 INFO security.TokenCache: Got dt for hdfs://ns1; Kind: kms-dt, Service: 172.31.113.88:16000, Ident: (kms-dt owner=xiao, renewer=yarn, realUser=, issueDate=1508730603474, maxDate=1509335403474, sequenceNumber=7, masterKeyId=69)
…
```

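For readers who want to write Java code to authenticate, sample code is provided in Appendix B.

Example: The Lifecycle of Delegation Tokens

Now that we understand what a Delegation Token is, let’s look at how it is used in practice. The figure below shows an example authentication flow for running a typical application, where the job is submitted through YARN and then distributed to multiple worker nodes in the cluster for execution.

For brevity, the Kerberos authentication steps and the details of task distribution are omitted. In general, there are five steps in the figure:

- The client wants to run a job in the cluster. It gets an HDFS Delegation Token from the HDFS NameNode, and a KMS Delegation Token from the KMS.

- The client submits the job to the YARN Resource Manager (RM), passing the Delegation Tokens it just acquired, as well as the ApplicationSubmissionContext.

- The YARN RM verifies that the Delegation Tokens are valid by immediately renewing them. It then launches the job, which is distributed (together with the Delegation Tokens) to the worker nodes in the cluster.

- Each worker node authenticates with HDFS using the HDFS Delegation Token when accessing HDFS data, and authenticates with KMS using the KMS Delegation Token when decrypting HDFS files in encryption zones.

- After the job finishes, the RM cancels the Delegation Tokens for the job.

Note: one step not drawn in the figure above is that the RM also tracks each Delegation Token’s expiration time, and renews the token when about 90% of the time until its expiration has elapsed. The RM tracks Delegation Tokens individually, not per token kind, so tokens with different renew intervals are all renewed correctly. The token renewer classes are implemented using Java ServiceLoader, so the RM does not need to be aware of the token kinds. For very curious readers, the relevant code is in the RM’s DelegationTokenRenewer class.

What Is This InvalidToken Error?

Now we know what Delegation Tokens are and how they are used when running a typical job. But don’t stop here! Let’s look at some typical Delegation Token related error messages from application logs, and figure out what they mean.

Token is expired

Sometimes, an application fails with an AuthenticationException that wraps an InvalidToken exception. The exception message says “token is expired”. Can you guess why this could happen?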

```text
…
17/10/22 20:50:12 INFO mapreduce.Job: Job job_1508730362330_0002 failed with state FAILED due to: Application application_1508730362330_0002 failed 2 times due to AM Container for appattempt_1508730362330_0002_000002 exited with exitCode: -1000
```

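Token can’t be found in cache

Sometimes, an application fails with an AuthenticationException that wraps an InvalidToken exception, and the exception message says “token can’t be found in cache”. Can you guess why this could happen, and how it differs from the “token is expired” error?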

```text
…
17/10/22 20:55:47 INFO mapreduce.Job: Job job_1508730362330_0003 failed with state FAILED due to: Application application_1508730362330_0003 failed 2 times due to AM Container for appattempt_1508730362330_0003_000002 exited with exitCode: -1000
For more detailed output, check application tracking page:https://example.cloudera.com:8090/proxy/application_1508730362330_0003/Then, click on links to logs of each attempt.
Diagnostics: org.apache.hadoop.security.authentication.client.AuthenticationException: org.apache.hadoop.security.token.SecretManager$InvalidToken: token (kms-dt owner=xiao, renewer=yarn, realUser=, issueDate=1508730937041, maxDate=1509335737041, sequenceNumber=8, masterKeyId=73) can’t be found in cache
java.io.IOException: org.apache.hadoop.security.authentication.client.AuthenticationException: org.apache.hadoop.security.token.SecretManager$InvalidToken: token (kms-dt owner=xiao, renewer=yarn, realUser=, issueDate=1508730937041, maxDate=1509335737041, sequenceNumber=8, masterKeyId=73) can’t be found in cache
    at org.apache.hadoop.crypto.key.kms.LoadBalancingKMSClientProvider.decryptEncryptedKey(LoadBalancingKMSClientProvider.java:294)
    at org.apache.hadoop.crypto.key.KeyProviderCryptoExtension.decryptEncryptedKey(KeyProviderCryptoExtension.java:528)
    at org.apache.hadoop.hdfs.DFSClient.decryptEncryptedDataEncryptionKey(DFSClient.java:1448)
    …
    at org.apache.hadoop.fs.FileSystem.open(FileSystem.java:784)
    at org.apache.hadoop.fs.FileUtil.copy(FileUtil.java:367)
    at org.apache.hadoop.yarn.util.FSDownload.copy(FSDownload.java:265)
    at org.apache.hadoop.yarn.util.FSDownload.access$000(FSDownload.java:61)
    at org.apache.hadoop.yarn.util.FSDownload$2.run(FSDownload.java:359)
    at org.apache.hadoop.yarn.util.FSDownload$2.run(FSDownload.java:357)
    …
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
    at java.lang.Thread.run(Thread.java:748)
Caused by: org.apache.hadoop.security.authentication.client.AuthenticationException: org.apache.hadoop.security.token.SecretManager$InvalidToken: token (kms-dt owner=xiao, renewer=yarn, realUser=, issueDate=1508730937041, maxDate=1509335737041, sequenceNumber=8, masterKeyId=73) can’t be found in cache
    …
    at org.apache.hadoop.crypto.key.kms.KMSClientProvider.call(KMSClientProvider.java:535)
    …
```

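Explanation:

The two errors above have the same cause: the token used to authenticate has expired and can no longer be used for authentication.

The first message can say explicitly that the token has expired because the token is still stored on the server. When the server verifies the token, the expiration-time check fails and the server throws the “token is expired” exception.

Now, can you guess when the second error happens? Recall from the “Delegation Tokens at the Server-side” section that the server has a background thread that removes expired tokens. If a token is used for authentication after that background thread has removed it, the server’s verification logic cannot find the token, and it throws an exception saying “token can’t be found”.

The figure below shows the sequence of these events.

Note that when a token is explicitly cancelled, it is removed from the store immediately. So for cancelled tokens, the error is always “token can’t be found”.

To debug these errors further, grep both the client logs and the server logs for the specific token sequence number (“sequenceNumber=7” or “sequenceNumber=8” in the examples above). In the server logs, you should be able to see the events related to the token’s creation, renewals (if any) and cancellation (if any).

Long-running Applications

At this point, you know all the basics of Hadoop Delegation Tokens. But one piece is still missing: we know that Delegation Tokens cannot be renewed beyond their max lifetime, so what happens to applications that need to run longer than that?

The bad news is that Hadoop does not provide a unified way for all applications to do this, so there is no magic configuration that any application can turn on to make it “just work”. But it is still possible. The good news for Spark-submitted applications is that Spark has already implemented these magic parameters (spark-submit’s --principal and --keytab options). Spark obtains Delegation Tokens and uses them for authentication, much as described in the earlier sections. However, Spark does not renew the tokens; instead, it simply obtains new tokens when the current ones are about to expire. This allows the application to run forever. The relevant code is here. Note that this requires giving a Kerberos keytab to your Spark application.

But what if you are implementing a long-running service application and want the application to handle tokens explicitly? This involves two parts: renewing the tokens until they reach their max lifetime, and replacing the tokens after the max lifetime.

Note that this is not common practice, and is only recommended if you are implementing a service.

Implementing a Token Renewer

Let’s first look at how token renewal should be done. The best way is to study the YARN RM’s DelegationTokenRenewer code.

Some key points of that class are:

1. It is a single class that manages all tokens. Internally, it has a thread pool to renew tokens and another thread to cancel tokens. Renewal happens when about 90% of the time until expiration has elapsed. Cancellation has a small delay (30 seconds) to prevent races.

2. The expiration time of each token is managed separately. It is retrieved programmatically by calling the token’s renew() API, whose return value is the new expiration time.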

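```java
// Excerpt from YARN's DelegationTokenRenewer: renew() returns the token's new expiration time.
dttr.expirationDate =
    UserGroupInformation.getLoginUser().doAs(
        new PrivilegedExceptionAction<Long>() {
          @Override
          public Long run() throws Exception {
            return dttr.token.renew(dttr.conf);
          }
        });
```

This is another reason why the YARN RM renews a token immediately after receiving it: to find out when the token expires.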

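3. The max lifetime of each token is retrieved by decoding the token’s identifier and calling its getMaxDate() API. Other fields of the identifier can be obtained by calling similar APIs.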

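```java
// Excerpt: read the max date from the decoded HDFS token identifier.
if (token.getKind().equals(HDFS_DELEGATION_KIND)) {
  try {
    AbstractDelegationTokenIdentifier identifier =
        (AbstractDelegationTokenIdentifier) token.decodeIdentifier();
    maxDate = identifier.getMaxDate();
  } catch (IOException e) {
    throw new YarnRuntimeException(e);
  }
}
```

4. Because of 2 and 3, there is no need to read any configuration to determine the renew interval and max lifetime. The server’s configuration may change from time to time; the client should not rely on it, and has no way of knowing it.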

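Handling Tokens After Max Lifetime

Token renewal only renews a token up to its max lifetime. After the max lifetime, the job fails. If your application is long-running, consider using the mechanisms for long-lived services described in the YARN documentation, or add logic to your own delegation token renewer class to retrieve new delegation tokens when the existing ones are about to reach their max lifetime.

Other Ways Tokens Are Used

Congratulations! You have now finished reading about the concepts and details of delegation tokens. A few related aspects are not covered in this post; here is a brief introduction to them.

Delegation Tokens in other services: services such as Apache Oozie, Apache Hive and Apache Hadoop’s YARN RM also use delegation tokens for authentication.

Block Access Tokens: an HDFS client accesses a file by first contacting the NameNode to get the locations of the file’s blocks, and then accessing those blocks directly on the DataNodes. File permission checks happen in the NameNode. However, the subsequent block accesses on the DataNodes also need authorization checks, and Block Access Tokens serve this purpose. The HDFS NameNode issues them to the client, which then passes them to the DataNodes. Block Access Tokens are short-lived (10 hours by default) and cannot be renewed. If a Block Access Token expires, the client must request a new one.

Authentication Tokens: we have only covered delegation tokens. Hadoop also has the concept of authentication tokens, designed as a cheaper and more scalable authentication mechanism. An authentication token works like a cookie between the server and the client. Authentication tokens are granted by the server, cannot be renewed or used to impersonate others, and, unlike delegation tokens, do not need to be stored separately by the server. You do not need to code explicitly against authentication tokens.

Conclusion

Delegation tokens play an important role in the Hadoop ecosystem. You should now understand what delegation tokens are for, how they are used, and why they are used this way. This knowledge is essential when writing and debugging applications.

Appendix

Appendix A. Configurations at the Server Side

The table below lists the configurations related to delegation tokens. See “Delegation Tokens at the Server-side” for an explanation of these properties.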

| Property | Configuration name in HDFS | Default in HDFS (ms) | Configuration name in KMS | Default in KMS (s) |
|---|---|---|---|---|
| Renew interval | dfs.namenode.delegation.token.renew-interval | 86400000 (1 day) | hadoop.kms.authentication.delegation-token.renew-interval.sec | 86400 (1 day) |
| Max lifetime | dfs.namenode.delegation.token.max-lifetime | 604800000 (7 days) | hadoop.kms.authentication.delegation-token.max-lifetime.sec | 604800 (7 days) |
| Interval of the background removal of expired tokens | Not configurable | 3600000 (1 hour) | hadoop.kms.authentication.delegation-token.removal-scan-interval.sec | 3600 (1 hour) |
| Master key rolling interval | dfs.namenode.delegation.key.update-interval | 86400000 (1 day) | hadoop.kms.authentication.delegation-token.update-interval.sec | 86400 (1 day) |

Table 4: Configuration properties and default values for HDFS and KMS
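Note that the HDFS values are in milliseconds, while the KMS property names end in .sec and take seconds. As a quick sanity check on Table 4, the snippet below (plain java.time, no Hadoop classes; the class name DefaultIntervals is hypothetical) confirms that each HDFS/KMS pair of defaults describes the same duration.

```java
import java.time.Duration;

/** Hypothetical check that the HDFS (ms) and KMS (s) defaults in Table 4 agree. */
final class DefaultIntervals {
  // {HDFS default in milliseconds, KMS default in seconds}, one pair per row of Table 4.
  static final long[][] PAIRS = {
      {86_400_000L, 86_400L},   // renew interval: 1 day
      {604_800_000L, 604_800L}, // max lifetime: 7 days
      {3_600_000L, 3_600L},     // expired-token removal scan: 1 hour
      {86_400_000L, 86_400L},   // master key update interval: 1 day
  };

  /** True if a millisecond value and a second value denote the same duration. */
  static boolean sameDuration(long hdfsMillis, long kmsSeconds) {
    return Duration.ofMillis(hdfsMillis).equals(Duration.ofSeconds(kmsSeconds));
  }
}
```

When copying values between the two services, converting through Duration like this avoids the classic factor-of-1000 mistake.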

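Appendix B. Example code for authenticating with Delegation Tokens

One concept to understand before looking at the code below is the UserGroupInformation (UGI) class. UGI is Hadoop’s public API for coding against authentication. It is used in the code examples below, and also appeared earlier in some of the exception stack traces.

getFileStatus is used as an example of accessing HDFS with UGI. See the FileSystem class javadoc for details.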

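```java
import java.security.PrivilegedExceptionAction;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.security.Credentials;
import org.apache.hadoop.security.UserGroupInformation;
import org.apache.hadoop.security.token.Token;

// The statements below run inside a method declared "throws IOException, InterruptedException".
Path filePath = new Path("/path/to/file"); // hypothetical path, for illustration only

UserGroupInformation tokenUGI = UserGroupInformation.createRemoteUser("user_name");
UserGroupInformation kerberosUGI =
    UserGroupInformation.loginUserFromKeytabAndReturnUGI("principal_in_keytab", "path_to_keytab_file");
Credentials creds = new Credentials();

kerberosUGI.doAs((PrivilegedExceptionAction<Void>) () -> {
  Configuration conf = new Configuration();
  FileSystem fs = FileSystem.get(conf);
  fs.getFileStatus(filePath); // ← kerberosUGI can authenticate via Kerberos

  // Get delegation tokens and add them to the provided credentials; set the renewer to "yarn".
  Token<?>[] newTokens = fs.addDelegationTokens("yarn", creds);

  // Add all the credentials to the UGI object.
  tokenUGI.addCredentials(creds);

  // Alternatively, add the tokens to the UGI one by one. This is functionally the same
  // as the above, but gives you the option to log each token acquired.
  for (Token<?> token : newTokens) {
    tokenUGI.addToken(token);
  }
  return null;
});
```

Note that the addDelegationTokens RPC call must be invoked with Kerberos authentication. Otherwise, an IOException is thrown with the message “Delegation Token can be issued only with kerberos or web authentication”.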

Now we can use the acquired delegation tokens to access HDFS.

```java
tokenUGI.doAs((PrivilegedExceptionAction<Void>) () -> {
  Configuration conf = new Configuration();
  FileSystem fs = FileSystem.get(conf);
  fs.getFileStatus(filePath); // ← tokenUGI can authenticate via Delegation Token
  return null;
});
```

---------------------------------------------------------English version below------------------------------------------------------------------

Apache Hadoop’s security was designed and implemented around 2009, and has been stabilizing since then. However, due to a lack of documentation around this area, it’s hard to understand or debug when problems arise. Delegation tokens were designed and are widely used in the Hadoop ecosystem as an authentication method. This blog post introduces the concept of Hadoop Delegation Tokens in the context of Hadoop Distributed File System (HDFS) and Hadoop Key Management Server (KMS), and provides some basic code and troubleshooting examples. It is noteworthy that there are a lot of other services in the Hadoop ecosystem that also utilize delegation tokens, but for brevity we will only discuss HDFS and KMS.

This blog assumes the reader understands the basic concepts of authentication and Kerberos (to follow the authentication flow), as well as HDFS Architecture and HDFS Transparent Encryption (to understand what HDFS and KMS are). For readers who are not interested in HDFS transparent encryption, the KMS portions in this blog post can be ignored. A previous blog post about general Authorization and Authentication in Hadoop can be found here.

Quick Introduction to Hadoop Security

Hadoop was initially implemented without real authentication, which meant that data stored in Hadoop could be easily compromised. Security was added later, in 2010, via HADOOP-4487, with the following two fundamental goals:

- Preventing the data stored in HDFS from unauthorized access

- Not adding significant cost while achieving goal #1.

In order to achieve the first goal, we need to ensure that:

- Any client accessing the cluster is authenticated, to ensure it is who it claims to be.

- All servers in the cluster are authenticated as legitimate members of the cluster.

For this goal, Kerberos was chosen as the underlying authentication service. Other mechanisms such as Delegation Tokens, Block Access Tokens and Trust were added to complement Kerberos. In particular, Delegation Tokens were introduced to achieve the second goal (see the next section for how). Below is a simplified diagram that illustrates where Kerberos and Delegation Tokens are used in the context of HDFS (other services are similar):

Figure 1: Simplified Diagram of HDFS Authentication Mechanisms

In the simple HDFS example above, there are several authentication mechanisms in play:

- The end user (joe) can talk to the HDFS NameNode using Kerberos

- The distributed tasks the end user (joe) submits can access the HDFS NameNode using joe’s Delegation Tokens. This will be the focus for the rest of this blog post.

- The HDFS DataNodes talk to the HDFS NameNode using Kerberos

- The end user and the distributed tasks can access HDFS DataNodes using Block Access Tokens.

We will give a brief introduction to some of the above mechanisms in the Other Ways Tokens Are Used section at the end of this blog post. To read about more details of Hadoop security design, please refer to the design doc in HADOOP-4487 and the Hadoop Security Architecture Presentation.

What is a Delegation Token?

While it’s theoretically possible to use Kerberos alone for authentication, it has its own problems when used in a distributed system like Hadoop. Imagine that for each MapReduce job, all the worker tasks had to authenticate via Kerberos using a delegated TGT (Ticket Granting Ticket): the Kerberos Key Distribution Center (KDC) would quickly become the bottleneck. The red lines in the graph below demonstrate the issue: there could be thousands of node-to-node communications for a job, resulting in the same magnitude of KDC traffic. In fact, in very large clusters it would inadvertently be performing a distributed denial of service attack on the KDC.

Figure 2: Simplified Diagram Showing The Authentication Scaling Problem in Hadoop

Thus Delegation Tokens were introduced as a lightweight authentication method to complement Kerberos authentication. Kerberos is a three-party protocol; in contrast, Delegation Token authentication is a two-party authentication protocol.

Delegation Tokens work as follows:

- The client initially authenticates with each server via Kerberos, and obtains a Delegation Token from that server.

- The client uses the Delegation Tokens for subsequent authentications with the servers instead of using Kerberos.

The client can and very often does pass Delegation Tokens to other services (such as YARN) so that the other services (such as the mappers and reducers) can authenticate as, and run jobs on behalf of, the client. In other words, the client can ‘delegate’ credentials to those services. Delegation Tokens have an expiration time and require periodic renewals to keep their validity. However, they can’t be renewed indefinitely – there is a max lifetime. Before it expires, the Delegation Token can be cancelled too.

Delegation Tokens eliminate the need to distribute a Kerberos TGT or keytab, which, if compromised, would grant access to all services. On the other hand, a Delegation Token is strictly tied to its associated service and eventually expires, causing less damage if exposed. Moreover, Delegation Tokens make credential renewal more lightweight. This is because the renewal is designed in such a way that only the renewer and the service are involved in the renewal process. The token itself remains the same, so all parties already using the token do not have to be updated.

For availability reasons, the Delegation Tokens are persisted by the servers. HDFS NameNode persists the Delegation Tokens to its metadata (aka. fsimage and edit logs). KMS persists the Delegation Tokens into Apache ZooKeeper, in the form of ZNodes. This allows the Delegation Tokens to be usable even if the servers restart or fail over.

The server and the client have different responsibilities for handling Delegation Tokens. The two sections below provide some details.

Delegation Tokens at the Server-side

The server (that is, HDFS NN and KMS in Figure 2.) is responsible for:

- Issuing Delegation Tokens, and storing them for verification.

- Renewing Delegation Tokens upon request.

- Removing the Delegation Tokens either when they are canceled by the client, or when they expire.

- Authenticating clients by verifying the provided Delegation Tokens against the stored Delegation Tokens.

Delegation Tokens in Hadoop are generated and verified following the HMAC mechanism. A Delegation Token carries two parts of information: a public part and a private part. Delegation Tokens are stored at the server side as a hashmap with the public information as the key and the private information as the value.

The public information is used for token identification in the form of an identifier object. It consists of:

| Field | Description |
|---|---|
| Kind | The kind of token (HDFS_DELEGATION_TOKEN, or kms-dt). The token also contains the kind, matching the identifier’s kind. |
| Owner | The user who owns the token. |
| Renewer | The user who can renew the token. |
| Real User | Only relevant if the owner is impersonated. If the token is created by an impersonating user, this will identify the impersonating user. For example, when oozie impersonates user joe, Owner will be joe and Real User will be oozie. |
| Issue Date | Epoch time when the token was issued. |
| Max Date | Epoch time until which the token can be renewed. |
| Sequence Number | Integer that uniquely identifies the token among those issued by the service (e.g. sequenceNumber=4 in the log excerpt below). |
| Master Key ID | ID of the master key used to create and verify the token. |

Table 1: Token Identifier (public part of a Delegation Token)

The private information is represented by the class DelegationTokenInformation in AbstractDelegationTokenSecretManager. It is critical for security and contains the following fields:

| Field | Description |
|---|---|
| renewDate | Epoch time when the token is expected to be renewed. If it’s smaller than the current time, it means the token has expired. |
| password | The password calculated as HMAC of Token Identifier using master key as the HMAC key. It’s used to validate the Delegation Token provided by the client to the server. |
| trackingId | A tracking identifier that can be used to associate usages of a token across multiple client sessions. It is computed as the MD5 of the token identifier. |

Table 2: Delegation Token Information (private part of a Delegation Token)
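To make Tables 1 and 2 more concrete, here is a minimal, self-contained sketch of the server-side bookkeeping described above: a map from the public identifier to its private part, with the password computed as an HMAC of the identifier under the current master key, and the trackingId computed as the MD5 of the identifier. It is an illustration, not Hadoop’s AbstractDelegationTokenSecretManager. The class names, the text encoding of the identifier, and the choice of HmacSHA1 (which we believe has been Hadoop’s default, but treat that as an assumption) are all assumptions.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.MessageDigest;
import java.util.HashMap;
import java.util.Map;
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;

/** Hypothetical stand-in for the public part of a token (Table 1). */
final class SketchIdentifier {
  final String kind, owner, renewer;
  final long issueDate, maxDate;
  final int sequenceNumber, masterKeyId;

  SketchIdentifier(String kind, String owner, String renewer, long issueDate,
                   long maxDate, int sequenceNumber, int masterKeyId) {
    this.kind = kind; this.owner = owner; this.renewer = renewer;
    this.issueDate = issueDate; this.maxDate = maxDate;
    this.sequenceNumber = sequenceNumber; this.masterKeyId = masterKeyId;
  }

  /** Assumed text encoding that mimics the log format; NOT Hadoop's Writable serialization. */
  String encoded() {
    return kind + " owner=" + owner + ", renewer=" + renewer + ", realUser=, issueDate="
        + issueDate + ", maxDate=" + maxDate + ", sequenceNumber=" + sequenceNumber
        + ", masterKeyId=" + masterKeyId;
  }
}

/** Hypothetical stand-in for the private part (Table 2). */
final class SketchTokenInfo {
  final long renewDate;
  final byte[] password;
  final String trackingId;

  SketchTokenInfo(long renewDate, byte[] password, String trackingId) {
    this.renewDate = renewDate; this.password = password; this.trackingId = trackingId;
  }
}

final class SketchSecretManager {
  private final Map<Integer, byte[]> masterKeys = new HashMap<>();    // masterKeyId -> key
  private final Map<String, SketchTokenInfo> store = new HashMap<>(); // public -> private
  private final long renewIntervalMs, maxLifetimeMs;
  private int currentKeyId = 0;
  private int nextSequenceNumber = 1;

  SketchSecretManager(long renewIntervalMs, long maxLifetimeMs, byte[] firstKey) {
    this.renewIntervalMs = renewIntervalMs;
    this.maxLifetimeMs = maxLifetimeMs;
    rollMasterKey(firstKey);
  }

  /** Rolls the master key; old keys stay in the map under their IDs. */
  void rollMasterKey(byte[] key) {
    masterKeys.put(++currentKeyId, key.clone());
  }

  /** Issues a token: password = HMAC(current master key, identifier); stores the private part. */
  SketchIdentifier issue(String kind, String owner, String renewer, long now) {
    SketchIdentifier id = new SketchIdentifier(kind, owner, renewer, now,
        now + maxLifetimeMs, nextSequenceNumber++, currentKeyId);
    String pub = id.encoded();
    byte[] password = hmacSha1(masterKeys.get(id.masterKeyId), pub);
    store.put(pub, new SketchTokenInfo(now + renewIntervalMs, password, md5Hex(pub)));
    return id;
  }

  /** The password handed to the client along with the identifier (see Table 3). */
  byte[] passwordFor(SketchIdentifier id) {
    SketchTokenInfo info = store.get(id.encoded());
    return info == null ? null : info.password.clone();
  }

  /** Verifies a presented (identifier, password) pair against the stored private part. */
  boolean passwordMatches(SketchIdentifier id, byte[] presented) {
    SketchTokenInfo info = store.get(id.encoded());
    return info != null && presented != null && MessageDigest.isEqual(info.password, presented);
  }

  String trackingId(SketchIdentifier id) {
    SketchTokenInfo info = store.get(id.encoded());
    return info == null ? null : info.trackingId;
  }

  private static byte[] hmacSha1(byte[] key, String data) {
    try {
      Mac mac = Mac.getInstance("HmacSHA1");
      mac.init(new SecretKeySpec(key, "HmacSHA1"));
      return mac.doFinal(data.getBytes(StandardCharsets.UTF_8));
    } catch (GeneralSecurityException e) {
      throw new IllegalStateException(e); // HmacSHA1 must exist on every Java platform
    }
  }

  private static String md5Hex(String data) {
    try {
      byte[] digest = MessageDigest.getInstance("MD5").digest(data.getBytes(StandardCharsets.UTF_8));
      StringBuilder hex = new StringBuilder();
      for (byte b : digest) hex.append(String.format("%02x", b));
      return hex.toString();
    } catch (GeneralSecurityException e) {
      throw new IllegalStateException(e); // MD5 must exist on every Java platform
    }
  }
}
```

Because the master key never leaves the server, a client that tampers with any identifier field (for example, pushing maxDate further out) cannot compute a matching password, so the modified token fails verification.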

Notice the master key ID in Table 1, which is the ID of the master key living on the server. The master key is used to generate every Delegation Token. It is rolled at a configured interval, and never leaves the server.

The server also has a configuration to specify a renew interval (default is 24 hours), which is also the expiration time of the Delegation Token: a token stays valid for one renew interval after it is issued or renewed (but never past its max date). Expired Delegation Tokens cannot be used to authenticate, and the server has a background thread to remove expired Delegation Tokens from the server’s token store.

Only the renewer of the Delegation Token can renew it, before it expires. A successful renewal extends the Delegation Token’s expiration time for another renew-interval, until it reaches its max lifetime (default is 7 days).
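The renewal rules in the last two paragraphs (only the designated renewer may renew, only before expiry, and each renewal extends the expiration by one renew interval but never past the max date) fit in a few lines. The class below is a hypothetical model of those rules, not Hadoop code; the exception types and messages are illustrative.

```java
/** Hypothetical model of the server-side renewal rules described above; not Hadoop code. */
final class RenewalRules {
  static final long DAY_MS = 24L * 60 * 60 * 1000;

  /**
   * Returns the new expiration time (epoch ms), or throws if the renewal must be rejected.
   *
   * @param renewer       renewer recorded in the token identifier
   * @param caller        authenticated user asking for the renewal
   * @param renewDate     current expiration time of the token
   * @param maxDate       max date from the token identifier
   * @param renewInterval configured renew interval in ms
   * @param now           current time
   */
  static long renew(String renewer, String caller, long renewDate, long maxDate,
                    long renewInterval, long now) {
    if (!renewer.equals(caller)) {
      throw new SecurityException(caller + " tried to renew a token whose renewer is " + renewer);
    }
    if (renewDate < now) {
      throw new IllegalStateException("token is expired");
    }
    if (maxDate < now) {
      throw new IllegalStateException("token can't be renewed past its max date");
    }
    // Extend by one renew interval, but never beyond the max lifetime.
    return Math.min(maxDate, now + renewInterval);
  }
}
```

With the defaults (1-day renew interval, 7-day max lifetime), a token renewed daily reaches its max date after seven days: the last renewal returns exactly maxDate, and after that the renewal is rejected.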

The table in Appendix A gives the configuration names and their default values for HDFS and KMS.

Delegation Tokens at the Client-side

The client is responsible for:

- Requesting new Delegation Tokens from the server. A renewer can be specified when requesting the token.

- Renewing Delegation Tokens (if the client specifies itself as the ‘renewer’), or asking another party (the specified ‘renewer’) to renew Delegation Tokens.

- Requesting the server to cancel Delegation Tokens.

- Presenting a Delegation Token to authenticate with the server.

The client-side-visible Token class is defined here. The following table describes what’s contained in the Token.

| Field | Description |
|---|---|
| identifier | The token identifier matching the public information part at the server side. |
| password | The password matching the password at the server side. |
| kind | The kind of token (e.g. HDFS_DELEGATION_TOKEN, or kms-dt), it matches the identifier’s kind. |
| service | The name of the service (e.g. ha-hdfs:… for HDFS). |
| renewer | The user who can renew the token (e.g. yarn). |

Table 3: Delegation Token at Client Side
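A client often holds several tokens at once (for example, the HDFS_DELEGATION_TOKEN and kms-dt tokens in the log excerpt below), so it has to pick the right one when connecting to a server. A natural way is to match on kind and service. The sketch below illustrates only that idea: ClientToken and TokenSelection are hypothetical, dependency-free classes, not Hadoop’s own Token or TokenSelector classes.

```java
import java.util.List;
import java.util.Optional;

/** Hypothetical stand-in for the client-visible fields of Table 3. */
final class ClientToken {
  final byte[] identifier;
  final byte[] password;
  final String kind;
  final String service;

  ClientToken(byte[] identifier, byte[] password, String kind, String service) {
    this.identifier = identifier;
    this.password = password;
    this.kind = kind;
    this.service = service;
  }
}

final class TokenSelection {
  /** Returns the first token whose kind and service both match the target server. */
  static Optional<ClientToken> select(List<ClientToken> tokens, String kind, String service) {
    return tokens.stream()
        .filter(t -> t.kind.equals(kind) && t.service.equals(service))
        .findFirst();
  }
}
```

Matching on both fields matters: a kms-dt token would be useless against the NameNode even if it were the only token available, and two HDFS clusters would have different service names.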

How authentication with Delegation Tokens works is explained in the next section.

Below is an example log excerpt at job submission time. The INFO logs are printed with tokens from all services. In the example below, we see an HDFS Delegation Token, and a KMS Delegation Token.

```text
$ hadoop jar /opt/cloudera/parcels/CDH/jars/hadoop-mapreduce-examples-2.6.0-cdh5.13.0.jar pi 2 3
Number of Maps = 2
Samples per Map = 3
Wrote input for Map #0
Wrote input for Map #1
Starting Job
17/10/22 20:50:03 INFO client.RMProxy: Connecting to ResourceManager at example.cloudera.com/172.31.113.88:8032
17/10/22 20:50:03 INFO hdfs.DFSClient: Created token for xiao: HDFS_DELEGATION_TOKEN owner=…, renewer=yarn, realUser=, issueDate=1508730603423, maxDate=1509335403423, sequenceNumber=4, masterKeyId=39 on ha-hdfs:ns1
17/10/22 20:50:03 INFO security.TokenCache: Got dt for hdfs://ns1; Kind: HDFS_DELEGATION_TOKEN, Service: ha-hdfs:ns1, Ident: (token for xiao: HDFS_DELEGATION_TOKEN owner=…, renewer=yarn, realUser=, issueDate=1508730603423, maxDate=1509335403423, sequenceNumber=4, masterKeyId=39)
17/10/22 20:50:03 INFO security.TokenCache: Got dt for hdfs://ns1; Kind: kms-dt, Service: 172.31.113.88:16000, Ident: (kms-dt owner=xiao, renewer=yarn, realUser=, issueDate=1508730603474, maxDate=1509335403474, sequenceNumber=7, masterKeyId=69)
…
```
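When reading logs like this one, it helps to extract the identifier fields programmatically. The hypothetical helper below parses the key=value pairs of a printed identifier, such as the kms-dt one above, and computes maxDate minus issueDate. It depends only on the printed format seen in this excerpt, which may differ between Hadoop versions.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper: extracts key=value fields from a printed token identifier. */
final class TokenLogFields {
  // A value runs until the next comma, parenthesis or whitespace; it may be empty (realUser=).
  private static final Pattern FIELD = Pattern.compile("(\\w+)=([^,()\\s]*)");

  static Map<String, String> parse(String identifierText) {
    Map<String, String> fields = new LinkedHashMap<>();
    Matcher m = FIELD.matcher(identifierText);
    while (m.find()) {
      fields.put(m.group(1), m.group(2));
    }
    return fields;
  }

  /** Max lifetime implied by the identifier, in milliseconds. */
  static long maxLifetimeMs(Map<String, String> fields) {
    return Long.parseLong(fields.get("maxDate")) - Long.parseLong(fields.get("issueDate"));
  }
}
```

For the kms-dt token above (issueDate=1508730603474, maxDate=1509335403474) the difference is 604800000 ms, exactly the 7-day default max lifetime.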

For readers who want to write Java code to authenticate, sample code is provided in Appendix B.

Example: Delegation Tokens’ Lifecycle

Now that we understand what a Delegation Token is, let’s take a look at how it’s used in practice. The graph below shows an example flow of authentications for running a typical application, where the job is submitted through YARN, then is distributed to multiple worker nodes in the cluster to execute.

Figure 3: How Delegation Token is Used for Authentication In A Typical Job

For brevity, the steps of Kerberos authentication and the details about the task distribution are omitted. In general, the graph shows 5 steps:

- The client wants to run a job in the cluster. It gets an HDFS Delegation Token from HDFS NameNode, and a KMS Delegation Token from the KMS.

- The client submits the job to the YARN Resource Manager (RM), passing the Delegation Tokens it just acquired, as well as the ApplicationSubmissionContext.

- YARN RM verifies the Delegation Tokens are valid by immediately renewing them. Then it launches the job, which is distributed (together with the Delegation Tokens) to the worker nodes in the cluster.

- Each worker node authenticates with HDFS using the HDFS Delegation Token as they access HDFS data, and authenticates with KMS using the KMS Delegation Token as they decrypt the HDFS files in encryption zones.

- After the job is finished, the RM cancels the Delegation Tokens for the job.

Note: A step not drawn in the above diagram is that the RM also tracks each Delegation Token’s expiration time, and renews the Delegation Token when about 90% of the time until its expiration has elapsed. Note that Delegation Tokens are tracked in the RM on an individual basis, not a per-kind basis. This way, tokens with different renewal intervals can all be renewed correctly. The token renewer classes are implemented using Java ServiceLoader, so the RM doesn’t have to be aware of the token kinds. For extremely interested readers, the relevant code is in the RM’s DelegationTokenRenewer class.
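The scheduling rule can be sketched as follows. This is a hypothetical model rather than the RM’s DelegationTokenRenewer: it assumes the renewal fires once 90% of the time remaining until expiration has elapsed, and it takes a caller-supplied callback that renews the token and returns the new expiration time (mirroring how a token’s renew() call returns the expiration time). Each token gets its own chain of scheduled tasks, which is what lets tokens with different renew intervals coexist.

```java
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.function.LongSupplier;

/** Hypothetical sketch of per-token renewal scheduling at ~90% of the remaining lifetime. */
final class RenewalScheduler {
  /** Delay (ms) from now until the next renewal: 90% of the time left before expiration. */
  static long delayUntilRenewal(long expirationMs, long nowMs) {
    long remaining = Math.max(0L, expirationMs - nowMs);
    return remaining - remaining / 10;
  }

  /**
   * Schedules one renewal for one token. The callback renews the token and returns its new
   * expiration time; the next renewal is then scheduled from that value.
   */
  static void schedule(ScheduledExecutorService pool, LongSupplier renewAndGetExpiration,
                       long expirationMs) {
    long delay = delayUntilRenewal(expirationMs, System.currentTimeMillis());
    pool.schedule(() -> {
      long next = renewAndGetExpiration.getAsLong();
      // Stop once renewal no longer moves the expiration forward (max date reached).
      if (next > expirationMs) {
        schedule(pool, renewAndGetExpiration, next);
      }
    }, delay, TimeUnit.MILLISECONDS);
  }
}
```

With a 1-day renew interval, a freshly renewed token is renewed again after about 21.6 hours. Stopping once a renewal no longer moves the expiration forward avoids renewing over and over as the token approaches its max date.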

What is this InvalidToken error?

Now we know what Delegation Tokens are and how they are used when running a typical job. But don’t stop here! Let’s look at some typical Delegation Token related error messages from application logs, and figure out what they mean.

Token is expired

Sometimes, the application fails with AuthenticationException, with an InvalidToken exception wrapped inside. The exception message indicates that “token is expired”. Guess why this could happen?

```text
…
17/10/22 20:50:12 INFO mapreduce.Job: Job job_1508730362330_0002 failed with state FAILED due to: Application application_1508730362330_0002 failed 2 times due to AM Container for appattempt_1508730362330_0002_000002 exited with exitCode: -1000
For more detailed output, check application tracking page:https://example.cloudera.com:8090/proxy/application_1508730362330_0002/Then, click on links to logs of each attempt.
Diagnostics: org.apache.hadoop.security.authentication.client.AuthenticationException: org.apache.hadoop.security.token.SecretManager$InvalidToken: token (kms-dt owner=xiao, renewer=yarn, realUser=, issueDate=1508730603474, maxDate=1509335403474, sequenceNumber=7, masterKeyId=69) is expired, current time: 2017-10-22 20:50:12,166-0700 expected renewal time: 2017-10-22 20:50:05,518-0700
…
Caused by: org.apache.hadoop.security.authentication.client.AuthenticationException: org.apache.hadoop.security.token.SecretManager$InvalidToken: token (kms-dt owner=xiao, renewer=yarn, realUser=, issueDate=1508730603474, maxDate=1509335403474, sequenceNumber=7, masterKeyId=69) is expired, current time: 2017-10-22 20:50:12,166-0700 expected renewal time: 2017-10-22 20:50:05,518-0700
    at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
    …
    at org.apache.hadoop.crypto.key.kms.KMSClientProvider.call(KMSClientProvider.java:535)
    …
```

Token can’t be found in cache

Sometimes, the application fails with AuthenticationException, with an InvalidToken exception wrapped inside. The exception message indicates that “token can’t be found in cache”. Guess why this could happen, and how it differs from the “token is expired” error?

```text
…
17/10/22 20:55:47 INFO mapreduce.Job: Job job_1508730362330_0003 failed with state FAILED due to: Application application_1508730362330_0003 failed 2 times due to AM Container for appattempt_1508730362330_0003_000002 exited with exitCode: -1000
For more detailed output, check application tracking page:https://example.cloudera.com:8090/proxy/application_1508730362330_0003/Then, click on links to logs of each attempt.
Diagnostics: org.apache.hadoop.security.authentication.client.AuthenticationException: org.apache.hadoop.security.token.SecretManager$InvalidToken: token (kms-dt owner=xiao, renewer=yarn, realUser=, issueDate=1508730937041, maxDate=1509335737041, sequenceNumber=8, masterKeyId=73) can’t be found in cache
java.io.IOException: org.apache.hadoop.security.authentication.client.AuthenticationException: org.apache.hadoop.security.token.SecretManager$InvalidToken: token (kms-dt owner=xiao, renewer=yarn, realUser=, issueDate=1508730937041, maxDate=1509335737041, sequenceNumber=8, masterKeyId=73) can’t be found in cache
    at org.apache.hadoop.crypto.key.kms.LoadBalancingKMSClientProvider.decryptEncryptedKey(LoadBalancingKMSClientProvider.java:294)
    at org.apache.hadoop.crypto.key.KeyProviderCryptoExtension.decryptEncryptedKey(KeyProviderCryptoExtension.java:528)
    at org.apache.hadoop.hdfs.DFSClient.decryptEncryptedDataEncryptionKey(DFSClient.java:1448)
    …
    at org.apache.hadoop.fs.FileSystem.open(FileSystem.java:784)
    at org.apache.hadoop.fs.FileUtil.copy(FileUtil.java:367)
    at org.apache.hadoop.yarn.util.FSDownload.copy(FSDownload.java:265)
    at org.apache.hadoop.yarn.util.FSDownload.access$000(FSDownload.java:61)
    at org.apache.hadoop.yarn.util.FSDownload$2.run(FSDownload.java:359)
    at org.apache.hadoop.yarn.util.FSDownload$2.run(FSDownload.java:357)
    …
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
    at java.lang.Thread.run(Thread.java:748)
Caused by: org.apache.hadoop.security.authentication.client.AuthenticationException: org.apache.hadoop.security.token.SecretManager$InvalidToken: token (kms-dt owner=xiao, renewer=yarn, realUser=, issueDate=1508730937041, maxDate=1509335737041, sequenceNumber=8, masterKeyId=73) can’t be found in cache
    …
    at org.apache.hadoop.crypto.key.kms.KMSClientProvider.call(KMSClientProvider.java:535)
    …
```

Explanation:

The two errors above share the same cause: the token that was used to authenticate has expired, and can no longer be used to authenticate.

The first message was able to tell explicitly that the token has expired, because that token is still stored in the server. Therefore when the server verifies the token, the verification on expiration time fails, throwing the “token is expired” exception.

Now can you guess when the second error could happen? Remember in the ‘Delegation Tokens at the Server-side’ section, we explained the server has a background thread to remove expired tokens. So if the token is being used for authentication after the server’s background thread has removed it, the verification logic on the server cannot find this token. This results in an exception being thrown, saying “token can’t be found”.

The graph below shows the sequence of these events.

Figure 4: Life Cycle of Delegation Token

Note that when a token is explicitly canceled, it is removed from the store immediately. So for canceled tokens, the error is always “token can’t be found”.
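The difference between the two messages can be reproduced with a small in-memory model. The sketch below is hypothetical and heavily simplified: SimpleTokenStore, TokenStoreDemo and their methods are illustrative names, not Hadoop's secret manager classes. A token that is still stored but past its expiration yields “is expired”; a token removed by the background scan, or by cancellation, yields “can't be found”.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical, simplified server-side token store (not Hadoop's implementation). */
class SimpleTokenStore {
    // sequenceNumber -> current expiration time (epoch ms)
    private final Map<Integer, Long> expirations = new HashMap<>();

    void issue(int sequenceNumber, long expirationMillis) {
        expirations.put(sequenceNumber, expirationMillis);
    }

    /** Cancellation removes the token from the store immediately. */
    void cancel(int sequenceNumber) {
        expirations.remove(sequenceNumber);
    }

    /** What the background removal thread does on each scan. */
    void removeExpired(long nowMillis) {
        expirations.values().removeIf(expiration -> expiration < nowMillis);
    }

    /** Returns normally if the token is valid; otherwise throws with a descriptive message. */
    void verify(int sequenceNumber, long nowMillis) {
        Long expiration = expirations.get(sequenceNumber);
        if (expiration == null) {
            // Canceled, or already removed by the background scan.
            throw new IllegalStateException(
                "token (sequenceNumber=" + sequenceNumber + ") can't be found in cache");
        }
        if (expiration < nowMillis) {
            // Still stored, so the server can tell that it has expired.
            throw new IllegalStateException(
                "token (sequenceNumber=" + sequenceNumber + ") is expired");
        }
    }
}

class TokenStoreDemo {
    static String check(SimpleTokenStore store, int sequenceNumber, long nowMillis) {
        try {
            store.verify(sequenceNumber, nowMillis);
            return "valid";
        } catch (IllegalStateException e) {
            return e.getMessage();
        }
    }

    public static void main(String[] args) {
        SimpleTokenStore store = new SimpleTokenStore();
        store.issue(7, 1_000L);
        store.issue(8, 5_000L);
        System.out.println(check(store, 7, 2_000L)); // expired, still stored
        store.removeExpired(2_000L);                // background scan runs
        System.out.println(check(store, 7, 2_000L)); // removed by the scan
        store.cancel(8);
        System.out.println(check(store, 8, 2_000L)); // canceled before expiring
    }
}
```

In this model, the only thing that decides which message the client sees is whether the token is still present in the store at verification time.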

To debug these errors further, grep both the client logs and the server logs for the specific token sequence number (“sequenceNumber=7” or “sequenceNumber=8” in the examples above). The server logs should show the events related to that token: its creation, and any renewals or cancellations.
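When working through such logs, it can help to pull the fields out of the token description printed in the exception and turn the epoch-millisecond dates into readable timestamps. The helper below is a hypothetical sketch (TokenDescription is not a Hadoop class); it assumes the “token (… key=value, …)” format shown in the KMS error above, and other token kinds may print their descriptions differently.

```java
import java.time.Instant;
import java.util.HashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical debugging helper for token descriptions found in InvalidToken messages. */
class TokenDescription {
    // The text between "token (" and the closing ")".
    private static final Pattern TOKEN = Pattern.compile("token \\(([^)]*)\\)");
    // key=value pairs; a value may be empty, e.g. "realUser=".
    private static final Pattern FIELD = Pattern.compile("(\\w+)=([^,]*)");

    final String owner;
    final String renewer;
    final long issueDate;      // epoch milliseconds
    final long maxDate;        // epoch milliseconds
    final int sequenceNumber;

    private TokenDescription(Map<String, String> fields) {
        owner = fields.getOrDefault("owner", "");
        renewer = fields.getOrDefault("renewer", "");
        issueDate = Long.parseLong(fields.get("issueDate"));
        maxDate = Long.parseLong(fields.get("maxDate"));
        sequenceNumber = Integer.parseInt(fields.get("sequenceNumber"));
    }

    /** Parses the first token description found in an exception message or log line. */
    static TokenDescription parse(String message) {
        Matcher m = TOKEN.matcher(message);
        if (!m.find()) {
            throw new IllegalArgumentException("no token description in: " + message);
        }
        Map<String, String> fields = new HashMap<>();
        Matcher f = FIELD.matcher(m.group(1));
        while (f.find()) {
            fields.put(f.group(1), f.group(2).trim());
        }
        return new TokenDescription(fields);
    }

    /** The string to grep for in the client and server logs. */
    String grepKey() {
        return "sequenceNumber=" + sequenceNumber;
    }

    long lifetimeMillis() {
        return maxDate - issueDate;
    }

    String issueInstant() {
        return Instant.ofEpochMilli(issueDate).toString();
    }

    String maxInstant() {
        return Instant.ofEpochMilli(maxDate).toString();
    }
}
```

For the KMS token above, maxDate minus issueDate is exactly 604800000 ms, i.e. the 7-day default max lifetime listed in Appendix A, and the issue date decodes to 2017-10-23T03:55:37.041Z.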

Long-running Applications

At this point, you know the basics of Hadoop Delegation Tokens. But one piece is still missing: Delegation Tokens cannot be renewed beyond their max lifetime, so what happens to applications that need to run longer than that?

The bad news is that Hadoop has no unified way for all applications to do this, so there is no magic configuration that any application can turn on to make it ‘just work’. But it is still possible. The good news for Spark-submitted applications is that Spark provides parameters for this. Spark obtains Delegation Tokens and uses them for authentication, as described in the earlier sections. However, instead of renewing the tokens, Spark obtains a new token when the current one is about to expire, which allows the application to run indefinitely. The relevant code is here. Note that this requires giving a Kerberos keytab to your Spark application.

But what if you are implementing a long-running service and want the application itself to handle the tokens explicitly? This involves two parts: renewing the tokens until their max lifetime, and replacing the tokens once the max lifetime is reached.

Note that this is not common practice, and is recommended only if you are implementing a service.

Implementing a Token Renewer

Let’s first look at how token renewal should be done. The best reference is the YARN RM’s DelegationTokenRenewer code.

Some key points from that class are:

1. It is a single class that manages all the tokens. Internally, it has a thread pool to renew tokens and another thread to cancel tokens. Renewal happens at 90% of the time remaining until expiration. Cancellation has a small delay (30 seconds) to prevent races.

2. The expiration time of each token is managed separately. It is retrieved programmatically by calling the token’s renew() API, whose return value is the expiration time.

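```java
import java.security.PrivilegedExceptionAction;
import org.apache.hadoop.security.UserGroupInformation;

// Excerpt from YARN's DelegationTokenRenewer: renew the token as the RM's login user.
// renew() returns the new expiration time, which is stored for scheduling the next renewal.
dttr.expirationDate =
    UserGroupInformation.getLoginUser().doAs(
        new PrivilegedExceptionAction<Long>() {
          @Override
          public Long run() throws Exception {
            return dttr.token.renew(dttr.conf);
          }
        });
```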

This is another reason why the YARN RM renews a token immediately after receiving it: to learn when it will expire.

3. The max lifetime of each token is retrieved by decoding the token’s identifier and calling its getMaxDate() API. Other fields of the identifier can be obtained through similar APIs.

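```java
import java.io.IOException;
import org.apache.hadoop.security.token.delegation.AbstractDelegationTokenIdentifier;
import org.apache.hadoop.yarn.exceptions.YarnRuntimeException;

// Excerpt from YARN's DelegationTokenRenewer. HDFS_DELEGATION_KIND is the kind
// of HDFS delegation tokens ("HDFS_DELEGATION_TOKEN").
if (token.getKind().equals(HDFS_DELEGATION_KIND)) {
  try {
    // Decode the identifier to read fields such as the max lifetime.
    AbstractDelegationTokenIdentifier identifier =
        (AbstractDelegationTokenIdentifier) token.decodeIdentifier();
    maxDate = identifier.getMaxDate();
  } catch (IOException e) {
    throw new YarnRuntimeException(e);
  }
}
```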

4. Because of 2 and 3, no configuration needs to be read to determine the renew interval and max lifetime. The server’s configuration may change from time to time; the client should not depend on it, and has no way to know it anyway.
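The points above can be condensed into a small scheduler. This is a minimal sketch under stated assumptions, not YARN’s DelegationTokenRenewer: RenewableToken is a hypothetical interface whose renew() stands in for Token.renew(conf) and whose maxDate() stands in for decodeIdentifier().getMaxDate(). Like point 2, it renews once immediately to learn the expiration time; like point 1, it schedules the next renewal at 90% of the remaining time and delays cancellation by 30 seconds; like point 4, it reads no configuration.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

/** Hypothetical stand-in for a Hadoop token. */
interface RenewableToken {
    long renew() throws Exception; // returns the new expiration time (epoch ms)
    long maxDate();                // max lifetime (epoch ms) from the token identifier
}

/** Minimal renewer sketch loosely following the points above. */
class SimpleTokenRenewer {
    static final long CANCEL_DELAY_MILLIS = 30_000L; // small delay before cancel, to avoid races

    private final ScheduledExecutorService pool = Executors.newScheduledThreadPool(2);

    /** Delay until the next renewal: 90% of the time left before expiration. */
    static long renewDelayMillis(long nowMillis, long expirationMillis) {
        long expiresIn = Math.max(0L, expirationMillis - nowMillis);
        return expiresIn - expiresIn / 10;
    }

    /** Renews right away (to learn the expiration time), then keeps rescheduling. */
    void manage(RenewableToken token) {
        pool.execute(() -> renewAndReschedule(token));
    }

    /** Runs the cancel action after a short delay instead of immediately. */
    void cancelLater(Runnable cancelAction) {
        pool.schedule(cancelAction, CANCEL_DELAY_MILLIS, TimeUnit.MILLISECONDS);
    }

    void shutdown() {
        pool.shutdownNow();
    }

    private void renewAndReschedule(RenewableToken token) {
        try {
            long expiration = token.renew();
            if (expiration >= token.maxDate()) {
                return; // renewal cannot extend the token any further
            }
            long delay = renewDelayMillis(System.currentTimeMillis(), expiration);
            pool.schedule(() -> renewAndReschedule(token), delay, TimeUnit.MILLISECONDS);
        } catch (Exception e) {
            // A real renewer would log this and decide whether to retry; the sketch gives up.
            System.err.println("renewal failed: " + e);
        }
    }
}
```

With a 1-day renew interval, the first renewal after issuance would be scheduled about 21.6 hours later (86400000 − 8640000 ms). Remember to call shutdown(), since the pool threads are not daemon threads.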

Handling Tokens after Max Lifetime

The token renewer can only renew a token up to its max lifetime; after that, the job will fail. If your application is long-running, consider either using the mechanisms described in the YARN documentation about long-lived services, or adding logic to your own delegation token renewer class that retrieves new delegation tokens when the existing ones are about to reach their max lifetime.
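The choice between renewing and re-acquiring a token can be expressed as a small policy function. The sketch below is hypothetical (TokenAction, TokenLifecyclePolicy and both thresholds are illustrative names, not a Hadoop API): it assumes the expiration time comes from the last renew() call and the max date from the token identifier, as described earlier.

```java
/** What a long-running service could do with one of its tokens at a given moment. */
enum TokenAction { NOTHING, RENEW, REACQUIRE }

/** Hypothetical policy sketch; thresholds are supplied by the caller. */
class TokenLifecyclePolicy {
    /**
     * @param now             current time (epoch ms)
     * @param expiration      current expiration, i.e. the value returned by the last renew()
     * @param maxDate         max lifetime from the token identifier's getMaxDate()
     * @param renewThreshold  renew once this little time (ms) is left before expiration
     * @param reacquireMargin obtain a brand-new token this long (ms) before maxDate
     */
    static TokenAction decide(long now, long expiration, long maxDate,
                              long renewThreshold, long reacquireMargin) {
        if (now >= maxDate - reacquireMargin) {
            // Renewal can no longer extend the token; a new one must be obtained,
            // which again requires Kerberos credentials such as a keytab.
            return TokenAction.REACQUIRE;
        }
        if (expiration - now <= renewThreshold) {
            return TokenAction.RENEW;
        }
        return TokenAction.NOTHING;
    }
}
```

Re-acquiring slightly before maxDate, rather than exactly at it, leaves time to distribute the new tokens to the tasks that use them.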

Other Ways Tokens Are Used

Congratulations! You have now finished reading the concepts and details of Delegation Tokens. There are a few related aspects that this blog post does not cover; below is a brief introduction to them.

Delegation Tokens in other services: Services such as Apache Oozie, Apache Hive, and Apache Hadoop’s YARN RM also utilize Delegation Tokens for authentication.

Block Access Token: HDFS clients access a file by first contacting the NameNode to get the block locations of the file, and then accessing the blocks directly on the DataNodes. File permission checking takes place in the NameNode, but the subsequent data block accesses on DataNodes require authorization checks as well. Block Access Tokens serve this purpose: the HDFS NameNode issues them to the client, which then passes them to the DataNodes. A Block Access Token has a short lifetime (10 hours by default) and cannot be renewed. If a Block Access Token expires, the client has to request a new one.
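Because such a token cannot be renewed, the only option after expiry is to fetch a new one. The HDFS client library normally handles this for you; the sketch below only illustrates the pattern with hypothetical classes (ShortLivedToken, NonRenewableTokenCache), where the fetcher stands in for asking the NameNode again.

```java
import java.util.function.LongSupplier;
import java.util.function.Supplier;

/** Hypothetical non-renewable token: an id plus an expiry time (epoch ms). */
final class ShortLivedToken {
    final String id;
    final long expiresAtMillis;

    ShortLivedToken(String id, long expiresAtMillis) {
        this.id = id;
        this.expiresAtMillis = expiresAtMillis;
    }
}

/** Caches a non-renewable token and fetches a fresh one once it has expired. */
class NonRenewableTokenCache {
    private final Supplier<ShortLivedToken> fetcher; // e.g. "ask the NameNode again"
    private final LongSupplier clock;                // current time in epoch ms
    private ShortLivedToken current;

    NonRenewableTokenCache(Supplier<ShortLivedToken> fetcher, LongSupplier clock) {
        this.fetcher = fetcher;
        this.clock = clock;
    }

    synchronized ShortLivedToken get() {
        if (current == null || clock.getAsLong() >= current.expiresAtMillis) {
            current = fetcher.get(); // no renew() exists: request a new token instead
        }
        return current;
    }
}
```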

Authentication Token: This post has covered only Delegation Tokens. Hadoop also has the concept of an Authentication Token, which is designed to be an even cheaper and more scalable authentication mechanism. It acts like a cookie between the server and the client. Authentication Tokens are granted by the server and cannot be renewed or used to impersonate others. Unlike Delegation Tokens, they do not need to be stored individually by the server. You should not need to code against Authentication Tokens explicitly.
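The reason a cookie-like token needs no per-token server state is that the server can verify it by recomputing a signature. The sketch below illustrates only that general idea with an HMAC-signed “user and expiry” string; SignedCookie and its format are hypothetical, and this is not Hadoop’s actual Authentication Token format or signing code.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.MessageDigest;
import java.util.Base64;
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;

/** Hypothetical stateless, cookie-like token signed with HMAC-SHA256. */
class SignedCookie {
    private final byte[] secret;

    SignedCookie(byte[] secret) {
        this.secret = secret.clone();
    }

    /** Issues "u=<user>&e=<expiry>&s=<signature>"; nothing is stored on the server. */
    String issue(String user, long expiresAtMillis) {
        String payload = "u=" + user + "&e=" + expiresAtMillis;
        return payload + "&s=" + sign(payload);
    }

    /** Returns the user if the signature matches and the cookie has not expired, else null. */
    String verify(String cookie, long nowMillis) {
        int sigIdx = cookie.lastIndexOf("&s=");
        if (sigIdx < 0) {
            return null;
        }
        String payload = cookie.substring(0, sigIdx);
        byte[] expected = sign(payload).getBytes(StandardCharsets.UTF_8);
        byte[] actual = cookie.substring(sigIdx + 3).getBytes(StandardCharsets.UTF_8);
        if (!MessageDigest.isEqual(expected, actual)) {
            return null; // tampered or signed with a different secret
        }
        int expIdx = payload.lastIndexOf("&e=");
        long expiresAt = Long.parseLong(payload.substring(expIdx + 3));
        return nowMillis < expiresAt ? payload.substring(2, expIdx) : null;
    }

    private String sign(String payload) {
        try {
            Mac mac = Mac.getInstance("HmacSHA256");
            mac.init(new SecretKeySpec(secret, "HmacSHA256"));
            byte[] sig = mac.doFinal(payload.getBytes(StandardCharsets.UTF_8));
            return Base64.getUrlEncoder().withoutPadding().encodeToString(sig);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }
}
```

Since verification only needs the shared secret, any server holding that secret can check the cookie, which is what makes this style of token cheap to scale.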

Conclusion

Delegation Tokens play an important role in the Hadoop ecosystem. You should now understand the purpose of Delegation Tokens, how they are used, and why they are used this way. This knowledge is essential when writing and debugging applications.

Appendices

Appendix A. Configurations at the server-side.

Below is the table of configurations related to Delegation Tokens. See the ‘Delegation Tokens at the Server-side’ section for explanations of these properties.

| Property | Configuration Name in HDFS | Default Value in HDFS (ms) | Configuration Name in KMS | Default Value in KMS (s) |
|---|---|---|---|---|
| Renew Interval | dfs.namenode.delegation.token.renew-interval | 86400000 (1 day) | hadoop.kms.authentication.delegation-token.renew-interval.sec | 86400 (1 day) |
| Max Lifetime | dfs.namenode.delegation.token.max-lifetime | 604800000 (7 days) | hadoop.kms.authentication.delegation-token.max-lifetime.sec | 604800 (7 days) |
| Interval of background removal of expired Tokens | Not configurable | 3600000 (1 hour) | hadoop.kms.authentication.delegation-token.removal-scan-interval.sec | 3600 (1 hour) |
| Master Key Rolling Interval | dfs.namenode.delegation.key.update-interval | 86400000 (1 day) | hadoop.kms.authentication.delegation-token.update-interval.sec | 86400 (1 day) |

Table 4: Configuration Properties and Default Values for HDFS and KMS

Appendix B. Example Code of Authenticating with Delegation Tokens.

One concept to understand before looking at the code below is the UserGroupInformation (UGI) class. UGI is Hadoop’s public API for coding against authentication. It is used in the code examples below, and it also appears in some of the exception stack traces earlier.

getFileStatus is used as an example of using UGIs to access HDFS. For details, see the FileSystem class javadoc.

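```java
import java.security.PrivilegedExceptionAction;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.security.Credentials;
import org.apache.hadoop.security.UserGroupInformation;
import org.apache.hadoop.security.token.Token;

// "user_name", "principal_in_keytab", "path_to_keytab_file" and the file path are placeholders.
Path filePath = new Path("/path/to/file");
UserGroupInformation tokenUGI = UserGroupInformation.createRemoteUser("user_name");
UserGroupInformation kerberosUGI = UserGroupInformation.loginUserFromKeytabAndReturnUGI("principal_in_keytab", "path_to_keytab_file");
Credentials creds = new Credentials();

kerberosUGI.doAs((PrivilegedExceptionAction<Void>) () -> {
  Configuration conf = new Configuration();
  FileSystem fs = FileSystem.get(conf);
  fs.getFileStatus(filePath); // ← kerberosUGI can authenticate via Kerberos

  // Get delegation tokens and add them to the provided credentials; set the renewer to "yarn".
  Token<?>[] newTokens = fs.addDelegationTokens("yarn", creds);

  // Add all the credentials to the UGI object.
  tokenUGI.addCredentials(creds);

  // Alternatively, you can add the tokens to the UGI one by one.
  // This is functionally the same as the above, but lets you log each token acquired.
  for (Token<?> token : newTokens) {
    tokenUGI.addToken(token);
  }
  return null;
});
```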

Note that the addDelegationTokens RPC call must be invoked with Kerberos authentication. Otherwise, it throws an IOException with the message “Delegation Token can be issued only with kerberos or web authentication”.

Now, we can use the acquired delegation token to access HDFS.

```java
tokenUGI.doAs((PrivilegedExceptionAction<Void>) () -> {
  Configuration conf = new Configuration();
  FileSystem fs = FileSystem.get(conf);
  fs.getFileStatus(filePath); // ← tokenUGI can authenticate via Delegation Token
  return null;
});
```