Building a Big Data ETL Pipeline -- Streaming Conversion of JSON Data -- JSON to Avro (Part 1)

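Our company used to collect log data in JSON format, because the raw logs are already JSON. JSON is easy to develop against and the data is very readable; its drawbacks are that it takes up a lot of storage space and queries on the corresponding Hive tables are very slow. We therefore started an investigation aimed at solving these two pain points. The investigation went through many steps, and I will describe them roughly in a series of articles. Since we are still exploring, some of the work is not very thorough; suggestions and discussion are welcome.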

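To make data transfer more efficient, we plan to switch the log generation format to Avro. Avro relies on a schema: whenever Avro data is read, the schema that was used to write it is always available. As a result, each record can be written with no per-value overhead (field names and type information do not have to be stored with every record), which makes serialization faster and the serialized data smaller.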

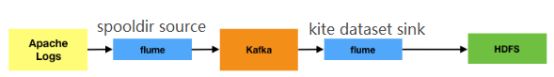

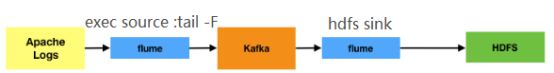
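To make the "no per-value overhead" point concrete, here is a minimal sketch that hand-encodes the example record `{"name": "litao", "age": 18}` (it matches the schema used later in this article) following the Avro binary encoding rules: a `string` is a zig-zag varint length followed by UTF-8 bytes, an `int` is a zig-zag varint, and record fields are written in schema order with no names or type tags. The helper names are hypothetical; this is a toy encoder for illustration, not the Avro library, and it ignores the container-file header where the schema itself is stored once per file.

```python
import json


def zigzag_varint(n: int) -> bytes:
    """Avro int/long encoding: zig-zag map to unsigned, then base-128 varint."""
    z = (n << 1) ^ (n >> 63)
    out = bytearray()
    while True:
        low = z & 0x7F
        z >>= 7
        if z:
            out.append(low | 0x80)  # continuation bit: more bytes follow
        else:
            out.append(low)
            return bytes(out)


def encode_example(name: str, age: int) -> bytes:
    """Hypothetical helper: fields in schema order (string name, int age), no field names."""
    raw = name.encode("utf-8")
    return zigzag_varint(len(raw)) + raw + zigzag_varint(age)


record = {"name": "litao", "age": 18}
json_bytes = json.dumps(record).encode("utf-8")
avro_bytes = encode_example(record["name"], record["age"])

if __name__ == "__main__":
    print(len(json_bytes), json_bytes)  # 28 b'{"name": "litao", "age": 18}'
    print(len(avro_bytes), avro_bytes)  # 7 b'\nlitao$'
```

Most of the saving in this toy comes from not repeating field names in every record. Real-world ratios depend on the data and on any compression codec, so the 28-versus-7 figure is only an illustration, not a benchmark.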

Before changing the log generation format, we first ran a simple test: streaming Avro data through Flume into Kafka and then on to HDFS. The results were as follows:

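1 Using the exec source with `tail -F` breaks the Avro data structure. Not feasible! The exec source reads the command's output line by line, as text, and turns each line into an event, but Avro is a binary format whose bytes can include the newline character (0x0A), so records get cut apart.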

https://stackoverflow.com/questions/21617025/flume-directory-to-avro-avro-to-hdfs-not-valid-avro-after-transfer?rq=1
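The failure can be seen from the encoding itself. With the example schema used in this article, the length prefix of the 5-character string "litao" is zig-zag(5) = 10 = 0x0A, which is exactly a newline byte. The sketch below uses a hand-written toy encoder and decoder (hypothetical helpers, not the Avro library), writes three records back to back, splits the bytes on `b"\n"` the way a line-oriented reader would, and checks that none of the pieces decodes back into a record. Treating `bytes.split` as a stand-in for `tail -F` plus the exec source is a simplification.

```python
def zigzag_varint(n: int) -> bytes:
    """Avro int/long encoding: zig-zag, then base-128 varint."""
    z = (n << 1) ^ (n >> 63)
    out = bytearray()
    while True:
        low = z & 0x7F
        z >>= 7
        if z:
            out.append(low | 0x80)
        else:
            out.append(low)
            return bytes(out)


def read_varint(buf: bytes, pos: int):
    """Decode one zig-zag varint at buf[pos]; return (value, next_pos)."""
    shift = z = 0
    while True:
        if pos >= len(buf):
            raise ValueError("truncated varint")
        b = buf[pos]
        pos += 1
        z |= (b & 0x7F) << shift
        if not b & 0x80:
            return (z >> 1) ^ -(z & 1), pos
        shift += 7


def encode_record(name: str, age: int) -> bytes:
    """Hypothetical toy encoder for {name: string, age: int}."""
    raw = name.encode("utf-8")
    return zigzag_varint(len(raw)) + raw + zigzag_varint(age)


def decode_record(buf: bytes) -> dict:
    """Decode exactly one {name: string, age: int} record or raise ValueError."""
    length, pos = read_varint(buf, 0)
    if length < 0 or pos + length > len(buf):
        raise ValueError(f"string length {length} does not fit in {len(buf)} bytes")
    name = buf[pos:pos + length].decode("utf-8")
    age, pos = read_varint(buf, pos + length)
    if pos != len(buf):
        raise ValueError("trailing bytes")
    return {"name": name, "age": age}


def is_decodable(buf: bytes) -> bool:
    try:
        decode_record(buf)
        return True
    except ValueError:
        return False


# Three records appended back to back, as a binary writer would produce them.
stream = b"".join(encode_record("litao", 18) for _ in range(3))
# What a line-oriented reader would hand on as separate "lines".
pieces = stream.split(b"\n")

if __name__ == "__main__":
    print(stream)  # b'\nlitao$\nlitao$\nlitao$'
    print(pieces)  # [b'', b'litao$', b'litao$', b'litao$']
    print([is_decodable(p) for p in pieces])  # [False, False, False, False]
```

A real Avro container file adds a header and sync markers, but the record bytes are still binary, so the same splitting problem applies. Reading whole files with a deserializer that understands Avro avoids it.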

(1) JSON data:

```
{"name": "litao", "age": 18}
{"name": "
litao", "age": 18}
{"name": "
litao", "age": 18}
```

(2) Schema:

```json
{
  "namespace": "com.howdy",
  "name": "some_schema",
  "type": "record",
  "fields": [ { "name": "name", "type": "string" },
              { "name": "age",  "type": "int" }
  ]
}
```
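Converting a JSON log line to Avro binary with this schema amounts to walking `fields` in order and encoding each value according to its declared type. The sketch below is a hypothetical helper (`json_line_to_avro` is not from any library) that handles only the primitive types `string`, `int`, `long` and `boolean`, rejects values that do not fit the schema (for example an `age` outside the 32-bit range of `int`), and does not cover unions, defaults, or nested types; in production the Apache Avro library would normally do this work.

```python
import json

SCHEMA = json.loads("""
{
  "namespace": "com.howdy",
  "name": "some_schema",
  "type": "record",
  "fields": [ {"name": "name", "type": "string"},
              {"name": "age",  "type": "int"} ]
}
""")

INT_RANGE = (-(2 ** 31), 2 ** 31 - 1)
LONG_RANGE = (-(2 ** 63), 2 ** 63 - 1)


def zigzag_varint(n: int) -> bytes:
    """Avro int/long encoding: zig-zag, then base-128 varint."""
    z = (n << 1) ^ (n >> 63)
    out = bytearray()
    while True:
        low = z & 0x7F
        z >>= 7
        if z:
            out.append(low | 0x80)
        else:
            out.append(low)
            return bytes(out)


def encode_value(value, avro_type) -> bytes:
    """Encode one primitive value, or raise ValueError if it does not match."""
    if avro_type == "string" and isinstance(value, str):
        raw = value.encode("utf-8")
        return zigzag_varint(len(raw)) + raw
    if avro_type == "boolean" and isinstance(value, bool):
        return b"\x01" if value else b"\x00"
    # bool is a subclass of int in Python, so exclude it explicitly.
    if avro_type in ("int", "long") and isinstance(value, int) and not isinstance(value, bool):
        lo, hi = INT_RANGE if avro_type == "int" else LONG_RANGE
        if lo <= value <= hi:
            return zigzag_varint(value)
    raise ValueError(f"{value!r} does not match Avro type {avro_type!r}")


def json_line_to_avro(line: str, schema: dict) -> bytes:
    """Hypothetical helper: one JSON object -> one Avro binary record.
    JSON keys that are not in the schema are ignored."""
    obj = json.loads(line)
    out = bytearray()
    for field in schema["fields"]:
        if field["name"] not in obj:
            raise ValueError(f"missing field {field['name']!r}")
        out += encode_value(obj[field["name"]], field["type"])
    return bytes(out)


if __name__ == "__main__":
    print(json_line_to_avro('{"name": "litao", "age": 18}', SCHEMA))  # b'\nlitao$'
    try:
        json_line_to_avro('{"name": "litao", "age": "18"}', SCHEMA)
    except ValueError as e:
        print(e)  # '18' does not match Avro type 'int'
```

Failing loudly on a type mismatch (here the string `"18"` for an `int` field) is a design choice: it surfaces bad log lines at conversion time instead of when Hive reads the table.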

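(3) Flume configuration files:

To try out Flume's Avro sink, I deliberately configured Flume on both the Nginx log server and the Kafka server. (The Kafka and HDFS configurations could be merged into one agent; I split them only to make testing easier, so configure them according to your own situation.)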

Nginx configuration:

```properties
# Name the components on this agent
a1.sources = r
a1.sinks = k_kafka
a1.channels = c_mem

# Channels info
a1.channels.c_mem.type = memory
a1.channels.c_mem.capacity = 2000
a1.channels.c_mem.transactionCapacity = 300
a1.channels.c_mem.keep-alive = 60

# Sources info
a1.sources.r.type = spooldir
a1.sources.r.channels = c_mem
a1.sources.r.spoolDir = /home/litao/avro_file/
a1.sources.r.fileHeader = true
a1.sources.r.deserializer = avro

# Sinks info
a1.sinks.k_kafka.type = avro
# localhost only works if both agents run on the same host;
# otherwise use the address of the Kafka-side agent
a1.sinks.k_kafka.hostname = localhost
a1.sinks.k_kafka.port = 55555
a1.sinks.k_kafka.channel = c_mem
```

Kafka configuration:

```properties
# Name the components on this agent
a1.sources = r1
a1.channels = c1
a1.sinks = k1

# Sources info
a1.sources.r1.channels = c1
a1.sources.r1.type = avro
a1.sources.r1.bind = localhost
a1.sources.r1.port = 55555

# Channels info
a1.channels.c1.type = memory
a1.channels.c1.capacity = 2000
a1.channels.c1.transactionCapacity = 500
a1.channels.c1.keep-alive = 50

# Sinks info
a1.sinks.k1.channel = c1
a1.sinks.k1.type = org.apache.flume.sink.kafka.KafkaSink
a1.sinks.k1.kafka.bootstrap.servers = kafka1:9093,kafka2:9093,kafka3:9093,kafka4:9093,kafka5:9093,kafka6:9093
a1.sinks.k1.kafka.topic = test_2018-03-14
a1.sinks.k1.kafka.flumeBatchSize = 5
a1.sinks.k1.kafka.producer.acks = 1
```

HDFS configuration:

```properties
# Name the components on this agent
a1.sources = r1
a1.channels = c1
a1.sinks = k1

# Sources info
a1.sources.r1.channels = c1
# Appears to be an in-house source class; the stock Flume one is
# org.apache.flume.source.kafka.KafkaSource
a1.sources.r1.type = com.bigo.flume.source.kafka.KafkaSource
a1.sources.r1.kafka.bootstrap.servers = kafka1:9093,kafka2:9093,kafka3:9093,kafka4:9093,kafka5:9093,kafka6:9093
a1.sources.r1.kafka.topics = test_2018-03-14
a1.sources.r1.kafka.consumer.group.id = test_2018-03-14.conf_flume_group
a1.sources.r1.kafka.consumer.timeout.ms = 100

# Inject the Schema into the header so the AvroEventSerializer can pick it up
a1.sources.r1.interceptors = i1
a1.sources.r1.interceptors.i1.type = static
a1.sources.r1.interceptors.i1.key = flume.avro.schema.url
a1.sources.r1.interceptors.i1.value = hdfs://bigocluster/user/litao/litao.avsc

# Channels info
a1.channels.c1.type = memory
a1.channels.c1.capacity = 5000
a1.channels.c1.transactionCapacity = 1000
a1.channels.c1.keep-alive = 50

# Sinks info
a1.sinks.k1.type = hdfs
a1.sinks.k1.channel = c1
a1.sinks.k1.serializer = org.apache.flume.serialization.AvroEventSerializer$Builder
a1.sinks.k1.hdfs.writeFormat = Text
a1.sinks.k1.hdfs.path = hdfs://bigocluster/flume/bigolive/test_2018-03-14
a1.sinks.k1.hdfs.filePrefix = test.%Y-%m-%d
a1.sinks.k1.hdfs.fileSuffix = .avro
a1.sinks.k1.hdfs.rollCount = 0
a1.sinks.k1.hdfs.idleTimeout = 603
a1.sinks.k1.hdfs.useLocalTimeStamp = false
a1.sinks.k1.hdfs.fileType = DataStream
```

(4) Hive CREATE TABLE statements (either of the two approaches works):

```sql
-- Option 1: reference the schema file by URL.
-- The two SET statements only affect files that Hive itself writes
-- (e.g. INSERT ... SELECT), not the files written by Flume.
SET hive.exec.compress.output=true;
SET avro.output.codec=snappy;

CREATE EXTERNAL TABLE tmp.test_hdfs_litao
ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.avro.AvroSerDe'
WITH SERDEPROPERTIES ('avro.schema.url'='hdfs://bigocluster/user/litao/litao.avsc')
STORED AS
INPUTFORMAT 'org.apache.hadoop.hive.ql.io.avro.AvroContainerInputFormat'
OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.avro.AvroContainerOutputFormat'
LOCATION 'hdfs://bigocluster/flume/bigolive/test_2018-03-14';

-- Option 2: inline the schema as a literal.
-- Both options create tmp.test_hdfs_litao, so run only one of them.
CREATE EXTERNAL TABLE tmp.test_hdfs_litao
ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.avro.AvroSerDe'
STORED AS
INPUTFORMAT 'org.apache.hadoop.hive.ql.io.avro.AvroContainerInputFormat'
OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.avro.AvroContainerOutputFormat'
LOCATION 'hdfs://bigocluster/flume/bigolive/test_2018-03-14'
TBLPROPERTIES (
'avro.schema.literal'='{
  "namespace": "com.howdy",
  "name": "some_schema",
  "type": "record",
  "fields": [ { "name": "name", "type": "string" },
              { "name": "age",  "type": "int" }
  ]
}'
);
```

(5) Verifying the data
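For a quick spot check of a file copied out of HDFS (for example with `hdfs dfs -get`), a reader for the Avro object container format can be written with the standard library alone. This is a hedged sketch, not the check used in this article: it follows the container layout from the Avro specification (magic `Obj\x01`, a metadata map holding `avro.schema` and optionally `avro.codec`, a 16-byte sync marker, then blocks of record count, byte size, records, sync marker), supports only the `null` codec (compressed files need the Avro tools or library), and decodes only flat records of `string`, `int` and `long` fields such as the example schema. `read_container` is a hypothetical name.

```python
import json
import sys


def read_long(buf: bytes, pos: int):
    """Decode one zig-zag varint (Avro int/long); return (value, next_pos)."""
    shift = z = 0
    while True:
        if pos >= len(buf):
            raise ValueError("truncated varint")
        b = buf[pos]
        pos += 1
        z |= (b & 0x7F) << shift
        if not b & 0x80:
            return (z >> 1) ^ -(z & 1), pos
        shift += 7


def read_bytes(buf: bytes, pos: int):
    """Decode Avro bytes/string: varint length, then raw bytes."""
    n, pos = read_long(buf, pos)
    if n < 0 or pos + n > len(buf):
        raise ValueError("truncated bytes/string")
    return buf[pos:pos + n], pos + n


def read_container(data: bytes):
    """Hypothetical helper: return (schema, records) from an uncompressed container file."""
    if data[:4] != b"Obj\x01":
        raise ValueError("not an Avro container file")
    pos, meta = 4, {}
    while True:  # file metadata is an Avro map<bytes>, written in blocks
        count, pos = read_long(data, pos)
        if count == 0:
            break
        if count < 0:  # negative count means a block byte size follows
            count = -count
            _, pos = read_long(data, pos)
        for _ in range(count):
            key, pos = read_bytes(data, pos)
            value, pos = read_bytes(data, pos)
            meta[key.decode("utf-8")] = value
    sync, pos = data[pos:pos + 16], pos + 16
    if len(sync) != 16:
        raise ValueError("truncated header")
    codec = meta.get("avro.codec", b"null")
    if codec != b"null":
        raise NotImplementedError(f"codec {codec!r} is not supported by this sketch")
    schema = json.loads(meta["avro.schema"])
    records = []
    while pos < len(data):
        count, pos = read_long(data, pos)
        size, pos = read_long(data, pos)
        block_end = pos + size
        for _ in range(count):
            rec = {}
            for field in schema["fields"]:
                if field["type"] == "string":
                    raw, pos = read_bytes(data, pos)
                    rec[field["name"]] = raw.decode("utf-8")
                elif field["type"] in ("int", "long"):
                    rec[field["name"]], pos = read_long(data, pos)
                else:
                    raise NotImplementedError(field["type"])
            records.append(rec)
        if pos != block_end or data[pos:pos + 16] != sync:
            raise ValueError("block size or sync marker mismatch")
        pos += 16
    return schema, records


if __name__ == "__main__":
    with open(sys.argv[1], "rb") as f:  # e.g. a .avro file fetched from HDFS
        schema, records = read_container(f.read())
    print(schema["name"], len(records))
    for r in records[:5]:
        print(r)
```

Checking the block size and sync marker after each block is what catches the kind of corruption described in section 1: a file whose bytes were split or rewritten will fail one of these checks instead of silently yielding wrong rows.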

(7) Avro data stored in HDFS

3 Conclusion

If our logs are generated periodically as individual Avro files, Flume's spooldir source can read them and stream them through to HDFS, where they can be queried with Hive.
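The conclusion depends on the logs being produced as complete Avro files. As a hedged sketch of that producer side (standard library only; the helper names, the `null` codec, the file name and the staging-directory approach are all assumptions, not the setup used in this article), the code below converts JSON log lines into one Avro object container file and then moves it into the spooling directory in a single step. That last step matters because the Flume documentation says a file must not be modified after it has been placed in the spooling directory; the spooldir source's `avro` deserializer then emits one event per record in the file.

```python
import json
import os
import tempfile

SCHEMA = {
    "namespace": "com.howdy",
    "name": "some_schema",
    "type": "record",
    "fields": [{"name": "name", "type": "string"}, {"name": "age", "type": "int"}],
}


def zigzag_varint(n: int) -> bytes:
    """Avro int/long encoding: zig-zag, then base-128 varint."""
    z = (n << 1) ^ (n >> 63)
    out = bytearray()
    while True:
        low = z & 0x7F
        z >>= 7
        if z:
            out.append(low | 0x80)
        else:
            out.append(low)
            return bytes(out)


def enc_bytes(raw: bytes) -> bytes:
    return zigzag_varint(len(raw)) + raw


def enc_record(rec: dict, schema: dict) -> bytes:
    """Hypothetical encoder for flat records of string/int/long fields."""
    out = bytearray()
    for field in schema["fields"]:
        value = rec[field["name"]]
        if field["type"] == "string":
            out += enc_bytes(value.encode("utf-8"))
        elif field["type"] in ("int", "long"):
            out += zigzag_varint(value)
        else:
            raise NotImplementedError(field["type"])
    return bytes(out)


def container_bytes(schema: dict, records: list, sync: bytes) -> bytes:
    """One Avro object container file: header plus a single uncompressed block."""
    meta = {"avro.schema": json.dumps(schema).encode("utf-8"), "avro.codec": b"null"}
    out = bytearray(b"Obj\x01")
    out += zigzag_varint(len(meta))  # one map block holding all entries
    for key, value in meta.items():
        out += enc_bytes(key.encode("utf-8")) + enc_bytes(value)
    out += zigzag_varint(0) + sync  # end of metadata map, then sync marker
    if records:
        body = b"".join(enc_record(r, schema) for r in records)
        out += zigzag_varint(len(records)) + zigzag_varint(len(body)) + body + sync
    return bytes(out)


def publish(json_lines, staging_dir: str, spool_dir: str, name: str) -> str:
    """Hypothetical helper: write the file completely, then move it into the spool dir."""
    records = [json.loads(line) for line in json_lines]
    data = container_bytes(SCHEMA, records, os.urandom(16))
    tmp = os.path.join(staging_dir, name)
    with open(tmp, "wb") as f:
        f.write(data)
    dst = os.path.join(spool_dir, name)
    os.replace(tmp, dst)  # atomic only when both dirs are on the same filesystem
    return dst


if __name__ == "__main__":
    # Throwaway directories for the demo; in the configuration above the
    # target would be the spoolDir /home/litao/avro_file/.
    with tempfile.TemporaryDirectory() as staging, tempfile.TemporaryDirectory() as spool:
        lines = ['{"name": "litao", "age": 18}'] * 3
        path = publish(lines, staging, spool, "log-000001.avro")
        print(path, os.path.getsize(path))
```

Writing each file in full elsewhere and renaming it in keeps the spooldir source from ever seeing a half-written file. A real producer would also roll files on a schedule and give each one a unique name, since Flume also rejects reused file names in the spooling directory.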