Syntactic Parsing

Key Terms

| No. | Tag | Meaning |
| --- | --- | --- |
| 1 | IP | Simple clause |
| 2 | NP | Noun phrase |
| 3 | VP | Verb phrase |
| 4 | PP | Prepositional phrase |
| 5 | ADVP | Adverb phrase |
| 6 | ADJP | Adjective phrase |
| 7 | NN | Common noun |
| 8 | NT | Temporal noun |
| 9 | PN | Pronoun |
| 10 | VV | Verb |
| 11 | VA | Predicative adjective |
| 12 | DT | Determiner |
| 13 | VI | Intransitive verb |
| 14 | VT | Transitive verb |
| 15 | IN | Preposition |

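Axioms

- A sentence contains exactly one constituent, called the root, that does not depend on any other constituent

- Every other constituent depends directly on some constituent

- No constituent may depend on two or more constituents

- If constituent A depends directly on constituent B, and constituent C lies between A and B in the sentence, then C depends directly on A, on B, or on some constituent between A and B

- Constituents on the left and right sides of a head constituent have no relation to each other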
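The conditions above can be checked mechanically once a parse is stored as a list of head indices. The following is a minimal sketch, not taken from the original post: the function name `check_dependency_tree` and the head-list representation are assumptions, and the "no crossing arcs" test is only one common reading of the fourth condition. Storing one head per token already enforces the single-head condition.

```python
def check_dependency_tree(heads):
    """heads[i] is the 1-based index of the head of token i+1; 0 marks the root.

    Returns a list of violated conditions (empty when the tree is well formed).
    """
    n = len(heads)
    problems = []

    # Every head index must point inside the sentence (or be 0 for the root).
    if any(h < 0 or h > n for h in heads):
        return ["head index out of range"]

    # Exactly one token may be the root.
    roots = [i for i, h in enumerate(heads, start=1) if h == 0]
    if len(roots) != 1:
        problems.append("expected exactly one root, found %d" % len(roots))

    # Following heads upwards from any token must reach the root, not a cycle.
    for tok in range(1, n + 1):
        seen, cur = set(), tok
        while cur != 0:
            if cur in seen:
                problems.append("token %d leads into a cycle" % tok)
                break
            seen.add(cur)
            cur = heads[cur - 1]

    # Arcs must not cross each other (the root arc is ignored here).
    arcs = [(min(d, h), max(d, h)) for d, h in enumerate(heads, start=1) if h != 0]
    for a, b in arcs:
        for c, d in arcs:
            if a < c < b < d:
                problems.append("arcs (%d,%d) and (%d,%d) cross" % (a, b, c, d))
    return problems


# Heads of a seven-token sentence whose root is token 2.
print(check_dependency_tree([2, 0, 7, 3, 3, 7, 2]))
```

An empty list means none of the checked conditions is violated.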

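PCFG: Probabilistic Context-Free Grammar

Definition

PCFG stands for probabilistic context-free grammar.

Structure

$G=(N,\Sigma,R,S)$, where $N$ is the set of non-terminal symbols, $\Sigma$ the set of terminal symbols, $R$ the set of rewrite rules, and $S$ the start symbol.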

Example

$S \rightarrow NP,VP$

$\rightarrow (DT,NN)(VT,NP)$

$\rightarrow (DT,NN)(VT,(DT,NN))$

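With probabilities

The grammar is still $G=(N,\Sigma,R,S)$, and every rule $a \rightarrow b$ in $R$ carries a probability

$q(a \rightarrow b)$

Here $q$ is the probability of rewriting $a$ as $b$. For a fixed $a$, the probabilities of all rules with $a$ on the left-hand side sum to 1.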
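Under a PCFG, the probability of a whole parse tree is the product of the probabilities $q$ of the rules used in it. The sketch below illustrates this on a toy grammar; the grammar values, the tuple-based tree encoding, and the name `tree_prob` are assumptions made for illustration only.

```python
import math

# Hypothetical toy grammar: q[lhs][rhs] is the probability of the rule lhs -> rhs.
q = {
    'S':  {('NP', 'VP'): 1.0},
    'NP': {('DT', 'NN'): 1.0},
    'VP': {('Vt', 'NP'): 1.0},
    'DT': {('the',): 1.0},
    'Vt': {('saw',): 1.0},
    'NN': {('man',): 0.4, ('dog',): 0.6},
}

# For each left-hand side the rule probabilities must sum to 1.
for lhs, rules in q.items():
    assert math.isclose(sum(rules.values()), 1.0), lhs


def tree_prob(tree, q):
    """Multiply the probabilities of all rules used in the tree.

    A leaf is (tag, word); an inner node is (label, child, child, ...).
    """
    label, *children = tree
    if len(children) == 1 and isinstance(children[0], str):
        return q[label][(children[0],)]          # lexical rule tag -> word
    rhs = tuple(child[0] for child in children)  # labels of the children
    prob = q[label][rhs]
    for child in children:
        prob *= tree_prob(child, q)
    return prob


tree = ('S',
        ('NP', ('DT', 'the'), ('NN', 'man')),
        ('VP', ('Vt', 'saw'),
               ('NP', ('DT', 'the'), ('NN', 'dog'))))
p = tree_prob(tree, q)
print(p)  # about 0.24: only NN -> man (0.4) and NN -> dog (0.6) differ from 1
```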

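Where the probabilities come from

The rule probabilities are estimated from a corpus.

The parse tree is then built from these rule probabilities together with the grammar rules.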
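The post does not say how the probabilities are estimated from the corpus. One common choice is relative-frequency (maximum-likelihood) estimation: count how often each rule occurs in the trees of the corpus and divide by the count of its left-hand side. The sketch below assumes the rules have already been read off the trees; `estimate_rule_probs` and the tiny rule list are hypothetical.

```python
from collections import Counter, defaultdict


def estimate_rule_probs(observed_rules):
    """Relative-frequency estimate: q(a -> b) = count(a -> b) / count(a)."""
    rule_counts = Counter(observed_rules)
    lhs_counts = Counter(lhs for lhs, _ in observed_rules)
    probs = defaultdict(dict)
    for (lhs, rhs), count in rule_counts.items():
        probs[lhs][rhs] = count / lhs_counts[lhs]
    return dict(probs)


# Hypothetical rule occurrences collected from a small set of parse trees.
observed = (
    [('S', ('NP', 'VP'))] * 3
    + [('NP', ('DT', 'NN'))] * 4
    + [('NP', ('NP', 'PP'))] * 1
)
rule_probs = estimate_rule_probs(observed)
print(rule_probs['NP'])  # NP -> DT NN gets 4/5, NP -> NP PP gets 1/5
```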

Building the tree structure (CKY)

```python
non_terminal = {'S', 'NP', 'VP', 'PP', 'DT', 'Vi', 'Vt', 'NN', 'IN'}
start_symbol = 'S'
terminal = {'sleeps', 'saw', 'man', 'woman', 'dog', 'telescope', 'the', 'with', 'in'}
rules_prob = {
    'S': {('NP', 'VP'): 1.0},
    'VP': {('Vt', 'NP'): 0.8, ('VP', 'PP'): 0.2},
    'NP': {('DT', 'NN'): 0.8, ('NP', 'PP'): 0.2},
    'PP': {('IN', 'NP'): 1.0},
    'Vi': {('sleeps',): 1.0},
    'Vt': {('saw',): 1.0},
    'NN': {('man',): 0.1, ('woman',): 0.1, ('telescope',): 0.3, ('dog',): 0.5},
    'DT': {('the',): 1.0},
    'IN': {('with',): 0.6, ('in',): 0.4},
}


# Parser class
class PCFG:
    def __init__(self, non_terminal, terminal, rules_prob, start_symbol):
        self.non_terminal = non_terminal
        self.terminal = terminal
        self.rules_prob = rules_prob
        self.start_symbol = start_symbol

    def parse_sentence(self, sentence):
        sents = sentence.split()
        n = len(sents)
        if n == 0:
            print('empty sentence')
            return
        # best_path[i][j][X]: best probability and back-pointer for X over words i..j
        best_path = [[{} for _ in range(n)] for _ in range(n)]

        # Initialization: spans of length 1 use the lexical rules X -> word
        for i in range(n):
            for x in self.non_terminal:
                best_path[i][i][x] = {}
                if (sents[i],) in self.rules_prob[x]:
                    best_path[i][i][x]['prob'] = self.rules_prob[x][(sents[i],)]
                    best_path[i][i][x]['path'] = {'split': None, 'rule': sents[i]}
                else:
                    best_path[i][i][x]['prob'] = 0
                    best_path[i][i][x]['path'] = {'split': None, 'rule': None}

        # Fill longer spans bottom-up; l is the span length minus one
        for l in range(1, n):
            for i in range(n - l):
                j = i + l
                for x in self.non_terminal:
                    temp_best_x = {'prob': 0, 'path': None}
                    for key, value in self.rules_prob[x].items():
                        # Skip lexical rules (continue rather than break, so that
                        # binary rules listed after a lexical rule are still tried)
                        if key[0] not in self.non_terminal:
                            continue
                        for s in range(i, j):
                            temp_prob = (value
                                         * best_path[i][s][key[0]]['prob']
                                         * best_path[s + 1][j][key[1]]['prob'])
                            if temp_prob > temp_best_x['prob']:
                                temp_best_x['prob'] = temp_prob
                                temp_best_x['path'] = {'split': s, 'rule': key}
                    best_path[i][j][x] = temp_best_x

        self.best_path = best_path
        best = best_path[0][n - 1][self.start_symbol]
        if best['prob'] == 0:
            # No derivation from the start symbol covers the whole sentence
            print('no parse found')
            return
        self._parse_result(0, n - 1, self.start_symbol)
        print('prob=', best['prob'])

    def _parse_result(self, left_idx, right_idx, root, ind=0):
        """Print the best tree by following the stored back-pointers."""
        node = self.best_path[left_idx][right_idx][root]
        if node['path']['split'] is not None:
            # Fixed typo: the original read `self,rules_prob` instead of `self.rules_prob`
            print('\t' * ind, (root, self.rules_prob[root].get(node['path']['rule'])))
            self._parse_result(left_idx, node['path']['split'],
                               node['path']['rule'][0], ind + 1)
            self._parse_result(node['path']['split'] + 1, right_idx,
                               node['path']['rule'][1], ind + 1)
        else:
            print('\t' * ind, (root, self.rules_prob[root].get((node['path']['rule'],))))
            print('----->', node['path']['rule'])


# Test
pcfg = PCFG(non_terminal, terminal, rules_prob, start_symbol)
sentence = "the man saw the dog with the telescope"
pcfg.parse_sentence(sentence)
```
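The dynamic program in `parse_sentence` can be summarized as a recurrence. The notation $\pi(i,j,X)$ is an assumption introduced here (it corresponds to `best_path[i][j][X]['prob']` in the code), and $w_i$ denotes the $i$-th word of the sentence.

$$
\pi(i,i,X) = q(X \rightarrow w_i)
$$

$$
\pi(i,j,X) = \max_{\substack{X \rightarrow Y\,Z \,\in\, R \\ i \le s < j}} q(X \rightarrow Y\,Z)\;\pi(i,s,Y)\;\pi(s+1,j,Z)
$$

The probability printed at the end is $\pi(0,n-1,S)$ for a sentence of $n$ words, and the tree is recovered from the rule and split point $s$ that achieved each maximum.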

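Dependency Parsing

Annotation

| Index of the word in the sentence | Word or punctuation mark | Lemma / stem | POS (coarse-grained) | POS (fine-grained) | Syntactic features | Head of the word | Dependency relation to the head |
| --- | --- | --- | --- | --- | --- | --- | --- |
| 1 | 坚决 | 坚决 | a | ad | _ | 2 | manner (方式) |
| 2 | 惩治 | 惩治 | v | v | _ | 0 | core constituent (核心成分) |
| 3 | 贪污 | 贪污 | v | v | _ | 7 | modifier (限定) |
| 4 | 贿赂 | 贿赂 | n | n | _ | 3 | conjunction dependency (连接依存) |
| 5 | 等 | 等 | u | udeng | _ | 3 | conjunction dependency (连接依存) |
| 6 | 经济 | 经济 | n | n | _ | 7 | modifier (限定) |
| 7 | 犯罪 | 犯罪 | v | vn | _ | 2 | patient (受事) |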
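Annotation in this column layout is usually stored one token per line with tab-separated fields. The sketch below reads such lines into dictionaries; the function name `read_conll_sentence` and the dictionary keys are assumptions, and the sample rows repeat the example above with the relation column left in the original Chinese.

```python
def read_conll_sentence(lines):
    """Parse tab-separated token lines (8 columns, as in the table above)."""
    tokens = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # a blank line only separates sentences
        cols = line.split('\t')
        tokens.append({
            'id': int(cols[0]),      # position in the sentence, starting at 1
            'form': cols[1],         # word or punctuation mark
            'lemma': cols[2],
            'cpos': cols[3],         # coarse-grained part of speech
            'pos': cols[4],          # fine-grained part of speech
            'feats': cols[5],
            'head': int(cols[6]),    # 0 means the token is the root
            'deprel': cols[7],
        })
    return tokens


sample = [
    "1\t坚决\t坚决\ta\tad\t_\t2\t方式",
    "2\t惩治\t惩治\tv\tv\t_\t0\t核心成分",
    "3\t贪污\t贪污\tv\tv\t_\t7\t限定",
    "4\t贿赂\t贿赂\tn\tn\t_\t3\t连接依存",
    "5\t等\t等\tu\tudeng\t_\t3\t连接依存",
    "6\t经济\t经济\tn\tn\t_\t7\t限定",
    "7\t犯罪\t犯罪\tv\tvn\t_\t2\t受事",
]
tokens = read_conll_sentence(sample)
heads = [t['head'] for t in tokens]
root = next(t['form'] for t in tokens if t['head'] == 0)
print(heads, root)
```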

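Dependency relation types

| Relation type | Tag | Description | Example |
| --- | --- | --- | --- |
| Subject-verb | SBV | subject-verb | 我送她一束花, "I gave her a bunch of flowers" (我 <- 送) |
| Verb-object | VOB | direct object | 我送她一束花 (送 -> 花) |
| Indirect object | IOB | indirect object | 我送她一束花 (送 -> 她) |
| Fronted object | FOB | fronted object | 他什么书都读, "He reads every kind of book" (书 <- 读) |
| Pivotal construction | DBL | double | 她请我吃饭, "She invited me to dinner" (请 -> 我) |
| Attribute | ATT | attribute | 红苹果, "red apple" (红 <- 苹果) |
| Adverbial | ADV | adverbial | 非常美丽, "very beautiful" (非常 <- 美丽) |
| Verb-complement | CMP | complement | 做完了作业, "finished the homework" (做 -> 完) |
| Coordination | COO | coordinate | 大山和大海, "mountains and seas" (大山 -> 大海) |
| Preposition-object | POB | preposition-object | 在贸易区内, "within the trade zone" (在 -> 内) |
| Left adjunct | LAD | left adjunct | 大山和大海 (和 <- 大海) |
| Right adjunct | RAD | right adjunct | 孩子们, "children" (孩子 -> 们) |
| Independent structure | IS | independent structure | Two clauses that are structurally independent of each other |
| Head | HED | head | The core of the sentence |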

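Label definition

Dependency parsing can essentially be cast as a classification problem, so solving it as a sequence labeling task is also feasible. Because there are too many dependency relation types, using them directly as class labels gives poor results, so the labels have to be redesigned.

According to dependency grammar, the dependency between two words is determined mainly by two factors, direction and distance, so the class label can be defined as follows:

[+|-]dPOS, where [+|-] gives the direction of the head relative to the current word in the sentence; POS is the part-of-speech category of the head; and d is the number of words with the same part of speech as the head, counted from the current word up to the head, that is, the distance. In the examples below the label is written as d_POS and the sign is left out when the head lies to the right. The first five rows come from the phrase 世界第八大奇迹 and the last five from 世界最先进的清真寺:

| Word | POS (coarse-grained) | POS (fine-grained) | Label |
| --- | --- | --- | --- |
| 世界 | n | n | 1_n |
| 第 | m | m | 1_a |
| 八 | m | m | -1_m |
| 大 | a | a | 1_n |
| 奇迹 | n | n | 1_v |
| 世界 | n | n | 1_n |
| 最 | d | d | 1_a |
| 先进 | a | a | 1_n |
| 的 | u | ude1 | -1_a |
| 清真寺 | n | n | 1_v |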
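The label scheme can be turned into a pair of conversion functions: one that derives labels from known heads (for building training data) and one that recovers heads from predicted labels. This is a minimal sketch under stated assumptions: the function names are hypothetical, coarse-grained POS tags are used for counting, and the `0_ROOT` label for the root word is invented here because the post does not say how the root is labeled.

```python
def encode_labels(pos_tags, heads):
    """Turn 1-based head indices into d_POS labels (0 marks the root)."""
    labels = []
    for i, head in enumerate(heads):
        if head == 0:
            labels.append('0_ROOT')  # hypothetical label for the root word
            continue
        h = head - 1                      # 0-based position of the head
        step = 1 if h > i else -1         # +1: head to the right, -1: to the left
        head_pos = pos_tags[h]
        # Count words carrying the head's POS from the neighbour of i up to the head.
        d = sum(1 for k in range(i + step, h + step, step)
                if pos_tags[k] == head_pos)
        labels.append('%d_%s' % (step * d, head_pos))
    return labels


def decode_labels(pos_tags, labels):
    """Recover 1-based head indices; None if a label points outside the sentence."""
    heads = []
    for i, label in enumerate(labels):
        d_str, pos = label.split('_', 1)
        d = int(d_str)
        if d == 0:
            heads.append(0)
            continue
        step = 1 if d > 0 else -1
        remaining, k, head = abs(d), i + step, None
        while 0 <= k < len(pos_tags):
            if pos_tags[k] == pos:
                remaining -= 1
                if remaining == 0:
                    head = k + 1
                    break
            k += step
        heads.append(head)
    return heads


# Coarse POS tags and heads of a seven-word sentence whose root is word 2.
pos_tags = ['a', 'v', 'v', 'n', 'u', 'n', 'v']
gold_heads = [2, 0, 7, 3, 3, 7, 2]
labels = encode_labels(pos_tags, gold_heads)
print(labels)
print(decode_labels(pos_tags, labels) == gold_heads)
```

The last word shows why d is needed: its head is the second verb to its left, so it receives the label `-2_v` rather than `-1_v`.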

Configuration file

```python
# -*- coding: utf-8 -*-
"""
CONFIG
------
Wrapper around the configuration
"""

config_instance = None


class Config:

    def __init__(self):
        self.config_dict = {
            'depparser': {
                'train_path': 'train.conll',
                'train_process_path': 'train.data',
                'test_path': 'dev.conll',
                'test_process_path': 'dev.data'
            },
            'model': {
                'algorithm': 'lbfgs',
                'c1': 0.1,
                'c2': 0.1,
                'max_iterations': 100,
                'model_path': '{}.pkl'
            }
        }

    def get(self, section_name, arg_name):
        return self.config_dict[section_name][arg_name]


def get_config():
    """Return the shared Config instance, creating it on first use."""
    global config_instance
    if not config_instance:
        config_instance = Config()
    return config_instance
```

Corpus

```python
# get_config() is the function defined in the configuration file above.
__corpus = None


class Corpus:

    _config = get_config()

    @staticmethod
    def process_sentence(lines):
        """Group tab-separated lines into sentences; a blank line ends a sentence."""
        sentence = []
        sentences = []
        for line in lines:
            if not line.strip():
                if sentence:  # ignore repeated blank lines
                    sentences.append(sentence)
                sentence = []
            else:
                sentence.append(line.strip().split('\t'))
        if sentence:  # keep the last sentence if the file has no trailing blank line
            sentences.append(sentence)
        return sentences

    @classmethod
    def generator(cls, train=True):
        """Return (features, tags) for the training or the test sentences."""
        sentences = cls.train_sentences if train else cls.test_sentences
        return cls.extract_feature(sentences)

    @classmethod
    def extract_feature(cls, sentences):
        """Extract word/POS features in a window of one token on each side."""
        features, tags = [], []
        for sent in sentences:
            feature_list, tag_list = [], []
            last = len(sent) - 1
            for i in range(len(sent)):
                feature = {
                    'w0': sent[i][0],
                    'p0': sent[i][1],
                    'w-1': sent[i - 1][0] if i != 0 else 'BOS',
                    'w+1': sent[i + 1][0] if i != last else 'EOS',
                    'p-1': sent[i - 1][1] if i != 0 else 'un',
                    'p+1': sent[i + 1][1] if i != last else 'un',
                }
                feature['w-1:w0'] = feature['w-1'] + feature['w0']
                feature['w0:w+1'] = feature['w0'] + feature['w+1']
                feature['p-1:p0'] = feature['p-1'] + feature['p0']
                feature['p0:p+1'] = feature['p0'] + feature['p+1']
                feature['p-1:w0'] = feature['p-1'] + feature['w0']
                feature['w0:p+1'] = feature['w0'] + feature['p+1']
                feature_list.append(feature)
                tag_list.append(sent[i][-1])  # the label is the last column
            features.append(feature_list)
            tags.append(tag_list)
        return features, tags

    @classmethod
    def initialize(cls):
        """Load the preprocessed training and test files named in the config."""
        train_process_path = cls._config.get('depparser', 'train_process_path')
        test_process_path = cls._config.get('depparser', 'test_process_path')
        train_lines = cls.read_corpus_from_file(train_process_path)
        test_lines = cls.read_corpus_from_file(test_process_path)
        cls.train_sentences = cls.process_sentence(train_lines)
        cls.test_sentences = cls.process_sentence(test_lines)

    @classmethod
    def read_corpus_from_file(cls, file_path):
        # Explicit UTF-8 so that Chinese text is read the same way on every platform
        with open(file_path, 'r', encoding='utf-8') as f:
            return f.readlines()

    @classmethod
    def write_corpus_to_file(cls, data, file_path):
        with open(file_path, 'w', encoding='utf-8') as f:
            f.write(data)


Corpus.initialize()


def get_corpus():
    """Return the shared Corpus class."""
    global __corpus
    if not __corpus:
        __corpus = Corpus
    return __corpus
```

Model

```python
import joblib  # sklearn.externals.joblib is gone from recent scikit-learn releases
import sklearn_crfsuite
from sklearn_crfsuite import metrics

# get_corpus() and get_config() are the functions defined in the sections above.


class DepParser:

    def __init__(self):
        self.corpus = get_corpus()
        self.corpus.initialize()
        self.config = get_config()
        self.model = None

    def initialize_model(self):
        """Create the CRF with the hyperparameters from the config."""
        algorithm = self.config.get('model', 'algorithm')
        c1 = float(self.config.get('model', 'c1'))
        c2 = float(self.config.get('model', 'c2'))
        max_iterations = int(self.config.get('model', 'max_iterations'))
        self.model = sklearn_crfsuite.CRF(algorithm=algorithm, c1=c1, c2=c2,
                                          max_iterations=max_iterations,
                                          all_possible_transitions=True)

    def train(self):
        self.initialize_model()
        x_train, y_train = self.corpus.generator()
        # Only the first 500 training sentences are used
        self.model.fit(x_train[:500], y_train[:500])
        labels = list(self.model.classes_)
        x_test, y_test = self.corpus.generator(train=False)
        y_predict = self.model.predict(x_test)
        # The original computed this score but discarded it; print it instead
        f1 = metrics.flat_f1_score(y_test, y_predict, average='weighted', labels=labels)
        print('weighted F1:', f1)
        sorted_labels = sorted(labels, key=lambda name: (name[1:], name[0]))
        print(metrics.flat_classification_report(y_test, y_predict,
                                                 labels=sorted_labels, digits=3))
        self.save_model()

    def predict(self, sentences):
        """Predict labels for already tokenized and POS-tagged sentences."""
        self.load_model()
        features, _ = self.corpus.extract_feature(sentences)
        return self.model.predict(features)

    def load_model(self, name='model'):
        """Load the model. predict() calls this method, but the original listing
        does not include it; this version simply mirrors save_model()."""
        model_path = self.config.get('model', 'model_path').format(name)
        self.model = joblib.load(model_path)

    def save_model(self, name='model'):
        """Save the model."""
        model_path = self.config.get('model', 'model_path').format(name)
        joblib.dump(self.model, model_path)
```


StanfordCoreNLP

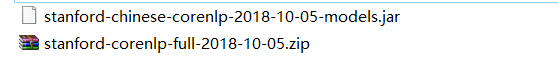

- Download the required files

Example:

- Prepare the files

- Unzip stanford-corenlp-full-2018-10-05.zip

- Put stanford-chinese-corenlp-2018-10-05-models.jar into the directory unpacked in the previous step

Code

```python
from stanfordcorenlp import StanfordCoreNLP

# Path to the unpacked CoreNLP directory; lang='zh' selects the Chinese models
nlp = StanfordCoreNLP(r'D:\Stanford\stanford-corenlp-full-2018-10-05', lang='zh')

sentence = '清华大学位于北京'
print('Tokenize:', nlp.word_tokenize(sentence))
print('Part of Speech:', nlp.pos_tag(sentence))
print('Named Entities:', nlp.ner(sentence))
print('Constituency Parsing:', nlp.parse(sentence))  # constituency (syntax) tree
print('Dependency Parsing:', nlp.dependency_parse(sentence))  # dependency parse

nlp.close()  # shut down the background CoreNLP server to free its memory
```