[Big Data in the Cloud, in Practice] Big-Data Misconceptions and an Analysis of the Big-Data Processing Steps

1. Background

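First, thanks to CSDN, the organizer of this blog contest, and to everyone who voted for me in the preliminary round; your support is what keeps Garvin going. This article is based on three months of competing in this year's Tmall big data competition. As a student, I would hardly have had access to a complete cloud environment for analyzing and experimenting with terabytes of data without this competition. Below are a few lessons I learned. I offer them as a starting point for discussion, and I hope they give you some ideas about processing big data in the cloud.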

2. Some misconceptions about big data and the cloud

(1) Misconception 1: the cloud's computing power is unlimited, so efficiency doesn't matter?

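Cloud computing is, at heart, distributed computing: the workload is split up so that n different servers complete it together. Put simply, the cloud has far more computing power than our PCs, but that power still comes at a computational cost. When processing terabytes of data we should still pay attention to efficiency, or we end up waiting a very long time.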

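P.S. When I started the competition, I did not use any sampling and trained on the entire training set. This tied up a lot of resources, and a single run often took 24 hours.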

(2) Misconception 2: the more data, the better the predictions?

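Big-data computing, as the name suggests, takes a large volume of data and uses algorithms to find specific rules and relationships between that data and the target variable. This easily leads to a misconception: that more data always gives more accurate results. In fact, for recommender systems built with algorithms such as random forests or GBRT, a dataset of a few million rows often gives better results than one with tens of millions. In my experience, an oversized, unfiltered training set makes the model prone to overfitting, because it carries more noise and a more skewed positive/negative ratio for the model to fit.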

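So when you face a truly large dataset, don't blindly train on all of it. Careful sampling and adjusting the ratio of positive to negative samples may well give better results.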
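One common way to adjust the positive/negative ratio is to keep every positive sample and randomly downsample the negatives. The following is a minimal sketch. It assumes binary labels, where 1 marks a positive (for example, a purchase) and any other value marks a negative. The function name `downsample_negatives` and the `neg_per_pos` parameter are hypothetical, and the right ratio for a given dataset has to be found by experiment.

```python
import random


def downsample_negatives(samples, labels, neg_per_pos, seed=None):
    """Keep every positive (label 1) and a random subset of the negatives.

    neg_per_pos: target number of negatives per positive, e.g. 10 for 1:10.
    Returns (samples, labels) in shuffled order.
    """
    rng = random.Random(seed)
    pos = [i for i, y in enumerate(labels) if y == 1]
    neg = [i for i, y in enumerate(labels) if y != 1]
    # Never ask for more negatives than actually exist.
    n_neg = min(len(neg), int(len(pos) * neg_per_pos))
    keep = pos + rng.sample(neg, n_neg)
    rng.shuffle(keep)
    return [samples[i] for i in keep], [labels[i] for i in keep]
```

Passing a fixed `seed` makes the sample reproducible, which helps when you compare parameter settings on the same training subset.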

(3) Misconception 3: an algorithm's parameters are fixed?

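When processing big data, we usually rely on fairly mature algorithms. Common classification algorithms include SVM (support vector machines), RF (random forests) and LR (logistic regression). Using them brings a familiar headache: parameter tuning. Whether you find good parameters has a decisive effect on the final result.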

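As for parameters, I think there is no best setting, only the most suitable one. Don't put blind faith in papers or online comments. If your training set is unique, take the time to carefully tune the parameters that suit it best. Many factors affect which parameters work best for an algorithm, including the number of samples and the ratio of positive to negative samples.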
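Finding the most suitable parameters usually means searching a grid of candidate values and scoring each combination on held-out data. The following is a minimal sketch. It assumes you supply an `evaluate` callable (hypothetical) that trains your model with the given parameters and returns a validation score, where higher is better. The parameter names `n_trees` and `max_depth` below are illustrative only and are not the arguments of any particular library.

```python
from itertools import product


def grid_search(evaluate, param_grid):
    """Try every combination in param_grid; return (best_params, best_score).

    evaluate:   callable taking the parameters as keyword arguments and
                returning a validation score (higher is better).
    param_grid: dict mapping each parameter name to a list of candidate values.
    """
    names = sorted(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(**params)
        if score > best_score:  # keep the first combination with the top score
            best_params, best_score = params, score
    return best_params, best_score


# Hypothetical stand-in for "train on the sample, score on a validation set".
def toy_score(max_depth, n_trees):
    return -(n_trees - 100) ** 2 - (max_depth - 6) ** 2


print(grid_search(toy_score, {"n_trees": [50, 100, 200], "max_depth": [4, 6, 8]}))
# → ({'max_depth': 6, 'n_trees': 100}, 0)
```

The number of runs grows multiplicatively with the number of parameters, so on TB-scale data it is worth running the search on a sample. You should also rerun it whenever the sample size or the positive/negative ratio changes.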

(4) Misconception 4: the more features, the better?

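What matters in a feature is its quality, not the number of features. A good feature can affect the result far more than an ordinary one, perhaps 100 times more, so never combine the dataset's fields arbitrarily. Poor features can sometimes even hurt the result. There are many ways to measure how much a feature affects the result. Below is one based on information entropy (information gain):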

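```python
from collections import Counter
from math import log


# Minimal helper implementations (assumed; adapt them to your data format).
def calcShannonEnt(dataSet):
    # Shannon entropy of the class labels stored in the last column.
    numEntries = len(dataSet)
    labelCounts = Counter(example[-1] for example in dataSet)
    return -sum(c / numEntries * log(c / numEntries, 2) for c in labelCounts.values())


def splitDataSet(dataSet, axis, value):
    # Rows whose feature `axis` equals `value`, with that feature column removed.
    return [example[:axis] + example[axis + 1:]
            for example in dataSet if example[axis] == value]


def chooseBestFeatureToSplit(dataSet):
    numFeatures = len(dataSet[0]) - 1  # the last column is used for the labels
    baseEntropy = calcShannonEnt(dataSet)
    bestInfoGain, bestFeature = 0.0, -1
    for i in range(numFeatures):  # iterate over all the features
        featList = [example[i] for example in dataSet]  # every value of feature i
        uniqueVals = set(featList)  # the distinct values of feature i
        newEntropy = 0.0
        for value in uniqueVals:
            subDataSet = splitDataSet(dataSet, i, value)
            prob = len(subDataSet) / float(len(dataSet))
            newEntropy += prob * calcShannonEnt(subDataSet)
        infoGain = baseEntropy - newEntropy  # info gain = reduction in entropy
        if infoGain > bestInfoGain:  # compare this to the best gain so far
            bestInfoGain = infoGain
            bestFeature = i
    return bestFeature  # index of the most informative feature (-1 if none helps)
```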

3. Steps for processing big data in the cloud

(1) First, get to know your integrated environment

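Big-data processing has to run on a cloud environment. I think the first step is to learn the basics of your own integrated environment, whether that is Spark, Hadoop or ODPS, because each has its own characteristics. For example, ODPS, which I used, lets you process tables by running SQL statements from the command line. It also lets you package a MapReduce program as a jar file and run it in the cloud.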
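To illustrate the MapReduce style mentioned above, here is a local, pure-Python simulation of the map, shuffle and reduce phases that counts actions per (user, brand) pair. This is not the ODPS API; as noted above, ODPS MapReduce jobs are packaged as jar files. The record layout `(user_id, brand_id, action)` is a hypothetical example. In SQL, the same aggregation would be roughly `SELECT user_id, brand_id, COUNT(*) FROM user_log GROUP BY user_id, brand_id`, where `user_log` is also a hypothetical table name.

```python
from collections import defaultdict


def map_phase(record):
    # Emit (key, 1) for one log record; the key is the (user, brand) pair.
    user_id, brand_id, _action = record
    yield (user_id, brand_id), 1


def shuffle_phase(mapped_pairs):
    # Group all emitted values by key, as the framework does between phases.
    groups = defaultdict(list)
    for key, value in mapped_pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    # Combine every value that shares a key into a single count.
    return key, sum(values)


def run_mapreduce(records):
    mapped = (pair for record in records for pair in map_phase(record))
    groups = shuffle_phase(mapped)
    return dict(reduce_phase(key, values) for key, values in groups.items())
```

On a real cluster, each map and reduce call runs on whichever worker holds that slice of the data. This is why the same job scales from a laptop-sized sample to terabytes.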

(2) Denoising the dataset

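A fairly large dataset is bound to contain some noise that interferes with our computations, and removing it helps keep the results accurate. There are many denoising methods. A common one is to filter out values that fall outside [mean - 3 × standard deviation, mean + 3 × standard deviation]. The code below implements this method; call the DenoisMat function to apply it.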

```python
import math


def width(lst):
    # Number of columns, taken from the first row.
    return len(lst[0])


def GetAverage(mat):
    # Per-column mean of a list of numeric rows.
    n = len(mat)
    m = width(mat)
    num = [0.0] * m
    for j in range(m):
        for row in mat:
            num[j] += row[j]
        num[j] /= n
    return num


def GetVar(average, mat):
    # Per-column (population) variance, given the per-column means.
    n = len(mat)
    m = width(mat)
    num = [0.0] * m
    for j in range(m):
        for row in mat:
            num[j] += (row[j] - average[j]) ** 2
        num[j] /= n
    return num


def DenoisMat(mat):
    # Keep only the rows whose every value lies in
    # [mean - 3 * std, mean + 3 * std] for its column (std = sqrt(variance)).
    average = GetAverage(mat)
    std = [math.sqrt(v) for v in GetVar(average, mat)]
    lower = [a - 3 * s for a, s in zip(average, std)]
    upper = [a + 3 * s for a, s in zip(average, std)]
    m = width(mat)
    denoisMat = []
    for row in mat:
        if all(lower[j] <= row[j] <= upper[j] for j in range(m)):
            denoisMat.append(row)
    return denoisMat
```

(3) Sampling the training set

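As mentioned above, sampling correctly can make the results more accurate. Common sampling methods are random sampling, systematic sampling and stratified sampling.

Random sampling: randomly draw the required number of samples by their index.

Systematic sampling: first divide the population's observation units, in some fixed order, into n parts. Then randomly pick the k-th unit in the first part, and take one unit from each following part at the same interval to form the sample.

Stratified sampling: first divide the population into several categories (strata) by a characteristic that strongly affects the observed metric. Then randomly draw a certain number of units from each stratum and combine them into the sample. There are two allocation schemes: proportional allocation and optimal allocation.

In my experience, stratified sampling works better than systematic sampling, which in turn works better than random sampling. The code below implements systematic and random sampling. Stratified sampling can be implemented for your own dataset with the help of a clustering algorithm. For supervised learning, remember to adjust the ratio of positive to negative samples.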

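```python
'''
Sampling archive

@author: Garvin Li
'''
import random


def RandomSampling(dataMat, number):
    # Simple random sampling: draw `number` distinct rows without replacement.
    if number > len(dataMat):
        raise ValueError('sample larger than population')
    return random.sample(dataMat, number)


def SystematicSampling(dataMat, number):
    # Systematic sampling: split the rows into `number` consecutive parts of
    # size k, pick a random start in the first part, then take every k-th row.
    length = len(dataMat)
    k = length // number  # interval between selected rows
    if k == 0:
        raise ValueError('sample larger than population')
    start = random.randrange(k)  # random position inside the first part
    return [dataMat[start + i * k] for i in range(number)]
```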
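The code above leaves stratified sampling to the reader. The following is a minimal sketch with proportional allocation. It assumes a caller-supplied `stratum_of` function (hypothetical) that maps each row to its stratum, such as a category field or a cluster id produced by a clustering algorithm. Each stratum receives a share of `number` proportional to its size. Any slots left over after rounding down go to the strata with the largest remainders; this is a choice made for this sketch, not the only option. Optimal allocation is not covered.

```python
import random
from collections import defaultdict


def StratifiedSampling(dataMat, number, stratum_of, seed=None):
    # Proportional stratified sampling of `number` rows from dataMat.
    if not 0 <= number <= len(dataMat):
        raise ValueError('number must be between 0 and len(dataMat)')
    rng = random.Random(seed)
    strata = defaultdict(list)
    for row in dataMat:
        strata[stratum_of(row)].append(row)
    total = len(dataMat)
    # Each stratum first gets the rounded-down proportional share ...
    quotas = {h: number * len(rows) // total for h, rows in strata.items()}
    # ... then the leftover slots go to the strata with the largest remainders.
    leftover = number - sum(quotas.values())
    by_remainder = sorted(strata, key=lambda h: (number * len(strata[h])) % total,
                          reverse=True)
    for h in by_remainder[:leftover]:
        quotas[h] += 1
    sample = []
    for h, rows in strata.items():
        sample.extend(rng.sample(rows, quotas[h]))  # random draw within the stratum
    return sample
```

For example, with strata of 60, 30 and 10 rows and `number=10`, the sketch draws 6, 3 and 1 rows respectively, so the sample keeps the population's proportions.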

(4) Choose an algorithm and train on the samples

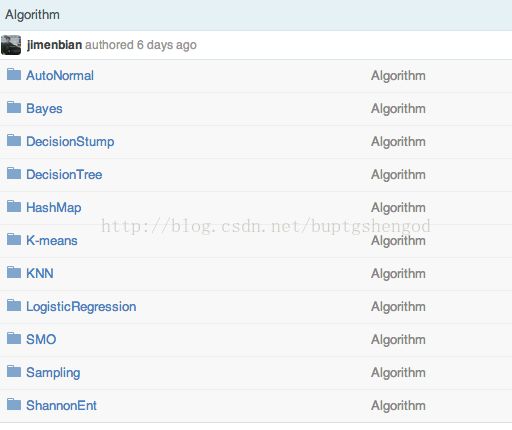

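Many algorithms have been mentioned above, and different datasets call for different algorithms. For example, in the offline round of this competition the dataset was fairly small, and logistic regression gave us good results. In the online round, however, the data grew to terabyte scale and logistic regression was no longer good enough, while GBRT gave better results. For details, see Alibaba big data competition sesson2_RF&GBRT. You can also clone my GitHub repository (continuously updated) for some commonly used algorithms; its address is given at the bottom of this article.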

Figure 3-1 My git-repo

(5) Model fusion

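This competition was the first time I heard of model fusion. The principle is simple. Each algorithm converges in its own way and weighs features differently, so their results can differ considerably. A weighted sum of the different models' outputs lets each algorithm's strengths come through, and it can improve the results considerably.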
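A weighted combination of model outputs can be sketched as follows. The sketch assumes each model produces one score per sample, for the same samples in the same order. The function name and the example weights are hypothetical; in practice, you would choose the weights by evaluating the blend on a validation set. Dividing by the sum of the weights keeps the blended score on the same scale as the inputs.

```python
def blend_predictions(predictions, weights):
    """Weighted average of several models' scores.

    predictions: one list of scores per model, all covering the same samples
                 in the same order.
    weights:     one non-negative weight per model.
    """
    if len(predictions) != len(weights):
        raise ValueError("need exactly one weight per model")
    total = float(sum(weights))
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    n = len(predictions[0])
    if any(len(p) != n for p in predictions):
        raise ValueError("every model must score the same samples")
    # Weighted sum per sample, normalised by the total weight.
    return [sum(w * p[i] for w, p in zip(weights, predictions)) / total
            for i in range(n)]


# Hypothetical scores from two models (e.g. LR and GBRT) on three samples.
lr_scores = [0.2, 0.8, 0.6]
gbrt_scores = [0.4, 0.9, 0.3]
print(blend_predictions([lr_scores, gbrt_scores], weights=[0.3, 0.7]))
```

With weights 0.3 and 0.7, the blended scores here are about 0.34, 0.87 and 0.39. The stronger model dominates, but the weaker one still shifts the ranking where they disagree.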

4. Summary

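I firmly believe that big data and cloud computing can change the way we live and even the way we think. While processing big data, watching my score improve little by little was deeply satisfying. If you share these interests, please leave a comment so we can learn and improve together. Thank you all.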

My GitHub page: click to open the link

This article is entered in the CSDN blog contest; please vote for me!

References: 《机器学习与算法》 (Machine Learning and Algorithms) and the CSDN column of u010691898.

/********************************

* This article comes from the blog "李博Garvin"

* When reposting, please credit the source: http://blog.csdn.net/buptgshengod

******************************************/