⭐ 李宏毅2020机器学习作业4-RNN:句子情感分类

更多作业,请查看⭐ 李宏毅2020机器学习资料汇总

如果大家看本次作业有困难,可以先看一下博主搬运过来的关于NLP的Pytorch官方教程:

- 第一篇:【Pytorch官方教程】从零开始自己搭建RNN1 - 字母级RNN的分类任务

- 第二篇:【Pytorch官方教程】从零开始自己搭建RNN2 - 字母级RNN的生成任务

- 第三篇:【Pytorch官方教程】从零开始自己搭建RNN3 - 含注意力机制的Seq2Seq机器翻译模型

文章目录

- 0 作业链接

- 1 作业说明

- 环境

- 任务说明

- 任务要求

- 数据说明

- 作业概述

- 2 基本原理与概念

- 单词的表示

- 句子的表示

- 1-of-N encoding

- Bag of Words (BOW)

- word embedding

- Semi-supervised Learning 半监督学习

- 3 原始代码

- warning设置

- 一些函数的定义

- 词嵌入 word2vec

- 数据预处理

- 定义Dataset

- 定义模型LSTM

- training

- testing

- 修改代码

- 修改1:加入无标记的数据

- 修改2:self-training

- 修改3:去除标点符号 + Bi-LSTM+ Attention

- 汇总

0 作业链接

直接在李宏毅课程主页可以找到作业:

- 李宏毅的课程网页:点击此处跳转

如果你打不开colab,下方是搬运的jupyter notebook文件和助教的说明ppt:

- 2020版课后作业范例和作业说明:点击此处跳转

- 数据链接:https://pan.baidu.com/s/1xWVKnm4P6bBawASzLYskaw 提取码:akti

1 作业说明

环境

- jupyter notebook

- python3

- pytorch-gpu

任务说明

通过循环神经网络(Recurrent Neural Networks, RNN)对句子进行情感分类。

给定一句句子,判断这句句子是正面还是负面的(正面标1,负面标0)

任务要求

- 必须使用 RNN

- 不能使用额外的数据 (禁止使用其他语料或预训练的模型)

数据说明

从百度网盘下载得到三个文件,分别是testing_data.txt、training_label.txt、training_nolabel.txt,直接放于hw4_RNN.ipynb的目录下。

文本数据是从推特上收集到的推文(英文文本),每篇推文都会被标注为正面或者负面。

注:由于.txt文件太大,建议用Notepad++打开,如果用记事本打开会卡顿。

-

training_label.txt:有 label 的 training data,约20万句句子。

格式为:标签 +++$+++ 文本 (标签是 0 或 1,+++$+++ 只是分隔符号,不需要理它)

比如:1 +++$+++ are wtf … awww thanks !

这里的1表示句子“are wtf … awww thanks !”是正面的。

-

training_nolabel.txt:没有 label 的 training data(只有句子),用来做半监督学习,约120万句句子。

比如: hates being this burnt !! ouch,前面没有0或者1的标签

-

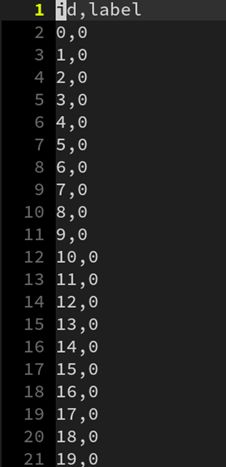

testing_data.txt:测试数据,最终需要判断 testing data 里面的句子是 0 或 1,约20万句句子(10万句句子是Public,10万句句子是Private)。

具体格式如下,第一行是表头,从第二行开始是数据,第一列是id,第二列是文本

最终,预测结果的保存形式为:第一行是表头,第二行开始是预测结果。每一行有两列,第一列是id,第二列是label(标签),用逗号隔开。

作业概述

输入:英文句子

输出:0或1(如果句子是正面的,标1;如果句子是负面的,标0)

模型:循环神经网络(Recurrent Neural Networks, RNN)

2 基本原理与概念

单词的表示

人可以理解文字,但是对于机器来说,数字是更好理解的(因为数字可以进行运算),因此,我们需要把文字变成数字。

- 中文句子以“字”为单位。一句中文句子是由一个个字组成的,每个字都分别变成词向量,用一个向量vector来表示一个字的意思。

- 英文句子以“单词”为单位。一句英文句子是由一个个单词组成的,每个单词都分别变成词向量,用一个向量vector来表示一个单词的意思。

句子的表示

对于一句句子的处理,先建立字典,字典内含有每一个字所对应到的索引。比如:

- “I have a pen.” -> [1, 2, 3, 4]

- “I have an apple.” -> [1, 2, 5, 6]

得到句子的向量有两种方法:

- 直接用 bag of words (BOW) 的方式获得一个代表该句的向量。

- 我们已经用一个向量 vector 来表示一个单词,然后我们就可以用RNN模型来得到一个表示句子向量。

1-of-N encoding

一个向量,长度为N,其中有 1 1 1个是1, N − 1 N-1 N−1个都是0,也叫one-hot编码,中文翻译成“独热编码”。

现在假设,有一句4个单词组成的英文句子“I have an apple.”,先把它变成一个字典:

“I have an apple.” -> [1, 2, 5, 6]

然后,对每个字进行 1-of-N encoding:

1 -> [1,0,0,0]

2 -> [0,1,0,0]

5 -> [0,0,1,0]

6 -> [0,0,0,1]

这里的顺序是人为指定的,可以任意赋值,比如打乱顺序:

5 -> [1,0,0,0]

6 -> [0,1,0,0]

1 -> [0,0,1,0]

2 -> [0,0,0,1]

1-of-N encoding非常简单,非常容易理解,但是问题是:

- 缺少字与字之间的关联性 (当然你可以相信 NN 很强大,它会自己想办法)

- 占用内存大:总共有多少个字,向量就有多少维,但是其中很多都是0,只有1个是1.

比如:200000(data)*30(length)*20000(vocab size) *4(Byte) = 4.8 ∗ 1 0 11 4.8*10^{11} 4.8∗1011 = 480 GB

Bag of Words (BOW)

BOW 的概念就是将句子里的文字变成一个袋子装着这些词,BOW不考虑文法以及词的顺序。

比如,有两句句子:

1. John likes to watch movies. Mary likes movies too.

2. John also likes to watch football games.

有一个字典:[ “John”, “likes”, “to”, “watch”, “movies”, “also”, “football”, “games”, “Mary”, “too” ]

在 BOW 的表示方法下,第一句句子 “John likes to watch movies. Mary likes movies too.” 在该字典中,每个单词的出现次数为:

- John:1次

- likes:2次

- to:1次

- watch:1次

- movies:2次

- also:0次

- football:0次

- games:0次

- Mary:1次

- too:1次

因此,“John likes to watch movies. Mary likes movies too.”的表示向量即为:[1, 2, 1, 1, 2, 0, 0, 0, 1, 1],第二句句子同理,最终两句句子的表示向量如下:

1. John likes to watch movies. Mary likes movies too. -> [1, 2, 1, 1, 2, 0, 0, 0, 1, 1]

2. John also likes to watch football games. -> [1, 1, 1, 1, 0, 1, 1, 1, 0, 0]

之后,把句子的BOW输入DNN,得到预测值,与标签进行对比。

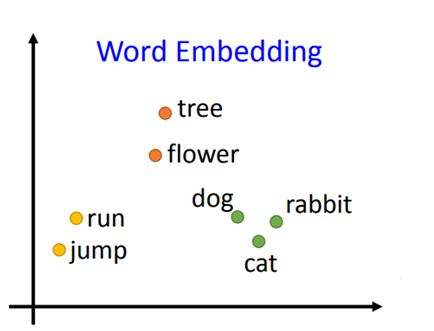

word embedding

词嵌入(word embedding),也叫词的向量化(word to vector),即把单词变成向量(vector)。训练词嵌入的方法有两种:

-

可以用一些方法 (比如 skip-gram, CBOW) 预训练(pretrain)出 word embedding ,在本次作业中只能用已有.txt中的数据进行预训练。

Semi-supervised Learning 半监督学习

在机器学习中,最宝贵的可能是有标注的数据。想要得到无标注的数据很容易,爬虫去网络上爬取一些文本即可,但是想要得到有标注的数据,就需要人工手动标注,成本很高。

半监督学习,简单来说,就是机器利用一部分有标注的数据(通常比较少) 和 一部分无标注的数据(通常比较多) 来进行训练。

半监督学习的方法有很多种,最容易理解、也最好操作的一种是Self-Training:把训练好的模型对无标签的数据( unlabeled data )做预测,将预测值作为该数据的标签(label),并加入这些新的有标签的数据做训练。可以通过调整阈值(threshold),或是多次取样来得到比较可信的数据。

比如:在测试阶段,prediction > 0.5 的数据会被标上 1,prediction < 0.5 的数据被标上0 (= 0.5 的情况,你自己提前指定是0或者是1,并始终保持一致)。在 Self-Training 中,你可以设置 pos_threshold = 0.8,意思是只有 prediction > 0.8 的数据会被标上 1,并放入训练集,而 0.5 < prediction < 0.8 的数据仍然属于无标签的数据。

3 原始代码

warning设置

由于python库的版本等问题,在程序运行时可能会出现一些warning(警告),但是它们并不会影响程序运行,出于程序员的强迫症的考虑,屏蔽它们。

# 设置后可以过滤一些无用的warning

import warnings

warnings.filterwarnings('ignore')

一些函数的定义

定义了两个读取training和testing数据的函数,还定义了评估结果的函数evaluation()。

Python库中的 strip() 方法用于移除字符串头尾指定的字符(默认为空格或换行符)或字符序列。

注:如果遇到和我一样的编码错误

UnicodeDecodeError: ‘gbk’ codec can’t decode byte 0xb9 in position x: illegal multibyte sequence

可以将下列代码中所有的with open(path, 'r') as f:改成open(path,'r', encoding='UTF-8' ) as f:

# utils.py

# 用来定义一些之后常用到的函数

import torch

import numpy as np

import pandas as pd

import torch.optim as optim

import torch.nn.functional as F

def load_training_data(path='training_label.txt'):

# 读取 training 需要的数据

# 如果是 'training_label.txt',需要读取 label,如果是 'training_nolabel.txt',不需要读取 label

if 'training_label' in path:

with open(path, 'r') as f:

lines = f.readlines()

# lines是二维数组,第一维是行line(按回车分割),第二维是每行的单词(按空格分割)

lines = [line.strip('\n').split(' ') for line in lines]

# 每行按空格分割后,第2个符号之后都是句子的单词

x = [line[2:] for line in lines]

# 每行按空格分割后,第0个符号是label

y = [line[0] for line in lines]

return x, y

else:

with open(path, 'r') as f:

lines = f.readlines()

# lines是二维数组,第一维是行line(按回车分割),第二维是每行的单词(按空格分割)

x = [line.strip('\n').split(' ') for line in lines]

return x

def load_testing_data(path='testing_data'):

# 读取 testing 需要的数据

with open(path, 'r') as f:

lines = f.readlines()

# 第0行是表头,从第1行开始是数据

# 第0列是id,第1列是文本,按逗号分割,需要逗号之后的文本

X = ["".join(line.strip('\n').split(",")[1:]).strip() for line in lines[1:]]

X = [sen.split(' ') for sen in X]

return X

def evaluation(outputs, labels):

# outputs => 预测值,概率(float)

# labels => 真实值,标签(0或1)

outputs[outputs>=0.5] = 1 # 大于等于 0.5 为正面

outputs[outputs<0.5] = 0 # 小于 0.5 为负面

accuracy = torch.sum(torch.eq(outputs, labels)).item()

return accuracy

词嵌入 word2vec

word2vec 即 word to vector 的缩写。把 training 和 testing 中的每个单词都分别变成词向量,这里用到了 Gensim 来进行 word2vec 的操作。没有 gensim 的可以用 conda install gensim 或者 pip install gensim 安装一下。

Gensim是一款开源的第三方Python工具包,用于从原始的非结构化的文本中,无监督地学习到文本隐层的主题向量表达。

它支持包括TF-IDF,LSA,LDA,和word2vec在内的多种主题模型算法。

详情请看:Gensim英文官方文档

Word2Vec 模块具体的 API 如下:

class gensim.models.word2vec.Word2Vec(

sentences=None,

size=100,

alpha=0.025,

window=5,

min_count=5,

max_vocab_size=None,

sample=0.001,

seed=1,

workers=3,

min_alpha=0.0001,

sg=0,

hs=0,

negative=5,

cbow_mean=1,

hashfxn=<built-in function hash>,

iter=5,

null_word=0,

trim_rule=None,

sorted_vocab=1,

batch_words=10000,

compute_loss=False)

参数含义(摘自Gensim 中 word2vec 函数的使用):

- size: 词向量的维度。

- alpha: 模型初始的学习率。

- window: 表示在一个句子中,当前词于预测词在一个句子中的最大距离。

- min_count: 用于过滤操作,词频少于 min_count 次数的单词会被丢弃掉,默认值为 5。

- max_vocab_size: 设置词向量构建期间的 RAM 限制。如果所有的独立单词数超过这个限定词,那么就删除掉其中词频最低的那个。根据统计,每一千万个单词大概需要 1GB 的RAM。如果我们把该值设置为 None ,则没有限制。

- sample: 高频词汇的随机降采样的配置阈值,默认为 1e-3,范围是 (0, 1e-5)。

- seed: 用于随机数发生器。与词向量的初始化有关。

- workers: 控制训练的并行数量。

- min_alpha: 随着训练进行,alpha 线性下降到 min_alpha。

- sg: 用于设置训练算法。当 sg=0,使用 CBOW 算法来进行训练;当 sg=1,使用 skip-gram 算法来进行训练。

- hs: 如果设置为 1 ,那么系统会采用 hierarchica softmax 技巧。如果设置为 0(默认情况),则系统会采用 negative samping 技巧。

- negative: 如果这个值大于 0,那么 negative samping 会被使用。该值表示 “noise words” 的数量,一般这个值是 5 - 20,默认是 5。如果这个值设置为 0,那么 negative samping 没有使用。

- cbow_mean: 如果这个值设置为 0,那么就采用上下文词向量的总和。如果这个值设置为 1 (默认情况下),那么我们就采用均值。但这个值只有在使用 CBOW 的时候才起作用。

- hashfxn: hash函数用来初始化权重,默认情况下使用 Python 自带的 hash 函数。

- iter: 算法迭代次数,默认为 5。

- trim_rule: 用于设置词汇表的整理规则,用来指定哪些词需要被剔除,哪些词需要保留。默认情况下,如果 word count < min_count,那么该词被剔除。这个参数也可以被设置为 None,这种情况下 min_count 会被使用。

- sorted_vocab: 如果这个值设置为 1(默认情况下),则在分配 word index 的时候会先对单词基于频率降序排序。

- batch_words: 每次批处理给线程传递的单词的数量,默认是 10000。

这段代码在训练 word to vector 时是用 cpu,可能要花 10 分钟以上。

from gensim.models import Word2Vec

def train_word2vec(x):

# 训练 word to vector 的 word embedding

# window:滑动窗口的大小,min_count:过滤掉语料中出现频率小于min_count的词

model = Word2Vec(x, size=250, window=5, min_count=5, workers=12, iter=10, sg=1)

return model

# 读取 training 数据

print("loading training data ...")

train_x, y = load_training_data('training_label.txt')

train_x_no_label = load_training_data('training_nolabel.txt')

# 读取 testing 数据

print("loading testing data ...")

test_x = load_testing_data('testing_data.txt')

# 把 training 中的 word 变成 vector

# model = train_word2vec(train_x + train_x_no_label + test_x) # w2v_all

model = train_word2vec(train_x + test_x) # w2v

# 保存 vector

print("saving model ...")

# model.save('w2v_all.model')

model.save('w2v.model')

数据预处理

定义一个预处理的类Preprocess():

- w2v_path:word2vec的存储路径

- sentences:句子

- sen_len:句子的固定长度

- idx2word 是一个列表,比如:self.idx2word[1] = ‘he’

- word2idx 是一个字典,记录单词在 idx2word 中的下标,比如:self.word2idx[‘he’] = 1

- embedding_matrix 是一个列表,记录词嵌入的向量,比如:self.embedding_matrix[1] = ‘he’ vector

对于句子,我们就可以通过 embedding_matrix[word2idx[‘he’] ] 找到 ‘he’ 的词嵌入向量。

Preprocess()的调用如下:

- 训练模型:

preprocess = Preprocess(train_x, sen_len, w2v_path=w2v_path) - 测试模型:

preprocess = Preprocess(test_x, sen_len, w2v_path=w2v_path)

另外,这里除了出现在 train_x 和 test_x 中的单词外,还需要两个单词(或者叫特殊符号):

- “

”:Padding的缩写,把所有句子都变成一样长度时,需要用" "补上空白符 - “

”:Unknown的缩写,凡是在 train_x 和 test_x 中没有出现过的单词,都用" "来表示

# 数据预处理

class Preprocess():

def __init__(self, sentences, sen_len, w2v_path):

self.w2v_path = w2v_path # word2vec的存储路径

self.sentences = sentences # 句子

self.sen_len = sen_len # 句子的固定长度

self.idx2word = []

self.word2idx = {}

self.embedding_matrix = []

def get_w2v_model(self):

# 读取之前训练好的 word2vec

self.embedding = Word2Vec.load(self.w2v_path)

self.embedding_dim = self.embedding.vector_size

def add_embedding(self, word):

# 这里的 word 只会是 "" 或 ""

# 把一个随机生成的表征向量 vector 作为 "" 或 "" 的嵌入

vector = torch.empty(1, self.embedding_dim)

torch.nn.init.uniform_(vector)

# 它的 index 是 word2idx 这个词典的长度,即最后一个

self.word2idx[word] = len(self.word2idx)

self.idx2word.append(word)

self.embedding_matrix = torch.cat([self.embedding_matrix, vector], 0)

def make_embedding(self, load=True):

print("Get embedding ...")

# 获取训练好的 Word2vec word embedding

if load:

print("loading word to vec model ...")

self.get_w2v_model()

else:

raise NotImplementedError

# 遍历嵌入后的单词

for i, word in enumerate(self.embedding.wv.vocab):

print('get words #{}'.format(i+1), end='\r')

# 新加入的 word 的 index 是 word2idx 这个词典的长度,即最后一个

self.word2idx[word] = len(self.word2idx)

self.idx2word.append(word)

self.embedding_matrix.append(self.embedding[word])

print('')

# 把 embedding_matrix 变成 tensor

self.embedding_matrix = torch.tensor(self.embedding_matrix)

# 将 和 加入 embedding

self.add_embedding("" )

self.add_embedding("" )

print("total words: {}".format(len(self.embedding_matrix)))

return self.embedding_matrix

def pad_sequence(self, sentence):

# 将每个句子变成一样的长度,即 sen_len 的长度

if len(sentence) > self.sen_len:

# 如果句子长度大于 sen_len 的长度,就截断

sentence = sentence[:self.sen_len]

else:

# 如果句子长度小于 sen_len 的长度,就补上 符号,缺多少个单词就补多少个

pad_len = self.sen_len - len(sentence)

for _ in range(pad_len):

sentence.append(self.word2idx["" ])

assert len(sentence) == self.sen_len

return sentence

def sentence_word2idx(self):

# 把句子里面的字变成相对应的 index

sentence_list = []

for i, sen in enumerate(self.sentences):

print('sentence count #{}'.format(i+1), end='\r')

sentence_idx = []

for word in sen:

if (word in self.word2idx.keys()):

sentence_idx.append(self.word2idx[word])

else:

# 没有出现过的单词就用 表示

sentence_idx.append(self.word2idx["" ])

# 将每个句子变成一样的长度

sentence_idx = self.pad_sequence(sentence_idx)

sentence_list.append(sentence_idx)

return torch.LongTensor(sentence_list)

def labels_to_tensor(self, y):

# 把 labels 转成 tensor

y = [int(label) for label in y]

return torch.LongTensor(y)

定义Dataset

在 Pytorch 中,我们可以利用 torch.utils.data 的 Dataset 及 DataLoader 来"包装" data,使后续的 training 和 testing 更方便。

Dataset 需要 overload 两个函数:__len__ 及 __getitem__

- __len__ 必须要传回 dataset 的大小

- __getitem__ 则定义了当函数利用 [ ] 取值时,dataset 应该要怎么传回数据。

实际上,在我们的代码中并不会直接使用到这两个函数,但是当 DataLoader 在 enumerate Dataset 时会使用到,如果没有这样做,程序运行阶段会报错。

from torch.utils.data import DataLoader, Dataset

class TwitterDataset(Dataset):

"""

Expected data shape like:(data_num, data_len)

Data can be a list of numpy array or a list of lists

input data shape : (data_num, seq_len, feature_dim)

__len__ will return the number of data

"""

def __init__(self, X, y):

self.data = X

self.label = y

def __getitem__(self, idx):

if self.label is None: return self.data[idx]

return self.data[idx], self.label[idx]

def __len__(self):

return len(self.data)

定义模型LSTM

如李宏毅的视频中所说,因为LSTM(Long Short-Term Memory,长短期记忆网络)的效果比普通的RNN好,所以现在当我们说RNN的时候,一般都是指LSTM.

把句子丢到LSTM中,变成一个输出向量,再把这个输出丢到分类器classifier中,进行二元分类。

from torch import nn

class LSTM_Net(nn.Module):

def __init__(self, embedding, embedding_dim, hidden_dim, num_layers, dropout=0.5, fix_embedding=True):

super(LSTM_Net, self).__init__()

# embedding layer

self.embedding = torch.nn.Embedding(embedding.size(0),embedding.size(1))

self.embedding.weight = torch.nn.Parameter(embedding)

# 是否将 embedding 固定住,如果 fix_embedding 为 False,在训练过程中,embedding 也会跟着被训练

self.embedding.weight.requires_grad = False if fix_embedding else True

self.embedding_dim = embedding.size(1)

self.hidden_dim = hidden_dim

self.num_layers = num_layers

self.dropout = dropout

self.lstm = nn.LSTM(embedding_dim, hidden_dim, num_layers=num_layers, batch_first=True)

self.classifier = nn.Sequential(

nn.Dropout(dropout),

nn.Linear(hidden_dim, 1),

nn.Sigmoid()

)

def forward(self, inputs):

inputs = self.embedding(inputs)

x, _ = self.lstm(inputs, None)

# x 的 dimension (batch, seq_len, hidden_size)

# 取用 LSTM 最后一层的 hidden state 丢到分类器中

x = x[:, -1, :]

x = self.classifier(x)

return x

training

将 training 和 validation 封装成函数

def training(batch_size, n_epoch, lr, train, valid, model, device):

# 输出模型总的参数数量、可训练的参数数量

total = sum(p.numel() for p in model.parameters())

trainable = sum(p.numel() for p in model.parameters() if p.requires_grad)

print('\nstart training, parameter total:{}, trainable:{}\n'.format(total, trainable))

loss = nn.BCELoss() # 定义损失函数为二元交叉熵损失 binary cross entropy loss

t_batch = len(train) # training 数据的batch size大小

v_batch = len(valid) # validation 数据的batch size大小

optimizer = optim.Adam(model.parameters(), lr=lr) # optimizer用Adam,设置适当的学习率lr

total_loss, total_acc, best_acc = 0, 0, 0

for epoch in range(n_epoch):

total_loss, total_acc = 0, 0

# training

model.train() # 将 model 的模式设为 train,这样 optimizer 就可以更新 model 的参数

for i, (inputs, labels) in enumerate(train):

inputs = inputs.to(device, dtype=torch.long) # 因为 device 为 "cuda",将 inputs 转成 torch.cuda.LongTensor

labels = labels.to(device, dtype=torch.float) # 因为 device 为 "cuda",将 labels 转成 torch.cuda.FloatTensor,loss()需要float

optimizer.zero_grad() # 由于 loss.backward() 的 gradient 会累加,所以每一个 batch 后需要归零

outputs = model(inputs) # 模型输入Input,输出output

outputs = outputs.squeeze() # 去掉最外面的 dimension,好让 outputs 可以丢进 loss()

batch_loss = loss(outputs, labels) # 计算模型此时的 training loss

batch_loss.backward() # 计算 loss 的 gradient

optimizer.step() # 更新模型参数

accuracy = evaluation(outputs, labels) # 计算模型此时的 training accuracy

total_acc += (accuracy / batch_size)

total_loss += batch_loss.item()

print('Epoch | {}/{}'.format(epoch+1,n_epoch))

print('Train | Loss:{:.5f} Acc: {:.3f}'.format(total_loss/t_batch, total_acc/t_batch*100))

# validation

model.eval() # 将 model 的模式设为 eval,这样 model 的参数就会被固定住

with torch.no_grad():

total_loss, total_acc = 0, 0

for i, (inputs, labels) in enumerate(valid):

inputs = inputs.to(device, dtype=torch.long) # 因为 device 为 "cuda",将 inputs 转成 torch.cuda.LongTensor

labels = labels.to(device, dtype=torch.float) # 因为 device 为 "cuda",将 labels 转成 torch.cuda.FloatTensor,loss()需要float

outputs = model(inputs) # 模型输入Input,输出output

outputs = outputs.squeeze() # 去掉最外面的 dimension,好让 outputs 可以丢进 loss()

batch_loss = loss(outputs, labels) # 计算模型此时的 training loss

accuracy = evaluation(outputs, labels) # 计算模型此时的 training accuracy

total_acc += (accuracy / batch_size)

total_loss += batch_loss.item()

print("Valid | Loss:{:.5f} Acc: {:.3f} ".format(total_loss/v_batch, total_acc/v_batch*100))

if total_acc > best_acc:

# 如果 validation 的结果优于之前所有的結果,就把当下的模型保存下来,用于之后的testing

best_acc = total_acc

torch.save(model, "ckpt.model")

print('-----------------------------------------------')

调用前面的封装的Preprocess(),training(),进行训练。

train_test_split()的使用说明:

- test_size:样本占比。

- random_state:随机数的种子。 填0或不填,每次都会不一样。填其他数字,每次会固定得到同样的随机分配。

- stratify:保持split前类的分布。一般在数据不平衡时使用。

from sklearn.model_selection import train_test_split

# 通过 torch.cuda.is_available() 的值判断是否可以使用 GPU ,如果可以的话 device 就设为 "cuda",没有的话就设为 "cpu"

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# 定义句子长度、要不要固定 embedding、batch 大小、要训练几个 epoch、 学习率的值、 w2v的路径

sen_len = 20

fix_embedding = True # fix embedding during training

batch_size = 128

epoch = 10

lr = 0.001

w2v_path = 'w2v_all.model'

print("loading data ...") # 读取 'training_label.txt' 'training_nolabel.txt'

train_x, y = load_training_data('training_label.txt')

train_x_no_label = load_training_data('training_nolabel.txt')

# 对 input 跟 labels 做预处理

preprocess = Preprocess(train_x, sen_len, w2v_path=w2v_path)

embedding = preprocess.make_embedding(load=True)

train_x = preprocess.sentence_word2idx()

y = preprocess.labels_to_tensor(y)

# 定义模型

model = LSTM_Net(embedding, embedding_dim=250, hidden_dim=150, num_layers=1, dropout=0.5, fix_embedding=fix_embedding)

model = model.to(device) # device为 "cuda",model 使用 GPU 来训练(inputs 也需要是 cuda tensor)

# 把 data 分为 training data 和 validation data(将一部分 training data 作为 validation data)

X_train, X_val, y_train, y_val = train_test_split(train_x, y, test_size = 0.1, random_state = 1, stratify = y)

print('Train | Len:{} \nValid | Len:{}'.format(len(y_train), len(y_val)))

# 把 data 做成 dataset 供 dataloader 取用

train_dataset = TwitterDataset(X=X_train, y=y_train)

val_dataset = TwitterDataset(X=X_val, y=y_val)

# 把 data 转成 batch of tensors

train_loader = DataLoader(train_dataset, batch_size = batch_size, shuffle = True, num_workers = 0)

val_loader = DataLoader(val_dataset, batch_size = batch_size, shuffle = False, num_workers = 0)

# 开始训练

training(batch_size, epoch, lr, train_loader, val_loader, model, device)

Out:

loading data ...

Get embedding ...

loading word to vec model ...

get words #24694

total words: 24696

sentence count #200000

start training, parameter total:6415351, trainable:241351

Epoch | 1/5

Train | Loss:0.50001 Acc: 74.739

Valid | Loss:0.45416 Acc: 78.080

-----------------------------------------------

Epoch | 2/5

Train | Loss:0.44352 Acc: 79.073

Valid | Loss:0.43715 Acc: 79.250

-----------------------------------------------

Epoch | 3/5

Train | Loss:0.42768 Acc: 80.013

Valid | Loss:0.43578 Acc: 79.339

-----------------------------------------------

Epoch | 4/5

Train | Loss:0.41410 Acc: 80.825

Valid | Loss:0.42171 Acc: 80.220

-----------------------------------------------

Epoch | 5/5

Train | Loss:0.40301 Acc: 81.455

Valid | Loss:0.42282 Acc: 80.180

-----------------------------------------------

testing

同样,将 testing 封装成函数

def testing(batch_size, test_loader, model, device):

model.eval() # 将 model 的模式设为 eval,这样 model 的参数就会被固定住

ret_output = [] # 返回的output

with torch.no_grad():

for i, inputs in enumerate(test_loader):

inputs = inputs.to(device, dtype=torch.long)

outputs = model(inputs)

outputs = outputs.squeeze()

outputs[outputs>=0.5] = 1 # 大于等于0.5为正面

outputs[outputs<0.5] = 0 # 小于0.5为负面

ret_output += outputs.int().tolist()

return ret_output

调用testing()进行预测,预测数据保存为predict.csv,约1.6M

# 测试模型并作预测

# 读取测试数据test_x

print("loading testing data ...")

test_x = load_testing_data('testing_data.txt')

# 对test_x作预处理

preprocess = Preprocess(test_x, sen_len, w2v_path=w2v_path)

embedding = preprocess.make_embedding(load=True)

test_x = preprocess.sentence_word2idx()

test_dataset = TwitterDataset(X=test_x, y=None)

test_loader = DataLoader(test_dataset, batch_size = batch_size, shuffle = False, num_workers = 0)

# 读取模型

print('\nload model ...')

model = torch.load('ckpt.model')

# 测试模型

outputs = testing(batch_size, test_loader, model, device)

# 保存为 csv

tmp = pd.DataFrame({"id":[str(i) for i in range(len(test_x))],"label":outputs})

print("save csv ...")

tmp.to_csv('predict.csv', index=False)

print("Finish Predicting")

Out:

loading testing data ...

Get embedding ...

loading word to vec model ...

get words #24694

total words: 24696

load model ...

save csv ...

Finish Predicting

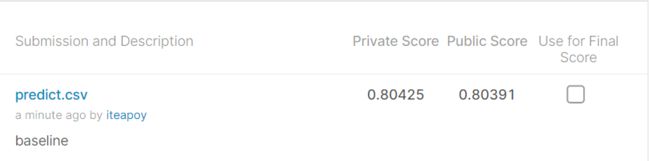

将predict.csv上传到kaggle平台进行评分

Public Score:0.80391

Private Score:0.80425

接下来需要提高score分数。

修改代码

修改1:加入无标记的数据

上述代码在进行 word2vec 时,仅仅使用了 train_x + test_x 的语料数据,下面根据 train_x + train_x_no_label + test_x 的语料数据来建立词典,得到新的词嵌入向量。

把原来的代码

# 把 training 中的 word 变成 vector

# model = train_word2vec(train_x + train_x_no_label + test_x) # w2v_all

model = train_word2vec(train_x + test_x) # w2v

# 保存 vector

print("saving model ...")

# model.save('w2v_all.model')

model.save('w2v.model')

改为:

# 把 training 中的 word 变成 vector

model = train_word2vec(train_x + train_x_no_label + test_x) # w2v_all

# model = train_word2vec(train_x + test_x) # w2v

# 保存 vector

print("saving model ...")

model.save('w2v_all.model')

# model.save('w2v.model')

并且把 w2v_path = 'w2v.model' 改为 w2v_path = 'w2v_all.model'

训练迭代次数 epoch 增加。

完整代码如下:

# utils.py

# 用来定义一些之后常用到的函数

import torch

import numpy as np

import pandas as pd

import torch.optim as optim

import torch.nn.functional as F

def load_training_data(path='training_label.txt'):

# 读取 training 需要的数据

# 如果是 'training_label.txt',需要读取 label,如果是 'training_nolabel.txt',不需要读取 label

if 'training_label' in path:

with open(path, 'r') as f:

lines = f.readlines()

# lines是二维数组,第一维是行line(按回车分割),第二维是每行的单词(按空格分割)

lines = [line.strip('\n').split(' ') for line in lines]

# 每行按空格分割后,第2个符号之后都是句子的单词

x = [line[2:] for line in lines]

# 每行按空格分割后,第0个符号是label

y = [line[0] for line in lines]

return x, y

else:

with open(path, 'r') as f:

lines = f.readlines()

# lines是二维数组,第一维是行line(按回车分割),第二维是每行的单词(按空格分割)

x = [line.strip('\n').split(' ') for line in lines]

return x

def load_testing_data(path='testing_data'):

# 读取 testing 需要的数据

with open(path, 'r') as f:

lines = f.readlines()

# 第0行是表头,从第1行开始是数据

# 第0列是id,第1列是文本,按逗号分割,需要逗号之后的文本

X = ["".join(line.strip('\n').split(",")[1:]).strip() for line in lines[1:]]

X = [sen.split(' ') for sen in X]

return X

def evaluation(outputs, labels):

# outputs => 预测值,概率(float)

# labels => 真实值,标签(0或1)

outputs[outputs>=0.5] = 1 # 大于等于 0.5 为正面

outputs[outputs<0.5] = 0 # 小于 0.5 为负面

accuracy = torch.sum(torch.eq(outputs, labels)).item()

return accuracy

from gensim.models import Word2Vec

def train_word2vec(x):

# 训练 word to vector 的 word embedding

# window:滑动窗口的大小,min_count:过滤掉语料中出现频率小于min_count的词

model = Word2Vec(x, size=250, window=5, min_count=5, workers=12, iter=10, sg=1)

return model

# 读取 training 数据

print("loading training data ...")

train_x, y = load_training_data('training_label.txt')

train_x_no_label = load_training_data('training_nolabel.txt')

# 读取 testing 数据

print("loading testing data ...")

test_x = load_testing_data('testing_data.txt')

# 把 training 中的 word 变成 vector

model = train_word2vec(train_x + train_x_no_label + test_x) # w2v_all

# model = train_word2vec(train_x + test_x) # w2v

# 保存 vector

print("saving model ...")

model.save('w2v_all.model')

# model.save('w2v.model')

# 数据预处理

class Preprocess():

def __init__(self, sentences, sen_len, w2v_path):

self.w2v_path = w2v_path # word2vec的存储路径

self.sentences = sentences # 句子

self.sen_len = sen_len # 句子的固定长度

self.idx2word = []

self.word2idx = {}

self.embedding_matrix = []

def get_w2v_model(self):

# 读取之前训练好的 word2vec

self.embedding = Word2Vec.load(self.w2v_path)

self.embedding_dim = self.embedding.vector_size

def add_embedding(self, word):

# 这里的 word 只会是 "" 或 ""

# 把一个随机生成的表征向量 vector 作为 "" 或 "" 的嵌入

vector = torch.empty(1, self.embedding_dim)

torch.nn.init.uniform_(vector)

# 它的 index 是 word2idx 这个词典的长度,即最后一个

self.word2idx[word] = len(self.word2idx)

self.idx2word.append(word)

self.embedding_matrix = torch.cat([self.embedding_matrix, vector], 0)

def make_embedding(self, load=True):

print("Get embedding ...")

# 获取训练好的 Word2vec word embedding

if load:

print("loading word to vec model ...")

self.get_w2v_model()

else:

raise NotImplementedError

# 遍历嵌入后的单词

for i, word in enumerate(self.embedding.wv.vocab):

print('get words #{}'.format(i+1), end='\r')

# 新加入的 word 的 index 是 word2idx 这个词典的长度,即最后一个

self.word2idx[word] = len(self.word2idx)

self.idx2word.append(word)

self.embedding_matrix.append(self.embedding[word])

print('')

# 把 embedding_matrix 变成 tensor

self.embedding_matrix = torch.tensor(self.embedding_matrix)

# 将 和 加入 embedding

self.add_embedding("" )

self.add_embedding("" )

print("total words: {}".format(len(self.embedding_matrix)))

return self.embedding_matrix

def pad_sequence(self, sentence):

# 将每个句子变成一样的长度,即 sen_len 的长度

if len(sentence) > self.sen_len:

# 如果句子长度大于 sen_len 的长度,就截断

sentence = sentence[:self.sen_len]

else:

# 如果句子长度小于 sen_len 的长度,就补上 符号,缺多少个单词就补多少个

pad_len = self.sen_len - len(sentence)

for _ in range(pad_len):

sentence.append(self.word2idx["" ])

assert len(sentence) == self.sen_len

return sentence

def sentence_word2idx(self):

# 把句子里面的字变成相对应的 index

sentence_list = []

for i, sen in enumerate(self.sentences):

print('sentence count #{}'.format(i+1), end='\r')

sentence_idx = []

for word in sen:

if (word in self.word2idx.keys()):

sentence_idx.append(self.word2idx[word])

else:

# 没有出现过的单词就用 表示

sentence_idx.append(self.word2idx["" ])

# 将每个句子变成一样的长度

sentence_idx = self.pad_sequence(sentence_idx)

sentence_list.append(sentence_idx)

return torch.LongTensor(sentence_list)

def labels_to_tensor(self, y):

# 把 labels 转成 tensor

y = [int(label) for label in y]

return torch.LongTensor(y)

from torch.utils.data import DataLoader, Dataset

class TwitterDataset(Dataset):

"""

Expected data shape like:(data_num, data_len)

Data can be a list of numpy array or a list of lists

input data shape : (data_num, seq_len, feature_dim)

__len__ will return the number of data

"""

def __init__(self, X, y):

self.data = X

self.label = y

def __getitem__(self, idx):

if self.label is None: return self.data[idx]

return self.data[idx], self.label[idx]

def __len__(self):

return len(self.data)

from torch import nn

class LSTM_Net(nn.Module):

def __init__(self, embedding, embedding_dim, hidden_dim, num_layers, dropout=0.5, fix_embedding=True):

super(LSTM_Net, self).__init__()

# embedding layer

self.embedding = torch.nn.Embedding(embedding.size(0),embedding.size(1))

self.embedding.weight = torch.nn.Parameter(embedding)

# 是否将 embedding 固定住,如果 fix_embedding 为 False,在训练过程中,embedding 也会跟着被训练

self.embedding.weight.requires_grad = False if fix_embedding else True

self.embedding_dim = embedding.size(1)

self.hidden_dim = hidden_dim

self.num_layers = num_layers

self.dropout = dropout

self.lstm = nn.LSTM(embedding_dim, hidden_dim, num_layers=num_layers, batch_first=True)

self.classifier = nn.Sequential( nn.Dropout(dropout),

nn.Linear(hidden_dim, 1),

nn.Sigmoid() )

def forward(self, inputs):

inputs = self.embedding(inputs)

x, _ = self.lstm(inputs, None)

# x 的 dimension (batch, seq_len, hidden_size)

# 取用 LSTM 最后一层的 hidden state 丢到分类器中

x = x[:, -1, :]

x = self.classifier(x)

return x

def training(batch_size, n_epoch, lr, train, valid, model, device):

# 输出模型总的参数数量、可训练的参数数量

total = sum(p.numel() for p in model.parameters())

trainable = sum(p.numel() for p in model.parameters() if p.requires_grad)

print('\nstart training, parameter total:{}, trainable:{}\n'.format(total, trainable))

loss = nn.BCELoss() # 定义损失函数为二元交叉熵损失 binary cross entropy loss

t_batch = len(train) # training 数据的batch size大小

v_batch = len(valid) # validation 数据的batch size大小

optimizer = optim.Adam(model.parameters(), lr=lr) # optimizer用Adam,设置适当的学习率lr

total_loss, total_acc, best_acc = 0, 0, 0

for epoch in range(n_epoch):

total_loss, total_acc = 0, 0

# training

model.train() # 将 model 的模式设为 train,这样 optimizer 就可以更新 model 的参数

for i, (inputs, labels) in enumerate(train):

inputs = inputs.to(device, dtype=torch.long) # 因为 device 为 "cuda",将 inputs 转成 torch.cuda.LongTensor

labels = labels.to(device, dtype=torch.float) # 因为 device 为 "cuda",将 labels 转成 torch.cuda.FloatTensor,loss()需要float

optimizer.zero_grad() # 由于 loss.backward() 的 gradient 会累加,所以每一个 batch 后需要归零

outputs = model(inputs) # 模型输入Input,输出output

outputs = outputs.squeeze() # 去掉最外面的 dimension,好让 outputs 可以丢进 loss()

batch_loss = loss(outputs, labels) # 计算模型此时的 training loss

batch_loss.backward() # 计算 loss 的 gradient

optimizer.step() # 更新模型参数

accuracy = evaluation(outputs, labels) # 计算模型此时的 training accuracy

total_acc += (accuracy / batch_size)

total_loss += batch_loss.item()

print('Epoch | {}/{}'.format(epoch+1,n_epoch))

print('Train | Loss:{:.5f} Acc: {:.3f}'.format(total_loss/t_batch, total_acc/t_batch*100))

# validation

model.eval() # 将 model 的模式设为 eval,这样 model 的参数就会被固定住

with torch.no_grad():

total_loss, total_acc = 0, 0

for i, (inputs, labels) in enumerate(valid):

inputs = inputs.to(device, dtype=torch.long) # 因为 device 为 "cuda",将 inputs 转成 torch.cuda.LongTensor

labels = labels.to(device, dtype=torch.float) # 因为 device 为 "cuda",将 labels 转成 torch.cuda.FloatTensor,loss()需要float

outputs = model(inputs) # 模型输入Input,输出output

outputs = outputs.squeeze() # 去掉最外面的 dimension,好让 outputs 可以丢进 loss()

batch_loss = loss(outputs, labels) # 计算模型此时的 training loss

accuracy = evaluation(outputs, labels) # 计算模型此时的 training accuracy

total_acc += (accuracy / batch_size)

total_loss += batch_loss.item()

print("Valid | Loss:{:.5f} Acc: {:.3f} ".format(total_loss/v_batch, total_acc/v_batch*100))

if total_acc > best_acc:

# 如果 validation 的结果优于之前所有的結果,就把当下的模型保存下来,用于之后的testing

best_acc = total_acc

torch.save(model, "ckpt.model")

print('-----------------------------------------------')

def testing(batch_size, test_loader, model, device):

model.eval() # 将 model 的模式设为 eval,这样 model 的参数就会被固定住

ret_output = [] # 返回的output

with torch.no_grad():

for i, inputs in enumerate(test_loader):

inputs = inputs.to(device, dtype=torch.long)

outputs = model(inputs)

outputs = outputs.squeeze()

outputs[outputs>=0.5] = 1 # 大于等于0.5为正面

outputs[outputs<0.5] = 0 # 小于0.5为负面

ret_output += outputs.int().tolist()

return ret_output

from sklearn.model_selection import train_test_split

# 通过 torch.cuda.is_available() 的值判断是否可以使用 GPU ,如果可以的话 device 就设为 "cuda",没有的话就设为 "cpu"

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# 定义句子长度、要不要固定 embedding、batch 大小、要训练几个 epoch、 学习率的值、 w2v的路径

sen_len = 20

fix_embedding = True # fix embedding during training

batch_size = 128

epoch = 10

lr = 0.001

w2v_path = 'w2v_all.model'

print("loading data ...") # 读取 'training_label.txt' 'training_nolabel.txt'

train_x, y = load_training_data('training_label.txt')

train_x_no_label = load_training_data('training_nolabel.txt')

# 对 input 跟 labels 做预处理

preprocess = Preprocess(train_x, sen_len, w2v_path=w2v_path)

embedding = preprocess.make_embedding(load=True)

train_x = preprocess.sentence_word2idx()

y = preprocess.labels_to_tensor(y)

# 定义模型

model = LSTM_Net(embedding, embedding_dim=250, hidden_dim=150, num_layers=1, dropout=0.5, fix_embedding=fix_embedding)

model = model.to(device) # device为 "cuda",model 使用 GPU 来训练(inputs 也需要是 cuda tensor)

# 把 data 分为 training data 和 validation data(将一部分 training data 作为 validation data)

X_train, X_val, y_train, y_val = train_test_split(train_x, y, test_size = 0.1, random_state = 1, stratify = y)

print('Train | Len:{} \nValid | Len:{}'.format(len(y_train), len(y_val)))

# 把 data 做成 dataset 供 dataloader 取用

train_dataset = TwitterDataset(X=X_train, y=y_train)

val_dataset = TwitterDataset(X=X_val, y=y_val)

# 把 data 转成 batch of tensors

train_loader = DataLoader(train_dataset, batch_size = batch_size, shuffle = True, num_workers = 0) # 为了比较模型性能,将shuffle设置为False,实际运用中应该设置成True

val_loader = DataLoader(val_dataset, batch_size = batch_size, shuffle = False, num_workers = 0)

# 开始训练

training(batch_size, epoch, lr, train_loader, val_loader, model, device)

# 测试模型并作预测

# 读取测试数据test_x

print("loading testing data ...")

test_x = load_testing_data('testing_data.txt')

# 对test_x作预处理

preprocess = Preprocess(test_x, sen_len, w2v_path=w2v_path)

embedding = preprocess.make_embedding(load=True)

test_x = preprocess.sentence_word2idx()

test_dataset = TwitterDataset(X=test_x, y=None)

test_loader = DataLoader(test_dataset, batch_size = batch_size, shuffle = False, num_workers = 0)

# 读取模型

print('\nload model ...')

model = torch.load('ckpt.model')

# 测试模型

outputs = testing(batch_size, test_loader, model, device)

# 保存为 csv

tmp = pd.DataFrame({"id":[str(i) for i in range(len(test_x))],"label":outputs})

print("save csv ...")

tmp.to_csv('predict.csv', index=False)

print("Finish Predicting")

结果有略微提升:

Public Score:0.80838

Private Score:0.80988

修改2:self-training

在修改1的基础上,再进行self-training

主要定义了函数 add_label():

def add_label(outputs, threshold=0.9):

id = (outputs>=threshold) | (outputs<1-threshold)

outputs[outputs>=threshold] = 1 # 大于等于 threshold 为正面

outputs[outputs<1-threshold] = 0 # 小于 threshold 为负面

return outputs.long(), id

在 training()函数中增加了 self-training部分。

此外,修改 model 的 classifier 部分,变成了两层全连接层:

self.classifier = nn.Sequential( nn.Dropout(dropout),

nn.Linear(hidden_dim, 64),

nn.Dropout(dropout),

nn.Linear(64, 1),

nn.Sigmoid() )

完整代码如下:

# 设置后可以过滤一些无用的warning

import warnings

warnings.filterwarnings('ignore')

# utils.py

# 用来定义一些之后常用到的函数

import torch

import numpy as np

import pandas as pd

import torch.optim as optim

import torch.nn.functional as F

def load_training_data(path='training_label.txt'):

# 读取 training 需要的数据

# 如果是 'training_label.txt',需要读取 label,如果是 'training_nolabel.txt',不需要读取 label

if 'training_label' in path:

with open(path, 'r') as f:

lines = f.readlines()

# lines是二维数组,第一维是行line(按回车分割),第二维是每行的单词(按空格分割)

lines = [line.strip('\n').split(' ') for line in lines]

# 每行按空格分割后,第2个符号之后都是句子的单词

x = [line[2:] for line in lines]

# 每行按空格分割后,第0个符号是label

y = [line[0] for line in lines]

return x, y

else:

with open(path, 'r') as f:

lines = f.readlines()

# lines是二维数组,第一维是行line(按回车分割),第二维是每行的单词(按空格分割)

x = [line.strip('\n').split(' ') for line in lines]

return x

def load_testing_data(path='testing_data'):

# 读取 testing 需要的数据

with open(path, 'r') as f:

lines = f.readlines()

# 第0行是表头,从第1行开始是数据

# 第0列是id,第1列是文本,按逗号分割,需要逗号之后的文本

X = ["".join(line.strip('\n').split(",")[1:]).strip() for line in lines[1:]]

X = [sen.split(' ') for sen in X]

return X

def evaluation(outputs, labels):

# outputs => 预测值,概率(float)

# labels => 真实值,标签(0或1)

outputs[outputs>=0.5] = 1 # 大于等于 0.5 为正面

outputs[outputs<0.5] = 0 # 小于 0.5 为负面

accuracy = torch.sum(torch.eq(outputs, labels)).item()

return accuracy

from gensim.models import Word2Vec

def train_word2vec(x):

# 训练 word to vector 的 word embedding

# window:滑动窗口的大小,min_count:过滤掉语料中出现频率小于min_count的词

model = Word2Vec(x, size=256, window=5, min_count=5, workers=12, iter=10, sg=1)

return model

# 读取 training 数据

print("loading training data ...")

train_x, y = load_training_data('training_label.txt')

train_x_no_label = load_training_data('training_nolabel.txt')

# 读取 testing 数据

print("loading testing data ...")

test_x = load_testing_data('testing_data.txt')

# 把 training 中的 word 变成 vector

model = train_word2vec(train_x + train_x_no_label + test_x) # w2v_all

# model = train_word2vec(train_x + test_x) # w2v

# 保存 vector

print("saving model ...")

model.save('w2v_all.model')

# model.save('w2v.model')

# 数据预处理

class Preprocess():

def __init__(self, sen_len, w2v_path):

self.w2v_path = w2v_path # word2vec的存储路径

self.sen_len = sen_len # 句子的固定长度

self.idx2word = []

self.word2idx = {}

self.embedding_matrix = []

def get_w2v_model(self):

# 读取之前训练好的 word2vec

self.embedding = Word2Vec.load(self.w2v_path)

self.embedding_dim = self.embedding.vector_size

def add_embedding(self, word):

# 这里的 word 只会是 "" 或 ""

# 把一个随机生成的表征向量 vector 作为 "" 或 "" 的嵌入

vector = torch.empty(1, self.embedding_dim)

torch.nn.init.uniform_(vector)

# 它的 index 是 word2idx 这个词典的长度,即最后一个

self.word2idx[word] = len(self.word2idx)

self.idx2word.append(word)

self.embedding_matrix = torch.cat([self.embedding_matrix, vector], 0)

def make_embedding(self, load=True):

print("Get embedding ...")

# 获取训练好的 Word2vec word embedding

if load:

print("loading word to vec model ...")

self.get_w2v_model()

else:

raise NotImplementedError

# 遍历嵌入后的单词

for i, word in enumerate(self.embedding.wv.vocab):

print('get words #{}'.format(i+1), end='\r')

# 新加入的 word 的 index 是 word2idx 这个词典的长度,即最后一个

self.word2idx[word] = len(self.word2idx)

self.idx2word.append(word)

self.embedding_matrix.append(self.embedding[word])

print('')

# 把 embedding_matrix 变成 tensor

self.embedding_matrix = torch.tensor(self.embedding_matrix)

# 将 和 加入 embedding

self.add_embedding("" )

self.add_embedding("" )

print("total words: {}".format(len(self.embedding_matrix)))

return self.embedding_matrix

def pad_sequence(self, sentence):

# 将每个句子变成一样的长度,即 sen_len 的长度

if len(sentence) > self.sen_len:

# 如果句子长度大于 sen_len 的长度,就截断

sentence = sentence[:self.sen_len]

else:

# 如果句子长度小于 sen_len 的长度,就补上 符号,缺多少个单词就补多少个

pad_len = self.sen_len - len(sentence)

for _ in range(pad_len):

sentence.append(self.word2idx["" ])

assert len(sentence) == self.sen_len

return sentence

def sentence_word2idx(self, sentences):

# 把句子里面的字变成相对应的 index

sentence_list = []

for i, sen in enumerate(sentences):

print('sentence count #{}'.format(i+1), end='\r')

sentence_idx = []

for word in sen:

if (word in self.word2idx.keys()):

sentence_idx.append(self.word2idx[word])

else:

# 没有出现过的单词就用 表示

sentence_idx.append(self.word2idx["" ])

# 将每个句子变成一样的长度

sentence_idx = self.pad_sequence(sentence_idx)

sentence_list.append(sentence_idx)

return torch.LongTensor(sentence_list)

def labels_to_tensor(self, y):

# 把 labels 转成 tensor

y = [int(label) for label in y]

return torch.LongTensor(y)

def get_pad(self):

return self.word2idx["" ]

from torch.utils.data import DataLoader, Dataset

class TwitterDataset(Dataset):

"""

Expected data shape like:(data_num, data_len)

Data can be a list of numpy array or a list of lists

input data shape : (data_num, seq_len, feature_dim)

__len__ will return the number of data

"""

def __init__(self, X, y):

self.data = X

self.label = y

def __getitem__(self, idx):

if self.label is None: return self.data[idx]

return self.data[idx], self.label[idx]

def __len__(self):

return len(self.data)

from torch import nn

class LSTM_Net(nn.Module):

def __init__(self, embedding, embedding_dim, hidden_dim, num_layers, dropout=0.5, fix_embedding=True):

super(LSTM_Net, self).__init__()

# embedding layer

self.embedding = torch.nn.Embedding(embedding.size(0),embedding.size(1))

self.embedding.weight = torch.nn.Parameter(embedding)

# 是否将 embedding 固定住,如果 fix_embedding 为 False,在训练过程中,embedding 也会跟着被训练

self.embedding.weight.requires_grad = False if fix_embedding else True

self.embedding_dim = embedding.size(1)

self.hidden_dim = hidden_dim

self.num_layers = num_layers

self.dropout = dropout

self.lstm = nn.LSTM(embedding_dim, hidden_dim, num_layers=num_layers, batch_first=True)

self.classifier = nn.Sequential( nn.Dropout(dropout),

nn.Linear(hidden_dim, 64),

nn.Dropout(dropout),

nn.Linear(64, 1),

nn.Sigmoid() )

def forward(self, inputs):

inputs = self.embedding(inputs)

x, _ = self.lstm(inputs, None)

# x 的 dimension (batch, seq_len, hidden_size)

# 取用 LSTM 最后一层的 hidden state 丢到分类器中

x = x[:, -1, :]

x = self.classifier(x)

return x

def add_label(outputs, threshold=0.9):

id = (outputs>=threshold) | (outputs<1-threshold)

outputs[outputs>=threshold] = 1 # 大于等于 threshold 为正面

outputs[outputs<1-threshold] = 0 # 小于 threshold 为负面

return outputs.long(), id

def training(batch_size, n_epoch, lr, X_train, y_train, val_loader, train_x_no_label, model, device):

# 输出模型总的参数数量、可训练的参数数量

total = sum(p.numel() for p in model.parameters())

trainable = sum(p.numel() for p in model.parameters() if p.requires_grad)

print('\nstart training, parameter total:{}, trainable:{}\n'.format(total, trainable))

loss = nn.BCELoss() # 定义损失函数为二元交叉熵损失 binary cross entropy loss

optimizer = optim.Adam(model.parameters(), lr=lr) # optimizer用Adam,设置适当的学习率lr

total_loss, total_acc, best_acc = 0, 0, 0

for epoch in range(n_epoch):

print(X_train.shape)

train_dataset = TwitterDataset(X=X_train, y=y_train)

train_loader = DataLoader(train_dataset, batch_size = batch_size, shuffle = True, num_workers = 0)

total_loss, total_acc = 0, 0

# training

model.train() # 将 model 的模式设为 train,这样 optimizer 就可以更新 model 的参数

for i, (inputs, labels) in enumerate(train_loader):

inputs = inputs.to(device, dtype=torch.long) # 因为 device 为 "cuda",将 inputs 转成 torch.cuda.LongTensor

labels = labels.to(device, dtype=torch.float) # 因为 device 为 "cuda",将 labels 转成 torch.cuda.FloatTensor,loss()需要float

optimizer.zero_grad() # 由于 loss.backward() 的 gradient 会累加,所以每一个 batch 后需要归零

outputs = model(inputs) # 模型输入Input,输出output

outputs = outputs.squeeze() # 去掉最外面的 dimension,好让 outputs 可以丢进 loss()

batch_loss = loss(outputs, labels) # 计算模型此时的 training loss

batch_loss.backward() # 计算 loss 的 gradient

optimizer.step() # 更新模型参数

accuracy = evaluation(outputs, labels) # 计算模型此时的 training accuracy

total_acc += (accuracy / batch_size)

total_loss += batch_loss.item()

print('Epoch | {}/{}'.format(epoch+1,n_epoch))

t_batch = len(train_loader)

print('Train | Loss:{:.5f} Acc: {:.3f}'.format(total_loss/t_batch, total_acc/t_batch*100))

model.eval() # 将 model 的模式设为 eval,这样 model 的参数就会被固定住

# self-training

if epoch >= 4 :

train_no_label_dataset = TwitterDataset(X=train_x_no_label, y=None)

train_no_label_loader = DataLoader(train_no_label_dataset, batch_size = batch_size, shuffle = False, num_workers = 0)

train_x_no_label_tmp = torch.Tensor([[]])

with torch.no_grad():

for i, (inputs) in enumerate(train_no_label_loader):

inputs = inputs.to(device, dtype=torch.long) # 因为 device 为 "cuda",将 inputs 转成 torch.cuda.LongTensor

outputs = model(inputs) # 模型输入Input,输出output

outputs = outputs.squeeze() # 去掉最外面的 dimension,好让 outputs 可以丢进 loss()

labels, id = add_label(outputs)

# 加入新标注的数据

X_train = torch.cat((X_train.to(device), inputs[id]), dim=0)

y_train = torch.cat((y_train.to(device), labels[id]), dim=0)

if i == 0:

train_x_no_label = inputs[~id]

else:

train_x_no_label = torch.cat((train_x_no_label.to(device), inputs[~id]), dim=0)

# validation

if val_loader is None:

torch.save(model, "ckpt.model")

else:

with torch.no_grad():

total_loss, total_acc = 0, 0

for i, (inputs, labels) in enumerate(val_loader):

inputs = inputs.to(device, dtype=torch.long) # 因为 device 为 "cuda",将 inputs 转成 torch.cuda.LongTensor

labels = labels.to(device, dtype=torch.float) # 因为 device 为 "cuda",将 labels 转成 torch.cuda.FloatTensor,loss()需要float

outputs = model(inputs) # 模型输入Input,输出output

outputs = outputs.squeeze() # 去掉最外面的 dimension,好让 outputs 可以丢进 loss()

batch_loss = loss(outputs, labels) # 计算模型此时的 training loss

accuracy = evaluation(outputs, labels) # 计算模型此时的 training accuracy

total_acc += (accuracy / batch_size)

total_loss += batch_loss.item()

v_batch = len(val_loader)

print("Valid | Loss:{:.5f} Acc: {:.3f} ".format(total_loss/v_batch, total_acc/v_batch*100))

if total_acc > best_acc:

# 如果 validation 的结果优于之前所有的結果,就把当下的模型保存下来,用于之后的testing

best_acc = total_acc

torch.save(model, "ckpt.model")

print('-----------------------------------------------')

from sklearn.model_selection import train_test_split

# 通过 torch.cuda.is_available() 的值判断是否可以使用 GPU ,如果可以的话 device 就设为 "cuda",没有的话就设为 "cpu"

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# 定义句子长度、要不要固定 embedding、batch 大小、要训练几个 epoch、 学习率的值、 w2v的路径

sen_len = 20

fix_embedding = True # fix embedding during training

batch_size = 128

epoch = 11

lr = 8e-4

w2v_path = 'w2v_all.model'

print("loading data ...") # 读取 'training_label.txt' 'training_nolabel.txt'

train_x, y = load_training_data('training_label.txt')

train_x_no_label = load_training_data('training_nolabel.txt')

# 对 input 跟 labels 做预处理

preprocess = Preprocess(sen_len, w2v_path=w2v_path)

embedding = preprocess.make_embedding(load=True)

train_x = preprocess.sentence_word2idx(train_x)

y = preprocess.labels_to_tensor(y)

train_x_no_label = preprocess.sentence_word2idx(train_x_no_label)

# 把 data 分为 training data 和 validation data(将一部分 training data 作为 validation data)

X_train, X_val, y_train, y_val = train_test_split(train_x, y, test_size = 0.1, random_state = 1, stratify = y)

print('Train | Len:{} \nValid | Len:{}'.format(len(y_train), len(y_val)))

val_dataset = TwitterDataset(X=X_val, y=y_val)

val_loader = DataLoader(val_dataset, batch_size = batch_size, shuffle = False, num_workers = 0)

# 定义模型

model = LSTM_Net(embedding, embedding_dim=256, hidden_dim=128, num_layers=1, dropout=0.5, fix_embedding=fix_embedding)

model = model.to(device) # device为 "cuda",model 使用 GPU 来训练(inputs 也需要是 cuda tensor)

# 开始训练

# training(batch_size, epoch, lr, X_train, y_train, val_loader, train_x_no_label, model, device)

training(batch_size, epoch, lr, train_x, y, None, train_x_no_label, model, device)

def testing(batch_size, test_loader, model, device):

model.eval() # 将 model 的模式设为 eval,这样 model 的参数就会被固定住

ret_output = [] # 返回的output

with torch.no_grad():

for i, inputs in enumerate(test_loader):

inputs = inputs.to(device, dtype=torch.long)

outputs = model(inputs)

outputs = outputs.squeeze()

outputs[outputs>=0.5] = 1 # 大于等于0.5为正面

outputs[outputs<0.5] = 0 # 小于0.5为负面

ret_output += outputs.int().tolist()

return ret_output

# 测试模型并作预测

# 读取测试数据test_x

print("loading testing data ...")

test_x = load_testing_data('testing_data.txt')

# 对test_x作预处理

test_x = preprocess.sentence_word2idx(test_x)

test_dataset = TwitterDataset(X=test_x, y=None)

test_loader = DataLoader(test_dataset, batch_size = batch_size, shuffle = False, num_workers = 0)

# 读取模型

print('\nload model ...')

model = torch.load('ckpt.model')

# 测试模型

outputs = testing(batch_size, test_loader, model, device)

# 保存为 csv

tmp = pd.DataFrame({"id":[str(i) for i in range(len(test_x))],"label":outputs})

print("save csv ...")

tmp.to_csv('predict.csv', index=False)

print("Finish Predicting")

Public Score :0.81251

Private Score:0.81409

修改3:去除标点符号 + Bi-LSTM+ Attention

利用 re 库去除 .,?!' 等标点符号和数字 0-9

原始代码:

def load_training_data(path='training_label.txt'):

# 读取 training 需要的数据

# 如果是 'training_label.txt',需要读取 label,如果是 'training_nolabel.txt',不需要读取 label

if 'training_label' in path:

with open(path, 'r') as f:

lines = f.readlines()

# lines是二维数组,第一维是行line(按回车分割),第二维是每行的单词(按空格分割)

lines = [line.strip('\n').split(' ') for line in lines]

# 每行按空格分割后,第2个符号之后都是句子的单词

x = [line[2:] for line in lines]

# 每行按空格分割后,第0个符号是label

y = [line[0] for line in lines]

return x, y

else:

with open(path, 'r') as f:

lines = f.readlines()

# lines是二维数组,第一维是行line(按回车分割),第二维是每行的单词(按空格分割)

x = [line.strip('\n').split(' ') for line in lines]

return x

def load_testing_data(path='testing_data'):

# 读取 testing 需要的数据

with open(path, 'r') as f:

lines = f.readlines()

# 第0行是表头,从第1行开始是数据

# 第0列是id,第1列是文本,按逗号分割,需要逗号之后的文本

X = ["".join(line.strip('\n').split(",")[1:]).strip() for line in lines[1:]]

X = [sen.split(' ') for sen in X]

return X

修改为:

def load_training_data(path='training_label.txt'):

# 读取 training 需要的数据

# 如果是 'training_label.txt',需要读取 label,如果是 'training_nolabel.txt',不需要读取 label

if 'training_label' in path:

with open(path, 'r', encoding='UTF-8') as f:

lines = f.readlines()

# lines是二维数组,第一维是行line(按回车分割),第二维是每行的单词(按空格分割)

lines = [line.strip('\n') for line in lines]

# 每行按空格分割后,第2个符号之后都是句子的单词

x = [line[10:] for line in lines]

x = [re.sub(r"([.!?,'])", r"", s) for s in x]

x = [' '.join(s.split()) for s in x]

x = [s.split() for s in x]

# 每行按空格分割后,第0个符号是label

y = [line[0] for line in lines]

return x, y

else:

with open(path, 'r', encoding='UTF-8') as f:

lines = f.readlines()

# lines是二维数组,第一维是行line(按回车分割),第二维是每行的单词(按空格分割)

x = [line.strip('\n') for line in lines]

x = [re.sub(r"([.!?,'])", r"", s) for s in x]

x = [' '.join(s.split()) for s in x]

x = [s.split() for s in x]

return x

def load_testing_data(path='testing_data'):

# 读取 testing 需要的数据

with open(path, 'r', encoding='UTF-8') as f:

lines = f.readlines()

# 第0行是表头,从第1行开始是数据

# 第0列是id,第1列是文本,按逗号分割,需要逗号之后的文本

X = ["".join(line.strip('\n').split(",")[1:]).strip() for line in lines[1:]]

X = [re.sub(r"([.!?,'])", r"", s) for s in X]

X = [' '.join(s.split()) for s in X]

X = [s.split() for s in X]

return X

模型用到了双向的 LSTM 模型和注意力机制,模型定义如下:

class Atten_BiLSTM(nn.Module):

def __init__(self, embedding, embedding_dim, hidden_dim, num_layers, dropout=0.5, fix_embedding=True):

super(Atten_BiLSTM, self).__init__()

# embedding layer

self.embedding = torch.nn.Embedding(embedding.size(0), embedding.size(1))

self.embedding.weight = torch.nn.Parameter(embedding)

# 是否将 embedding 固定住,如果 fix_embedding 为 False,在训练过程中,embedding 也会跟着被训练

self.embedding.weight.requires_grad = False if fix_embedding else True

self.embedding_dim = embedding.size(1)

self.hidden_dim = hidden_dim

self.num_layers = num_layers

self.dropout = nn.Dropout(dropout)

self.lstm = nn.LSTM(embedding_dim, hidden_dim, num_layers=num_layers, batch_first=True, bidirectional=True)

self.classifier = nn.Sequential(nn.Dropout(dropout),

nn.Linear(hidden_dim, 64),

nn.Dropout(dropout),

nn.Linear(64, 32),

nn.Dropout(dropout),

nn.Linear(32, 16),

nn.Dropout(dropout),

nn.Linear(16, 1),

nn.Sigmoid())

self.attention_layer = nn.Sequential(

nn.Linear(hidden_dim, hidden_dim),

nn.ReLU()

)

def attention(self, output, hidden):

# output (batch_size, seq_len, hidden_size * num_direction)

# hidden (batch_size, num_layers * num_direction, hidden_size)

output = output[:,:,:self.hidden_dim] + output[:,:,self.hidden_dim:] # (batch_size, seq_len, hidden_size)

hidden = torch.sum(hidden, dim=1)

hidden = hidden.unsqueeze(1) # (batch_size, 1, hidden_size)

atten_w = self.attention_layer(hidden) # (batch_size, 1, hidden_size)

m = nn.Tanh()(output) # (batch_size, seq_len, hidden_size)

atten_context = torch.bmm(atten_w, m.transpose(1, 2))

softmax_w = F.softmax(atten_context, dim=-1)

context = torch.bmm(softmax_w, output)

return context.squeeze(1)

def forward(self, inputs):

inputs = self.embedding(inputs)

# x (batch, seq_len, hidden_size)

# hidden (num_layers *num_direction, batch_size, hidden_size)

x, (hidden, _) = self.lstm(inputs, None)

hidden = hidden.permute(1, 0, 2) # (batch_size, num_layers *num_direction, hidden_size)

# atten_out [batch_size, 1, hidden_dim]

atten_out = self.attention(x, hidden)

return self.classifier(atten_out)

完整代码如下(注:本段代码需要较大的GPU存储空间,建议至少 12G 显存):

# 设置后可以过滤一些无用的warning

import warnings

warnings.filterwarnings('ignore')

# utils.py

# 用来定义一些之后常用到的函数

import torch

import numpy as np

import pandas as pd

import torch.optim as optim

import torch.nn.functional as F

from gensim.models import Word2Vec

from torch.autograd import Variable

from torch import nn

import re

def load_training_data(path='training_label.txt'):

# 读取 training 需要的数据

# 如果是 'training_label.txt',需要读取 label,如果是 'training_nolabel.txt',不需要读取 label

if 'training_label' in path:

with open(path, 'r', encoding='UTF-8') as f:

lines = f.readlines()

# lines是二维数组,第一维是行line(按回车分割),第二维是每行的单词(按空格分割)

lines = [line.strip('\n') for line in lines]

# 每行按空格分割后,第2个符号之后都是句子的单词

x = [line[10:] for line in lines]

x = [re.sub(r"([.!?,'])", r"", s) for s in x]

x = [' '.join(s.split()) for s in x]

x = [s.split() for s in x]

# 每行按空格分割后,第0个符号是label

y = [line[0] for line in lines]

return x, y

else:

with open(path, 'r', encoding='UTF-8') as f:

lines = f.readlines()

# lines是二维数组,第一维是行line(按回车分割),第二维是每行的单词(按空格分割)

x = [line.strip('\n') for line in lines]

x = [re.sub(r"([.!?,'])", r"", s) for s in x]

x = [' '.join(s.split()) for s in x]

x = [s.split() for s in x]

return x

def load_testing_data(path='testing_data'):

# 读取 testing 需要的数据

with open(path, 'r', encoding='UTF-8') as f:

lines = f.readlines()

# 第0行是表头,从第1行开始是数据

# 第0列是id,第1列是文本,按逗号分割,需要逗号之后的文本

X = ["".join(line.strip('\n').split(",")[1:]).strip() for line in lines[1:]]

X = [re.sub(r"([.!?,'])", r"", s) for s in X]

X = [' '.join(s.split()) for s in X]

X = [s.split() for s in X]

return X

def evaluation(outputs, labels):

# outputs => 预测值,概率(float)

# labels => 真实值,标签(0或1)

outputs[outputs>=0.5] = 1 # 大于等于 0.5 为正面

outputs[outputs<0.5] = 0 # 小于 0.5 为负面

accuracy = torch.sum(torch.eq(outputs, labels)).item()

return accuracy

def train_word2vec(x):

# 训练 word to vector 的 word embedding

# window:滑动窗口的大小,min_count:过滤掉语料中出现频率小于min_count的词

model = Word2Vec(x, size=256, window=5, min_count=5, workers=12, iter=10, sg=1)

return model

# 读取 training 数据

print("loading training data ...")

train_x, y = load_training_data('training_label.txt')

train_x_no_label = load_training_data('training_nolabel.txt')

# 读取 testing 数据

print("loading testing data ...")

test_x = load_testing_data('testing_data.txt')

# 把 training 中的 word 变成 vector

model = train_word2vec(train_x + train_x_no_label + test_x) # w2v_all

# model = train_word2vec(train_x + test_x) # w2v

# 保存 vector

print("saving model ...")

model.save('w2v_all.model')

# model.save('w2v.model')

# 数据预处理

class Preprocess():

def __init__(self, sen_len, w2v_path):

self.w2v_path = w2v_path # word2vec的存储路径

self.sen_len = sen_len # 句子的固定长度

self.idx2word = []

self.word2idx = {}

self.embedding_matrix = []

def get_w2v_model(self):

# 读取之前训练好的 word2vec

self.embedding = Word2Vec.load(self.w2v_path)

self.embedding_dim = self.embedding.vector_size

def add_embedding(self, word):

# 这里的 word 只会是 "" 或 ""

# 把一个随机生成的表征向量 vector 作为 "" 或 "" 的嵌入

vector = torch.empty(1, self.embedding_dim)

torch.nn.init.uniform_(vector)

# 它的 index 是 word2idx 这个词典的长度,即最后一个

self.word2idx[word] = len(self.word2idx)

self.idx2word.append(word)

self.embedding_matrix = torch.cat([self.embedding_matrix, vector], 0)

def make_embedding(self, load=True):

print("Get embedding ...")

# 获取训练好的 Word2vec word embedding

if load:

print("loading word to vec model ...")

self.get_w2v_model()

else:

raise NotImplementedError

# 遍历嵌入后的单词

for i, word in enumerate(self.embedding.wv.vocab):

print('get words #{}'.format(i+1), end='\r')

# 新加入的 word 的 index 是 word2idx 这个词典的长度,即最后一个

self.word2idx[word] = len(self.word2idx)

self.idx2word.append(word)

self.embedding_matrix.append(self.embedding[word])

print('')

# 把 embedding_matrix 变成 tensor

self.embedding_matrix = torch.tensor(self.embedding_matrix)

# 将 和 加入 embedding

self.add_embedding("" )

self.add_embedding("" )

print("total words: {}".format(len(self.embedding_matrix)))

return self.embedding_matrix

def pad_sequence(self, sentence):

# 将每个句子变成一样的长度,即 sen_len 的长度

if len(sentence) > self.sen_len:

# 如果句子长度大于 sen_len 的长度,就截断

sentence = sentence[:self.sen_len]

else:

# 如果句子长度小于 sen_len 的长度,就补上 符号,缺多少个单词就补多少个

pad_len = self.sen_len - len(sentence)

for _ in range(pad_len):

sentence.append(self.word2idx["" ])

assert len(sentence) == self.sen_len

return sentence

def sentence_word2idx(self, sentences):

# 把句子里面的字变成相对应的 index

sentence_list = []

for i, sen in enumerate(sentences):

print('sentence count #{}'.format(i+1), end='\r')

sentence_idx = []

for word in sen:

if (word in self.word2idx.keys()):

sentence_idx.append(self.word2idx[word])

else:

# 没有出现过的单词就用 表示

sentence_idx.append(self.word2idx["" ])

# 将每个句子变成一样的长度

sentence_idx = self.pad_sequence(sentence_idx)

sentence_list.append(sentence_idx)

return torch.LongTensor(sentence_list)

def labels_to_tensor(self, y):

# 把 labels 转成 tensor

y = [int(label) for label in y]

return torch.LongTensor(y)

from torch.utils.data import DataLoader, Dataset

class TwitterDataset(Dataset):

"""

Expected data shape like:(data_num, data_len)

Data can be a list of numpy array or a list of lists

input data shape : (data_num, seq_len, feature_dim)

__len__ will return the number of data

"""

def __init__(self, X, y):

self.data = X

self.label = y

def __getitem__(self, idx):

if self.label is None: return self.data[idx]

return self.data[idx], self.label[idx]

def __len__(self):

return len(self.data)

class Atten_BiLSTM(nn.Module):

def __init__(self, embedding, embedding_dim, hidden_dim, num_layers, dropout=0.5, fix_embedding=True):

super(Atten_BiLSTM, self).__init__()

# embedding layer

self.embedding = torch.nn.Embedding(embedding.size(0), embedding.size(1))

self.embedding.weight = torch.nn.Parameter(embedding)

# 是否将 embedding 固定住,如果 fix_embedding 为 False,在训练过程中,embedding 也会跟着被训练

self.embedding.weight.requires_grad = False if fix_embedding else True

self.embedding_dim = embedding.size(1)

self.hidden_dim = hidden_dim

self.num_layers = num_layers

self.dropout = nn.Dropout(dropout)

self.lstm = nn.LSTM(embedding_dim, hidden_dim, num_layers=num_layers, batch_first=True, bidirectional=True)

self.classifier = nn.Sequential(nn.Dropout(dropout),

nn.Linear(hidden_dim, 64),

nn.Dropout(dropout),

nn.Linear(64, 32),

nn.Dropout(dropout),

nn.Linear(32, 16),

nn.Dropout(dropout),

nn.Linear(16, 1),

nn.Sigmoid())

self.attention_layer = nn.Sequential(

nn.Linear(hidden_dim, hidden_dim),

nn.ReLU()

)

def attention(self, output, hidden):

# output (batch_size, seq_len, hidden_size * num_direction)

# hidden (batch_size, num_layers * num_direction, hidden_size)

output = output[:,:,:self.hidden_dim] + output[:,:,self.hidden_dim:] # (batch_size, seq_len, hidden_size)

hidden = torch.sum(hidden, dim=1)

hidden = hidden.unsqueeze(1) # (batch_size, 1, hidden_size)

atten_w = self.attention_layer(hidden) # (batch_size, 1, hidden_size)

m = nn.Tanh()(output) # (batch_size, seq_len, hidden_size)

atten_context = torch.bmm(atten_w, m.transpose(1, 2))

softmax_w = F.softmax(atten_context, dim=-1)

context = torch.bmm(softmax_w, output)

return context.squeeze(1)

def forward(self, inputs):

inputs = self.embedding(inputs)

# x (batch, seq_len, hidden_size)

# hidden (num_layers *num_direction, batch_size, hidden_size)

x, (hidden, _) = self.lstm(inputs, None)

hidden = hidden.permute(1, 0, 2) # (batch_size, num_layers *num_direction, hidden_size)

# atten_out [batch_size, 1, hidden_dim]

atten_out = self.attention(x, hidden)

return self.classifier(atten_out)

def add_label(outputs, threshold=0.9):

id = (outputs>=threshold) | (outputs<1-threshold)

outputs[outputs>=threshold] = 1 # 大于等于 threshold 为正面

outputs[outputs<1-threshold] = 0 # 小于 threshold 为负面

return outputs.long(), id

def training(batch_size, n_epoch, lr, X_train, y_train, val_loader, train_x_no_label, model, device):

# 输出模型总的参数数量、可训练的参数数量

total = sum(p.numel() for p in model.parameters())

trainable = sum(p.numel() for p in model.parameters() if p.requires_grad)

print('\nstart training, parameter total:{}, trainable:{}\n'.format(total, trainable))

loss = nn.BCELoss() # 定义损失函数为二元交叉熵损失 binary cross entropy loss

optimizer = optim.Adam(model.parameters(), lr=lr) # optimizer用Adam,设置适当的学习率lr

total_loss, total_acc, best_acc = 0, 0, 0

start_epoch = 5

for epoch in range(n_epoch):

print(X_train.shape)

train_dataset = TwitterDataset(X=X_train, y=y_train)

train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True, num_workers=0)

total_loss, total_acc = 0, 0

# training

model.train() # 将 model 的模式设为 train,这样 optimizer 就可以更新 model 的参数

for i, (inputs, labels) in enumerate(train_loader):

inputs = inputs.to(device, dtype=torch.long) # 因为 device 为 "cuda",将 inputs 转成 torch.cuda.LongTensor

labels = labels.to(device,

dtype=torch.float) # 因为 device 为 "cuda",将 labels 转成 torch.cuda.FloatTensor,loss()需要float

optimizer.zero_grad() # 由于 loss.backward() 的 gradient 会累加,所以每一个 batch 后需要归零

outputs = model(inputs) # 模型输入Input,输出output

outputs = outputs.squeeze() # 去掉最外面的 dimension,好让 outputs 可以丢进 loss()

batch_loss = loss(outputs, labels) # 计算模型此时的 training loss

batch_loss.backward() # 计算 loss 的 gradient

optimizer.step() # 更新模型参数

accuracy = evaluation(outputs, labels) # 计算模型此时的 training accuracy

total_acc += (accuracy / batch_size)

total_loss += batch_loss.item()

print('Epoch | {}/{}'.format(epoch + 1, n_epoch))

t_batch = len(train_loader)

print('Train | Loss:{:.5f} Acc: {:.3f}'.format(total_loss / t_batch, total_acc / t_batch * 100))

model.eval() # 将 model 的模式设为 eval,这样 model 的参数就会被固定住

# self-training

if epoch >= start_epoch:

train_no_label_dataset = TwitterDataset(X=train_x_no_label, y=None)

train_no_label_loader = DataLoader(train_no_label_dataset, batch_size=batch_size, shuffle=False,

num_workers=0)

with torch.no_grad():

for i, (inputs) in enumerate(train_no_label_loader):

inputs = inputs.to(device, dtype=torch.long) # 因为 device 为 "cuda",将 inputs 转成 torch.cuda.LongTensor

outputs = model(inputs) # 模型输入Input,输出output

outputs = outputs.squeeze() # 去掉最外面的 dimension,好让 outputs 可以丢进 loss()

labels, id = add_label(outputs)

# 加入新标注的数据

X_train = torch.cat((X_train.to(device), inputs[id]), dim=0)

y_train = torch.cat((y_train.to(device), labels[id]), dim=0)

if i == 0:

train_x_no_label = inputs[~id]

else:

train_x_no_label = torch.cat((train_x_no_label.to(device), inputs[~id]), dim=0)

# validation

if val_loader is None:

torch.save(model, "ckpt.model")

else:

with torch.no_grad():

total_loss, total_acc = 0, 0

for i, (inputs, labels) in enumerate(val_loader):

inputs = inputs.to(device, dtype=torch.long) # 因为 device 为 "cuda",将 inputs 转成 torch.cuda.LongTensor

labels = labels.to(device,

dtype=torch.float) # 因为 device 为 "cuda",将 labels 转成 torch.cuda.FloatTensor,loss()需要float

outputs = model(inputs) # 模型输入Input,输出output

outputs = outputs.squeeze() # 去掉最外面的 dimension,好让 outputs 可以丢进 loss()

batch_loss = loss(outputs, labels) # 计算模型此时的 training loss

accuracy = evaluation(outputs, labels) # 计算模型此时的 training accuracy

total_acc += (accuracy / batch_size)

total_loss += batch_loss.item()

v_batch = len(val_loader)

print("Valid | Loss:{:.5f} Acc: {:.3f} ".format(total_loss / v_batch, total_acc / v_batch * 100))

if total_acc > best_acc:

# 如果 validation 的结果优于之前所有的結果,就把当下的模型保存下来,用于之后的testing

best_acc = total_acc

torch.save(model, "ckpt.model")

print('-----------------------------------------------')

from sklearn.model_selection import train_test_split

# 通过 torch.cuda.is_available() 的值判断是否可以使用 GPU ,如果可以的话 device 就设为 "cuda",没有的话就设为 "cpu"

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# 定义句子长度、要不要固定 embedding、batch 大小、要训练几个 epoch、 学习率的值、 w2v的路径

sen_len = 40

fix_embedding = True # fix embedding during training

batch_size = 128

epoch = 20

lr = 2e-3

w2v_path = 'w2v_all.model'

print("loading data ...") # 读取 'training_label.txt' 'training_nolabel.txt'

train_x, y = load_training_data('training_label.txt')

train_x_no_label = load_training_data('training_nolabel.txt')

# 对 input 跟 labels 做预处理

preprocess = Preprocess(sen_len, w2v_path=w2v_path)

embedding = preprocess.make_embedding(load=True)

train_x = preprocess.sentence_word2idx(train_x)

y = preprocess.labels_to_tensor(y)

train_x_no_label = preprocess.sentence_word2idx(train_x_no_label)

# 把 data 分为 training data 和 validation data(将一部分 training data 作为 validation data)

X_train, X_val, y_train, y_val = train_test_split(train_x, y, test_size = 0.1, random_state = 1, stratify = y)

print('Train | Len:{} \nValid | Len:{}'.format(len(y_train), len(y_val)))

val_dataset = TwitterDataset(X=X_val, y=y_val)

val_loader = DataLoader(val_dataset, batch_size = batch_size, shuffle = False, num_workers = 0)

# 定义模型

model = Atten_BiLSTM(embedding, embedding_dim=256, hidden_dim=128, num_layers=1, dropout=0.5, fix_embedding=fix_embedding)

model = model.to(device) # device为 "cuda",model 使用 GPU 来训练(inputs 也需要是 cuda tensor)

# 开始训练

training(batch_size, epoch, lr, X_train, y_train, val_loader, train_x_no_label, model, device)

# training(batch_size, epoch, lr, train_x, y, None, train_x_no_label, model, device)

def testing(batch_size, test_loader, model, device):

model.eval() # 将 model 的模式设为 eval,这样 model 的参数就会被固定住

ret_output = [] # 返回的output

with torch.no_grad():

for i, inputs in enumerate(test_loader):

inputs = inputs.to(device, dtype=torch.long)

outputs = model(inputs)

outputs = outputs.squeeze()

outputs[outputs >= 0.5] = 1 # 大于等于0.5为正面

outputs[outputs < 0.5] = 0 # 小于0.5为负面

ret_output += outputs.int().tolist()

return ret_output

# 测试模型并作预测

# 读取测试数据test_x

print("loading testing data ...")

test_x = load_testing_data('testing_data.txt')

# 对test_x作预处理

test_x = preprocess.sentence_word2idx(test_x)

test_dataset = TwitterDataset(X=test_x, y=None)

test_loader = DataLoader(test_dataset, batch_size=batch_size, shuffle=False, num_workers=0)

# 读取模型

print('\nload model ...')

model = torch.load('ckpt.model')

# 测试模型

outputs = testing(batch_size, test_loader, model, device)

# 保存为 csv

tmp = pd.DataFrame({"id": [str(i) for i in range(len(test_x))], "label": outputs})

print("save csv ...")

tmp.to_csv('predict.csv', index=False)

print("Finish Predicting")

Public Score:0.82281

Private Score:0.82330

汇总

| Public Score | Private Score | |

|---|---|---|

| baseline | 0.80391 | 0.80425 |

| 修改1 | 0.80838 | 0.80988 |

| 修改2 | 0.81251 | 0.81409 |

| 修改3 | 0.82281 | 0.82330 |