Transfer Learning with TensorFlow Hub in TensorFlow 2 (Using the flower_photos.tgz Dataset)

TensorFlow Hub shares many pretrained model components that you can reuse in your own models.

This example covers:

- Using TensorFlow Hub through tf.keras

- Performing an image classification task with TensorFlow Hub

- Doing simple transfer learning

1. Import the required libraries

import tensorflow as tf
import tensorflow_hub as hub
import matplotlib.pylab as plt
import numpy as np
from PIL import Image

for i in [tf, hub, np]:
    print(i.__name__, ": ", i.__version__, sep="")

Output:

tensorflow: 2.2.0
tensorflow_hub: 0.8.0
numpy: 1.17.4

2. ImageNet classifier

2.1 Download an ImageNet classifier from TensorFlow Hub

classifier_url = "https://hub.tensorflow.google.cn/google/tf2-preview/mobilenet_v2/classification/2"
image_shape = (224, 224)

classifier = tf.keras.Sequential([
    hub.KerasLayer(classifier_url, input_shape=image_shape + (3,))  # tuple concatenation: (224, 224) + (3,) = (224, 224, 3)
])

There is no output. No news is good news!
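The classifier declares input_shape=(224, 224, 3); Keras adds a leading batch dimension, so the model actually consumes arrays of shape (batch, 224, 224, 3). TF Hub image models such as this MobileNetV2 expect float pixel values in [0, 1]. The following is a minimal numpy sketch of preparing one image in that format, assuming an 8-bit RGB array that has already been resized; `prepare_image` is a hypothetical helper and the random image is only a stand-in for a real photo.

```python
import numpy as np

IMAGE_SHAPE = (224, 224)
INPUT_SHAPE = IMAGE_SHAPE + (3,)  # tuple concatenation -> (224, 224, 3)


def prepare_image(pixels: np.ndarray) -> np.ndarray:
    """Hypothetical helper: uint8 HxWx3 image -> float32 batch of one image in [0, 1]."""
    if pixels.shape != INPUT_SHAPE:
        raise ValueError(f"expected {INPUT_SHAPE}, got {pixels.shape}")
    scaled = pixels.astype(np.float32) / 255.0  # map 0..255 to 0.0..1.0
    return scaled[np.newaxis, ...]  # add the batch axis -> (1, 224, 224, 3)


# A synthetic 8-bit image stands in for a resized photo.
rng = np.random.default_rng(0)
fake = rng.integers(0, 256, size=INPUT_SHAPE).astype(np.uint8)
batch = prepare_image(fake)
print(batch.shape, batch.dtype)  # (1, 224, 224, 3) float32
```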

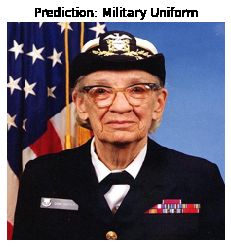

2.2 Download an image for prediction

grace_hopper = tf.keras.utils.get_file("image.jpg", "https://storage.googleapis.com/download.tensorflow.org/example_images/grace_hopper.jpg", cache_dir="./")
grace_hopper = Image.open(grace_hopper).resize(image_shape)
grace_hopper

Output:

Downloading data from https://storage.googleapis.com/download.tensorflow.org/example_images/grace_hopper.jpg
65536/61306 [================================] - 1s 23us/step

# Normalize pixel values to floats between 0 and 1
grace_hopper = np.array(grace_hopper) / 255.0
grace_hopper.shape

Output:

(224, 224, 3)

# Add a batch dimension: the model expects input of shape (batch, 224, 224, 3)
result = classifier.predict(grace_hopper[np.newaxis, ...])
result.shape

Output:

(1, 1001)

predicted_class = np.argmax(result[0], axis=-1)
predicted_class

Output:

653

2.3 Decode the prediction

Decode the predicted class index using the ImageNet labels.

# Download the ImageNet labels
labels_path = tf.keras.utils.get_file("ImageNetLabels.txt", "https://storage.googleapis.com/download.tensorflow.org/data/ImageNetLabels.txt", cache_dir="./")
imagenet_labels = np.array(open(labels_path).read().splitlines())

Output:

Downloading data from https://storage.googleapis.com/download.tensorflow.org/data/ImageNetLabels.txt
16384/10484 [==============================================] - 0s 27us/step

# Show the image with the predicted label name as its title
plt.imshow(grace_hopper)
plt.axis("off")
predicted_class_name = imagenet_labels[predicted_class]
_ = plt.title("Prediction: " + predicted_class_name.title())

Output:

The predicted label is military uniform, which is correct.
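The lookup imagenet_labels[predicted_class] works because ImageNetLabels.txt has 1001 lines: an extra "background" entry at index 0 followed by the 1000 ImageNet classes, matching the 1001 scores the model returns. A small numpy sketch extends the same idea from the single best guess to the top k guesses. The label list and scores below are made up for illustration, and `top_k_labels` is a hypothetical helper, not part of TensorFlow.

```python
import numpy as np


def top_k_labels(scores: np.ndarray, labels: np.ndarray, k: int = 5):
    """Hypothetical helper: return the k highest-scoring (label, score) pairs.

    scores: 1-D array of per-class scores for ONE image (e.g. result[0]).
    labels: 1-D array of label strings aligned with the score indices.
    """
    top_idx = np.argsort(scores)[::-1][:k]  # indices ordered from highest to lowest score
    return [(str(labels[i]), float(scores[i])) for i in top_idx]


# Toy stand-ins: index 0 is "background", mirroring the layout of ImageNetLabels.txt.
labels = np.array(["background", "tench", "goldfish", "military uniform", "daisy"])
scores = np.array([0.1, 1.5, -0.3, 9.2, 2.0])

print(top_k_labels(scores, labels, k=3))
# [('military uniform', 9.2), ('daisy', 2.0), ('tench', 1.5)]
```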

3. Predict directly with the pretrained model

3.1 Download the dataset

data_root = tf.keras.utils.get_file("flower_photos", "https://storage.googleapis.com/download.tensorflow.org/example_images/flower_photos.tgz", untar=True)
data_root

Output:

'C:\\Users\\my-pc\\.keras\\datasets\\flower_photos'

Load the data with tf.keras.preprocessing.image.ImageDataGenerator, using the rescale parameter to convert pixel values to floats between 0 and 1.

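image_generator = tf.keras.preprocessing.image.ImageDataGenerator(rescale=1./255)
image_data = image_generator.flow_from_directory(data_root, target_size=image_shape)

Output:

Found 3670 images belonging to 5 classes.

flow_from_directory uses a batch size of 32 by default (the code above overrides only target_size, so images are resized to 224×224 instead of the default 256×256). Its full signature with default arguments is: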

flow_from_directory(
    directory, target_size=(256, 256), color_mode='rgb', classes=None,
    class_mode='categorical', batch_size=32, shuffle=True, seed=None,
    save_to_dir=None, save_prefix='', save_format='png', follow_links=False,
    subset=None, interpolation='nearest'
)

for image_batch, label_batch in image_data:
    print("Image batch shape: ", image_batch.shape)
    print("Label batch shape: ", label_batch.shape)
    break

Output:

Image batch shape: (32, 224, 224, 3)
Label batch shape: (32, 5)

3.2 Predict on a batch of images

result_batch = classifier.predict(image_batch)

result_batch.shape输出:

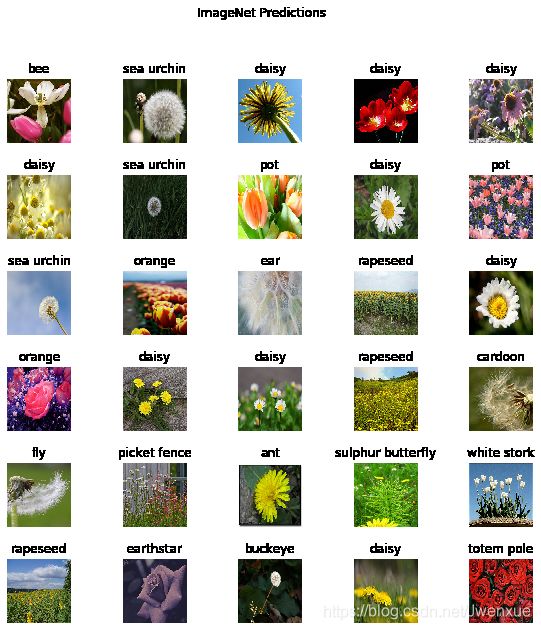

(32, 1001)3.3 可视化预测结果

predicted_class_names = imagenet_labels[np.argmax(result_batch, axis=-1)]
predicted_class_names

Output:

array(['bee', 'sea urchin', 'daisy', 'daisy', 'daisy', 'daisy',
       'sea urchin', 'pot', 'daisy', 'pot', 'sea urchin', 'orange', 'ear',
       'rapeseed', 'daisy', 'orange', 'daisy', 'daisy', 'rapeseed',
       'cardoon', 'fly', 'picket fence', 'ant', 'sulphur butterfly',
       'white stork', 'rapeseed', 'earthstar', 'buckeye', 'daisy',
       'totem pole', 'quill', 'daisy'], dtype='<U30')

plt.figure(figsize=(10, 10))
plt.subplots_adjust(hspace=0.5)
for n in range(30):
    plt.subplot(6, 5, n + 1)
    plt.imshow(image_batch[n])
    plt.title(predicted_class_names[n])
    plt.axis("off")
plt.suptitle("ImageNet Predictions")

Output:

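As the output shows, most of the predictions are wrong. At this point you might suspect a typo in the code or some other problem.

In fact, the model is working as intended: it was trained on the 1000 ImageNet classes, and that label set does not contain most of these flower categories (there is no rose, tulip, dandelion or sunflower class, for example). The classifier can therefore only answer with the closest ImageNet label it knows. Daisy is one of the few flowers that is an ImageNet class, which is why "daisy" appears so often among the predictions.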

4. Transfer learning

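As shown above, predicting directly with the ImageNet-trained model gives a high error rate on this dataset. This is where transfer learning comes in.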

4.1 Download a headless model

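Headless model: a model with the final classification layer removed, keeping only the earlier layers (convolutional layers and so on) that extract features.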

feature_extractor_url = "https://hub.tensorflow.google.cn/google/tf2-preview/mobilenet_v2/feature_vector/2"
feature_extractor_layer = hub.KerasLayer(feature_extractor_url, input_shape=(224, 224, 3))
feature_batch = feature_extractor_layer(image_batch)
feature_batch.shape

Output:

TensorShape([32, 1280])

The output shows that the headless model turns each image into a 1280-element feature vector, so this layer feeds 1280 features into the layer added after it.
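The new classification head built in 4.2 is a single Dense layer on top of these 1280 features. Without an activation it computes logits = features @ W + b, so for 5 flower classes it has 1280 × 5 weights plus 5 biases, i.e. 6405 trainable parameters, which is the Dense row reported by model.summary(). A numpy sketch of that computation, with random stand-in features and weights (not the trained ones):

```python
import numpy as np

rng = np.random.default_rng(42)

batch_size, num_features, num_classes = 32, 1280, 5

# Stand-in for feature_extractor_layer(image_batch): one 1280-d vector per image.
features = rng.standard_normal((batch_size, num_features)).astype(np.float32)

# Stand-in Dense-layer parameters (random here; learned during training in the tutorial).
W = rng.standard_normal((num_features, num_classes)).astype(np.float32) * 0.01
b = np.zeros(num_classes, dtype=np.float32)

logits = features @ W + b     # what a Dense layer without activation computes
num_params = W.size + b.size  # 1280 * 5 + 5

print(logits.shape)  # (32, 5)
print(num_params)    # 6405
```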

4.2 Build the new model

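Before adding a fully connected layer on top and training it, freeze the parameters of the downloaded headless model so that only the new layer is trained.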

feature_extractor_layer.trainable = False

model = tf.keras.Sequential([

feature_extractor_layer,

tf.keras.layers.Dense(image_data.num_classes)

])

model.compile(optimizer="adam",

loss=tf.keras.losses.CategoricalCrossentropy(from_logits=True),

metrics=["accuracy"])

model.summary()输出:

Model: "sequential_2"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

keras_layer_1 (KerasLayer) (None, 1280) 2257984

_________________________________________________________________

dense_1 (Dense) (None, 5) 6405

=================================================================

Total params: 2,264,389

Trainable params: 6,405

Non-trainable params: 2,257,984

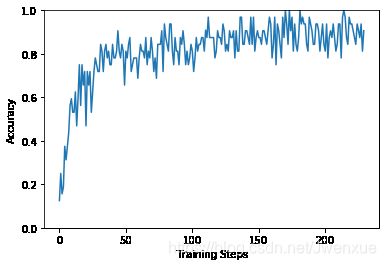

_________________________________________________________________4.3 训练模型

class CollectBatchStats(tf.keras.callbacks.Callback):
    """Record the loss and accuracy of every training batch."""

    def __init__(self):
        super().__init__()
        self.batch_losses = []
        self.batch_acc = []

    def on_train_batch_end(self, batch, logs=None):
        self.batch_losses.append(logs["loss"])
        self.batch_acc.append(logs["accuracy"])
        # Reset metrics so each logged value reflects one batch, not a running average
        self.model.reset_metrics()

steps_per_epoch = int(np.ceil(image_data.samples / image_data.batch_size))

batch_stats_callback = CollectBatchStats()

# model.fit accepts generators directly in TF 2.x; fit_generator is deprecated
history = model.fit(image_data, epochs=2,
                    steps_per_epoch=steps_per_epoch,
                    callbacks=[batch_stats_callback])

Output:

Epoch 1/2
115/115 [==============================] - 12s 104ms/step - loss: 0.3387 - accuracy: 0.8750
Epoch 2/2
115/115 [==============================] - 8s 69ms/step - loss: 0.2902 - accuracy: 0.9062

plt.figure()
plt.ylabel("Loss")
plt.xlabel("Training Steps")
plt.ylim([0, 2])
plt.plot(batch_stats_callback.batch_losses)

Output:

plt.figure()
plt.ylabel("Accuracy")
plt.xlabel("Training Steps")
plt.ylim([0, 1])
plt.plot(batch_stats_callback.batch_acc)

Output:

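The results show that after only two epochs of training, the model already reaches a good training accuracy (the progress log shows 0.9062 at the end of the second epoch).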
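The loss plotted during training is CategoricalCrossentropy(from_logits=True). Because the Dense layer has no softmax, the loss first turns the logits into probabilities with softmax and then takes the negative log-probability of the true class, averaged over the batch. A numpy sketch of that math (using the usual max-subtraction trick for numerical stability); it illustrates the formula and is not the Keras implementation:

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    """Row-wise softmax; subtracting the row max avoids overflow in exp."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def categorical_crossentropy_from_logits(one_hot: np.ndarray, logits: np.ndarray) -> float:
    """Batch mean of -sum(y_true * log(softmax(logits)))."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    return float(-(one_hot * log_probs).sum(axis=-1).mean())


# Two toy images, 5 classes (daisy, dandelion, roses, sunflowers, tulips).
logits = np.array([[2.0, 0.5, 0.1, -1.0, 0.0],
                   [0.0, 0.0, 0.0, 0.0, 0.0]])
one_hot = np.array([[1, 0, 0, 0, 0],
                    [0, 0, 1, 0, 0]], dtype=np.float64)

probs = softmax(logits)  # probabilities the loss implicitly works with
loss = categorical_crossentropy_from_logits(one_hot, logits)
print(probs.round(3))
print(round(loss, 4))
```

With all-zero logits (the second row) every class gets probability 1/5, so that row contributes log(5) to the sum.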

5. Predict with the new model

class_names = sorted(image_data.class_indices.items(),key=lambda pair:pair[1])

class_names = np.array([key.title() for key, value in class_names])

class_names输出:

array(['Daisy', 'Dandelion', 'Roses', 'Sunflowers', 'Tulips'],

dtype='predicted_batch = model.predict(image_batch)

predicted_id = np.argmax(predicted_batch,axis=-1)

predicted_label_batch = class_names[predicted_id]

# 可视化

label_id = np.argmax(label_batch, axis=-1)

plt.figure(figsize=(10,10))

plt.subplots_adjust(hspace=0.5)

for n in range(30):

plt.subplot(6,5,n+1)

plt.imshow(image_batch[n])

color = "green" if predicted_id[n] == label_id[n] else "red"

plt.title(predicted_label_batch[n].title(),color=color)

plt.axis("off")

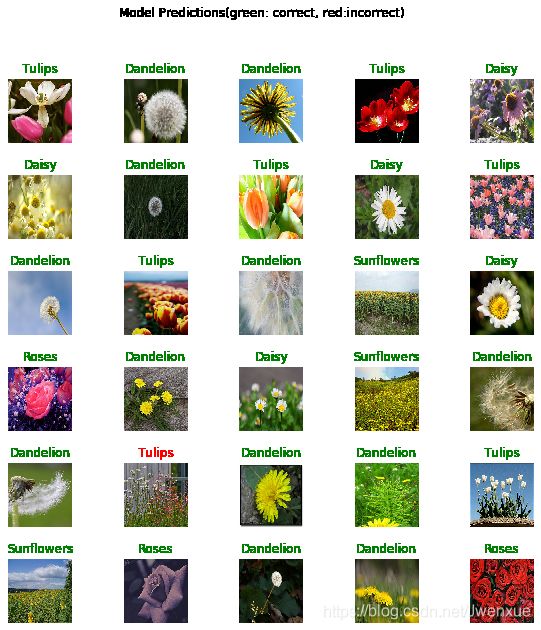

plt.suptitle("Model Predictions(green: correct, red:incorrect)")输出:

Of the 30 predictions made by the new model shown above, only one is wrong. That is a very good result.
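Since the Dense layer has no activation, model.predict returns logits rather than probabilities. Softmax keeps the ordering of scores within each row, so np.argmax picks the same class whether it is applied to the logits (as in the code above) or to softmax probabilities; softmax is only needed if you also want a confidence value. The green/red comparison can likewise be summarized as a single batch accuracy. A numpy sketch with made-up logits and one-hot labels standing in for predicted_batch and label_batch:

```python
import numpy as np

class_names = np.array(["Daisy", "Dandelion", "Roses", "Sunflowers", "Tulips"])

# Made-up stand-ins for model.predict(image_batch) and label_batch (4 images).
predicted_batch = np.array([[3.1, 0.2, -1.0, 0.5, 0.0],
                            [0.1, 2.7, 0.3, -0.2, 0.4],
                            [0.0, 0.1, 0.2, 1.9, 0.3],
                            [1.2, 0.0, 0.9, 0.1, 0.8]])
label_batch = np.eye(5)[[0, 1, 3, 2]]  # one-hot labels, the format flow_from_directory yields

# Convert logits to probabilities (row-wise, numerically stable softmax).
shifted = predicted_batch - predicted_batch.max(axis=-1, keepdims=True)
probs = np.exp(shifted) / np.exp(shifted).sum(axis=-1, keepdims=True)

predicted_id = np.argmax(predicted_batch, axis=-1)
label_id = np.argmax(label_batch, axis=-1)
correct = predicted_id == label_id  # True -> green title, False -> red title

print(class_names[predicted_id])  # ['Daisy' 'Dandelion' 'Sunflowers' 'Daisy']
print(correct.mean())             # 0.75
print(probs.max(axis=-1).round(2))  # confidence of each predicted class
```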

6. Export the model

6.1 Save the model locally

import time

t = time.time()

export_path = "./TransferLearning_SavedModel/{}".format(int(t))

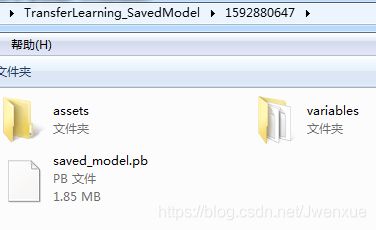

model.save(export_path, save_format="tf")输出:

INFO:tensorflow:Assets written to: ./TransferLearning_SavedModel/1592880647\assets

INFO:tensorflow:Assets written to: ./TransferLearning_SavedModel/1592880647\assets模型已经保存到TransferLearning_SavedModel目录下
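Naming each export after int(time.time()) gives every save a new, increasing numeric subdirectory, so earlier exports are not overwritten. If you later want to load the most recent export, one option is to pick the numerically largest subdirectory. This is a sketch; `latest_export` is a hypothetical helper, not part of TensorFlow, and the demo uses empty placeholder directories instead of real SavedModels.

```python
import os
import tempfile


def latest_export(base_dir: str) -> str:
    """Hypothetical helper: return the path of the numerically largest version subdirectory."""
    versions = [
        int(name)
        for name in os.listdir(base_dir)
        if name.isdigit() and os.path.isdir(os.path.join(base_dir, name))
    ]
    if not versions:
        raise FileNotFoundError(f"no numeric export directories under {base_dir!r}")
    return os.path.join(base_dir, str(max(versions)))  # compare as ints, not strings


# Demo with empty placeholder directories instead of real SavedModels.
with tempfile.TemporaryDirectory() as base:
    for version in ("1592880647", "1592880999", "notes"):
        os.makedirs(os.path.join(base, version))
    newest = latest_export(base)
    print(os.path.basename(newest))  # 1592880999
```

The returned path could then be passed to tf.keras.models.load_model.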

6.2 Load the saved model

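reloaded_model = tf.keras.models.load_model(export_path)  # load the locally saved model

result_batch = model.predict(image_batch)  # predictions from the original model
reloaded_result_batch = reloaded_model.predict(image_batch)  # predictions from the reloaded model

abs(reloaded_result_batch - result_batch).max()  # compare the two sets of predictions

Output:

0.0

This shows that the reloaded model produces exactly the same predictions as the model did before it was saved.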
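Here the difference is exactly 0.0. When the original and reloaded models run on different hardware or library versions, tiny floating-point differences can appear, so a tolerance-based comparison is a more robust check than exact equality. A numpy sketch, with made-up arrays standing in for result_batch and reloaded_result_batch and the tolerances chosen only as an example:

```python
import numpy as np

# Made-up stand-ins for result_batch and reloaded_result_batch (logits for 2 images).
result_batch = np.array([[2.5, -0.3, 0.1, 1.0, -1.2],
                         [0.0, 3.2, -0.5, 0.4, 0.9]], dtype=np.float32)
reloaded_result_batch = result_batch + np.float32(1e-7)  # simulate tiny numeric drift

max_abs_diff = np.abs(reloaded_result_batch - result_batch).max()
same_within_tol = np.allclose(reloaded_result_batch, result_batch, rtol=1e-5, atol=1e-6)
same_predictions = (np.argmax(reloaded_result_batch, axis=-1)
                    == np.argmax(result_batch, axis=-1)).all()

print(same_within_tol, same_predictions)  # True True
```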