SparkSQL之DataSource

文章目录

- Parquet 文件

- 加载Parquet文件

- Partition Discovery 分区探测

- Schema 合并

- ORC文件

- Hive表

- 用JDBC读其它数据库

- Performance Tuning性能优化

- Caching Data In Memory

- Other Configuration Options

- Broadcast Hint for SQL Queries

你用MapReduce、Spark、Hive去处理源数据,处理之后输出出来。输入和输出的数据文件的格式可能有很多种,比如文本、json、ORC、Parquet格式的文件等。另外这些数据可能在本地、hdfs、S3上等。另外还有数据是否压缩等。如果以上这些情况都会出现,那么处理起来就比较麻烦,要混合起来处理。

这样就有了外部数据源(Spark1.2版本出来的)。

Spark之外有很多数据源,这些数据源要给Spark去处理,就需要通过Spark的DataSource来转换一下,转换之后就变成了DataFrame或Dataset了,后面处理就简单了。另外DataFrame或Dataset也可以输出为外部数据源。可以把DataSource理解为DataFrame和外部数据源的连接点。

Spark SQL通过DataFrame 接口可以操作大量的数据源。DataFrame 可以被相关的算子操作,还可以被用来创建临时的视图。把DataFrame 注册为一张临时的视图,你就可以在这些数据上运行SQL查询了。本节讲的是用Spark的DataSource加载数据源以及保存数据源,以及操作spark内置的数据源。

Parquet 文件

官网:http://spark.apache.org/docs/latest/sql-data-sources-parquet.html#parquet-files

加载Parquet文件

Parquet 是列式存储的一种文件类型,许多数据处理系统都支持Parquet格式。

Spark SQL对于Parquet 文件的写和读都支持。读的话变成DataFrame,写的话变成Parquet 文件。Spark SQL会自动识别Parquet 文件里的schema。写Parquet 文件时,由于兼容性原因,所有列都自动转换为可以为空的列。

//建议标准写法

//读取json文件

spark.read.json() //简化写法,读取json文件

spark.read.format("json").load() //标准写法。你写json,就是读取json文件,写可以写其它格式文件

//源码,json() 调用的就是format

def json(paths: String*): DataFrame = format("json").load(paths : _*)

peopleDF.write.parquet("people.parquet") //简写

peopleDF.write.format("parquet").save("") //标准写法

小知识点:数据源格式需要指定全名(例如:org.apache.spark.sql.parquet),如果数据源格式为内置格式,则只需要指定简称json, parquet, jdbc, orc, libsvm, csv, text来指定数据的格式。

举例:

//读取一个json文件

scala> val peopleDF = spark.read.format("json").load("file:///home/hadoop/app/spark-2.4.2-bin-2.6.0-cdh5.7.0/examples/src/main/resources/people.json")

peopleDF: org.apache.spark.sql.DataFrame = [age: bigint, name: string]

scala> peopleDF.show

+----+-------+

| age| name|

+----+-------+

|null|Michael|

| 30| Andy|

| 19| Justin|

+----+-------+

//写parquet到本地

scala> peopleDF.write.format("parquet").save("file:///home/hadoop/data/peopleParquet")

//本地文件

[hadoop@hadoop001 peopleParquet]$ pwd

/home/hadoop/data/peopleParquet

[hadoop@hadoop001 peopleParquet]$ ls

part-00000-3e25fdbf-d928-4b63-b587-12fe9eb1fc2b-c000.snappy.parquet _SUCCESS

//读本地parquet

scala> val parquetDF = spark.read.format("parquet").load("file:///home/hadoop/data/peopleParquet/")

parquetDF: org.apache.spark.sql.DataFrame = [age: bigint, name: string]

scala> parquetDF.show

+----+-------+

| age| name|

+----+-------+

|null|Michael|

| 30| Andy|

| 19| Justin|

+----+-------+

scala> peopleDF.createOrReplaceTempView("people")

scala> spark.sql("select * from people").show

WARN ObjectStore: Failed to get database global_temp, returning NoSuchObjectException

+----+-------+

| age| name|

+----+-------+

|null|Michael|

| 30| Andy|

| 19| Justin|

+----+-------+

scala> peopleDF.map(x => "name" + x(1)).show

+-----------+

| value|

+-----------+

|nameMichael|

| nameAndy|

| nameJustin|

+-----------+

//另外,spark.sql可以直接作用在文件之上

scala> spark.sql("SELECT * FROM parquet.`hdfs://hadoop001:9000/tmp/users.parquet`").show

19/07/04 22:28:18 WARN ObjectStore: Failed to get database parquet, returning NoSuchObjectException

+------+--------------+----------------+

| name|favorite_color|favorite_numbers|

+------+--------------+----------------+

|Alyssa| null| [3, 9, 15, 20]|

| Ben| red| []|

+------+--------------+----------------+

Partition Discovery 分区探测

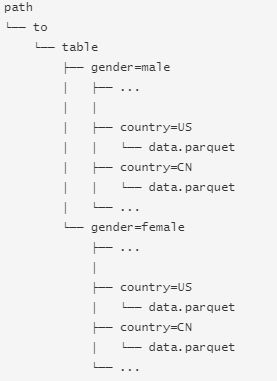

像Hive里面的表分区,表的分区是一种通用的优化方法。在一个分区表里,数据存储在不同的目录下,分区的列值作为每个分区目录的一部分。Spark所有内置的数据源(包括Text/CSV/JSON/ORC/Parquet),都能够被探测,而且自动推断分区信息。

举例:

//创建分区

[hadoop@hadoop001 data]$ hdfs dfs -mkdir -p /sparksql/table/gender=male/county=US

[hadoop@hadoop001 data]$ hdfs dfs -mkdir -p /sparksql/table/gender=male/county=CN

[hadoop@hadoop001 data]$ hdfs dfs -mkdir -p /sparksql/table/gender=female/county=CN

[hadoop@hadoop001 data]$ hdfs dfs -mkdir -p /sparksql/table/gender=female/county=US

//把parquet数据放进某个分区下面

[hadoop@hadoop001 resources]$ hdfs dfs -put users.parquet /sparksql/table/gender=male/county=US/

//从这里看到前面三列是数据文件本身有的列

//后面两列是根据分区目录名自动推导的列名

scala> spark.read.format("parquet").load("hdfs://hadoop001:9000/sparksql/table").printSchema

root

|-- name: string (nullable = true)

|-- favorite_color: string (nullable = true)

|-- favorite_numbers: array (nullable = true)

| |-- element: integer (containsNull = true)

|-- gender: string (nullable = true)

|-- county: string (nullable = true)

scala> spark.read.format("parquet").load("hdfs://hadoop001:9000/sparksql/table")..show

+------+--------------+----------------+------+------+

| name|favorite_color|favorite_numbers|gender|county|

+------+--------------+----------------+------+------+

|Alyssa| null| [3, 9, 15, 20]| male| US|

| Ben| red| []| male| US|

+------+--------------+----------------+------+------+

把path/to/table这个路径传递给 SparkSession.read.parquet或者SparkSession.read.load,Spark SQL会自动从路径中提取partition分区的信息。

请注意,分区列的数据类型是自动推断的。目前,支持数字数据类型、日期、时间戳和字符串类型。有时,用户可能不想自动推断分区列的数据类型。对于这些用例,可以通过spark.sql.sources.partitionColumnTypeInference.enabled配置自动类型推断,默认值为true。当类型推断被禁用时,字符串类型将用于分区列。

从spark 1.6.0开始,默认情况下,分区探测仅查找给定路径下的分区,就给定的路径下往下查找。对于上面的示例,如果用户将path/to/table/gender=male传递给SparkSession.read.parquet或SparkSession.read.load,则gender不会被视为分区列。如果用户需要指定分区发现应该使用的基本路径,则可以在数据源选项中设置基本路径。例如,当path/to/table/gender=male是数据的路径,并且用户将basepath设置为path/to/table/时,gender将是分区列。

//如果你继续写路径到table的下一层gender=male,那么可看到如下:

scala> spark.read.format("parquet").load("hdfs://hadoop001:9000/sparksql/table/gender=male").printSchema

root

|-- name: string (nullable = true)

|-- favorite_color: string (nullable = true)

|-- favorite_numbers: array (nullable = true)

| |-- element: integer (containsNull = true)

|-- county: string (nullable = true)

scala> spark.read.format("parquet").load("hdfs://hadoop001:9000/sparksql/table/gender=male").show

+------+--------------+----------------+------+

| name|favorite_color|favorite_numbers|county|

+------+--------------+----------------+------+

|Alyssa| null| [3, 9, 15, 20]| US|

| Ben| red| []| US|

+------+--------------+----------------+------+

Schema 合并

可以把多个parquet文件的schema合并,了解下,具体看官网。不建议用,因为它是 expensive operation。用户可以获取多个有不同Schema但相互兼容的Parquet文件。现在Parquet数据源能自动检测这种情况,并合并这些文件的schemas。

因为Schema合并是一个高消耗的操作,在大多数情况下并不需要,所以Spark SQL从1.5.0 开始默认关闭了该功能。如何开启该功能,具体看官网。

ORC文件

了解一下。

Since Spark 2.3, Spark supports a vectorized ORC reader with a new ORC file format for ORC files. To do that, the following configurations are newly added. The vectorized reader is used for the native ORC tables (e.g., the ones created using the clause USING ORC) when spark.sql.orc.impl is set to native and spark.sql.orc.enableVectorizedReader is set to true. For the Hive ORC serde tables (e.g., the ones created using the clause USING HIVE OPTIONS (fileFormat ‘ORC’)), the vectorized reader is used when spark.sql.hive.convertMetastoreOrc is also set to true.

实际上,一般用这种方式就可以了:

spark.read.format("orc").load(orc文件的路径)

Hive表

参考官网:http://spark.apache.org/docs/latest/sql-data-sources-hive-tables.html#hive-tables

下面这段话引用:https://blog.51cto.com/14309075/2411816

Apache Hive是Hadoop上的SQL引擎,Spark SQL编译时可以包含Hive支持,也可以不包含。包含Hive支持的Spark SQL可以支持Hive表访问、UDF(用户自定义函数)以及 Hive 查询语言(HiveQL/HQL)等。需要强调的 一点是,如果要在Spark SQL中包含Hive的库,并不需要事先安装Hive。一般来说,最好还是在编译Spark SQL时引入Hive支持,这样就可以使用这些特性了。如果你下载的是二进制版本的 Spark,它应该已经在编译时添加了 Hive 支持。

若要把Spark SQL连接到一个部署好的Hive上,你必须把hive-site.xml复制到 Spark的配置文件目录中($SPARK_HOME/conf)。即使没有部署好Hive,Spark SQL也可以运行。

需要注意的是,如果你没有部署好Hive,Spark SQL会在当前的工作目录中创建出自己的Hive 元数据仓库,叫作 metastore_db。此外,如果你尝试使用 HiveQL 中的 CREATE TABLE (并非 CREATE EXTERNAL TABLE)语句来创建表,这些表会被放在你默认的文件系统中的 /user/hive/warehouse 目录中(如果你的 classpath 中有配好的 hdfs-site.xml,默认的文件系统就是 HDFS,否则就是本地文件系统)。

举例:

import java.io.File

import org.apache.spark.sql.{Row, SaveMode, SparkSession}

case class Record(key: Int, value: String)

// warehouseLocation points to the default location for managed databases and tables

val warehouseLocation = new File("spark-warehouse").getAbsolutePath

//enableHiveSupport()要开启

val spark = SparkSession

.builder()

.appName("Spark Hive Example")

.config("spark.sql.warehouse.dir", warehouseLocation)

.enableHiveSupport()

.getOrCreate()

import spark.implicits._

import spark.sql

sql("CREATE TABLE IF NOT EXISTS src (key INT, value STRING) USING hive")

sql("LOAD DATA LOCAL INPATH 'examples/src/main/resources/kv1.txt' INTO TABLE src")

// Queries are expressed in HiveQL

sql("SELECT * FROM src").show()

// +---+-------+

// |key| value|

// +---+-------+

// |238|val_238|

// | 86| val_86|

// |311|val_311|

// ...

// Aggregation queries are also supported.

sql("SELECT COUNT(*) FROM src").show()

// +--------+

// |count(1)|

// +--------+

// | 500 |

// +--------+

// The results of SQL queries are themselves DataFrames and support all normal functions.

val sqlDF = sql("SELECT key, value FROM src WHERE key < 10 ORDER BY key")

// The items in DataFrames are of type Row, which allows you to access each column by ordinal.

val stringsDS = sqlDF.map {

case Row(key: Int, value: String) => s"Key: $key, Value: $value"

}

stringsDS.show()

// +--------------------+

// | value|

// +--------------------+

// |Key: 0, Value: val_0|

// |Key: 0, Value: val_0|

// |Key: 0, Value: val_0|

// ...

// You can also use DataFrames to create temporary views within a SparkSession.

val recordsDF = spark.createDataFrame((1 to 100).map(i => Record(i, s"val_$i")))

recordsDF.createOrReplaceTempView("records")

// Queries can then join DataFrame data with data stored in Hive.

sql("SELECT * FROM records r JOIN src s ON r.key = s.key").show()

// +---+------+---+------+

// |key| value|key| value|

// +---+------+---+------+

// | 2| val_2| 2| val_2|

// | 4| val_4| 4| val_4|

// | 5| val_5| 5| val_5|

// ...

// Create a Hive managed Parquet table, with HQL syntax instead of the Spark SQL native syntax

// `USING hive`

sql("CREATE TABLE hive_records(key int, value string) STORED AS PARQUET")

// Save DataFrame to the Hive managed table

val df = spark.table("src")

df.write.mode(SaveMode.Overwrite).saveAsTable("hive_records")

// After insertion, the Hive managed table has data now

sql("SELECT * FROM hive_records").show()

// +---+-------+

// |key| value|

// +---+-------+

// |238|val_238|

// | 86| val_86|

// |311|val_311|

// ...

// Prepare a Parquet data directory

val dataDir = "/tmp/parquet_data"

spark.range(10).write.parquet(dataDir)

// Create a Hive external Parquet table

sql(s"CREATE EXTERNAL TABLE hive_ints(key int) STORED AS PARQUET LOCATION '$dataDir'")

// The Hive external table should already have data

sql("SELECT * FROM hive_ints").show()

// +---+

// |key|

// +---+

// | 0|

// | 1|

// | 2|

// ...

// Turn on flag for Hive Dynamic Partitioning

spark.sqlContext.setConf("hive.exec.dynamic.partition", "true")

spark.sqlContext.setConf("hive.exec.dynamic.partition.mode", "nonstrict")

// Create a Hive partitioned table using DataFrame API

df.write.partitionBy("key").format("hive").saveAsTable("hive_part_tbl")

// Partitioned column `key` will be moved to the end of the schema.

sql("SELECT * FROM hive_part_tbl").show()

// +-------+---+

// | value|key|

// +-------+---+

// |val_238|238|

// | val_86| 86|

// |val_311|311|

// ...

spark.stop()

spark-shell中测试:

scala> import org.apache.spark.sql.{Row, SaveMode, SparkSession}

import org.apache.spark.sql.{Row, SaveMode, SparkSession}

scala> import spark.implicits._

import spark.implicits._

scala> import spark.sql

import spark.sql

//自动的找到hive的warehouse,然后把表放进去

scala> sql("CREATE TABLE IF NOT EXISTS src (key INT, value STRING) USING hive")

WARN HiveMetaStore: Location: hdfs://hadoop001:9000/user/hive/warehouse/src specified for non-external table:src

Warning: fs.defaultFS is not set when running "chgrp" command.

Warning: fs.defaultFS is not set when running "chmod" command.

res0: org.apache.spark.sql.DataFrame = []

scala> sql("LOAD DATA LOCAL INPATH '/home/hadoop/app/spark-2.4.2-bin-2.6.0-cdh5.7.0/examples/src/main/resources/kv1.txt' INTO TABLE src")

Warning: fs.defaultFS is not set when running "chgrp" command.

Warning: fs.defaultFS is not set when running "chmod" command.

19/07/04 00:14:58 WARN ObjectStore: Failed to get database global_temp, returning NoSuchObjectException

res1: org.apache.spark.sql.DataFrame = []

scala> sql("SELECT * FROM src").show()

+---+-------+

|key| value|

+---+-------+

|238|val_238|

| 86| val_86|

|311|val_311|

| 27| val_27|

|165|val_165|

|409|val_409|

|255|val_255|

|278|val_278|

| 98| val_98|

|484|val_484|

|265|val_265|

|193|val_193|

|401|val_401|

|150|val_150|

|273|val_273|

|224|val_224|

|369|val_369|

| 66| val_66|

|128|val_128|

|213|val_213|

+---+-------+

only showing top 20 rows

scala> sql("show tables").show

+--------+------------+-----------+

|database| tableName|isTemporary|

+--------+------------+-----------+

| default| dept| false|

| default| emp| false|

| default|sparksqltest| false|

| default| src| false|

+--------+------------+-----------+

scala> sql("select count(*) from src").show

+--------+

|count(1)|

+--------+

| 500|

+--------+

scala> val sqlDF = sql("SELECT key, value FROM src WHERE key < 10 ORDER BY key")

sqlDF: org.apache.spark.sql.DataFrame = [key: int, value: string]

scala> sqlDF.show

+---+-----+

|key|value|

+---+-----+

| 0|val_0|

| 0|val_0|

| 0|val_0|

| 2|val_2|

| 4|val_4|

| 5|val_5|

| 5|val_5|

| 5|val_5|

| 8|val_8|

| 9|val_9|

+---+-----+

//DataFrame中的项属于Row类型,允许您按序号访问每一列。

scala> val stringsDS = sqlDF.map {

| case Row(key: Int, value: String) => s"Key: $key, Value: $value"

| }

stringsDS: org.apache.spark.sql.Dataset[String] = [value: string]

scala> stringsDS.show()

+--------------------+

| value|

+--------------------+

|Key: 0, Value: val_0|

|Key: 0, Value: val_0|

|Key: 0, Value: val_0|

|Key: 2, Value: val_2|

|Key: 4, Value: val_4|

|Key: 5, Value: val_5|

|Key: 5, Value: val_5|

|Key: 5, Value: val_5|

|Key: 8, Value: val_8|

|Key: 9, Value: val_9|

+--------------------+

scala> val recordDF = spark.createDataFrame( (1 to 10).map(x => Record( x , s"value_$x") ) )

recordDF: org.apache.spark.sql.DataFrame = [key: int, value: string]

scala> recordDF.show

+---+--------+

|key| value|

+---+--------+

| 1| value_1|

| 2| value_2|

| 3| value_3|

| 4| value_4|

| 5| value_5|

| 6| value_6|

| 7| value_7|

| 8| value_8|

| 9| value_9|

| 10|value_10|

+---+--------+

scala> recordDF.createOrReplaceTempView("records")

scala> sql("select * from src").show

+---+-------+

|key| value|

+---+-------+

|238|val_238|

| 86| val_86|

|311|val_311|

| 27| val_27|

|165|val_165|

|409|val_409|

|255|val_255|

|278|val_278|

| 98| val_98|

|484|val_484|

|265|val_265|

|193|val_193|

|401|val_401|

|150|val_150|

|273|val_273|

|224|val_224|

|369|val_369|

| 66| val_66|

|128|val_128|

|213|val_213|

+---+-------+

only showing top 20 rows

scala> sql("select * from records r join src s on r.key=s.key").show

+---+--------+---+------+

|key| value|key| value|

+---+--------+---+------+

| 4| value_4| 4| val_4|

| 8| value_8| 8| val_8|

| 10|value_10| 10|val_10|

| 5| value_5| 5| val_5|

| 5| value_5| 5| val_5|

| 2| value_2| 2| val_2|

| 5| value_5| 5| val_5|

| 9| value_9| 9| val_9|

+---+--------+---+------+

//创建一张hive表,存储格式为parquet,另外可以看到它自动创建到hive数据库里了

scala> sql("CREATE TABLE hive_records(key int, value string) STORED AS PARQUET")

19/07/04 23:46:44 WARN HiveMetaStore: Location: hdfs://hadoop001:9000/user/hive/warehouse/hive_records specified for non-external table:hive_records

Warning: fs.defaultFS is not set when running "chgrp" command.

Warning: fs.defaultFS is not set when running "chmod" command.

res44: org.apache.spark.sql.DataFrame = []

//把hivesrc表转换成一个DataFrame

scala> val df = spark.table("src")

df: org.apache.spark.sql.DataFrame = [key: int, value: string]

//保存模式为重写(有好几种,可以看官网),把上面的df这个DataFrame数据保存到hive表里

scala> df.write.mode(SaveMode.Overwrite).saveAsTable("hive_records")

Warning: fs.defaultFS is not set when running "chgrp" command.

Warning: fs.defaultFS is not set when running "chmod" command.

scala> sql("SELECT * FROM hive_records").show()

+---+-------+

|key| value|

+---+-------+

|238|val_238|

| 86| val_86|

|311|val_311|

| 27| val_27|

|165|val_165|

|409|val_409|

|255|val_255|

|278|val_278|

| 98| val_98|

|484|val_484|

|265|val_265|

|193|val_193|

|401|val_401|

|150|val_150|

|273|val_273|

|224|val_224|

|369|val_369|

| 66| val_66|

|128|val_128|

|213|val_213|

+---+-------+

only showing top 20 rows

//准备一个parquet数据目录

scala> val dataDir = "/home/hadoop/data/parquet_data"

dataDir: String = /home/hadoop/data/parquet_data

把1到9折9个数字,分别写到上面parquet文件目录中去

scala> spark.range(10).write.parquet(dataDir)

scala> spark.read.parquet("/home/hadoop/data/parquet_data").show

+---+

| id|

+---+

| 5|

| 6|

| 7|

| 8|

| 9|

| 0|

| 1|

| 2|

| 3|

| 4|

+---+

//创建一张存储格式为parquet的外部表,指定路径为上面准备好的路径

scala> sql(s"CREATE EXTERNAL TABLE hive_ints(key int) STORED AS PARQUET LOCATION '$dataDir'")

res64: org.apache.spark.sql.DataFrame = []

//这里全是null值,不知道为什么

scala> sql("SELECT * FROM hive_ints").show()

+----+

| key|

+----+

|null|

|null|

|null|

|null|

|null|

|null|

|null|

|null|

|null|

|null|

+----+

scala> df.show

+---+-------+

|key| value|

+---+-------+

|238|val_238|

| 86| val_86|

|311|val_311|

| 27| val_27|

|165|val_165|

|409|val_409|

|255|val_255|

|278|val_278|

| 98| val_98|

|484|val_484|

|265|val_265|

|193|val_193|

|401|val_401|

|150|val_150|

|273|val_273|

|224|val_224|

|369|val_369|

| 66| val_66|

|128|val_128|

|213|val_213|

+---+-------+

only showing top 20 rows

//把hive动态分区开关打开

scala> spark.sqlContext.setConf("hive.exec.dynamic.partition", "true")

scala> spark.sqlContext.setConf("hive.exec.dynamic.partition.mode", "nonstrict")

//用DataFrame API创建动态分区表

scala>df.write.partitionBy("key").format("hive").saveAsTable("hive_part_tbl")

。。。。

Warning: fs.defaultFS is not set when running "chgrp" command.

Warning: fs.defaultFS is not set when running "chmod" command.

Warning: fs.defaultFS is not set when running "chgrp" command.

Warning: fs.defaultFS is not set when running "chmod" command.

WARN log: Updating partition stats fast for: hive_part_tbl

WARN log: Updated size to 8

Warning: fs.defaultFS is not set when running "chgrp" command.

Warning: fs.defaultFS is not set when running "chmod" command.

Warning: fs.defaultFS is not set when running "chgrp" command.

Warning: fs.defaultFS is not set when running "chmod" command.

。。。。

//分区的列 (这里是'key',将会移动到schema的最后结尾

scala> sql("SELECT * FROM hive_part_tbl").show()

+-------+---+

| value|key|

+-------+---+

|val_125|125|

|val_125|125|

|val_374|374|

| val_8| 8|

|val_432|432|

|val_200|200|

|val_200|200|

|val_497|497|

| val_41| 41|

|val_164|164|

|val_164|164|

|val_316|316|

|val_316|316|

|val_316|316|

|val_417|417|

|val_417|417|

|val_417|417|

| val_37| 37|

| val_37| 37|

|val_338|338|

+-------+---+

only showing top 20 rows

[hadoop@hadoop001 data]$ hdfs dfs -ls hdfs://hadoop001:9000/user/hive/warehouse/hive_part_tbl

Found 309 items

drwxr-xr-x - hadoop supergroup 0 hdfs://hadoop001:9000/user/hive/warehouse/hive_part_tbl/key=0

drwxr-xr-x - hadoop supergroup 0 hdfs://hadoop001:9000/user/hive/warehouse/hive_part_tbl/key=10

drwxr-xr-x - hadoop supergroup 0 hdfs://hadoop001:9000/user/hive/warehouse/hive_part_tbl/key=100

drwxr-xr-x - hadoop supergroup 0 hdfs://hadoop001:9000/user/hive/warehouse/hive_part_tbl/key=103

.......

Hive表的存储格式

具体参考官网。

When you create a Hive table, you need to define how this table should read/write data from/to file system, i.e. the “input format” and “output format”. You also need to define how this table should deserialize the data to rows, or serialize rows to data, i.e. the “serde”. The following options can be used to specify the storage format(“serde”, “input format”, “output format”), e.g. CREATE TABLE src(id int) USING hive OPTIONS(fileFormat ‘parquet’). By default, we will read the table files as plain text.

你创建一个Hive表,你需要指定从哪里读取数据,这个数据是什么格式的;你创建这张表的存储格式又是什么。还有序列化反序列化等。

比如:

sql("CREATE TABLE hive_records(key int, value string) STORED AS PARQUET")

val df = spark.table("src")

df.write.mode(SaveMode.Overwrite).saveAsTable("hive_records")

sql(s"CREATE EXTERNAL TABLE hive_ints(key int) STORED AS PARQUET LOCATION '$dataDir'")

用JDBC读其它数据库

参考官网:http://spark.apache.org/docs/latest/sql-data-sources-jdbc.html

Spark SQL也可以用JDBC去从其它数据库去读取数据。读取的结果返回的是DataFrame ,可以在Spark SQL很容易的去处理,以及与其它数据源进行join。

需要保证 数据库的驱动对driver端和executor端可见。

提前需要把你要连接的数据库驱动放到spark classpath里,用下面这种方式,

bin/spark-shell --driver-class-path +跟上相关数据库的驱动

举例:

scala> val dbsjdbcDF = spark.read.format("jdbc")

.option("url","jdbc:mysql://hadoop001:3306/hive")

.option("dbtable","dbs")

.option("user","root")

.option("password","123456")

.load()

jdbcDF: org.apache.spark.sql.DataFrame = [DB_ID: bigint, DESC: string ... 4 more fields]

scala> dbsjdbcDF.show

+-----+--------------------+--------------------+-------+----------+----------+

|DB_ID| DESC| DB_LOCATION_URI| NAME|OWNER_NAME|OWNER_TYPE|

+-----+--------------------+--------------------+-------+----------+----------+

| 1|Default Hive data...|hdfs://hadoop001:...|default| public| ROLE|

| 2| null|hdfs://hadoop001:...| g6| hadoop| USER|

+-----+--------------------+--------------------+-------+----------+----------+

scala> val tblsjdbcDF = spark.read.format("jdbc").option("url","jdbc:mysql://hadoop001:3306/ruoze_hive").option("dbtable","tbls").option("user","root").option("password","123456").load()

tblsjdbcDF: org.apache.spark.sql.DataFrame = [TBL_ID: bigint, CREATE_TIME: int ... 9 more fields]

scala> tblsjdbcDF.show

+------+-----------+-----+----------------+------+---------+-----+-------------+--------------+------------------+------------------+

|TBL_ID|CREATE_TIME|DB_ID|LAST_ACCESS_TIME| OWNER|RETENTION|SD_ID| TBL_NAME| TBL_TYPE|VIEW_EXPANDED_TEXT|VIEW_ORIGINAL_TEXT|

+------+-----------+-----+----------------+------+---------+-----+-------------+--------------+------------------+------------------+

| 1| 1561877732| 2| 0|hadoop| 0| 1| test| MANAGED_TABLE| null| null|

| 2| 1561880911| 1| 0|hadoop| 0| 2| emp| MANAGED_TABLE| null| null|

| 3| 1561880919| 1| 0|hadoop| 0| 3| dept| MANAGED_TABLE| null| null|

| 6| 1561884678| 1| 0|hadoop| 0| 6| sparksqltest| MANAGED_TABLE| null| null|

| 11| 1562170275| 1| 0|hadoop| 0| 11| src| MANAGED_TABLE| null| null|

| 17| 1562255263| 1| 0|hadoop| 0| 17| hive_records| MANAGED_TABLE| null| null|

| 20| 1562256521| 1| 0|hadoop| 0| 20| hive_ints|EXTERNAL_TABLE| null| null|

| 21| 1562256772| 1| 0|hadoop| 0| 21|hive_part_tbl| MANAGED_TABLE| null| null|

+------+-----------+-----+----------------+------+---------+-----+-------------+--------------+------------------+------------------+

//实现两个表的join;

scala> dbsjdbcDF.join(tblsjdbcDF,"db_id").select("db_id","name","TBL_NAME","DB_LOCATION_URI").show(false)

+-----+-------+-------------+-----------------------------------------------+

|db_id|name |TBL_NAME |DB_LOCATION_URI |

+-----+-------+-------------+-----------------------------------------------+

|1 |default|dept |hdfs://hadoop001:9000/user/hive/warehouse |

|1 |default|emp |hdfs://hadoop001:9000/user/hive/warehouse |

|1 |default|hive_ints |hdfs://hadoop001:9000/user/hive/warehouse |

|1 |default|hive_part_tbl|hdfs://hadoop001:9000/user/hive/warehouse |

|1 |default|hive_records |hdfs://hadoop001:9000/user/hive/warehouse |

|1 |default|sparksqltest |hdfs://hadoop001:9000/user/hive/warehouse |

|1 |default|src |hdfs://hadoop001:9000/user/hive/warehouse |

|2 |g6 |test |hdfs://hadoop001:9000/user/hive/warehouse/g6.db|

+-----+-------+-------------+-----------------------------------------------+

/./或者这样:

scala> dbsjdbcDF.join(tblsjdbcDF,dbsjdbcDF("db_id")===tblsjdbcDF("db_id")).select("name","TBL_NAME","DB_LOCATION_URI","TBL_TYPE").show(false)

+-------+-------------+-----------------------------------------------+--------------+

|name |TBL_NAME |DB_LOCATION_URI |TBL_TYPE |

+-------+-------------+-----------------------------------------------+--------------+

|default|emp |hdfs://hadoop001:9000/user/hive/warehouse |MANAGED_TABLE |

|default|dept |hdfs://hadoop001:9000/user/hive/warehouse |MANAGED_TABLE |

|default|sparksqltest |hdfs://hadoop001:9000/user/hive/warehouse |MANAGED_TABLE |

|default|src |hdfs://hadoop001:9000/user/hive/warehouse |MANAGED_TABLE |

|default|hive_records |hdfs://hadoop001:9000/user/hive/warehouse |MANAGED_TABLE |

|default|hive_ints |hdfs://hadoop001:9000/user/hive/warehouse |EXTERNAL_TABLE|

|default|hive_part_tbl|hdfs://hadoop001:9000/user/hive/warehouse |MANAGED_TABLE |

|g6 |test |hdfs://hadoop001:9000/user/hive/warehouse/g6.db|MANAGED_TABLE |

+-------+-------------+-----------------------------------------------+--------------+

多个数据源来的DataFrame就可以做相应的关联操作。

Performance Tuning性能优化

通过把数据缓存在内存中或配置一些选项可以提高Spark工作的性能。

Caching Data In Memory

Spark SQL可以通过调用spark.catalog.cacheTable("tableName") 或者 dataFrame.cache() 来把数据缓存到内存中。

为了减少内存的使用和GC的压力, Spark SQL会浏览你仅仅需要的列,而且自动优化压缩。

你可以使用 spark.catalog.uncacheTable("tableName") 来移除内存中的表。

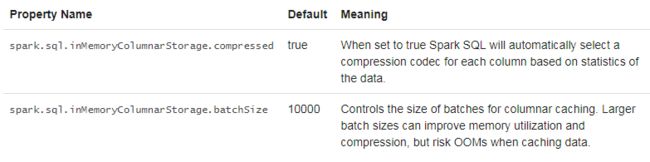

内存缓存的配置可以使用SparkSession 的setConf 方法或使用SQL运行SET key=value命令来完成。

这些一般默认就行。

Other Configuration Options

The following options can also be used to tune the performance of query execution. It is possible that these options will be deprecated in future release as more optimizations are performed automatically.

以下的参数也可以用于优化查询执行的性能。

在将来的版本中,这些选项可能会被弃用,因为会自动执行更多的优化。

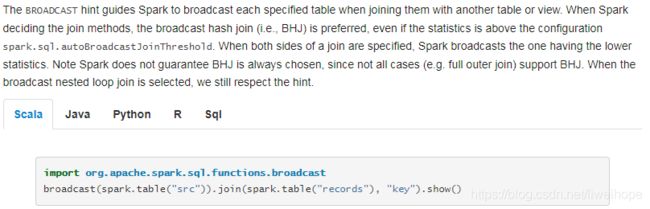

Broadcast Hint for SQL Queries

为SQL查询广播提示。

当一个表去和其它表或视图做join的时候,BROADCAST hint会让Spark去广播这个表。