Feature Detection and Description Algorithms (Harris, SIFT, SURF, FAST, BRIEF, ORB)

Content:

-

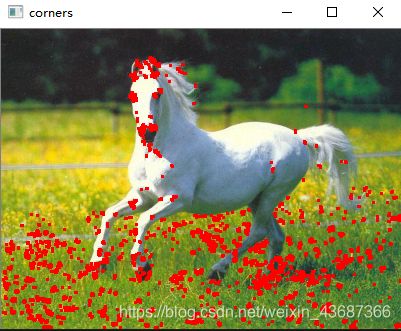

Harris:检测角点

-

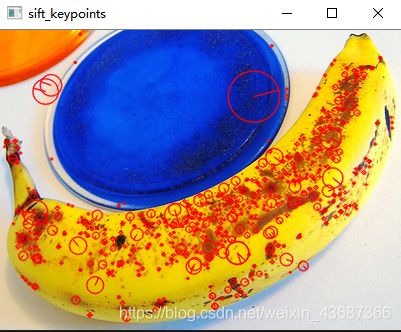

SIFT:检测斑点

-

SURF:检测斑点

-

FAST:检测角点

-

BRIEF:检测斑点

-

ORB:带方向的FAST算法和具有旋转不变性的BRIEF算法(brute-force暴力匹配)

1、Harris

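A corner is a point where image brightness changes sharply in every direction, or a point of maximum curvature along an edge curve in the image.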
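The "brightness changes sharply in every direction" criterion is what the Harris response quantifies. For each pixel it sums products of the image gradients over a small window into a 2×2 matrix M and scores the pixel with R = det(M) - k·trace(M)²: R is clearly positive at corners, negative along edges, and near zero in flat regions. The NumPy sketch below illustrates this under simplifying assumptions (central-difference gradients from np.gradient and an unweighted square window, whereas cv2.cornerHarris uses Sobel derivatives); the helper names are hypothetical.

```python
import numpy as np

def window_sum(a, k):
    """Sum of a over a k x k window centred on each pixel (k odd, zero padding)."""
    r = k // 2
    padded = np.pad(a, r)
    out = np.zeros_like(a)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + a.shape[0], dx:dx + a.shape[1]]
    return out

def harris_response(gray, block=3, k=0.04):
    """Harris score R = det(M) - k * trace(M)**2 for every pixel."""
    g = gray.astype(np.float64)
    iy, ix = np.gradient(g)  # derivatives along rows (y) and columns (x)
    sxx = window_sum(ix * ix, block)
    syy = window_sum(iy * iy, block)
    sxy = window_sum(ix * iy, block)
    det = sxx * syy - sxy ** 2
    trace = sxx + syy
    return det - k * trace ** 2

# A bright square on a dark background
img = np.zeros((20, 20))
img[5:15, 5:15] = 1.0
R = harris_response(img)
print(R[5, 5] > 0, R[5, 10] < 0, abs(R[10, 10]) < 1e-12)  # corner, edge, flat
# → True True True
```

Pixel (5, 5) is a corner of the square, (5, 10) lies on its top edge, and (10, 10) is inside the flat interior, so the three signs match the corner/edge/flat classification.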

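Use cv2.cornerHarris to identify corners. Its arguments are the neighbourhood size (blockSize=2), the Sobel aperture (ksize=3), and the Harris free parameter k (0.04):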

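```python
import cv2
import numpy as np

img = cv2.imread('images/horse.jpg')
if img is None:
    raise FileNotFoundError('images/horse.jpg not found')

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
gray = np.float32(gray)  # cornerHarris accepts a single-channel 8-bit or float image

# blockSize=2, Sobel ksize=3, Harris parameter k=0.04
dst = cv2.cornerHarris(gray, 2, 3, 0.04)

# Dilate the response map so the marked corners are easier to see
dst = cv2.dilate(dst, None)

# Paint every pixel whose response exceeds 1% of the maximum red (BGR order)
img[dst > 0.01 * dst.max()] = [0, 0, 255]

cv2.imshow('corners', img)
cv2.imwrite('corners.png', img)

# Close the window when Esc is pressed
if cv2.waitKey(0) & 0xff == 27:
    cv2.destroyAllWindows()
```

Result: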

2、SIFT

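The cornerHarris function above detects corners well, and it still finds them after the image is rotated. However, when the image is shrunk or enlarged, some parts of it may lose their corner quality, or even gain it: a corner at one scale can look like a smooth edge at another. To avoid this loss of features we need a detector that is independent of image scale, namely the Scale-Invariant Feature Transform (SIFT).

Reference: 非常详细的sift算法原理解析 (a very detailed explanation of the SIFT algorithm)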
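SIFT gets its scale independence by searching for keypoints in a scale space: it blurs the image with Gaussians of increasing σ, subtracts neighbouring blur levels to obtain Difference-of-Gaussian (DoG) images, and keeps points that are larger or smaller than all 26 neighbours in the 3×3×3 block spanning position and scale. The sketch below shows only that core idea for a single octave, with stated simplifications: no downsampling between octaves, no sub-pixel refinement, no contrast or edge rejection, and no descriptor. σ0 = 1.6 and k = √2 are assumed values, and the function names are hypothetical.

```python
import numpy as np

def gaussian_blur(img, sigma):
    """Separable Gaussian blur with edge padding; kernel truncated at about 3 sigma."""
    r = int(np.ceil(3 * sigma))
    x = np.arange(-r, r + 1)
    kernel = np.exp(-x ** 2 / (2 * sigma ** 2))
    kernel /= kernel.sum()

    def conv(v):
        return np.convolve(v, kernel, mode="valid")

    padded = np.pad(img.astype(np.float64), r, mode="edge")
    rows = np.apply_along_axis(conv, 1, padded)  # blur along x
    return np.apply_along_axis(conv, 0, rows)    # blur along y

def dog_octave(img, sigma0=1.6, k=2 ** 0.5, n_dogs=3):
    """Stack of DoG images D_i = L(sigma0 * k**(i+1)) - L(sigma0 * k**i)."""
    blurred = [gaussian_blur(img, sigma0 * k ** i) for i in range(n_dogs + 1)]
    return np.stack([b2 - b1 for b1, b2 in zip(blurred, blurred[1:])])

def is_scale_space_extremum(dog, s, y, x):
    """True if dog[s, y, x] is above or below all 26 neighbours in position and scale."""
    cube = dog[s - 1:s + 2, y - 1:y + 2, x - 1:x + 2].ravel()
    centre = cube[13]  # index of (1, 1, 1) in the flattened 3x3x3 block
    others = np.delete(cube, 13)
    return bool(centre > others.max() or centre < others.min())

# A Gaussian blob whose size is tuned to the middle DoG level
yy, xx = np.mgrid[0:41, 0:41]
s_blob = 1.6 * 2 ** 0.75
img = np.exp(-((xx - 20) ** 2 + (yy - 20) ** 2) / (2 * s_blob ** 2))
dog = dog_octave(img)
print(dog.shape, is_scale_space_extremum(dog, 1, 20, 20))
```

The blob centre is picked out at the middle DoG level, which is exactly the "same point, characteristic scale" behaviour that Harris lacks; a pixel on the blob's slope such as (20, 25) fails the test.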

```python
import cv2

# Image path
imgpath = 'C:/Users/data/hotdog/train/not-hotdog/451.png'
img = cv2.imread(imgpath)

# Convert to grayscale; every detector in this article works on gray images
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

# Create a SIFT object. Since OpenCV 4.4 SIFT is in the main module;
# older opencv-contrib builds expose it as cv2.xfeatures2d.SIFT_create()
sift = cv2.SIFT_create()

# Compute the keypoints and their descriptors in one pass
keypoints, descriptor = sift.detectAndCompute(gray, None)

# Draw the keypoints together with their size and orientation
img = cv2.drawKeypoints(image=img, outImage=img, keypoints=keypoints,
                        flags=cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS,
                        color=(0, 0, 255))

cv2.imshow('sift_keypoints', img)
if cv2.waitKey(0) & 0xff == ord("q"):
    cv2.destroyAllWindows()
```

Result:

3、SURF

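SURF uses the Fast Hessian detector to find keypoints and then computes SURF descriptors for them. The script below chooses between SIFT and SURF at run time: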
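Much of Fast Hessian's speed comes from box filters. SURF approximates the Gaussian second-order derivatives in the Hessian with box filters and evaluates them on an integral image, where the sum over any axis-aligned rectangle costs four lookups however large the rectangle is; this is why SURF can move up the scale space by enlarging the filter instead of repeatedly shrinking the image. A minimal NumPy sketch of the integral-image part follows; the helper names are hypothetical, and the layout (an extra leading row and column of zeros) is chosen to mirror what cv2.integral returns.

```python
import numpy as np

def integral_image(img):
    """ii[y, x] = sum of img[:y, :x]; row 0 and column 0 are zeros."""
    h, w = img.shape
    ii = np.zeros((h + 1, w + 1), dtype=np.float64)
    ii[1:, 1:] = img.astype(np.float64).cumsum(axis=0).cumsum(axis=1)
    return ii

def box_sum(ii, y0, x0, y1, x1):
    """Sum of img[y0:y1, x0:x1] from four lookups, independent of the box size."""
    return ii[y1, x1] - ii[y0, x1] - ii[y1, x0] + ii[y0, x0]

img = np.arange(36, dtype=np.float64).reshape(6, 6)
ii = integral_image(img)
print(box_sum(ii, 1, 2, 4, 5), img[1:4, 2:5].sum())
# → 135.0 135.0
```

A box filter such as SURF's Dyy approximation is then just a weighted combination of a few such box sums, so its cost stays constant as the filter grows.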

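```python
import cv2

imgpath = 'C:/Users/data/hotdog/train/not-hotdog/451.png'
img = cv2.imread(imgpath)

def fd(algorithm):
    """Return a feature detector for the given algorithm name."""
    if algorithm == 'SIFT':
        return cv2.SIFT_create()  # cv2.xfeatures2d.SIFT_create() before OpenCV 4.4
    if algorithm == 'SURF':
        # SURF is patented: it needs opencv-contrib built with OPENCV_ENABLE_NONFREE
        return cv2.xfeatures2d.SURF_create()
    raise ValueError('unknown algorithm: ' + algorithm)

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

algorithm = input("Choose a method, SIFT or SURF: ").strip().upper()
fd_flag = fd(algorithm)

keypoints, descriptor = fd_flag.detectAndCompute(gray, None)

# flags=4 is cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS (draw size and orientation)
img = cv2.drawKeypoints(image=img, outImage=img, keypoints=keypoints,
                        flags=4, color=(0, 0, 255))

cv2.namedWindow('Mywindow')
cv2.imshow('Mywindow', img)
if cv2.waitKey(0) & 0xff == ord("q"):
    cv2.destroyWindow('Mywindow')  # destroyAllWindows() takes no arguments
```

Result: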

4、FAST: corner detection

Recommended reading on FAST from another blogger: FAST算子总结 (a summary of the FAST operator)
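FAST's core is the segment test: a pixel p with intensity I_p is a corner if, on the 16-pixel circle of radius 3 around it, at least n contiguous pixels are all brighter than I_p + t or all darker than I_p - t. OpenCV's default neighbourhood type TYPE_9_16 uses n = 9, and its default threshold is t = 10. The pure-Python sketch below tests a single pixel; it skips the speed-ups and the non-maximum suppression of the real detector, and the function names are hypothetical.

```python
import numpy as np

# The 16 (dx, dy) offsets of a radius-3 Bresenham circle, in order around the circle
CIRCLE = [(0, -3), (1, -3), (2, -2), (3, -1), (3, 0), (3, 1), (2, 2), (1, 3),
          (0, 3), (-1, 3), (-2, 2), (-3, 1), (-3, 0), (-3, -1), (-2, -2), (-1, -3)]

def longest_run(flags):
    """Length of the longest run of True values, treating the list as circular."""
    best = run = 0
    for f in list(flags) + list(flags):  # doubling handles runs that wrap around
        run = run + 1 if f else 0
        best = max(best, run)
    return min(best, len(flags))

def is_fast_corner(img, x, y, t=10, n=9):
    """Segment test for the pixel at column x, row y."""
    centre = int(img[y, x])  # int() avoids uint8 overflow in centre + t
    ring = [int(img[y + dy, x + dx]) for dx, dy in CIRCLE]
    brighter = [p > centre + t for p in ring]
    darker = [p < centre - t for p in ring]
    return max(longest_run(brighter), longest_run(darker)) >= n

corner = np.zeros((20, 20), dtype=np.uint8)
corner[10:, 10:] = 255  # bright quadrant whose corner is at (10, 10)
edge = np.zeros((20, 20), dtype=np.uint8)
edge[:, 10:] = 255      # straight vertical edge through x = 10
print(is_fast_corner(corner, 10, 10), is_fast_corner(edge, 10, 10))
# → True False
```

At the quadrant corner 11 contiguous circle pixels are darker than the centre, which passes the 9-of-16 test; on the straight edge only 7 are, so the edge pixel is rejected.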

```python
import cv2

# Load the image directly as grayscale (flag 0)
img = cv2.imread('images/desk.jpg', 0)

# Defaults: threshold 10, non-maximum suppression on, TYPE_9_16 neighbourhood
fast = cv2.FastFeatureDetector_create()
keypoints = fast.detect(img, None)
imgs = cv2.drawKeypoints(img, keypoints, outImage=None, color=(0, 0, 255))

print("Threshold:", fast.getThreshold())
print("nonmaxSuppression:", fast.getNonmaxSuppression())
print("neighborhood:", fast.getType())
print("Total keypoints with nonmaxSuppression:", len(keypoints))

# Save and show the result with non-maximum suppression
cv2.imwrite('fast_desk.png', imgs)
cv2.imshow('fast', imgs)
if cv2.waitKey(0) & 0xff == ord("q"):
    cv2.destroyAllWindows()
```

Result:

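5、BRIEF: a feature descriptor

BRIEF is not a feature detection algorithm; it is a descriptor. A keypoint descriptor is a compact representation of the image region around a keypoint. Because the keypoint descriptors of two images can be compared to find what they have in common, descriptors serve as the basis for feature matching. Recommended reading: BRIEF特征提取 (BRIEF feature extraction)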

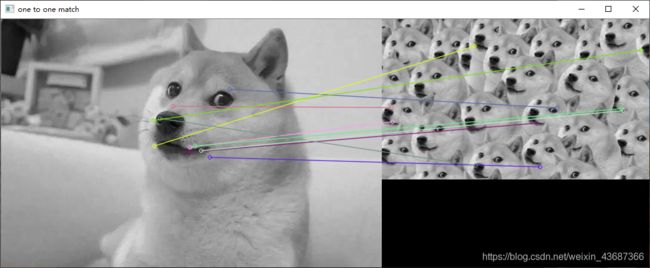

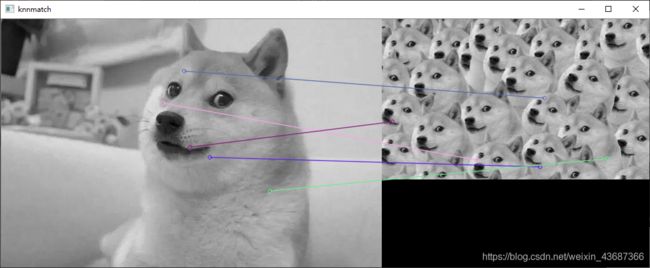

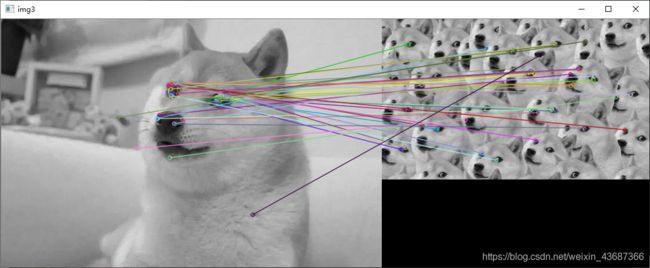
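BRIEF turns a patch into a binary string from simple intensity comparisons: for each of n pre-chosen point pairs (p1, p2) inside a smoothed patch around the keypoint it emits 1 if I(p1) < I(p2) and 0 otherwise, and two descriptors are compared by Hamming distance (the number of differing bits). The NumPy sketch below assumes the image has already been Gaussian-smoothed (for example with cv2.GaussianBlur), draws 256 pairs uniformly at random from a 31×31 patch (one of several possible sampling strategies; this choice is an assumption), and uses hypothetical function names.

```python
import numpy as np

def make_pairs(n_bits=256, patch_size=31, seed=0):
    """Random test pairs (x1, y1, x2, y2) as offsets from the patch centre."""
    rng = np.random.default_rng(seed)
    half = patch_size // 2
    return rng.integers(-half, half + 1, size=(n_bits, 4))

def brief_descriptor(smoothed, x, y, pairs):
    """One bit per pair (1 if I(p1) < I(p2)), packed 8 bits per byte."""
    bits = np.array([smoothed[y + y1, x + x1] < smoothed[y + y2, x + x2]
                     for x1, y1, x2, y2 in pairs], dtype=np.uint8)
    return np.packbits(bits)

def hamming(d1, d2):
    """Number of differing bits between two packed descriptors."""
    return int(np.unpackbits(np.bitwise_xor(d1, d2)).sum())

rng = np.random.default_rng(1)
smoothed = rng.integers(0, 200, size=(64, 64))  # stands in for a pre-smoothed image
pairs = make_pairs()
d = brief_descriptor(smoothed, 32, 32, pairs)
d_brighter = brief_descriptor(smoothed + 50, 32, 32, pairs)
print(len(d), hamming(d, d_brighter))
# → 32 0
```

Because each bit only records which of two pixels is brighter, a uniform brightness change leaves the descriptor untouched, and matching reduces to cheap XOR and bit counting.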

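6、ORB: oriented FAST plus rotation-invariant BRIEF

Brute-force matching comes in two forms: 1) one-to-one matching, where each descriptor keeps its single best match; 2) k-best matching, where each descriptor keeps its k nearest matches.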
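Both matching modes are easy to write out directly. Brute force compares every descriptor of the first image with every descriptor of the second. One-to-one matching with crossCheck=True keeps a pair only if each descriptor is the other's nearest neighbour; k-best matching with k = 2 keeps the nearest match only when it is clearly closer than the second (Lowe's ratio test, with the same 0.75 used by the SIFT example in this section). The NumPy sketch below uses Euclidean (L2) distance, the default of cv2.BFMatcher() and suitable for SIFT; binary descriptors such as ORB's would use Hamming distance instead. The function names are hypothetical.

```python
import numpy as np

def pairwise_l2(des1, des2):
    """Euclidean distance between every row of des1 and every row of des2."""
    diff = des1[:, None, :].astype(np.float64) - des2[None, :, :].astype(np.float64)
    return np.sqrt((diff ** 2).sum(axis=2))

def match_cross_check(des1, des2):
    """One-to-one matches (i, j) where i and j are each other's nearest neighbour."""
    d = pairwise_l2(des1, des2)
    best12 = d.argmin(axis=1)  # nearest des2 index for each des1 row
    best21 = d.argmin(axis=0)  # nearest des1 index for each des2 row
    return [(i, int(j)) for i, j in enumerate(best12) if best21[j] == i]

def match_ratio_test(des1, des2, ratio=0.75):
    """Keep the nearest neighbour only if it beats the second nearest by the ratio."""
    d = pairwise_l2(des1, des2)
    order = np.argsort(d, axis=1)[:, :2]  # two nearest neighbours per query
    good = []
    for i, (j1, j2) in enumerate(order):
        if d[i, j1] < ratio * d[i, j2]:
            good.append((i, int(j1)))
    return good

des2 = np.array([[0.0, 0.0], [10.0, 0.0], [0.0, 10.0]])
des1 = np.array([[0.1, 0.0], [0.0, 9.9], [5.0, 0.0]])  # last row is ambiguous
print(match_cross_check(des1, des2), match_ratio_test(des1, des2))
# → [(0, 0), (1, 2)] [(0, 0), (1, 2)]
```

The third query sits exactly between two candidates, so both strategies discard it: cross-checking because its nearest neighbour prefers another query, the ratio test because its two best distances are equal.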

```python
import cv2

img2 = cv2.imread('../image/caicai.jpg', 0)
img1 = cv2.imread('../image/cai.jpg', 0)

def cv_show(name, img):
    cv2.imshow(name, img)
    cv2.waitKey(0)
    cv2.destroyAllWindows()

# cv_show('img1', img1)
# cv_show('img2', img2)

sift = cv2.SIFT_create()  # cv2.xfeatures2d.SIFT_create() before OpenCV 4.4
kp1, des1 = sift.detectAndCompute(img1, None)
kp2, des2 = sift.detectAndCompute(img2, None)

# One-to-one matching: crossCheck keeps only pairs that are each other's best match
# bf = cv2.BFMatcher(crossCheck=True)
# matches = bf.match(des1, des2)
# matches = sorted(matches, key=lambda x: x.distance)
# img3 = cv2.drawMatches(img1, kp1, img2, kp2, matches[:10], None, flags=2)
# cv_show('img3', img3)

# k-best matching: two nearest neighbours per descriptor, then Lowe's ratio test
bf = cv2.BFMatcher()
matches = bf.knnMatch(des1, des2, k=2)
good = []
for pair in matches:
    # knnMatch can return fewer than k neighbours, so guard the unpacking
    if len(pair) == 2:
        m, n = pair
        if m.distance < 0.75 * n.distance:
            good.append([m])

img3 = cv2.drawMatchesKnn(img1, kp1, img2, kp2, good, None, flags=2)
cv_show('img3', img3)
```

ORB:

```python
import cv2

img1 = cv2.imread('../image/cai.jpg', cv2.IMREAD_GRAYSCALE)
img2 = cv2.imread('../image/caicai.jpg', cv2.IMREAD_GRAYSCALE)

orb = cv2.ORB_create()
kp1, des1 = orb.detectAndCompute(img1, None)
kp2, des2 = orb.detectAndCompute(img2, None)

# Brute-force matching; ORB descriptors are binary, so compare them by Hamming distance
bf = cv2.BFMatcher(cv2.NORM_HAMMING, crossCheck=True)
matches = bf.match(des1, des2)
matches = sorted(matches, key=lambda x: x.distance)

# Draw the 40 best matches (pass None so OpenCV allocates the output image)
img3 = cv2.drawMatches(img1, kp1, img2, kp2, matches[:40], None, flags=2)
cv2.imshow('img3', img3)
cv2.waitKey(0)
cv2.destroyAllWindows()
```
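The "oriented" part of ORB's oriented FAST gives each keypoint an angle through the intensity centroid: over a circular patch, compute the moments m10 = Σ x·I(x, y) and m01 = Σ y·I(x, y) with coordinates measured from the keypoint, and take θ = atan2(m01, m10). ORB then steers its BRIEF sampling pattern by θ, which is where its rotation invariance comes from. A NumPy sketch of just the angle computation follows; the function name is hypothetical and an odd-sized square patch centred on the keypoint is assumed.

```python
import numpy as np

def intensity_centroid_angle(patch):
    """Orientation in radians of an odd-sized square patch centred on a keypoint.

    x grows to the right and y grows downwards, as in image coordinates.
    """
    r = patch.shape[0] // 2
    ys, xs = np.mgrid[-r:r + 1, -r:r + 1]
    inside = xs ** 2 + ys ** 2 <= r ** 2  # restrict to a circular region
    p = patch.astype(np.float64) * inside
    m10 = (xs * p).sum()
    m01 = (ys * p).sum()
    return float(np.arctan2(m01, m10))

right = np.zeros((31, 31))
right[:, 16:] = 1.0  # brighter to the right of the centre
down = np.zeros((31, 31))
down[16:, :] = 1.0   # brighter below the centre
print(intensity_centroid_angle(right), intensity_centroid_angle(down))
```

A patch that is brighter on the right gets an angle of 0, and one that is brighter below gets π/2 (downwards, because y points down in images); rotating the patch rotates the angle with it.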