深度学习pytorch分层、 分参数设置不同的学习率等

1.

对于bias参数和weight参数,设置不同的学习率

{'params': get_parameters(model, bias=False)},

{'params': get_parameters(model, bias=True),

'lr': args.lr * 2, 'weight_decay': 0},

对于不同的层,做不同的处理

for m in model.modules():

if xxx

else if xxx

def get_parameters(model, bias=False):

import torch.nn as nn

modules_skipped = (

nn.ReLU,

nn.MaxPool2d,

nn.Dropout2d,

nn.Sequential,

torchfcn.models.FCN32s,

torchfcn.models.FCN16s,

torchfcn.models.FCN8s,

)

for m in model.modules():

if isinstance(m, nn.Conv2d):

if bias:

yield m.bias

else:

yield m.weight

elif isinstance(m, nn.ConvTranspose2d):

# weight is frozen because it is just a bilinear upsampling

if bias:

assert m.bias is None

elif isinstance(m, modules_skipped):

continue

else:

raise ValueError('Unexpected module: %s' % str(m))

optim = torch.optim.SGD(

[

{'params': get_parameters(model, bias=False)},

{'params': get_parameters(model, bias=True),

'lr': args.lr * 2, 'weight_decay': 0},

],

lr=args.lr,

momentum=args.momentum,

weight_decay=args.weight_decay)

关键点解析:

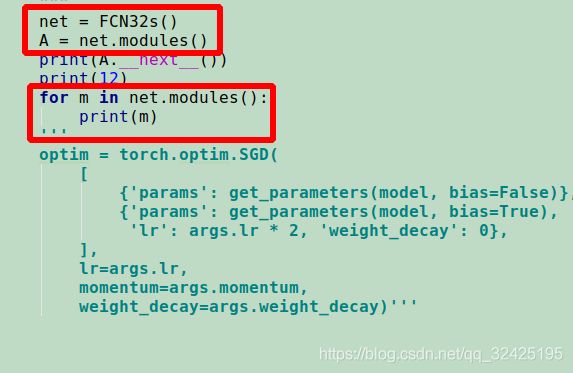

点1:首先,

for m in model.modules()中,m依次是什么

以自定义的网络FCN32s为例,m是FCN32s网络module和FCN32s的init函数(只包括init()函数)中所有定义的net module

代码:

自定义的FCN32s网络

class FCN32s(nn.Module):

def __init__(self, n_class=21):

super(FCN32s, self).__init__()

#将每个层都定义为FCN32s类的参数

# conv1

self.conv1_1 = nn.Conv2d(3, 64, 3, padding=100)

self.relu1_1 = nn.ReLU(inplace=True)

self.conv1_2 = nn.Conv2d(64, 64, 3, padding=1)

self.relu1_2 = nn.ReLU(inplace=True)

self.pool1 = nn.MaxPool2d(2, stride=2, ceil_mode=True) # 1/2

# conv2

self.conv2_1 = nn.Conv2d(64, 128, 3, padding=1)

self.relu2_1 = nn.ReLU(inplace=True)

self.conv2_2 = nn.Conv2d(128, 128, 3, padding=1)

self.relu2_2 = nn.ReLU(inplace=True)

self.pool2 = nn.MaxPool2d(2, stride=2, ceil_mode=True) # 1/4

# conv3

self.conv3_1 = nn.Conv2d(128, 256, 3, padding=1)

self.relu3_1 = nn.ReLU(inplace=True)

self.conv3_2 = nn.Conv2d(256, 256, 3, padding=1)

self.relu3_2 = nn.ReLU(inplace=True)

self.conv3_3 = nn.Conv2d(256, 256, 3, padding=1)

self.relu3_3 = nn.ReLU(inplace=True)

self.pool3 = nn.MaxPool2d(2, stride=2, ceil_mode=True) # 1/8

# conv4

self.conv4_1 = nn.Conv2d(256, 512, 3, padding=1)

self.relu4_1 = nn.ReLU(inplace=True)

self.conv4_2 = nn.Conv2d(512, 512, 3, padding=1)

self.relu4_2 = nn.ReLU(inplace=True)

self.conv4_3 = nn.Conv2d(512, 512, 3, padding=1)

self.relu4_3 = nn.ReLU(inplace=True)

self.pool4 = nn.MaxPool2d(2, stride=2, ceil_mode=True) # 1/16

# conv5

self.conv5_1 = nn.Conv2d(512, 512, 3, padding=1)

self.relu5_1 = nn.ReLU(inplace=True)

self.conv5_2 = nn.Conv2d(512, 512, 3, padding=1)

self.relu5_2 = nn.ReLU(inplace=True)

self.conv5_3 = nn.Conv2d(512, 512, 3, padding=1)

self.relu5_3 = nn.ReLU(inplace=True)

self.pool5 = nn.MaxPool2d(2, stride=2, ceil_mode=True) # 1/32

# fc6

self.fc6 = nn.Conv2d(512, 4096, 7)

self.relu6 = nn.ReLU(inplace=True)

self.drop6 = nn.Dropout2d()

# fc7

self.fc7 = nn.Conv2d(4096, 4096, 1)

self.relu7 = nn.ReLU(inplace=True)

self.drop7 = nn.Dropout2d()

#用于计算每个类的分值

self.score_fr = nn.Conv2d(4096, n_class, 1)

#ConvTranspose2d是Conv2d的逆操作,此处输入通道数和输出通道数都是n_class,上采样卷积核为64,步进为32.上采样反卷积的操作参考github

self.upscore = nn.ConvTranspose2d(n_class, n_class, 64, stride=32, bias=False)

self._initialize_weights()

net.modules()打印的结果

FCN32s(

(conv1_1): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(100, 100))

(relu1_1): ReLU(inplace)

(conv1_2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(relu1_2): ReLU(inplace)

(pool1): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=True)

(conv2_1): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(relu2_1): ReLU(inplace)

(conv2_2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(relu2_2): ReLU(inplace)

(pool2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=True)

(conv3_1): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(relu3_1): ReLU(inplace)

(conv3_2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(relu3_2): ReLU(inplace)

(conv3_3): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(relu3_3): ReLU(inplace)

(pool3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=True)

(conv4_1): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(relu4_1): ReLU(inplace)

(conv4_2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(relu4_2): ReLU(inplace)

(conv4_3): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(relu4_3): ReLU(inplace)

(pool4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=True)

(conv5_1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(relu5_1): ReLU(inplace)

(conv5_2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(relu5_2): ReLU(inplace)

(conv5_3): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(relu5_3): ReLU(inplace)

(pool5): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=True)

(fc6): Conv2d(512, 4096, kernel_size=(7, 7), stride=(1, 1))

(relu6): ReLU(inplace)

(drop6): Dropout2d(p=0.5)

(fc7): Conv2d(4096, 4096, kernel_size=(1, 1), stride=(1, 1))

(relu7): ReLU(inplace)

(drop7): Dropout2d(p=0.5)

(score_fr): Conv2d(4096, 21, kernel_size=(1, 1), stride=(1, 1))

(upscore): ConvTranspose2d(21, 21, kernel_size=(64, 64), stride=(32, 32), bias=False)

)

Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(100, 100))

ReLU(inplace)

Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

ReLU(inplace)

MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=True)

Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

ReLU(inplace)

Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

ReLU(inplace)

MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=True)

Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

ReLU(inplace)

Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

ReLU(inplace)

Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

ReLU(inplace)

MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=True)

Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

ReLU(inplace)

Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

ReLU(inplace)

Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

ReLU(inplace)

MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=True)

Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

ReLU(inplace)

Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

ReLU(inplace)

Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

ReLU(inplace)

MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=True)

Conv2d(512, 4096, kernel_size=(7, 7), stride=(1, 1))

ReLU(inplace)

Dropout2d(p=0.5)

Conv2d(4096, 4096, kernel_size=(1, 1), stride=(1, 1))

ReLU(inplace)

Dropout2d(p=0.5)

Conv2d(4096, 21, kernel_size=(1, 1), stride=(1, 1))

ConvTranspose2d(21, 21, kernel_size=(64, 64), stride=(32, 32), bias=False)

Process finished with exit code 0这样,就可以通过for m in net.modules对FCN32s中不同的网络子模块做不同的处理。

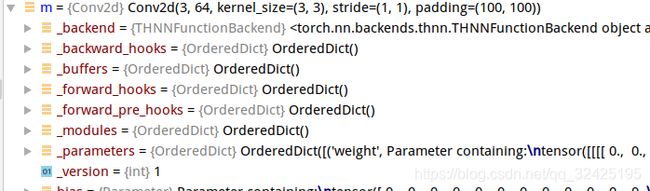

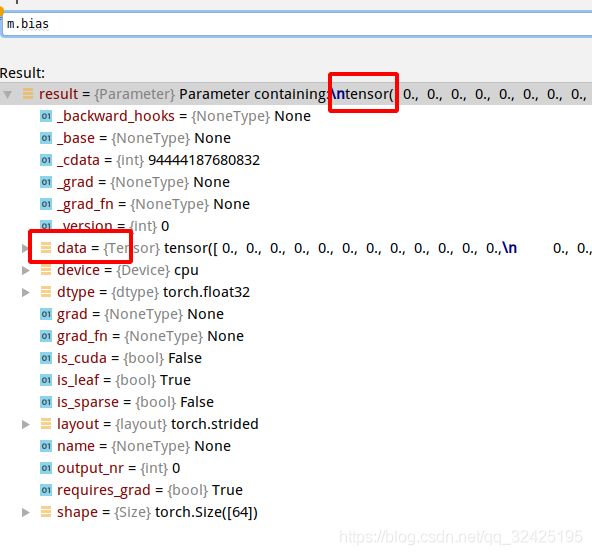

每次遍历时,m的类型时nn.Modules神经网络,m.bias的类型是tensor

点2:

{'params': get_parameters(model, bias=False)}中,get_parameters返回的是某个神经网络的参数,是一个tensor。我猜测,当参数更新时,要更新到这个参数时,就会查询这个参数的更新方法,而这个参数的更新方法就是通过上面截图来指定的