Hadoop3.3.0入门到架构篇之一

Hadoop入门到架构篇之一

Hadoop组成

HDFS (浏览器端口号50070(low) / 9870(high))

NameNode(nn) : 存储文件的元数据如文件名,文件目录结构,文件属性(生成时间副本数,文件权限),以及每个文件的块列表和所在的DataNode.

DataNode(dn) : 在本地文件系统存储块数据,以及块数据校验和.

SencondayNameNode : 用来监控HDFS状态的辅助后台程序,每个一段时间获取HDFS元数据的快照

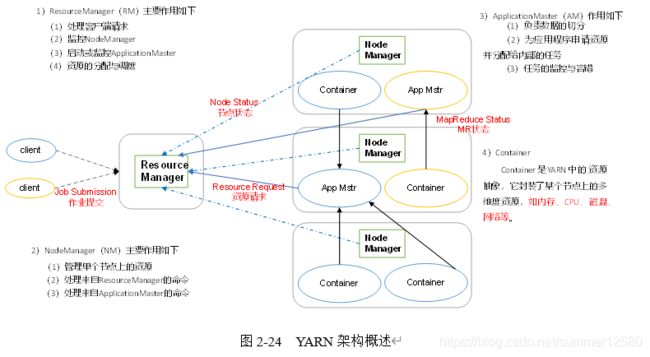

Yarn架构

MapReduce架构

MapReduce将计算过程分为两个阶段:Map和Reduce,如图2-25所示

1)Map阶段并行处理输入数据

2)Reduce阶段对Map结果进行汇总

Hadoop本地模式

官方grep案例

bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.3.0.jar grep input output 'dfs[a-z.]+'

官方wordcount案例

bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.3.0.jar wordcount wc.txt output

Hadoop伪分布式模式

-

修改hadoop-env.sh,配置 JAVA_HOME

-

修改core-site.xml 配置namenode和data存放目录

<property> <name>fs.defaultFSname> <value>hdfs://hadoop101:9000value> property> <property> <name>hadoop.tmp.dirname> <value>/opt/module/hadoop-3.3.0/data/tmpvalue> property> -

修改hdfs-site.xml 修改副本数,默认是:3

<property> <name>dfs.replicationname> <value>1value> property> -

启动集群

第一次启动先格式化 NameNode ,以后就不需要格式化了

bin/hdfs namenode -format启动集群

#旧命令 hadoop-daemon.sh {start|status|stop} xxx bin/hadoop-daemon.sh start namenode bin/hadoop-daemon.sh start datanode ############################################## #新命令 hdfs-daemon {start|status|stop} xxx hdfs --daemon start namenode hdfs --daemon start datanode ############################################## # jps 查看进程 jps 3728 Jps 3380 DataNode 3224 NameNode浏览器查看 旧版本50070 新版本9870

浏览器地址 http://hadoop100:9870/

-

操作hdfs

# hdfs上创建文件夹 hdfs dfs -mkdir -p /user/dev # 上传文件到hdfs hdfs dfs -put wc.txt /user/dev # hdfs 查看文件列表 hdfs dfs -ls / # hdfs 查看文件内容 hdfs dfs -cat /user/dev/wc.txt # 运行wordcount案例 hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.3.0.jar wordcount /user/dev/wc.txt output # 查看MR运行后的结果 hdfs dfs -cat /output/par-r-00000

配置&启动YARN&运行MR

-

修改

yarn-sh & mapred-env.sh配置JAVA_HOME -

配置yarn-site.xml

yarn.nodemanager.aux-services mapreduce_shuffle yarn.resourcemanager.hostname hadoop100 -

配置 mapred-site.xml

<property> <name>mapreduce.framework.namename> <value>yarnvalue> property> -

启动YARN (必须保证 NameNode DataNode已经启动) 笔者从这开始就只用新命令了

# 启动 resourcemanager 和 nodemanager yarn --daemon start resourcemanager yarn --daemon start nodemanager ########################################## # jps 查看进程 jps 3380 DataNode 4117 NodeManager 3224 NameNode 4185 Jps 3690 ResourceManager浏览器地址 : http://hadoop100:8088/ , 暂时还看不了 Applications 的 History . 需要在MR配置文件配置历史服务器才可以看.

配置历史服务器

-

修改 mapred-site.xml (浏览器访问端口你自己任意配置 我这里是19888)

<property> <name>mapreduce.jobhistory.addressname> <value>hadoop100:10020value> property> <property> <name>mapreduce.jobhistory.webapp.addressname> <value>hadoop100:19888value> property> -

启动历史服务器

#旧命令 mr-jobhistory-daemon.sh {start|status|stop} xxx mr-jobhistory-daemon.sh start historyserver ############################################## #新命令 mapred --daemon {start|status|stop} xxx mapred --daemon start historyserver ########################################## # jps 查看进程 jps 7828 JobHistoryServer 5511 DataNode 6763 ResourceManager 7021 NodeManager 7885 Jps 5407 NameNode浏览器地址 : http://hadoop100:19888/jobhistory

配置日志聚集 日志上传到HDFS

日志聚集 :应用运行完成以后,将程序运行日志信息上传到HDFS系统上

日志聚集功能好处 : 可以方便的查看到程序运行详情,方便开发调试

注意 : 开启日志聚集功能,需要重新启动NodeManager , ResourceManager & HistoryManager

步骤 :

-

配置 yarn-site.xml

-

重启 YARN和历史服务器 ( NodeManager , ResourceManager & HistoryManager )

yarn --daemon stop resourcemanager yarn --daemon stop nodemanager mapred --daemon stop historyserver yarn --daemon start resourcemanager yarn --daemon start nodemanager mapred --daemon start historyserver # 测试 删除output,重新测试wordcount...查看日志

完全分布式模式

准备:

(1) 3台机机器(关闭防火墙、静态ip、主机名称)

(2) 安装JDK & 安装Hadoop

(3) 配置环境变量

(4) 配置ssh免密登录

(5) 配置集群,并分发其他机器修改部分

(6) 群起并测试集群

前3步略过~

ssh免密登录 ssh命令(注意用户一致)

我这里为了方便拷贝文件, 我把 root 用户 和 dev 用户 都配置了免密登录的. 启动hadoop用的dev用户(不要用root,人家启动脚本也会提示你)

-

生成 公私钥

ssh-keygen -t rsaid_rsa : 公钥

id_rsa.pub : 私钥

authorized_keys : 存放授权过的免密登录服务器公钥

-

将公钥拷贝到目标机器(包括自己)

ssh-copy-id hadoop111 ssh-copy-id hadoop112 ssh-copy-id hadoop113

每台机器 完成上面1,2 步

集群分发脚本xsync的编写

(每个机器得可以执行rsync,没有安装一下 yum -y install rsync )

-

scp 命令简介 : security copy , (src,dest地址都可以是远程地址)

scp -r hadoop-3.3.0 root@hadoop111:/opt/module

-

rsync 远程同步命令,差异文件更新,快于scp,命令src,dest地址同scp

rsync -rvl source_path dest_path

参数说明 :

-r : 递归

-v : 显示复制过程

-l : 拷贝符号链接

eg: rsync -rvl hadoop-3.3.0 root@hadoop112:/opt/moudule

指定要同步的文件/文件夹 就同步到指定的机器

可以将 自己写的 rsync.sh 放到/usr/local/bin目录,就可以全局使用

xsync.sh 脚本内容 :

#!/bin/bash

#1 获取输入参数个数,如果没有参数,直接退出

pcount=$#

if((pcount==0)); then

echo no args;

exit;

fi

#2 获取文件名称

p1=$1

fname=`basename $p1`

echo fname=$fname

#3 获取上级目录到绝对路径

pdir=`cd -P $(dirname $p1); pwd`

echo pdir=$pdir

#4 获取当前用户名称

user=`whoami`

#5 循环

for((host=112; host<114; host++)); do

echo ------------------- hadoop$host --------------

rsync -rvl $pdir/$fname $user@hadoop$host:$pdir

done

授权

chmod 777 xsync.sh

分发案例

xsync xsync

xsync /opt/module/hadoop-3.3.0/etc

集群部署计划 (yarn避免和namenode同一台机器)

namenode和resourcemanager在同一台机器会各分一半内存,这样的话整个集群服务器内存分配就没有最大化利用起来

| hadoop111 | hadoop112 | hadoop113 | |

|---|---|---|---|

| HDFS | NameNode DataNode | DataNode | SecondaryNameNode DataNode |

| YARN | NodeManager | ResourceManager NodeManager | NodeManager |

| 脚本 | start-dfs.sh | yarn-start.sh | — |

集群配置

-

环境变量配置 JAVA_HOME

hadoop-env.sh yarn-env.sh mapred-env.sh export JAVA_HOME=/opt/module/jdk1.8.0_221 -

修改 core-site.xml

<property> <name>fs.defaultFSname> <value>hdfs://hadoop111:9000value> property> <property> <name>hadoop.tmp.dirname> <value>/opt/module/hadoop-3.3.0/data/tmpvalue> property> -

修改 hdfs-site.xml

<property> <name>dfs.replicationname> <value>3value> property> <property> <name>dfs.namenode.secondary.http-addressname> <value>hadoop113:50090value> property> -

修改 yarn-site.xml

<property> <name>yarn.nodemanager.aux-servicesname> <value>mapreduce_shufflevalue> property> <property> <name>yarn.resourcemanager.hostnamename> <value>hadoop112value> property> <property> <name>yarn.application.classpathname> <value>/opt/module/hadoop-3.3.0/etc/hadoop:/opt/module/hadoop-3.3.0/share/hadoop/common/lib/*:/opt/module/hadoop-3.3.0/share/hadoop/common/*:/opt/module/hadoop-3.3.0/share/hadoop/hdfs:/opt/module/hadoop-3.3.0/share/hadoop/hdfs/lib/*:/opt/module/hadoop-3.3.0/share/hadoop/hdfs/*:/opt/module/hadoop-3.3.0/share/hadoop/mapreduce/*:/opt/module/hadoop-3.3.0/share/hadoop/yarn:/opt/module/hadoop-3.3.0/share/hadoop/yarn/lib/*:/opt/module/hadoop-3.3.0/share/hadoop/yarn/*value> property> -

修改 mapred-site.xml

<property> <name>mapreduce.framework.namename> <value>yarnvalue> property> -

集群配置 workers 不能有空格,空行,注意一下

vim /opt/module/hadoop-3.3.0/etc/hadoop/workers

默认内容 localhost ,把干掉添加 :

hadoop111 hadoop112 hadoop113

集群配置分发

xsync /opt/module/hadoop-3.3.0/

eg : 我在 /opt/module/hadoop-3.3.0/etc 位置,把 hadoop 配置分发到其它两台机器

群起集群

如果是集群第一次启动,需要格式化 namenode,格式化之前 先停止 namenode 和 datanode ,再删除 logs 和 data

如果是第一次启动集群格式化 namenode :

bin/hdfs namenode -format

群起 :

-

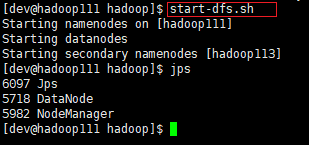

在NameNode机器上群起启动 HDFS 我这里是192.168.0.111

sbin/start-dfs.sh -

在放 ResourceManager 机器上启动 YARN

sbin/start-yarn.sh

hadoop111服务器 :

hadoop112服务器 :

hadoop113服务器 :

-

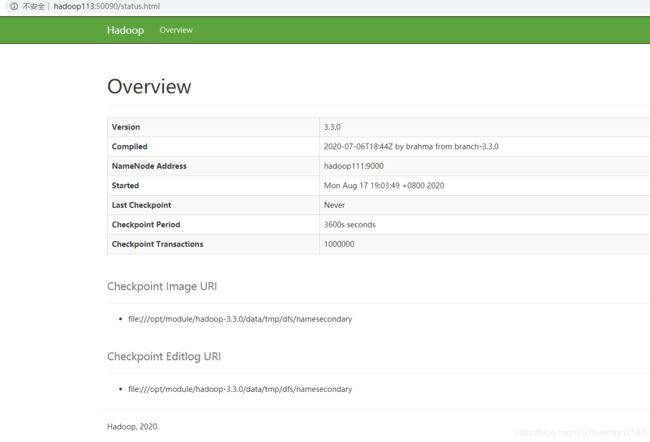

浏览器查看 SecondayNameNode

浏览器地址 : http://hadoop113:50090/

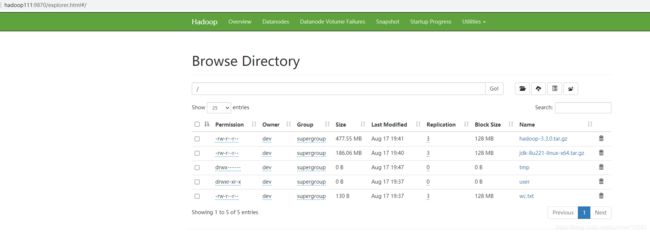

- 测试集群

hdfs dfs -mkdir -p /user/dev

hdfs dfs -put wcinput/wc.txt /

# 小文件上传

hdfs dfs -put wc.txt /

#大文件上传

hdfs dfs -put /opt/software/hadoop-3.3.0.tar.gz /

# wordcount测试

hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.3.0.jar wordcount /wc.txt /output

hdfs dfs -ls /

hdfs dfs -cat /output/part-r-00000

#hdfs的文件下载到本地

hdfs dfs -get wc.txt /opt/module/

#...自己测试下

集群启停命令

①单个启停 {}中选一个

hdfs --daemon {start | stop} {namenode | datanode | secondarynamenode}

yarn --daemon {start | stop} {resourcemanager | nodemanager}

②按模块起停

hdfs :

{ start-dfs.sh | stop-dfs.sh }

yarn :

{ start-yarn.sh | stop-yarn.sh }

集群时间同步

时间服务器机器设置(root用户)

ntp 没有安装就安装一下 yum -y install ntp

① vim /etc/ntp.cnf

# 修改1 打开注释 (表示192.168.1.0 ~ 192.168.1.255网段所有机器可以从这台机器查询和同步时间)

#restrict 192.168.1.0 mask 255.255.255.0 nomodify notrap

restrict 192.168.1.0 mask 255.255.255.0 nomodify notrap

#修改2 改为注释掉 (表示不使用互联网上的时间)

server 0.centos.pool.ntp.org iburst

server 1.centos.pool.ntp.org iburst

server 2.centos.pool.ntp.org iburst

server 3.centos.pool.ntp.org iburst

#server 0.centos.pool.ntp.org iburst

#server 1.centos.pool.ntp.org iburst

#server 2.centos.pool.ntp.org iburst

#server 3.centos.pool.ntp.org iburst

# 修改3 添加下面2句 (表示时间服务器节点丢失网络连接,可以采用本地时间为集群中其他节点提供时间同步)

server 127.127.1.0

fudge 127.127.1.0 stratum 10

② vim /etc/sysconfig/ntpd

# 增加 让硬件时间与系统时间一起同步

SYNC_HWCLOCK=yes

③ 重启 ntpd 服务

systemctl restart ntpd

④ 设置开机启动

systemctl enable ntpd

其他机器设置(root用户)

ntp 没有安装就安装一下 yum -y install ntp

① 定时更新时间,找时间服务器同步 这里用crontab , 每10分钟同步一次

*/10 * * * * /usr/sbin/ntpdate hadoop111

Hadoop源码编译(先看环境需要 BUILDING.txt)

两种编译方式 : ①最简单的方式 docker, 运行 官网脚本 start-build-env.sh即可;②非docker手动安装各种依赖环境编译

源码包里面有个BUILDING.txt文件说明,需要哪些环境,依赖;看完装完依赖再去编译;

编译前提: 3.3.0版本-centos非docker编译方式要求centos8

Build instructions for Hadoop

----------------------------------------------------------------------------------

Requirements:

* Unix System

* JDK 1.8

* Maven 3.3 or later

* Protocol Buffers 3.7.1 (if compiling native code)

* CMake 3.1 or newer (if compiling native code)

* Zlib devel (if compiling native code)

* Cyrus SASL devel (if compiling native code)

* One of the compilers that support thread_local storage: GCC 4.8.1 or later, Visual Studio,

Clang (community version), Clang (version for iOS 9 and later) (if compiling native code)

* openssl devel (if compiling native hadoop-pipes and to get the best HDFS encryption performance)

* Linux FUSE (Filesystem in Userspace) version 2.6 or above (if compiling fuse_dfs)

* Doxygen ( if compiling libhdfspp and generating the documents )

* Internet connection for first build (to fetch all Maven and Hadoop dependencies)

* python (for releasedocs)

* bats (for shell code testing)

* Node.js / bower / Ember-cli (for YARN UI v2 building)

Mac编译流程:

----------------------------------------------------------------------------------

Building on macOS (without Docker)

----------------------------------------------------------------------------------

Installing required dependencies for clean install of macOS 10.14:

* Install Xcode Command Line Tools

$ xcode-select --install

* Install Homebrew

$ /usr/bin/ruby -e "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/master/install)"

* Install OpenJDK 8

$ brew tap AdoptOpenJDK/openjdk

$ brew cask install adoptopenjdk8

* Install maven and tools

$ brew install maven autoconf automake cmake wget

* Install native libraries, only openssl is required to compile native code,

you may optionally install zlib, lz4, etc.

$ brew install openssl

* Protocol Buffers 3.7.1 (required to compile native code)

$ wget https://github.com/protocolbuffers/protobuf/releases/download/v3.7.1/protobuf-java-3.7.1.tar.gz

$ mkdir -p protobuf-3.7 && tar zxvf protobuf-java-3.7.1.tar.gz --strip-components 1 -C protobuf-3.7

$ cd protobuf-3.7

$ ./configure

$ make

$ make check

$ make install

$ protoc --version

Note that building Hadoop 3.1.1/3.1.2/3.2.0 native code from source is broken

on macOS. For 3.1.1/3.1.2, you need to manually backport YARN-8622. For 3.2.0,

you need to backport both YARN-8622 and YARN-9487 in order to build native code.

----------------------------------------------------------------------------------

Building command example:

* Create binary distribution with native code but without documentation:

$ mvn package -Pdist,native -DskipTests -Dmaven.javadoc.skip \

-Dopenssl.prefix=/usr/local/opt/openssl

Note that the command above manually specified the openssl library and include

path. This is necessary at least for Homebrewed OpenSSL.

CentOS 8 编译流程:

----------------------------------------------------------------------------------

Building on CentOS 8

----------------------------------------------------------------------------------

* Install development tools such as GCC, autotools, OpenJDK and Maven.

$ sudo dnf group install --with-optional 'Development Tools'

$ sudo dnf install java-1.8.0-openjdk-devel maven

* Install Protocol Buffers v3.7.1.

$ git clone https://github.com/protocolbuffers/protobuf

$ cd protobuf

$ git checkout v3.7.1

$ autoreconf -i

$ ./configure --prefix=/usr/local

$ make

$ sudo make install

$ cd ..

* Install libraries provided by CentOS 8.

$ sudo dnf install libtirpc-devel zlib-devel lz4-devel bzip2-devel openssl-devel cyrus-sasl-devel libpmem-devel

* Install optional dependencies (snappy-devel).

$ sudo dnf --enablerepo=PowerTools snappy-devel

* Install optional dependencies (libzstd-devel).

$ sudo dnf install https://dl.fedoraproject.org/pub/epel/epel-release-latest-8.noarch.rpm

$ sudo dnf --enablerepo=epel install libzstd-devel

* Install optional dependencies (isa-l).

$ sudo dnf --enablerepo=PowerTools install nasm

$ git clone https://github.com/intel/isa-l

$ cd isa-l/

$ ./autogen.sh

$ ./configure

$ make

$ sudo make install

Windows编译流程:

----------------------------------------------------------------------------------

Building on Windows

----------------------------------------------------------------------------------

Requirements:

* Windows System

* JDK 1.8

* Maven 3.0 or later

* Protocol Buffers 3.7.1

* CMake 3.1 or newer

* Visual Studio 2010 Professional or Higher

* Windows SDK 8.1 (if building CPU rate control for the container executor)

* zlib headers (if building native code bindings for zlib)

* Internet connection for first build (to fetch all Maven and Hadoop dependencies)

* Unix command-line tools from GnuWin32: sh, mkdir, rm, cp, tar, gzip. These

tools must be present on your PATH.

* Python ( for generation of docs using 'mvn site')

Unix command-line tools are also included with the Windows Git package which

can be downloaded from http://git-scm.com/downloads

which is problematic if running a 64-bit system.

The Windows SDK 8.1 is available to download at:

http://msdn.microsoft.com/en-us/windows/bg162891.aspx

Cygwin is not required.

----------------------------------------------------------------------------------

Building:

Keep the source code tree in a short path to avoid running into problems related

to Windows maximum path length limitation (for example, C:\hdc).

There is one support command file located in dev-support called win-paths-eg.cmd.

It should be copied somewhere convenient and modified to fit your needs.

win-paths-eg.cmd sets up the environment for use. You will need to modify this

file. It will put all of the required components in the command path,

configure the bit-ness of the build, and set several optional components.

Several tests require that the user must have the Create Symbolic Links

privilege.

All Maven goals are the same as described above with the exception that

native code is built by enabling the 'native-win' Maven profile. -Pnative-win

is enabled by default when building on Windows since the native components

are required (not optional) on Windows.

If native code bindings for zlib are required, then the zlib headers must be

deployed on the build machine. Set the ZLIB_HOME environment variable to the

directory containing the headers.

set ZLIB_HOME=C:\zlib-1.2.7

At runtime, zlib1.dll must be accessible on the PATH. Hadoop has been tested

with zlib 1.2.7, built using Visual Studio 2010 out of contrib\vstudio\vc10 in

the zlib 1.2.7 source tree.

http://www.zlib.net/

----------------------------------------------------------------------------------

Building distributions:

* Build distribution with native code : mvn package [-Pdist][-Pdocs][-Psrc][-Dtar][-Dmaven.javadoc.skip=true]

----------------------------------------------------------------------------------

Running compatibility checks with checkcompatibility.py

Invoke `./dev-support/bin/checkcompatibility.py` to run Java API Compliance Checker

to compare the public Java APIs of two git objects. This can be used by release

managers to compare the compatibility of a previous and current release.

As an example, this invocation will check the compatibility of interfaces annotated as Public or LimitedPrivate:

./dev-support/bin/checkcompatibility.py --annotation org.apache.hadoop.classification.InterfaceAudience.Public --annotation org.apache.hadoop.classification.InterfaceAudience.LimitedPrivate --include "hadoop.*" branch-2.7.2 trunk

----------------------------------------------------------------------------------

Changing the Hadoop version declared returned by VersionInfo

If for compatibility reasons the version of Hadoop has to be declared as a 2.x release in the information returned by

org.apache.hadoop.util.VersionInfo, set the property declared.hadoop.version to the desired version.

For example: mvn package -Pdist -Ddeclared.hadoop.version=2.11

If unset, the project version declared in the POM file is used.