Hive Case Study in Detail: Implementing the MapReduce Data-Cleaning Stage and the Hive Data-Processing Stage of a Project (resource link at the end)

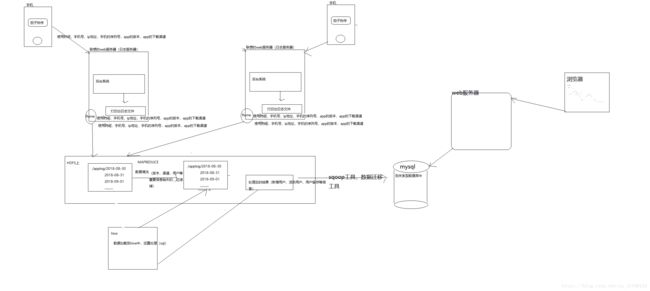

1. Comprehensive Data ETL Case Study

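Background: Lenovo Group has an app called 茄子快传 (SHAREit), with hundreds of millions of active users, most of them in developing countries.

We need to build a data analysis system that runs various analyses on the app's user-behavior data.

Of the overall process, only two stages involve MapReduce and Hive: data cleaning (MapReduce) and the computation in Hive. These are the parts covered below.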

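Requirements. Each raw log record is one JSON object per line, for example (pretty-printed here; the trailing // annotations explain the fields and are not part of the data):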

{
  "header": {
    "cid_sn": "1501004207EE98AA",                   // SN code
    "mobile_data_type": "",
    "os_ver": "9",                                  // OS version
    "mac": "88:1f:a1:03:7d:a8",                     // MAC (physical) address
    "resolution": "2560x1337",                      // screen resolution
    "commit_time": "1473399829041",                 // commit time (epoch milliseconds)
    "sdk_ver": "103",                               // SDK version
    "device_id_type": "mac",                        // type of device ID
    "city": "江门市",                                // city
    "android_id": "",                               // Android ID (Android devices only)
    "device_model": "MacBookPro11,1",               // device model
    "carrier": "中国xx",                             // mobile carrier
    "promotion_channel": "1",                       // promotion channel
    "app_ver_name": "1.7",                          // app version name
    "imei": "",                                     // IMEI (device identity number)
    "app_ver_code": "23",                           // internal version code
    "pid": "pid",
    "net_type": "3",                                // network type
    "device_id": "m.88:1f:a1:03:7d:a8",             // device ID
    "app_device_id": "m.88:1f:a1:03:7d:a8",
    "release_channel": "appstore",                  // release channel
    "country": "CN",
    "time_zone": "28800000",                        // time-zone offset in ms (28800000 = UTC+8)
    "os_name": "ios",                               // OS type
    "manufacture": "apple",                         // manufacturer
    "commit_id": "fde7ee2e48494b24bf3599771d7c2a78", // event ID
    "app_token": "XIAONIU_I",                       // app identifier
    "account": "none",                              // login account
    "app_id": "com.appid.xiaoniu",                  // app group (package) name
    "build_num": "YVF6R16303000403",                // build number
    "language": "zh"                                // system language
  }
}

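1. Data preprocessing (data cleaning)

--> If any of the fields release_channel, device_id, city, device_id_type, app_ver_name is missing, drop the record

--> Convert each record into the form field,field,field,...

--> Append a field user_id to each record (its value is device_id)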
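The three cleaning rules are pure per-record logic, so they can be expressed without Hadoop at all. A minimal Java sketch, assuming the JSON header has already been parsed into a `Map<String, String>` (the class name `CleanRulesSketch` and the shortened column list are illustrative only), returns the flattened line, or `null` when the record must be dropped:

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class CleanRulesSketch {
    // Rule 1: a record missing any of these fields is dropped
    static final String[] REQUIRED = {
        "release_channel", "device_id", "city", "device_id_type", "app_ver_name"};

    // Returns the flattened line, or null if the record is filtered out.
    // columns = output column order (assumed to be agreed with the Hive table).
    static String clean(Map<String, String> header, List<String> columns) {
        for (String field : REQUIRED) {
            String v = header.get(field);
            if (v == null || v.trim().isEmpty()) {
                return null;
            }
        }
        List<String> out = new ArrayList<>();
        for (String c : columns) {
            // Rule 2: field,field,field,... (absent optional fields become "")
            String v = header.get(c);
            out.add(v == null ? "" : v);
        }
        // Rule 3: append user_id, whose value is device_id
        out.add(header.get("device_id"));
        return String.join(",", out);
    }

    public static void main(String[] args) {
        Map<String, String> header = new HashMap<>();
        header.put("release_channel", "appstore");
        header.put("device_id", "m.88:1f:a1:03:7d:a8");
        header.put("city", "Jiangmen");
        header.put("device_id_type", "mac");
        header.put("app_ver_name", "1.7");
        List<String> columns = List.of("city", "app_ver_name", "device_id");

        System.out.println(clean(header, columns)); // Jiangmen,1.7,m.88:1f:a1:03:7d:a8,m.88:1f:a1:03:7d:a8
        header.put("city", "");
        System.out.println(clean(header, columns)); // null
    }
}
```

Keeping the rules in a plain function like this makes them easy to unit-test before wiring them into a Mapper.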

2. Load the data into daily partitions of a Hive table

Compute each day's active users and new users

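3. Statistical analysis

Daily new users --> the fact (the measure being counted)

Dimensions --> every combination of them

| Version | Channel | City | Device | New users |
|---|---|---|---|---|
| all | all | all | all | 21949382 |
| all | all | all | specific device | xxx |
| all | all | specific city | all | yyyy |
| all | specific channel | all | all | yyyy |
| specific version | all | all | all | zzz |
| specific version | specific channel | all | all | zzz |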

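Encoding each dimension as 0 ("all") or 1 (a specific value), in the column order of the table above, gives 2^4 = 16 combinations:

0000, 0001, 0010, 0011, 0100, 0101, 0110, 0111, 1000, 1001, 1010, 1011, 1100, 1101, 1110, 1111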
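The 16 flags are simply the 4-bit binary numbers 0 to 15. A minimal Java sketch, assuming the bit order version, channel, city, device from the table above (the class and method names are illustrative), generates every flag and lists which dimensions it groups by:

```java
import java.util.ArrayList;
import java.util.List;

class DimensionFlags {
    // Bit order assumed from the table: version, channel, city, device (leftmost first)
    static final String[] DIMS = {"version", "channel", "city", "device"};

    // Renders a bitmask 0..15 as a 4-character flag such as "0101"
    static String flag(int mask) {
        StringBuilder sb = new StringBuilder();
        for (int i = DIMS.length - 1; i >= 0; i--) {
            sb.append((mask >> i) & 1);
        }
        return sb.toString();
    }

    // Dimensions grouped by under this flag; every other dimension is "all"
    static List<String> specificDims(String flag) {
        List<String> dims = new ArrayList<>();
        for (int i = 0; i < flag.length(); i++) {
            if (flag.charAt(i) == '1') {
                dims.add(DIMS[i]);
            }
        }
        return dims;
    }

    public static void main(String[] args) {
        for (int mask = 0; mask < (1 << DIMS.length); mask++) {
            String f = flag(mask);
            System.out.println(f + " -> group by " + specificDims(f));
        }
    }
}
```

Each flag then maps to one `group by` over its specific dimensions, which is exactly the pattern the Hive statements later in this article follow with three dimensions.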

1. Data Cleaning

The cleaning step is a map-only MapReduce job that parses each line with fastjson:

import java.io.IOException;

import org.apache.commons.lang.StringUtils;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import com.alibaba.fastjson.JSON;
import com.alibaba.fastjson.JSONException;
import com.alibaba.fastjson.JSONObject;

/**
 * Cleans the raw app log: drops incomplete records and flattens each
 * JSON header into one comma-separated line.
 *
 * @author root
 */
public class AppLogClean {

    public static class MapTask extends Mapper<LongWritable, Text, Text, NullWritable> {

        // Output column order; must match the column order of the Hive table ods_app_log
        private static final String[] FIELDS = {
            "cid_sn", "mobile_data_type", "os_ver", "mac", "resolution", "commit_time",
            "sdk_ver", "device_id_type", "city", "android_id", "device_model", "carrier",
            "promotion_channel", "app_ver_name", "imei", "app_ver_code", "pid", "net_type",
            "device_id", "app_device_id", "release_channel", "country", "time_zone",
            "os_name", "manufacture", "commit_id", "app_token", "account", "app_id",
            "build_num", "language" };

        // Records missing any of these key fields are dropped
        private static final String[] REQUIRED = {
            "release_channel", "device_id", "city", "device_id_type", "app_ver_name",
            "os_name", "mac" };

        private final StringBuilder sb = new StringBuilder();
        private final Text k = new Text();

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // Parse the line; skip lines that are not valid JSON or have no header
            JSONObject header;
            try {
                JSONObject record = JSON.parseObject(value.toString());
                header = (record == null) ? null : record.getJSONObject("header");
            } catch (JSONException e) {
                return;
            }
            if (header == null) {
                return;
            }

            // Drop the record if any key field is missing
            for (String field : REQUIRED) {
                if (StringUtils.isBlank(header.getString(field))) {
                    return;
                }
            }
            // Android devices must also carry an android_id (the OS is in os_name)
            if ("android".equals(header.getString("os_name"))
                    && StringUtils.isBlank(header.getString("android_id"))) {
                return;
            }

            sb.setLength(0); // reset the reused buffer
            for (String field : FIELDS) {
                sb.append(clean(header.getString(field))).append(",");
            }
            // user_id column: per the requirement, its value is device_id
            sb.append(clean(header.getString("device_id")));

            k.set(sb.toString());
            context.write(k, NullWritable.get());
        }

        // null becomes "", and commas inside a value (e.g. device_model "MacBookPro11,1")
        // are replaced so they cannot shift columns in the comma-delimited Hive table
        private static String clean(String v) {
            return StringUtils.defaultString(v).replace(',', ';');
        }
    }

    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        Job job = Job.getInstance(conf);

        // Set the jar, the mapper and the output types
        job.setJarByClass(AppLogClean.class);
        job.setMapperClass(MapTask.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(NullWritable.class);

        FileInputFormat.addInputPath(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        // Map-only job: no reduce phase is needed
        job.setNumReduceTasks(0);

        boolean ret = job.waitForCompletion(true);
        System.exit(ret ? 0 : 1);
    }
}

2. Data Processing (Daily Active Users)

-- Create a table to hold the cleaned data
create table ods_app_log(
  cid_sn string,
  mobile_data_type string,
  os_ver string,
  mac string,
  resolution string,
  commit_time string,
  sdk_ver string,
  device_id_type string,
  city string,
  android_id string,
  device_model string,
  carrier string,
  promotion_channel string,
  app_ver_name string,
  imei string,
  app_ver_code string,
  pid string,
  net_type string,
  device_id string,
  app_device_id string,
  release_channel string,
  country string,
  time_zone string,
  os_name string,
  manufacture string,
  commit_id string,
  app_token string,
  account string,
  app_id string,
  build_num string,
  language string,
  uid string
)
partitioned by (day string)
row format delimited
fields terminated by ",";
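Because this table splits each line on every comma, each cleaned line has to contain exactly 32 comma-separated values (31 header fields plus uid). A value that itself contains a comma, such as the device_model "MacBookPro11,1" in the sample record, produces one column too many and shifts every later column. A minimal Java sketch (the class name `DelimiterCheck` is hypothetical) that counts columns the same way:

```java
import java.util.Collections;

class DelimiterCheck {
    // ods_app_log has 32 data columns: 31 header fields plus uid (day is a partition column)
    static final int EXPECTED_COLUMNS = 32;

    // Splits on every comma, as a comma field delimiter does; -1 keeps trailing empty fields
    static int columnCount(String line) {
        return line.split(",", -1).length;
    }

    static boolean fitsTable(String line) {
        return columnCount(line) == EXPECTED_COLUMNS;
    }

    public static void main(String[] args) {
        String good = String.join(",", Collections.nCopies(EXPECTED_COLUMNS, "x"));
        // Same line, but one value is the sample device_model "MacBookPro11,1"
        String bad = good.replaceFirst("x", "MacBookPro11,1");
        System.out.println(columnCount(good) + " " + fitsTable(good)); // 32 true
        System.out.println(columnCount(bad) + " " + fitsTable(bad));   // 33 false
    }
}
```

A check like this could be run over a sample of the cleaning output before loading; the alternatives are to strip commas from values during cleaning or to declare a delimiter that cannot occur in the data.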

-- Load the data
load data local inpath "/root/data/20170101" into table ods_app_log partition(day="20170101");

-- Create the daily active user table
create table etl_user_active_day(
  uid string,
  commit_time string,
  city string,
  release_channel string,
  app_ver_name string
) partitioned by (day string);

-- Compute the active user table: one row per uid per day (that user's earliest record)
-- 20170101
insert into table etl_user_active_day partition(day="20170101")
select uid, commit_time, city, release_channel, app_ver_name
from
(select
  uid, commit_time, city, release_channel, app_ver_name,
  row_number() over(partition by uid order by commit_time) as rn
from ods_app_log where day="20170101") tmp1
where tmp1.rn=1;

-- 20170102
insert into table etl_user_active_day partition(day="20170102")
select uid, commit_time, city, release_channel, app_ver_name
from
(select
  uid, commit_time, city, release_channel, app_ver_name,
  row_number() over(partition by uid order by commit_time) as rn
from ods_app_log where day="20170102") tmp1
where tmp1.rn=1;
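`row_number() ... where rn=1` keeps, for each uid, only its earliest record of the day. The same idea as a small Java sketch (hypothetical names; only three columns kept for brevity): for each uid, keep the row with the smallest commit_time. The sketch compares commit_time numerically. Hive's `order by commit_time` on a string column compares lexicographically, which gives the same order only while every value has the same number of digits (true of 13-digit millisecond timestamps such as 1473399829041); `cast(commit_time as bigint)` would make the numeric ordering explicit.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class FirstPerUser {
    // Hypothetical stand-in for one row of ods_app_log (only a few columns kept)
    static final class Row {
        final String uid;
        final String commitTime; // epoch milliseconds as a string, as in the raw log
        final String city;

        Row(String uid, String commitTime, String city) {
            this.uid = uid;
            this.commitTime = commitTime;
            this.city = city;
        }
    }

    // Keeps the earliest row per uid: the effect of
    // row_number() over(partition by uid order by commit_time) ... where rn=1
    static List<Row> firstPerUser(List<Row> rows) {
        Map<String, Row> first = new LinkedHashMap<>();
        for (Row r : rows) {
            // On a uid collision keep whichever row has the smaller numeric commit_time
            first.merge(r.uid, r, (kept, incoming) ->
                Long.parseLong(incoming.commitTime) < Long.parseLong(kept.commitTime)
                    ? incoming : kept);
        }
        return new ArrayList<>(first.values());
    }

    public static void main(String[] args) {
        List<Row> rows = List.of(
            new Row("u1", "1473399829041", "Jiangmen"),
            new Row("u1", "1473399800000", "Guangzhou"),
            new Row("u2", "1473399900000", "Jiangmen"));
        for (Row r : firstPerUser(rows)) {
            // prints "u1 1473399800000 Guangzhou" then "u2 1473399900000 Jiangmen"
            System.out.println(r.uid + " " + r.commitTime + " " + r.city);
        }
    }
}
```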

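Statistics by dimension (0 = all values of that dimension, 1 = broken down by it; each row corresponds to one flag partition, e.g. 0 0 1 is flag="001"):

| Time | City | Channel | Version | Active users |
|---|---|---|---|---|
| that day | 0 | 0 | 0 | |
| that day | 0 | 0 | 1 | |
| that day | 0 | 1 | 0 | |
| that day | 0 | 1 | 1 | |
| that day | 1 | 0 | 0 | |
| that day | 1 | 0 | 1 | |
| that day | 1 | 1 | 0 | |
| that day | 1 | 1 | 1 | |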

-- Create the dimension statistics table
create table dim_user_active(
  city string,
  release_channel string,
  app_ver_name string,
  active_user_cnt int
) partitioned by (day string, flag string);

-- Multi-insert: read the source table once, compute several aggregates
from etl_user_active_day
insert into table dim_user_active partition(day="20170101",flag="000")
select "all","all","all",count(1) where day="20170101"
insert into table dim_user_active partition(day="20170101",flag="001")
select "all","all",app_ver_name,count(1) where day="20170101"
group by app_ver_name
insert into table dim_user_active partition(day="20170101",flag="010")
select "all",release_channel,"all",count(1) where day="20170101"
group by release_channel
insert into table dim_user_active partition(day="20170101",flag="011")
select "all",release_channel,app_ver_name,count(1) where day="20170101"
group by release_channel,app_ver_name
insert into table dim_user_active partition(day="20170101",flag="100")
select city,"all","all",count(1) where day="20170101"
group by city
insert into table dim_user_active partition(day="20170101",flag="101")
select city,"all",app_ver_name,count(1) where day="20170101"
group by city,app_ver_name
insert into table dim_user_active partition(day="20170101",flag="110")
select city,release_channel,"all",count(1) where day="20170101"
group by city,release_channel
insert into table dim_user_active partition(day="20170101",flag="111")
select city,release_channel,app_ver_name,count(1) where day="20170101"
group by city,release_channel,app_ver_name;
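The multi-insert scans etl_user_active_day once and feeds every row to all eight aggregations. A Java sketch of the same single-pass idea (illustrative names; flag bits in the order city, channel, version, as in the partitions above): each row adds one to the key of every flag, with unselected dimensions replaced by "all".

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class MultiAggregate {
    // Hypothetical stand-in for one row of etl_user_active_day
    static final class User {
        final String city;
        final String channel;
        final String version;

        User(String city, String channel, String version) {
            this.city = city;
            this.channel = channel;
            this.version = version;
        }
    }

    // Single pass: each row adds 1 to the key of every flag 000..111.
    // Key format: "<flag>,<city>,<channel>,<version>", with "all" for unselected dimensions.
    static Map<String, Integer> countAll(List<User> users) {
        Map<String, Integer> counts = new TreeMap<>();
        for (User u : users) {
            String[] dims = {u.city, u.channel, u.version};
            for (int mask = 0; mask < 8; mask++) {
                StringBuilder flag = new StringBuilder();
                StringBuilder key = new StringBuilder();
                for (int i = 0; i < 3; i++) {
                    // city is the most significant bit, version the least
                    boolean specific = ((mask >> (2 - i)) & 1) == 1;
                    flag.append(specific ? '1' : '0');
                    key.append(',').append(specific ? dims[i] : "all");
                }
                counts.merge(flag.toString() + key, 1, Integer::sum);
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        List<User> users = List.of(
            new User("cityA", "appstore", "1.7"),
            new User("cityA", "gp", "1.7"),
            new User("cityB", "gp", "1.8"));
        countAll(users).forEach((k, v) -> System.out.println(k + " -> " + v));
    }
}
```

The trade-off is the same as in Hive: one scan of the input instead of eight, at the cost of holding all eight sets of counters at once.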

3. Data Processing (Daily New Users)

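Approach:

1. Take the day's active users from etl_user_active_day.

2. Compare them with the historical user table; active users absent from the history are the day's new users.

3. Append the new users to the historical user table.

4. Compute per-dimension statistics for the day's new users.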
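Steps 2 and 3 amount to a set difference followed by a set union. A minimal in-memory Java sketch (the class `NewUserSketch` is hypothetical; a `Set` stands in for etl_history_user), where the check against the history set plays the role of `left join ... where b.uid is null`:

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

class NewUserSketch {
    // In-memory stand-in for etl_history_user: every uid already counted as new
    private final Set<String> history = new LinkedHashSet<>();

    // Processes one day's active uids (the output of step 1) and returns that day's new users
    List<String> processDay(List<String> activeUids) {
        // Step 2: active minus history, i.e. left join ... where b.uid is null
        Set<String> newUsers = new LinkedHashSet<>();
        for (String uid : activeUids) {
            if (!history.contains(uid)) {
                newUsers.add(uid);
            }
        }
        // Step 3: append the new users to the history
        history.addAll(newUsers);
        return new ArrayList<>(newUsers);
    }

    public static void main(String[] args) {
        NewUserSketch s = new NewUserSketch();
        System.out.println(s.processDay(List.of("u1", "u2"))); // [u1, u2]
        System.out.println(s.processDay(List.of("u2", "u3"))); // [u3]
    }
}
```

Because each day's result depends on the history left by the previous day, the days have to be processed in date order, which is why the 20170101 statements run before the 20170102 ones.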

-- Historical user table
create table etl_history_user(uid string);

-- Daily new user table
create table etl_user_new_day(
  uid string,
  commit_time string,
  city string,
  release_channel string,
  app_ver_name string
) partitioned by (day string);

-- Dimension aggregate table for new users
create table dim_user_new(
  city string,
  release_channel string,
  app_ver_name string,
  new_user_cnt int
) partitioned by (day string, flag string);

-- Populate etl_user_new_day
-- New users for 20170101: active that day and absent from the history table
insert into table etl_user_new_day partition(day="20170101")
select
  a.uid, a.commit_time, a.city, a.release_channel, a.app_ver_name
from etl_user_active_day a
left join etl_history_user b
  on a.uid = b.uid
where a.day="20170101" and b.uid is null;

-- Add the new users to the history table
insert into table etl_history_user
select uid from etl_user_new_day where day="20170101";

-- New users for 20170102

insert into table etl_user_new_day partition(day="20170102")
select
  a.uid, a.commit_time, a.city, a.release_channel, a.app_ver_name
from etl_user_active_day a
left join etl_history_user b
  on a.uid = b.uid
where a.day="20170102" and b.uid is null;

-- Add the new users to the history table
insert into table etl_history_user
select uid from etl_user_new_day where day="20170102";

-- Statistics by dimension

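Dimension combinations (0 = all values of that dimension, 1 = broken down by it):

| Time | City | Channel | Version | New users |
|---|---|---|---|---|
| that day | 0 | 0 | 0 | |
| that day | 0 | 0 | 1 | |
| that day | 0 | 1 | 0 | |
| that day | 0 | 1 | 1 | |
| that day | 1 | 0 | 0 | |
| that day | 1 | 0 | 1 | |
| that day | 1 | 1 | 0 | |
| that day | 1 | 1 | 1 | |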

from etl_user_new_day
insert into table dim_user_new partition(day="20170101",flag="000")
select "all","all","all",count(1) where day="20170101"
insert into table dim_user_new partition(day="20170101",flag="001")
select "all","all",app_ver_name,count(1) where day="20170101"
group by app_ver_name
insert into table dim_user_new partition(day="20170101",flag="010")
select "all",release_channel,"all",count(1) where day="20170101"
group by release_channel
insert into table dim_user_new partition(day="20170101",flag="011")
select "all",release_channel,app_ver_name,count(1) where day="20170101"
group by release_channel,app_ver_name
insert into table dim_user_new partition(day="20170101",flag="100")
select city,"all","all",count(1) where day="20170101"
group by city
insert into table dim_user_new partition(day="20170101",flag="101")
select city,"all",app_ver_name,count(1) where day="20170101"
group by city,app_ver_name
insert into table dim_user_new partition(day="20170101",flag="110")
select city,release_channel,"all",count(1) where day="20170101"
group by city,release_channel
insert into table dim_user_new partition(day="20170101",flag="111")
select city,release_channel,app_ver_name,count(1) where day="20170101"
group by city,release_channel,app_ver_name;

Resources link: https://pan.baidu.com/s/1m8rYOPsE0RZK2CSbv27iYw Password: 0ijt