TensorFlow 2.0 Basic Operations (5)

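4 Custom networks

keras.Sequential (container): model.trainable_variables returns all of the weight parameters, and calling the model automatically invokes model.call()

keras.layers.Layer: the base class to inherit from when defining a custom layer

keras.Model: the base class for a custom network. To inherit from it: 1) implement the initialization of the parameters, 2)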
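
A minimal sketch of the three pieces listed above, assuming the truncated second requirement is the forward pass in `call()`. The `Scale` layer and the `TinyModel` network are hypothetical names made up for illustration; they are not part of the original post.

```python
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers


class Scale(layers.Layer):
    """Hypothetical custom layer: multiplies each feature by a learned factor."""

    def __init__(self, dim):
        super().__init__()
        # Register a trainable weight so Keras can track it.
        self.factor = self.add_weight(name="factor", shape=[dim], initializer="ones")

    def call(self, inputs):
        return inputs * self.factor


class TinyModel(keras.Model):
    """Hypothetical custom network built from the layer above plus a Dense layer."""

    def __init__(self):
        super().__init__()
        self.scale = Scale(4)
        self.fc = layers.Dense(2)

    def call(self, inputs, training=None):
        return self.fc(self.scale(inputs))


model = TinyModel()
y = model(tf.ones([3, 4]))  # calling the model dispatches to call()
print(y.shape)
# One factor vector plus the Dense kernel and bias.
print(len(model.trainable_variables))
```

Calling `model(...)` rather than `model.call(...)` lets Keras create the `Dense` weights on first use, which is why `trainable_variables` is only complete after the first call.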

5 Saving and loading models

1) Saving only the trained weights

import tensorflow as tf
from tensorflow.keras import datasets, layers, optimizers, Sequential, metrics


def preprocess(x, y):
    """
    x is a single image, not a batch
    """
    x = tf.cast(x, dtype=tf.float32) / 255.
    x = tf.reshape(x, [28*28])
    y = tf.cast(y, dtype=tf.int32)
    y = tf.one_hot(y, depth=10)
    return x, y


batchsz = 128
(x, y), (x_val, y_val) = datasets.mnist.load_data()
print('datasets:', x.shape, y.shape, x.min(), x.max())

db = tf.data.Dataset.from_tensor_slices((x, y))
db = db.map(preprocess).shuffle(60000).batch(batchsz)
ds_val = tf.data.Dataset.from_tensor_slices((x_val, y_val))
ds_val = ds_val.map(preprocess).batch(batchsz)

sample = next(iter(db))
print(sample[0].shape, sample[1].shape)

network = Sequential([layers.Dense(256, activation='relu'),
                      layers.Dense(128, activation='relu'),
                      layers.Dense(64, activation='relu'),
                      layers.Dense(32, activation='relu'),
                      layers.Dense(10)])
network.build(input_shape=(None, 28*28))
network.summary()

network.compile(optimizer=optimizers.Adam(learning_rate=0.01),
                loss=tf.losses.CategoricalCrossentropy(from_logits=True),
                metrics=['accuracy'])

# Train the model
network.fit(db, epochs=3, validation_data=ds_val, validation_freq=2)

# Evaluate the model
network.evaluate(ds_val)

# Save the weights
network.save_weights('weights.ckpt')
print('saved weights.')

# Delete the network
del network

# Rebuild exactly the same network
network = Sequential([layers.Dense(256, activation='relu'),
                      layers.Dense(128, activation='relu'),
                      layers.Dense(64, activation='relu'),
                      layers.Dense(32, activation='relu'),
                      layers.Dense(10)])

# Configure the optimizer, loss and metrics
network.compile(optimizer=optimizers.Adam(learning_rate=0.01),
                loss=tf.losses.CategoricalCrossentropy(from_logits=True),
                metrics=['accuracy'])

# Load the saved weights
network.load_weights('weights.ckpt')
print('loaded weights!')

# Evaluate the model
network.evaluate(ds_val)

# Note: the result after reloading may not be exactly identical, because the final
# result depends on more than the saved weights; other factors also play a role.
# For an exact match, use the other way of saving.

datasets: (60000, 28, 28) (60000,) 0 255
(128, 784) (128, 10)
Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
dense (Dense)                multiple                  200960
_________________________________________________________________
dense_1 (Dense)              multiple                  32896
_________________________________________________________________
dense_2 (Dense)              multiple                  8256
_________________________________________________________________
dense_3 (Dense)              multiple                  2080
_________________________________________________________________
dense_4 (Dense)              multiple                  330
=================================================================
Total params: 244,522
Trainable params: 244,522
Non-trainable params: 0
_________________________________________________________________
Epoch 1/3
469/469 [==============================] - 5s 12ms/step - loss: 0.2728 - accuracy: 0.8466
Epoch 2/3
469/469 [==============================] - 5s 11ms/step - loss: 0.1394 - accuracy: 0.9589 - val_loss: 0.2163 - val_accuracy: 0.9512
Epoch 3/3
469/469 [==============================] - 4s 10ms/step - loss: 0.1178 - accuracy: 0.9649
79/79 [==============================] - 0s 5ms/step - loss: 0.1110 - accuracy: 0.9674
saved weights.
loaded weights!
79/79 [==============================] - 1s 10ms/step - loss: 0.1110 - accuracy: 0.9634
[0.1110091062153607, 0.9674]
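
The `Param #` column in the summary above can be checked by hand: a fully connected layer with a bias has `in_dim * out_dim + out_dim` parameters. A short framework-free sketch of that arithmetic follows; `dense_params` is a helper name made up for illustration.

```python
def dense_params(in_dim, out_dim, use_bias=True):
    """Number of trainable parameters in one fully connected layer."""
    return in_dim * out_dim + (out_dim if use_bias else 0)


# Layer widths of the MNIST network: 784 inputs, then 256-128-64-32-10.
widths = [28 * 28, 256, 128, 64, 32, 10]
per_layer = [dense_params(i, o) for i, o in zip(widths[:-1], widths[1:])]
total = sum(per_layer)

print(per_layer)  # [200960, 32896, 8256, 2080, 330]
print(total)      # 244522
```

These are exactly the values that `save_weights` has to store, which is why a weights-only checkpoint stays small.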

2) Saving the whole network

import tensorflow as tf
from tensorflow.keras import datasets, layers, optimizers, Sequential, metrics


def preprocess(x, y):
    """
    x is a single image, not a batch
    """
    x = tf.cast(x, dtype=tf.float32) / 255.
    x = tf.reshape(x, [28*28])
    y = tf.cast(y, dtype=tf.int32)
    y = tf.one_hot(y, depth=10)
    return x, y


batchsz = 128
(x, y), (x_val, y_val) = datasets.mnist.load_data()
print('datasets:', x.shape, y.shape, x.min(), x.max())

db = tf.data.Dataset.from_tensor_slices((x, y))
db = db.map(preprocess).shuffle(60000).batch(batchsz)
ds_val = tf.data.Dataset.from_tensor_slices((x_val, y_val))
ds_val = ds_val.map(preprocess).batch(batchsz)

sample = next(iter(db))
print(sample[0].shape, sample[1].shape)

network = Sequential([layers.Dense(256, activation='relu'),
                      layers.Dense(128, activation='relu'),
                      layers.Dense(64, activation='relu'),
                      layers.Dense(32, activation='relu'),
                      layers.Dense(10)])
network.build(input_shape=(None, 28*28))
network.summary()

network.compile(optimizer=optimizers.Adam(learning_rate=0.01),
                loss=tf.losses.CategoricalCrossentropy(from_logits=True),
                metrics=['accuracy'])

network.fit(db, epochs=3, validation_data=ds_val, validation_freq=2)

# After training, run an evaluation
network.evaluate(ds_val)

# Next, save the whole model
network.save('model.h5')
print('saved total model.')
del network

# No Sequential() call is needed here: the model is restored directly from the file
network = tf.keras.models.load_model('model.h5', compile=False)
print('loaded model from file.')

network.compile(optimizer=optimizers.Adam(learning_rate=0.01),
                loss=tf.losses.CategoricalCrossentropy(from_logits=True),
                metrics=['accuracy'])

x_val = tf.cast(x_val, dtype=tf.float32) / 255.
x_val = tf.reshape(x_val, [-1, 28*28])
y_val = tf.cast(y_val, dtype=tf.int32)
y_val = tf.one_hot(y_val, depth=10)
ds_val = tf.data.Dataset.from_tensor_slices((x_val, y_val)).batch(128)

network.evaluate(ds_val)  # finally, evaluate once more

datasets: (60000, 28, 28) (60000,) 0 255
(128, 784) (128, 10)
Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
dense (Dense)                multiple                  200960
_________________________________________________________________
dense_1 (Dense)              multiple                  32896
_________________________________________________________________
dense_2 (Dense)              multiple                  8256
_________________________________________________________________
dense_3 (Dense)              multiple                  2080
_________________________________________________________________
dense_4 (Dense)              multiple                  330
=================================================================
Total params: 244,522
Trainable params: 244,522
Non-trainable params: 0
_________________________________________________________________
Epoch 1/3
469/469 [==============================] - 5s 12ms/step - loss: 0.2801 - accuracy: 0.8401
Epoch 2/3
469/469 [==============================] - 5s 11ms/step - loss: 0.1357 - accuracy: 0.9582 - val_loss: 0.1496 - val_accuracy: 0.9562
Epoch 3/3
469/469 [==============================] - 4s 10ms/step - loss: 0.1084 - accuracy: 0.9689
79/79 [==============================] - 0s 6ms/step - loss: 0.1324 - accuracy: 0.9636
saved total model.
loaded model from file.
79/79 [==============================] - 0s 6ms/step - loss: 0.1324 - accuracy: 0.9584
[0.13236246153615014, 0.9636]

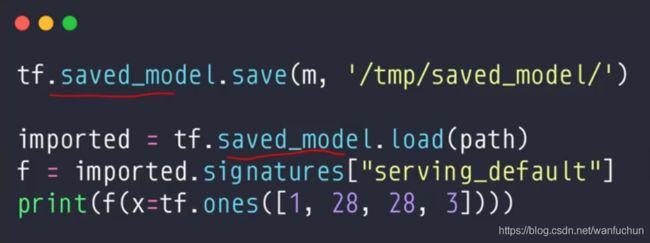
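
The counters `469/469` and `79/79` in the logs above are the number of batches per pass over the data, and validation metrics show up only in epoch 2 because of `validation_freq=2`. Here is a small framework-free sketch of that arithmetic. The helper names are made up for illustration, and the validation rule is an assumption that mirrors the observed log rather than a quote of the Keras implementation.

```python
import math


def steps_per_epoch(num_samples, batch_size):
    """Batches needed to cover the dataset once; the last batch may be smaller."""
    return math.ceil(num_samples / batch_size)


def validation_epochs(epochs, validation_freq):
    """1-based epochs after which validation runs (assumed: every validation_freq epochs)."""
    return [e for e in range(1, epochs + 1) if e % validation_freq == 0]


train_steps = steps_per_epoch(60000, 128)  # MNIST training set
val_steps = steps_per_epoch(10000, 128)    # MNIST test set
val_at = validation_epochs(3, 2)

print(train_steps, val_steps, val_at)      # 469 79 [2]
```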

3) A third way of saving is mainly used for deploying models in production; it is more general and can be consumed from other languages.
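
The post does not name this third format. Assuming it refers to TensorFlow's SavedModel format, a minimal round trip could look like the sketch below. The `Linear` module is a hypothetical stand-in for a trained network, and the export goes to a temporary directory so nothing in the working directory is touched.

```python
import tempfile

import tensorflow as tf


class Linear(tf.Module):
    """Hypothetical stand-in for a trained network: a single weight matrix."""

    def __init__(self):
        super().__init__()
        self.w = tf.Variable(tf.ones([4, 2]), name="w")

    # A fixed input signature lets the exported graph be called without the Python class.
    @tf.function(input_signature=[tf.TensorSpec([None, 4], tf.float32)])
    def __call__(self, x):
        return x @ self.w


export_dir = tempfile.mkdtemp()
tf.saved_model.save(Linear(), export_dir)

# The restored object carries both the weights and the computation graph.
restored = tf.saved_model.load(export_dir)
out = restored(tf.ones([1, 4]))
print(out.numpy())
```

Because the graph is stored next to the weights, the loading side does not need the original Python class definition, which is what makes this kind of export usable outside Python.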

6 Keras in practice

CIFAR10 custom network in practice, part 1

Image size: 32*32

This requires a custom layer: MyDense

import os

# Set before importing TensorFlow so that it takes effect
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

import tensorflow as tf
from tensorflow import keras
# datasets: dataset management; optimizers: optimizers; Sequential: container; metrics: evaluation metrics
from tensorflow.keras import datasets, layers, optimizers, Sequential, metrics


def preprocess(x, y):
    # [0, 255] => [-1, 1]
    x = 2 * tf.cast(x, dtype=tf.float32) / 255. - 1.
    y = tf.cast(y, dtype=tf.int32)
    return x, y


batchsz = 128
# [50k, 32, 32, 3], [10k, 1]
(x, y), (x_val, y_val) = datasets.cifar10.load_data()
y = tf.squeeze(y)  # drop the size-1 dimension of the label tensor
y_val = tf.squeeze(y_val)
y = tf.one_hot(y, depth=10)  # [50k, 10]
y_val = tf.one_hot(y_val, depth=10)  # [10k, 10]
print('datasets:', x.shape, y.shape, x_val.shape, y_val.shape, x.min(), x.max())

train_db = tf.data.Dataset.from_tensor_slices((x, y))
train_db = train_db.map(preprocess).shuffle(10000).batch(batchsz)
test_db = tf.data.Dataset.from_tensor_slices((x_val, y_val))
test_db = test_db.map(preprocess).batch(batchsz)

# Draw one sample batch and check its shape
sample = next(iter(train_db))
print('batch:', sample[0].shape, sample[1].shape)


# Next, build the network.
# (1) Implement a custom layer
class MyDense(layers.Layer):
    # A custom layer is a new class that inherits from the base class layers.Layer.
    # This one replaces the standard layers.Dense() and defines two methods.

    # 1) the initialization method
    def __init__(self, inp_dim, outp_dim):  # input and output dimensions
        super(MyDense, self).__init__()
        # create the kernel (weight matrix) with shape [inp_dim, outp_dim]
        self.kernel = self.add_weight(name='w', shape=[inp_dim, outp_dim])
        # self.bias = self.add_weight(name='b', shape=[outp_dim])

    # 2) the forward logic; Keras invokes call() when the layer is called
    def call(self, inputs, training=None):
        x = inputs @ self.kernel  # no bias is added here
        return x


# (2) Implement a custom network
class MyNetwork(keras.Model):
    # Likewise, it needs to implement two methods

    # 1) the initialization method
    def __init__(self):
        super(MyNetwork, self).__init__()
        # create 5 layers
        self.fc1 = MyDense(32*32*3, 256)
        self.fc2 = MyDense(256, 128)
        self.fc3 = MyDense(128, 64)
        self.fc4 = MyDense(64, 32)
        self.fc5 = MyDense(32, 10)

    # 2) the forward pass
    def call(self, inputs, training=None):
        '''
        :param inputs: [b, 32, 32, 3]
        :param training:
        :return:
        '''
        x = tf.reshape(inputs, [-1, 32*32*3])
        # [b, 32*32*3] => [b, 256]
        x = self.fc1(x)
        x = tf.nn.relu(x)
        # [b, 256] => [b, 128]
        x = self.fc2(x)
        x = tf.nn.relu(x)
        # [b, 128] => [b, 64]
        x = self.fc3(x)
        x = tf.nn.relu(x)
        # [b, 64] => [b, 32]
        x = self.fc4(x)
        x = tf.nn.relu(x)
        # [b, 32] => [b, 10]
        x = self.fc5(x)
        return x


# Now wire the network and the loss together.
network = MyNetwork()
network.compile(optimizer=optimizers.Adam(learning_rate=1e-3),
                # from_logits=True is generally used for numerical stability
                loss=tf.losses.CategoricalCrossentropy(from_logits=True),
                metrics=['accuracy'])
network.fit(train_db, epochs=15, validation_data=test_db, validation_freq=1)  # validate after every epoch

# We usually save only the weights rather than the whole network, because that is more lightweight
network.evaluate(test_db)  # first evaluate the model
# The suffix can be chosen freely here: the checkpoint format is defined by TensorFlow itself
# (apart from suffixes such as .h5, which select the HDF5 format)
network.save_weights('ckpt/weights.ckpt')
del network  # delete the network
print('saved to ckpt/weights.ckpt')

# The weights are now saved. Because only the weights were stored,
# the network itself has to be created again before they can be restored:
# 1) recreate the network
network = MyNetwork()
network.compile(optimizer=optimizers.Adam(learning_rate=1e-3),
                loss=tf.losses.CategoricalCrossentropy(from_logits=True),
                metrics=['accuracy'])
# 2) restore the weights
network.load_weights('ckpt/weights.ckpt')
print('loaded weights from file.')
network.evaluate(test_db)  # evaluate again and compare with the result before saving

# On CIFAR10 it is hard to reach 70% accuracy without a convolutional network or a deep ResNet.
# Because only the weights were saved, the final result is not exactly identical
# (the random seed, for example, is not saved), but the difference is small.
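
The remark about `from_logits=True` and numerical stability can be illustrated without any framework. The sketch below compares a cross-entropy computed directly from logits (using the log-sum-exp trick) with the naive route of applying softmax first. It is a hand-written illustration of the idea under those assumptions, not TensorFlow's actual implementation, and the function names are made up.

```python
import math


def cross_entropy_from_logits(logits, label_index):
    """Softmax cross-entropy computed stably with the log-sum-exp trick."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label_index]


def cross_entropy_from_probs(logits, label_index):
    """Naive version: softmax first, then log; exp() overflows for large logits."""
    exps = [math.exp(z) for z in logits]
    total = sum(exps)
    return -math.log(exps[label_index] / total)


small = [2.0, 1.0, 0.1]
stable_small = cross_entropy_from_logits(small, 0)
naive_small = cross_entropy_from_probs(small, 0)
print(stable_small, naive_small)  # the two agree for moderate logits

large = [1000.0, 0.0, -1000.0]
stable_large = cross_entropy_from_logits(large, 0)
print(stable_large)  # 0.0
try:
    cross_entropy_from_probs(large, 0)
    naive_failed = False
except OverflowError:
    naive_failed = True
print(naive_failed)  # True
```

For moderate logits both routes give the same loss; only the logits-based version survives extreme values, which is the practical reason for leaving the softmax out of the last layer.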

'''

# I ran this in PyCharm and copied the output here; it also runs in a notebook

F:\Anaconda3\envs\gpu\python.exe H:/lesson13/keras_train.py

datasets: (50000, 32, 32, 3) (50000, 10) (10000, 32, 32, 3) (10000, 10) 0 255

batch: (128, 32, 32, 3) (128, 10)

Epoch 1/15

391/391 [==============================] - 6s 15ms/step - loss: 1.7180 - accuracy: 0.3428 - val_loss: 1.5808 - val_accuracy: 0.4397

Epoch 2/15

...

391/391 [==============================] - 5s 13ms/step - loss: 0.7347 - accuracy: 0.7312 - val_loss: 2.0116 - val_accuracy: 0.4835

79/79 [==============================] - 1s 7ms/step - loss: 2.0116 - accuracy: 0.4835

saved to ckpt/weights.ckpt

loaded weights from file.

79/79 [==============================] - 1s 11ms/step - loss: 2.0116 - accuracy: 0.4787

Process finished with exit code 0

'''

```

These are the files saved in the directory:

```python

```