NLP基础入门之新闻文本基于深度学习的文本分类Test5

NLP基础入门之新闻文本基于深度学习的文本分类Test5

Word2Vector介绍

相较于传统NLP的高维、稀疏的表示法(One-hot Representation),Word2Vec训练出的词向量是低维、稠密的。Word2Vec利用了词的上下文信息,语义信息更加丰富,目前常见的应用有:

使用训练出的词向量作为输入特征,提升现有系统,如应用在情感分析、词性标注、语言翻译等神经网络中的输入层。

直接从语言学的角度对词向量进行应用,如使用向量的距离表示词语相似度、query相关性等。

在NLP中,最细粒度的对象是词语。如果我们要进行词性标注,用一般的思路,我们可以有一系列的样本数据(x,y)。其中x表示词语,y表示词性。而我们要做的,就是找到一个x -> y的映射关系,传统的方法包括Bayes,SVM等算法。但是我们的数学模型,一般都是数值型的输入。但是NLP中的词语,是人类的抽象总结,是符号形式的(比如中文、英文、拉丁文等等),所以需要把他们转换成数值形式,或者说——嵌入到一个数学空间里,这种嵌入方式,就叫词嵌入(word embedding),而 Word2vec,就是词嵌入( word embedding) 的一种。

在 NLP 中,把 x 看做一个句子里的一个词语,y 是这个词语的上下文词语,那么这里的 f,便是 NLP 中经常出现的『语言模型』(language model),这个模型的目的,就是判断 (x,y) 这个样本,是否符合自然语言的法则,更通俗点说就是:词语x和词语y放在一起,是不是人话。

Word2vec 正是来源于这个思想,但它的最终目的,不是要把 f 训练得多么完美,而是只关心模型训练完后的副产物——模型参数(这里特指神经网络的权重),并将这些参数,作为输入 x 的某种向量化的表示,这个向量便叫做——词向量。

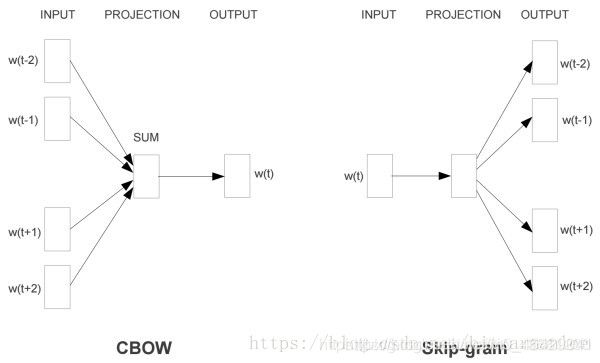

word2vec里面有两个重要的模型-CBOW模型(Continuous Bag-of-Words Model)与Skip-gram模型。

由名字与图都很容易看出来,CBOW就是根据某个词前面的C个词或者前后C个连续的词,来计算某个词出现的概率。Skip-Gram Model相反,是根据某个词,然后分别计算它前后出现某几个词的各个概率。

使用Gensim中的Word2Vector进行训练

from gensim.models import Word2Vec

model = Word2Vec(data['text'],size=200,window=3,min_count=3)

参数介绍:

1、size: 训练时词向量维度,默认值是100。这个维度的取值一般与我们的语料的大小相关,如果是不大的语料,比如小于100M的文本语料,则使用默认值一般就可以了。如果是超大的语料,建议增大维度。

2、window:即词向量上下文最大距离,这个参数在我们的算法原理篇中标记为c,window越大,则和某一词较远的词也会产生上下文关系。默认值为5。在实际使用中,可以根据实际的需求来动态调整这个window的大小。如果是小语料则这个值可以设的更小。对于一般的语料这个值推荐在[5,10]之间。

3、min_count:需要计算词向量的最小词频。这个值可以去掉一些很生僻的低频词,默认是5。如果是小语料,可以调低这个值。

4、workers:完成训练过程的线程数,默认为1即不使用多线程。

更多参数请查阅官方文档

TextCNN模型搭建

# build module

import torch.nn as nn

import torch.nn.functional as F

class Attention(nn.Module):

def __init__(self, hidden_size):

super(Attention, self).__init__()

self.weight = nn.Parameter(torch.Tensor(hidden_size, hidden_size))

self.weight.data.normal_(mean=0.0, std=0.05)

self.bias = nn.Parameter(torch.Tensor(hidden_size))

b = np.zeros(hidden_size, dtype=np.float32)

self.bias.data.copy_(torch.from_numpy(b))

self.query = nn.Parameter(torch.Tensor(hidden_size))

self.query.data.normal_(mean=0.0, std=0.05)

def forward(self, batch_hidden, batch_masks):

# batch_hidden: b x len x hidden_size (2 * hidden_size of lstm)

# batch_masks: b x len

# linear

key = torch.matmul(batch_hidden, self.weight) + self.bias # b x len x hidden

# compute attention

outputs = torch.matmul(key, self.query) # b x len

masked_outputs = outputs.masked_fill((1 - batch_masks).bool(), float(-1e32))

attn_scores = F.softmax(masked_outputs, dim=1) # b x len

# 对于全零向量,-1e32的结果为 1/len, -inf为nan, 额外补0

masked_attn_scores = attn_scores.masked_fill((1 - batch_masks).bool(), 0.0)

# sum weighted sources

batch_outputs = torch.bmm(masked_attn_scores.unsqueeze(1), key).squeeze(1) # b x hidden

return batch_outputs, attn_scores

# build word encoder

word2vec_path = '../emb/word2vec.txt'

dropout = 0.15

class WordCNNEncoder(nn.Module):

def __init__(self, vocab):

super(WordCNNEncoder, self).__init__()

self.dropout = nn.Dropout(dropout)

self.word_dims = 100

self.word_embed = nn.Embedding(vocab.word_size, self.word_dims, padding_idx=0)

extword_embed = vocab.load_pretrained_embs(word2vec_path)

extword_size, word_dims = extword_embed.shape

logging.info("Load extword embed: words %d, dims %d." % (extword_size, word_dims))

self.extword_embed = nn.Embedding(extword_size, word_dims, padding_idx=0)

self.extword_embed.weight.data.copy_(torch.from_numpy(extword_embed))

self.extword_embed.weight.requires_grad = False

input_size = self.word_dims

self.filter_sizes = [2, 3, 4] # n-gram window

self.out_channel = 100

self.convs = nn.ModuleList([nn.Conv2d(1, self.out_channel, (filter_size, input_size), bias=True)

for filter_size in self.filter_sizes])

def forward(self, word_ids, extword_ids):

# word_ids: sen_num x sent_len

# extword_ids: sen_num x sent_len

# batch_masks: sen_num x sent_len

sen_num, sent_len = word_ids.shape

word_embed = self.word_embed(word_ids) # sen_num x sent_len x 100

extword_embed = self.extword_embed(extword_ids)

batch_embed = word_embed + extword_embed

if self.training:

batch_embed = self.dropout(batch_embed)

batch_embed.unsqueeze_(1) # sen_num x 1 x sent_len x 100

pooled_outputs = []

for i in range(len(self.filter_sizes)):

filter_height = sent_len - self.filter_sizes[i] + 1

conv = self.convs[i](batch_embed)

hidden = F.relu(conv) # sen_num x out_channel x filter_height x 1

mp = nn.MaxPool2d((filter_height, 1)) # (filter_height, filter_width)

pooled = mp(hidden).reshape(sen_num,

self.out_channel) # sen_num x out_channel x 1 x 1 -> sen_num x out_channel

pooled_outputs.append(pooled)

reps = torch.cat(pooled_outputs, dim=1) # sen_num x total_out_channel

if self.training:

reps = self.dropout(reps)

return reps

# build sent encoder

sent_hidden_size = 256

sent_num_layers = 2

class SentEncoder(nn.Module):

def __init__(self, sent_rep_size):

super(SentEncoder, self).__init__()

self.dropout = nn.Dropout(dropout)

self.sent_lstm = nn.LSTM(

input_size=sent_rep_size,

hidden_size=sent_hidden_size,

num_layers=sent_num_layers,

batch_first=True,

bidirectional=True

)

def forward(self, sent_reps, sent_masks):

# sent_reps: b x doc_len x sent_rep_size

# sent_masks: b x doc_len

sent_hiddens, _ = self.sent_lstm(sent_reps) # b x doc_len x hidden*2

sent_hiddens = sent_hiddens * sent_masks.unsqueeze(2)

if self.training:

sent_hiddens = self.dropout(sent_hiddens)

return sent_hiddens

# build model

class Model(nn.Module):

def __init__(self, vocab):

super(Model, self).__init__()

self.sent_rep_size = 300

self.doc_rep_size = sent_hidden_size * 2

self.all_parameters = {}

parameters = []

self.word_encoder = WordCNNEncoder(vocab)

parameters.extend(list(filter(lambda p: p.requires_grad, self.word_encoder.parameters())))

self.sent_encoder = SentEncoder(self.sent_rep_size)

self.sent_attention = Attention(self.doc_rep_size)

parameters.extend(list(filter(lambda p: p.requires_grad, self.sent_encoder.parameters())))

parameters.extend(list(filter(lambda p: p.requires_grad, self.sent_attention.parameters())))

self.out = nn.Linear(self.doc_rep_size, vocab.label_size, bias=True)

parameters.extend(list(filter(lambda p: p.requires_grad, self.out.parameters())))

if use_cuda:

self.to(device)

if len(parameters) > 0:

self.all_parameters["basic_parameters"] = parameters

logging.info('Build model with cnn word encoder, lstm sent encoder.')

para_num = sum([np.prod(list(p.size())) for p in self.parameters()])

logging.info('Model param num: %.2f M.' % (para_num / 1e6))

def forward(self, batch_inputs):

# batch_inputs(batch_inputs1, batch_inputs2): b x doc_len x sent_len

# batch_masks : b x doc_len x sent_len

batch_inputs1, batch_inputs2, batch_masks = batch_inputs

batch_size, max_doc_len, max_sent_len = batch_inputs1.shape[0], batch_inputs1.shape[1], batch_inputs1.shape[2]

batch_inputs1 = batch_inputs1.view(batch_size * max_doc_len, max_sent_len) # sen_num x sent_len

batch_inputs2 = batch_inputs2.view(batch_size * max_doc_len, max_sent_len) # sen_num x sent_len

batch_masks = batch_masks.view(batch_size * max_doc_len, max_sent_len) # sen_num x sent_len

sent_reps = self.word_encoder(batch_inputs1, batch_inputs2) # sen_num x sent_rep_size

sent_reps = sent_reps.view(batch_size, max_doc_len, self.sent_rep_size) # b x doc_len x sent_rep_size

batch_masks = batch_masks.view(batch_size, max_doc_len, max_sent_len) # b x doc_len x max_sent_len

sent_masks = batch_masks.bool().any(2).float() # b x doc_len

sent_hiddens = self.sent_encoder(sent_reps, sent_masks) # b x doc_len x doc_rep_size

doc_reps, atten_scores = self.sent_attention(sent_hiddens, sent_masks) # b x doc_rep_size

batch_outputs = self.out(doc_reps) # b x num_labels

return batch_outputs

model = Model(vocab)

参考阿里天池文本分类论坛分享代码

TextRNN搭建

# build module

import torch.nn as nn

import torch.nn.functional as F

class Attention(nn.Module):

def __init__(self, hidden_size):

super(Attention, self).__init__()

self.weight = nn.Parameter(torch.Tensor(hidden_size, hidden_size))

self.weight.data.normal_(mean=0.0, std=0.05)

self.bias = nn.Parameter(torch.Tensor(hidden_size))

b = np.zeros(hidden_size, dtype=np.float32)

self.bias.data.copy_(torch.from_numpy(b))

self.query = nn.Parameter(torch.Tensor(hidden_size))

self.query.data.normal_(mean=0.0, std=0.05)

def forward(self, batch_hidden, batch_masks):

# batch_hidden: b x len x hidden_size (2 * hidden_size of lstm)

# batch_masks: b x len

# linear

key = torch.matmul(batch_hidden, self.weight) + self.bias # b x len x hidden

# compute attention

outputs = torch.matmul(key, self.query) # b x len

masked_outputs = outputs.masked_fill((1 - batch_masks).bool(), float(-1e32))

attn_scores = F.softmax(masked_outputs, dim=1) # b x len

# 对于全零向量,-1e32的结果为 1/len, -inf为nan, 额外补0

masked_attn_scores = attn_scores.masked_fill((1 - batch_masks).bool(), 0.0)

# sum weighted sources

batch_outputs = torch.bmm(masked_attn_scores.unsqueeze(1), key).squeeze(1) # b x hidden

return batch_outputs, attn_scores

# build word encoder

word2vec_path = '../emb/word2vec.txt'

dropout = 0.15

word_hidden_size = 128

word_num_layers = 2

class WordLSTMEncoder(nn.Module):

def __init__(self, vocab):

super(WordLSTMEncoder, self).__init__()

self.dropout = nn.Dropout(dropout)

self.word_dims = 100

self.word_embed = nn.Embedding(vocab.word_size, self.word_dims, padding_idx=0)

extword_embed = vocab.load_pretrained_embs(word2vec_path)

extword_size, word_dims = extword_embed.shape

logging.info("Load extword embed: words %d, dims %d." % (extword_size, word_dims))

self.extword_embed = nn.Embedding(extword_size, word_dims, padding_idx=0)

self.extword_embed.weight.data.copy_(torch.from_numpy(extword_embed))

self.extword_embed.weight.requires_grad = False

input_size = self.word_dims

self.word_lstm = nn.LSTM(

input_size=input_size,

hidden_size=word_hidden_size,

num_layers=word_num_layers,

batch_first=True,

bidirectional=True

)

def forward(self, word_ids, extword_ids, batch_masks):

# word_ids: sen_num x sent_len

# extword_ids: sen_num x sent_len

# batch_masks sen_num x sent_len

word_embed = self.word_embed(word_ids) # sen_num x sent_len x 100

extword_embed = self.extword_embed(extword_ids)

batch_embed = word_embed + extword_embed

if self.training:

batch_embed = self.dropout(batch_embed)

hiddens, _ = self.word_lstm(batch_embed) # sen_num x sent_len x hidden*2

hiddens = hiddens * batch_masks.unsqueeze(2)

if self.training:

hiddens = self.dropout(hiddens)

return hiddens

# build sent encoder

sent_hidden_size = 256

sent_num_layers = 2

class SentEncoder(nn.Module):

def __init__(self, sent_rep_size):

super(SentEncoder, self).__init__()

self.dropout = nn.Dropout(dropout)

self.sent_lstm = nn.LSTM(

input_size=sent_rep_size,

hidden_size=sent_hidden_size,

num_layers=sent_num_layers,

batch_first=True,

bidirectional=True

)

def forward(self, sent_reps, sent_masks):

# sent_reps: b x doc_len x sent_rep_size

# sent_masks: b x doc_len

sent_hiddens, _ = self.sent_lstm(sent_reps) # b x doc_len x hidden*2

sent_hiddens = sent_hiddens * sent_masks.unsqueeze(2)

if self.training:

sent_hiddens = self.dropout(sent_hiddens)

return sent_hiddens

# build model

class Model(nn.Module):

def __init__(self, vocab):

super(Model, self).__init__()

self.sent_rep_size = word_hidden_size * 2

self.doc_rep_size = sent_hidden_size * 2

self.all_parameters = {}

parameters = []

self.word_encoder = WordLSTMEncoder(vocab)

self.word_attention = Attention(self.sent_rep_size)

parameters.extend(list(filter(lambda p: p.requires_grad, self.word_encoder.parameters())))

parameters.extend(list(filter(lambda p: p.requires_grad, self.word_attention.parameters())))

self.sent_encoder = SentEncoder(self.sent_rep_size)

self.sent_attention = Attention(self.doc_rep_size)

parameters.extend(list(filter(lambda p: p.requires_grad, self.sent_encoder.parameters())))

parameters.extend(list(filter(lambda p: p.requires_grad, self.sent_attention.parameters())))

self.out = nn.Linear(self.doc_rep_size, vocab.label_size, bias=True)

parameters.extend(list(filter(lambda p: p.requires_grad, self.out.parameters())))

if use_cuda:

self.to(device)

if len(parameters) > 0:

self.all_parameters["basic_parameters"] = parameters

logging.info('Build model with lstm word encoder, lstm sent encoder.')

para_num = sum([np.prod(list(p.size())) for p in self.parameters()])

logging.info('Model param num: %.2f M.' % (para_num / 1e6))

def forward(self, batch_inputs):

# batch_inputs(batch_inputs1, batch_inputs2): b x doc_len x sent_len

# batch_masks : b x doc_len x sent_len

batch_inputs1, batch_inputs2, batch_masks = batch_inputs

batch_size, max_doc_len, max_sent_len = batch_inputs1.shape[0], batch_inputs1.shape[1], batch_inputs1.shape[2]

batch_inputs1 = batch_inputs1.view(batch_size * max_doc_len, max_sent_len) # sen_num x sent_len

batch_inputs2 = batch_inputs2.view(batch_size * max_doc_len, max_sent_len) # sen_num x sent_len

batch_masks = batch_masks.view(batch_size * max_doc_len, max_sent_len) # sen_num x sent_len

batch_hiddens = self.word_encoder(batch_inputs1, batch_inputs2,

batch_masks) # sen_num x sent_len x sent_rep_size

sent_reps, atten_scores = self.word_attention(batch_hiddens, batch_masks) # sen_num x sent_rep_size

sent_reps = sent_reps.view(batch_size, max_doc_len, self.sent_rep_size) # b x doc_len x sent_rep_size

batch_masks = batch_masks.view(batch_size, max_doc_len, max_sent_len) # b x doc_len x max_sent_len

sent_masks = batch_masks.bool().any(2).float() # b x doc_len

sent_hiddens = self.sent_encoder(sent_reps, sent_masks) # b x doc_len x doc_rep_size

doc_reps, atten_scores = self.sent_attention(sent_hiddens, sent_masks) # b x doc_rep_size

batch_outputs = self.out(doc_reps) # b x num_labels

return batch_outputs

model = Model(vocab)

参考阿里天池文本分类论坛分享代码

总结:

深度学习有待深入学习了解

参考链接

1、https://blog.csdn.net/weixin_43561290/article/details/101638438?biz_id=102&utm_term=word2vec&utm_medium=distribute.pc_search_result.none-task-blog-2allsobaiduweb~default-1-101638438&spm=1018.2118.3001.4187;

2、https://blog.csdn.net/HappyCtest/article/details/85091686?ops_request_misc=%257B%2522request%255Fid%2522%253A%2522159620380919724845052194%2522%252C%2522scm%2522%253A%252220140713.130102334…%2522%257D&request_id=159620380919724845052194&biz_id=0&utm_medium=distribute.pc_search_result.none-task-blog-2allfirst_rank_v2~rank_v25-2-85091686.first_rank_v2_rank_v25&utm_term=gensim+word2vec&spm=1018.2118.3001.4187

3、https://tianchi.aliyun.com/competition/entrance/531810/forum