Notes after watching Morvan's "Transfer Learning" tutorial: using VGG to estimate the size of tigers and cats

"""

This is a simple example of transfer learning using VGG.
Fine-tune a CNN from a classifier into a regressor.
Generate some fake data describing cat and tiger length.

Fake length setting:
Cat - Normal distribution (40, 8)
Tiger - Normal distribution (100, 30)

The VGG model and parameters are adopted from:
https://github.com/machrisaa/tensorflow-vgg

Learn more, visit my tutorial site: [莫烦Python](https://morvanzhou.github.io)

"""

from urllib.request import urlretrieve
import os
import numpy as np
import tensorflow as tf
import skimage.io
import skimage.transform
import matplotlib.pyplot as plt

# def download():     # download tiger and kittycat image
#     categories = ['tiger', 'kittycat']
#     for category in categories:
#         os.makedirs('./for_transfer_learning/data/%s' % category, exist_ok=True)
#         with open('./for_transfer_learning/imagenet_%s.txt' % category, 'r') as file:
#             urls = file.readlines()
#             n_urls = len(urls)
#             for i, url in enumerate(urls):
#                 try:
#                     urlretrieve(url.strip(), './for_transfer_learning/data/%s/%s' % (category, url.strip().split('/')[-1]))
#                     print('%s %i/%i' % (category, i, n_urls))
#                 except:
#                     print('%s %i/%i' % (category, i, n_urls), 'no image')

def load_img(path):
    img = skimage.io.imread(path)
    img = img / 255.0
    # print("Original Image Shape: ", img.shape)
    # we crop image from center
    short_edge = min(img.shape[:2])
    yy = int((img.shape[0] - short_edge) / 2)
    xx = int((img.shape[1] - short_edge) / 2)
    crop_img = img[yy: yy + short_edge, xx: xx + short_edge]
    # resize to 224, 224
    resized_img = skimage.transform.resize(crop_img, (224, 224))[None, :, :, :]   # shape [1, 224, 224, 3]
    return resized_img

def load_data():
    imgs = {'tiger': [], 'kittycat': []}
    for k in imgs.keys():
        # renamed from `dir` / `file` so the built-in dir() is not shadowed
        img_dir = 'D:/VGG_practice/for_transfer_learning/data/' + k
        for fname in os.listdir(img_dir):
            if not fname.lower().endswith('.jpg'):
                continue
            try:
                resized_img = load_img(os.path.join(img_dir, fname))
            except OSError:
                continue
            imgs[k].append(resized_img)    # [1, height, width, depth] * n
            if len(imgs[k]) == 400:        # only use 400 imgs to reduce my memory load
                break
    # fake length data for tiger and cat
    tigers_y = np.maximum(20, np.random.randn(len(imgs['tiger']), 1) * 30 + 100)
    cat_y = np.maximum(10, np.random.randn(len(imgs['kittycat']), 1) * 8 + 40)
    return imgs['tiger'], imgs['kittycat'], tigers_y, cat_y

# Note: tf.placeholder, tf.layers and tf.Session are TensorFlow 1.x APIs.
class Vgg16:
    vgg_mean = [103.939, 116.779, 123.68]
    print(3)    # debug print, runs once when the class body is executed

    def __init__(self, vgg16_npy_path=None, restore_from=None):
        # pre-trained parameters
        try:
            # allow_pickle=True is needed on newer NumPy, because the file stores a pickled dict
            self.data_dict = np.load(vgg16_npy_path, encoding='latin1', allow_pickle=True).item()
            print(Vgg16)    # debug print
        except FileNotFoundError:
            print('Please download VGG16 parameters from here https://mega.nz/#!YU1FWJrA!O1ywiCS2IiOlUCtCpI6HTJOMrneN-Qdv3ywQP5poecM\nOr from my Baidu Cloud: https://pan.baidu.com/s/1Spps1Wy0bvrQHH2IMkRfpg')

        self.tfx = tf.placeholder(tf.float32, [None, 224, 224, 3])
        self.tfy = tf.placeholder(tf.float32, [None, 1])

        # Convert RGB to BGR
        red, green, blue = tf.split(axis=3, num_or_size_splits=3, value=self.tfx * 255.0)
        bgr = tf.concat(axis=3, values=[
            blue - self.vgg_mean[0],
            green - self.vgg_mean[1],
            red - self.vgg_mean[2],
        ])

        # pre-trained VGG layers are fixed in fine-tune
        conv1_1 = self.conv_layer(bgr, "conv1_1")
        conv1_2 = self.conv_layer(conv1_1, "conv1_2")
        pool1 = self.max_pool(conv1_2, 'pool1')

        conv2_1 = self.conv_layer(pool1, "conv2_1")
        conv2_2 = self.conv_layer(conv2_1, "conv2_2")
        pool2 = self.max_pool(conv2_2, 'pool2')

        conv3_1 = self.conv_layer(pool2, "conv3_1")
        conv3_2 = self.conv_layer(conv3_1, "conv3_2")
        conv3_3 = self.conv_layer(conv3_2, "conv3_3")
        pool3 = self.max_pool(conv3_3, 'pool3')

        conv4_1 = self.conv_layer(pool3, "conv4_1")
        conv4_2 = self.conv_layer(conv4_1, "conv4_2")
        conv4_3 = self.conv_layer(conv4_2, "conv4_3")
        pool4 = self.max_pool(conv4_3, 'pool4')

        conv5_1 = self.conv_layer(pool4, "conv5_1")
        conv5_2 = self.conv_layer(conv5_1, "conv5_2")
        conv5_3 = self.conv_layer(conv5_2, "conv5_3")
        pool5 = self.max_pool(conv5_3, 'pool5')

        # detach the original VGG fc layers and
        # rebuild your own fc layers to serve your own purpose
        self.flatten = tf.reshape(pool5, [-1, 7*7*512])
        self.fc6 = tf.layers.dense(self.flatten, 256, tf.nn.relu, name='fc6')
        self.out = tf.layers.dense(self.fc6, 1, name='out')

        self.sess = tf.Session()
        if restore_from:
            saver = tf.train.Saver()
            saver.restore(self.sess, restore_from)
        else:   # training graph
            self.loss = tf.losses.mean_squared_error(labels=self.tfy, predictions=self.out)
            self.train_op = tf.train.RMSPropOptimizer(0.001).minimize(self.loss)
            self.sess.run(tf.global_variables_initializer())

    def max_pool(self, bottom, name):
        return tf.nn.max_pool(bottom, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME', name=name)

    def conv_layer(self, bottom, name):
        with tf.variable_scope(name):   # CNN's filter is constant, NOT Variable that can be trained
            conv = tf.nn.conv2d(bottom, self.data_dict[name][0], [1, 1, 1, 1], padding='SAME')
            lout = tf.nn.relu(tf.nn.bias_add(conv, self.data_dict[name][1]))
            return lout

    def train(self, x, y):
        loss, _ = self.sess.run([self.loss, self.train_op], {self.tfx: x, self.tfy: y})
        return loss

    def predict(self, paths):
        fig, axs = plt.subplots(1, 2)
        for i, path in enumerate(paths):
            x = load_img(path)
            length = self.sess.run(self.out, {self.tfx: x})    # shape [1, 1]
            axs[i].imshow(x[0])
            # take the scalar explicitly instead of formatting a [1, 1] array
            axs[i].set_title('Len: %.1f cm' % length[0, 0])
            axs[i].set_xticks(())
            axs[i].set_yticks(())
        plt.show()

    def save(self, path='D:/VGG_practice/for_transfer_learning/model/transfer_learn'):
        saver = tf.train.Saver()
        saver.save(self.sess, path, write_meta_graph=False)

def train():
    tigers_x, cats_x, tigers_y, cats_y = load_data()

    # plot fake length distribution
    plt.hist(tigers_y, bins=20, label='Tigers')
    plt.hist(cats_y, bins=10, label='Cats')
    plt.legend()
    plt.xlabel('length')
    print(4)    # debug print
    plt.show()  # blocks here until the figure window is closed
    print(5)    # debug print, only reached after the window is closed

    xs = np.concatenate(tigers_x + cats_x, axis=0)
    ys = np.concatenate((tigers_y, cats_y), axis=0)
    print(1)    # debug print

    vgg = Vgg16(vgg16_npy_path='D:/VGG_practice/for_transfer_learning/vgg16.npy')
    print(2)    # debug print
    print('Net built')
    for i in range(100):
        b_idx = np.random.randint(0, len(xs), 6)
        train_loss = vgg.train(xs[b_idx], ys[b_idx])
        print(i, 'train loss: ', train_loss)

    vgg.save('D:/VGG_practice/for_transfer_learning/model/transfer_learn')      # save learned fc layers

def eval():
    vgg = Vgg16(vgg16_npy_path='D:/VGG_practice/for_transfer_learning/vgg16.npy',
                restore_from='D:/VGG_practice/for_transfer_learning/model/transfer_learn')
    vgg.predict(
        ['D:/VGG_practice/for_transfer_learning/data/kittycat/000129037.jpg',
         'D:/VGG_practice/for_transfer_learning/data/tiger/391412.jpg'])


if __name__ == '__main__':
    # download()
    # train()
    eval()

The code above is what Morvan published, and I tried running it myself. I had already downloaded the tiger and cat images from Baidu Cloud, so I did not need the code in def download() and commented it out.

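"Transfer learning" means standing on the shoulders of giants; as the saying goes, "stones from other hills can polish jade." In this code, the main body (everything before self.flatten) is the pre-trained VGG network, used as is. Only the final fully connected layers are replaced to serve our own purpose, which here is regressing a length instead of classifying an image.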
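To make the "frozen backbone, new head" idea concrete, here is a minimal NumPy sketch. It is not VGG: a fixed random matrix `W_frozen` stands in for the pre-trained conv layers, and `w_head` / `b_head` stand in for the new fully connected layers. All names, sizes, the toy data, and the learning rate are assumptions chosen for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for the pre-trained conv stack: fixed weights that are never updated.
W_frozen = rng.normal(size=(8, 16))
W_frozen_before = W_frozen.copy()


def frozen_features(x):
    """Frozen feature extractor (plays the role of conv1_1 ... pool5)."""
    return np.maximum(0.0, x @ W_frozen)  # ReLU features


def mse(pred, target):
    return float(np.mean((pred - target) ** 2))


# Toy regression data: 200 samples with 8 inputs each and a scalar target.
x = rng.normal(size=(200, 8))
y = x[:, :1] * 3.0 + 1.0

# New trainable head (plays the role of the replaced fully connected layers).
w_head = np.zeros((16, 1))
b_head = np.zeros(1)

feats = frozen_features(x)  # computed once, because the extractor never changes
loss_start = mse(feats @ w_head + b_head, y)

lr = 0.002
for _ in range(2000):
    err = feats @ w_head + b_head - y      # shape (200, 1)
    # Gradients of the mean squared error w.r.t. the head parameters only.
    grad_w = 2.0 * feats.T @ err / len(x)
    grad_b = 2.0 * err.mean(axis=0)
    w_head -= lr * grad_w
    b_head -= lr * grad_b

loss_end = mse(feats @ w_head + b_head, y)
print("loss before: %.3f, after: %.3f" % (loss_start, loss_end))
```

Only the head parameters receive gradient updates; `W_frozen` is never written to, which mirrors how conv_layer in the script uses the loaded weights as constants rather than trainable variables.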

Although the code is simple, it took me a whole afternoon of debugging to get it working. (Being a beginner really hurts!)

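Looking back, I can think of two possible reasons for the earlier failures:

1. The VGG model file had not been downloaded properly.
2. Running the code on a CPU takes a while. To follow the program's execution I had added print statements that output a few numbers, and I found that after plt.show() displayed a histogram, the program did not go any further. I kept debugging like this for a long time. Eventually I just left the figure on screen, and as soon as I closed it, the program started running... (I was speechless, hahaha.) In a script, plt.show() blocks by default until the figure window is closed, so the program was simply waiting for me.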
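One way to avoid waiting on the figure window is to write the histogram to a file instead of showing it. The sketch below assumes that saving a PNG is acceptable and uses Matplotlib's non-interactive `Agg` backend; the output path `out_path`, the seed, and the sample count of 400 are hypothetical choices, and the fake lengths are regenerated here rather than taken from load_data().

```python
import os
import tempfile

import matplotlib
matplotlib.use("Agg")  # non-interactive backend: no window opens, nothing blocks
import matplotlib.pyplot as plt
import numpy as np

rng = np.random.default_rng(0)

# Same fake length settings as in the script: N(100, 30) and N(40, 8), clipped from below.
tigers_y = np.maximum(20, rng.normal(100, 30, size=(400, 1)))
cats_y = np.maximum(10, rng.normal(40, 8, size=(400, 1)))

fig, ax = plt.subplots()
ax.hist(tigers_y.ravel(), bins=20, label="Tigers")
ax.hist(cats_y.ravel(), bins=10, label="Cats")
ax.legend()
ax.set_xlabel("length")

# Hypothetical output location; any writable path works.
out_path = os.path.join(tempfile.gettempdir(), "length_hist.png")
fig.savefig(out_path)
plt.close(fig)
print("histogram saved to", out_path)
```

If an interactive window is still wanted, `plt.show(block=False)` is another option, although the window may then close as soon as the script finishes.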

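Today is the first time I have written a blog post. I may be a beginner, but it is still worth having dreams. Don't forget the "Liang Jian" (drawn sword) spirit!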

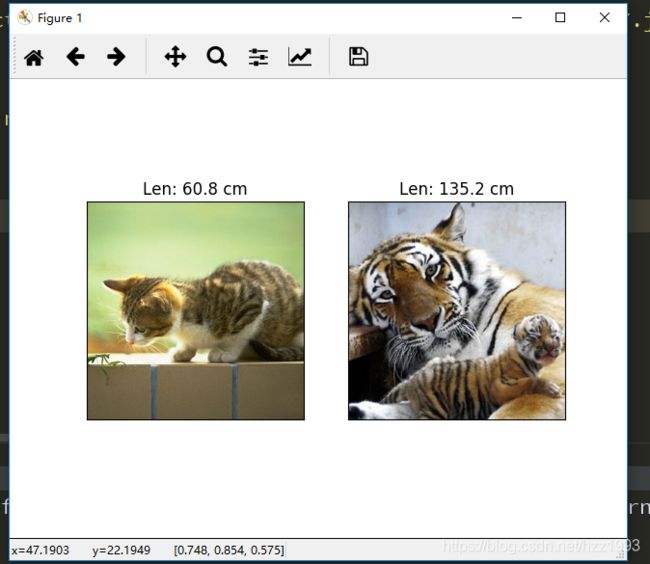

The figures below show the results of the run.

This is the cat and tiger size data that was fed in earlier:

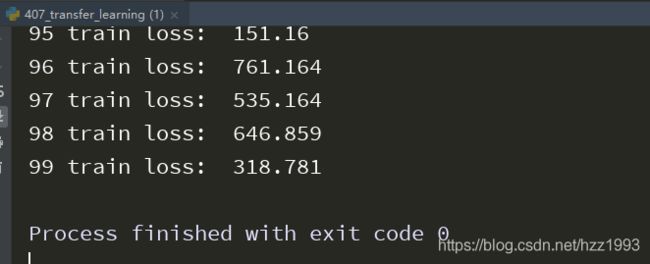

It started running...

This warning appeared...

The run finished.

All done! (This counts as my first step toward making a name for myself...)