Running the SOLO V1/V2 instance segmentation code and training it on your own dataset.

System: Ubuntu 18.04

Hardware: RTX 2080 Super

CUDA and cuDNN versions: CUDA 10.0, cuDNN 7.5.6

PyTorch version: PyTorch 1.2.0

Environment setup:

```shell
# Create the solo virtual environment
conda create -n solo python=3.7 -y
conda activate solo

# Download the SOLO source code and build it
git clone https://github.com/WXinlong/SOLO.git
cd SOLO
pip install -r requirements/build.txt
pip install "git+https://github.com/cocodataset/cocoapi.git#subdirectory=PythonAPI"
pip install -v -e .  # don't forget the trailing "."; make sure CUDA is on your system PATH
```
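After `pip install -v -e .`, a quick way to confirm that CUDA is on PATH and that PyTorch and the freshly built `mmdet` import cleanly is a small check script. This is a minimal sketch, not part of SOLO: `check_env` is a hypothetical helper, and it only reports what it finds without fixing anything.

```python
import importlib
import shutil


def check_env():
    """Collect version/visibility info for the pieces the SOLO build relies on."""
    info = {'nvcc': shutil.which('nvcc')}  # None if CUDA's bin directory is not on PATH
    try:
        torch = importlib.import_module('torch')
        info['torch'] = torch.__version__
        info['torch_cuda'] = torch.version.cuda  # CUDA version PyTorch was built with
        info['cuda_available'] = torch.cuda.is_available()
    except Exception as exc:  # ImportError, or a broken CUDA library
        info['torch'] = 'import failed: %s' % exc
    try:
        mmdet = importlib.import_module('mmdet')
        info['mmdet'] = getattr(mmdet, '__version__', 'unknown')
    except Exception as exc:  # e.g. the CUDA extensions were not compiled
        info['mmdet'] = 'import failed: %s' % exc
    return info


for key, value in check_env().items():
    print('%-15s %s' % (key, value))
```

If `nvcc` prints `None` or `cuda_available` is `False`, the build step above most likely did not see your CUDA installation.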

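Once the steps above are done, you can run the demo. However, SOLO only ships a single-image prediction demo, and webcam prediction does not work out of the box. The code below performs real-time detection on a webcam feed; give it a try: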

```python
import cv2

from mmdet.apis import inference_detector, init_detector, show_result_ins


def main():
    # Paths are relative to the SOLO/demo directory; adjust them to your setup.
    config_file = '../configs/solov2/solov2_light_448_r18_fpn_8gpu_3x.py'
    checkpoint_file = '../checkpoints/SOLOv2_LIGHT_448_R18_3x.pth'
    model = init_detector(config_file, checkpoint_file, device='cuda:0')

    camera = cv2.VideoCapture(0)
    print('Press "Esc", "q" or "Q" to exit.')

    while True:
        ret_val, img = camera.read()
        if not ret_val:  # camera disconnected or no frame available
            break

        result = inference_detector(model, img)
        # Guard against frames with no detections, where result[0] may be None.
        if result[0] is None:
            image = img
        else:
            # Leave out_file unset: show_result_ins returns the rendered image only
            # when out_file is None; with out_file it writes to disk instead.
            image = show_result_ins(img, result, model.CLASSES, score_thr=0.25)

        cv2.imshow('zzw', image)
        ch = cv2.waitKey(1)
        if ch == 27 or ch == ord('q') or ch == ord('Q'):
            break

    camera.release()
    cv2.destroyAllWindows()


if __name__ == '__main__':
    main()
```

Dataset preparation:

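We annotated the dataset with labelme, which produces one JSON annotation file per image. After annotation, all of these JSON files need to be merged into a single COCO-format JSON file. The code is as follows: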

# -*- coding:utf-8 -*-

# !/usr/bin/env python

import argparse

import json

import matplotlib.pyplot as plt

import skimage.io as io

import cv2

from labelme import utils

import numpy as np

import glob

import PIL.Image

class MyEncoder(json.JSONEncoder):

def default(self, obj):

if isinstance(obj, np.integer):

return int(obj)

elif isinstance(obj, np.floating):

return float(obj)

elif isinstance(obj, np.ndarray):

return obj.tolist()

else:

return super(MyEncoder, self).default(obj)

class labelme2coco(object):

def __init__(self, labelme_json=[], save_json_path='./tran.json'):

'''

:param labelme_json: 所有labelme的json文件路径组成的列表

:param save_json_path: json保存位置

'''

self.labelme_json = labelme_json

self.save_json_path = save_json_path

self.images = []

self.categories = []

self.annotations = []

# self.data_coco = {}

self.label = []

self.annID = 1

self.height = 0

self.width = 0

self.save_json()

def data_transfer(self):

for num, json_file in enumerate(self.labelme_json):

with open(json_file, 'r') as fp:

data = json.load(fp) # 加载json文件

self.images.append(self.image(data, num))

for shapes in data['shapes']:

label = shapes['label']

if label not in self.label:

self.categories.append(self.categorie(label))

self.label.append(label)

points = shapes['points']#这里的point是用rectangle标注得到的,只有两个点,需要转成四个点

points.append([points[0][0],points[1][1]])

points.append([points[1][0],points[0][1]])

self.annotations.append(self.annotation(points, label, num))

self.annID += 1

def image(self, data, num):

image = {}

img = utils.img_b64_to_arr(data['imageData']) # 解析原图片数据

# img=io.imread(data['imagePath']) # 通过图片路径打开图片

# img = cv2.imread(data['imagePath'], 0)

height, width = img.shape[:2]

img = None

image['height'] = height

image['width'] = width

image['id'] = num + 1

image['file_name'] = data['imagePath'].split('/')[-1]

self.height = height

self.width = width

return image

def categorie(self, label):

categorie = {}

categorie['supercategory'] = 'Cancer'

categorie['id'] = len(self.label) + 1 # 0 默认为背景

categorie['name'] = label

return categorie

def annotation(self, points, label, num):

annotation = {}

annotation['segmentation'] = [list(np.asarray(points).flatten())]

annotation['iscrowd'] = 0

annotation['image_id'] = num + 1

# annotation['bbox'] = str(self.getbbox(points)) # 使用list保存json文件时报错(不知道为什么)

# list(map(int,a[1:-1].split(','))) a=annotation['bbox'] 使用该方式转成list

annotation['bbox'] = list(map(float, self.getbbox(points)))

annotation['area'] = annotation['bbox'][2] * annotation['bbox'][3]

# annotation['category_id'] = self.getcatid(label)

annotation['category_id'] = self.getcatid(label)

annotation['id'] = self.annID

return annotation

def getcatid(self, label):

for categorie in self.categories:

if label == categorie['name']:

return categorie['id']

return 1

def getbbox(self, points):

# img = np.zeros([self.height,self.width],np.uint8)

# cv2.polylines(img, [np.asarray(points)], True, 1, lineType=cv2.LINE_AA) # 画边界线

# cv2.fillPoly(img, [np.asarray(points)], 1) # 画多边形 内部像素值为1

polygons = points

mask = self.polygons_to_mask([self.height, self.width], polygons)

return self.mask2box(mask)

def mask2box(self, mask):

'''从mask反算出其边框

mask:[h,w] 0、1组成的图片

1对应对象,只需计算1对应的行列号(左上角行列号,右下角行列号,就可以算出其边框)

'''

# np.where(mask==1)

index = np.argwhere(mask == 1)

rows = index[:, 0]

clos = index[:, 1]

# 解析左上角行列号

left_top_r = np.min(rows) # y

left_top_c = np.min(clos) # x

# 解析右下角行列号

right_bottom_r = np.max(rows)

right_bottom_c = np.max(clos)

# return [(left_top_r,left_top_c),(right_bottom_r,right_bottom_c)]

# return [(left_top_c, left_top_r), (right_bottom_c, right_bottom_r)]

# return [left_top_c, left_top_r, right_bottom_c, right_bottom_r] # [x1,y1,x2,y2]

return [left_top_c, left_top_r, right_bottom_c - left_top_c,

right_bottom_r - left_top_r] # [x1,y1,w,h] 对应COCO的bbox格式

def polygons_to_mask(self, img_shape, polygons):

mask = np.zeros(img_shape, dtype=np.uint8)

mask = PIL.Image.fromarray(mask)

xy = list(map(tuple, polygons))

PIL.ImageDraw.Draw(mask).polygon(xy=xy, outline=1, fill=1)

mask = np.array(mask, dtype=bool)

return mask

def data2coco(self):

data_coco = {}

data_coco['images'] = self.images

data_coco['categories'] = self.categories

data_coco['annotations'] = self.annotations

return data_coco

def save_json(self):

self.data_transfer()

self.data_coco = self.data2coco()

# 保存json文件

json.dump(self.data_coco, open(self.save_json_path, 'w'), indent=4, cls=MyEncoder)

labelme_json = glob.glob('C:/Users/86183/Desktop/shujuji/train/json1/*.json')

# labelme_json=['./Annotations/*.json']

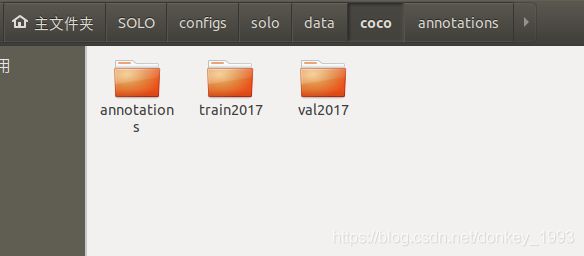

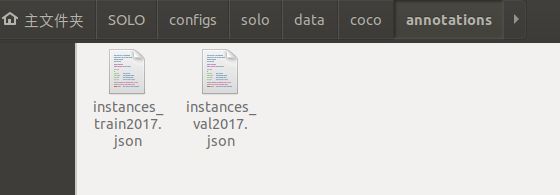

labelme2coco(labelme_json, 'C:/Users/86183/Desktop/train/test.json')转换完之后,我们需要生成如下几个文件夹。annotations存储的是我们上面转换的json文件。train2017和val2017存储的是训练和测试的图片。
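Before training, it can help to sanity-check the merged file. The sketch below uses `check_coco`, a hypothetical helper that is not part of SOLO or mmdet. It flags annotations that point to missing images or categories, as well as boxes that are degenerate or fall outside the image. It also returns the category names in the order they appear in `categories`. As far as I can tell from mmdet 1.x's `CocoDataset`, labels are assigned in that order rather than by name, so the dataset's `CLASSES` should follow it.

```python
from collections import Counter


def check_coco(coco):
    """Sanity-check a COCO-style annotation dict produced by the converter.

    Returns (class_names, problems):
      class_names: category names in the order they appear in 'categories';
      problems: list of human-readable issues (empty if none were found).
    """
    problems = []
    sizes = {img['id']: (img['width'], img['height']) for img in coco['images']}
    if len(sizes) != len(coco['images']):
        problems.append('duplicate image ids')
    cat_ids = {cat['id'] for cat in coco['categories']}

    ann_ids = Counter(ann['id'] for ann in coco['annotations'])
    problems += ['duplicate annotation id %d' % i for i, n in ann_ids.items() if n > 1]

    for ann in coco['annotations']:
        aid = ann['id']
        if ann['image_id'] not in sizes:
            problems.append('annotation %d: unknown image_id %d' % (aid, ann['image_id']))
            continue
        if ann['category_id'] not in cat_ids:
            problems.append('annotation %d: unknown category_id %d' % (aid, ann['category_id']))
        x, y, w, h = ann['bbox']
        img_w, img_h = sizes[ann['image_id']]
        if w < 1 or h < 1:
            # mmdet's CocoDataset appears to skip boxes smaller than 1 px
            problems.append('annotation %d: degenerate box %s' % (aid, ann['bbox']))
        if x < 0 or y < 0 or x + w > img_w or y + h > img_h:
            problems.append('annotation %d: box outside image' % aid)

    class_names = [cat['name'] for cat in coco['categories']]
    return class_names, problems
```

Usage: `names, issues = check_coco(json.load(open('annotations/instances_train2017.json')))`, then copy `names` into the `CLASSES` of your dataset class and fix anything listed in `issues`.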

Next, create a class for our own dataset. In the SOLO/mmdet/datasets folder, create a file for your dataset; I created pig_data.py:

```python
from .coco import CocoDataset
from .registry import DATASETS


@DATASETS.register_module
class pig_data(CocoDataset):
    # List names in the same order as "categories" in the annotation JSON
    CLASSES = ['pig_standing', 'pig_kneeling', 'pig_side_lying',
               'pig_action_unknown', 'pig_climbing', 'person']
```

Then modify SOLO/mmdet/datasets/__init__.py to add our dataset:

```python
from .builder import build_dataset
from .cityscapes import CityscapesDataset
from .coco import CocoDataset
from .custom import CustomDataset
from .dataset_wrappers import ConcatDataset, RepeatDataset
from .loader import DistributedGroupSampler, GroupSampler, build_dataloader
from .registry import DATASETS
from .voc import VOCDataset
from .wider_face import WIDERFaceDataset
from .xml_style import XMLDataset
from .pig_data import pig_data  # add our dataset

__all__ = [
    'CustomDataset', 'XMLDataset', 'CocoDataset', 'VOCDataset',
    'CityscapesDataset', 'GroupSampler', 'DistributedGroupSampler',
    'build_dataloader', 'ConcatDataset', 'RepeatDataset', 'WIDERFaceDataset',
    'DATASETS', 'build_dataset', 'pig_data'
]
```

Modify the training config:

The model configs live in the SOLO/configs/solo folder; I modified solo_r50_fpn_8gpu_3x.py. Modify whichever config you want to train.

```python
# model settings
model = dict(
    type='SOLO',
    pretrained='torchvision://resnet50',
    backbone=dict(
        type='ResNet',
        depth=50,
        num_stages=4,
        out_indices=(0, 1, 2, 3),  # C2, C3, C4, C5
        frozen_stages=1,
        style='pytorch'),
    neck=dict(
        type='FPN',
        in_channels=[256, 512, 1024, 2048],
        out_channels=256,
        start_level=0,
        num_outs=5),
    bbox_head=dict(
        type='SOLOHead',
        num_classes=7,  # 6 object classes + 1 background (COCO configs use 81 for 80 classes)
        in_channels=256,
        stacked_convs=7,
        seg_feat_channels=256,
        strides=[8, 8, 16, 32, 32],
        scale_ranges=((1, 96), (48, 192), (96, 384), (192, 768), (384, 2048)),
        sigma=0.2,
        num_grids=[40, 36, 24, 16, 12],
        cate_down_pos=0,
        with_deform=False,
        loss_ins=dict(
            type='DiceLoss',
            use_sigmoid=True,
            loss_weight=3.0),
        loss_cate=dict(
            type='FocalLoss',
            use_sigmoid=True,
            gamma=2.0,
            alpha=0.25,
            loss_weight=1.0),
    ))
# training and testing settings
train_cfg = dict()
test_cfg = dict(
    nms_pre=500,
    score_thr=0.1,
    mask_thr=0.5,
    update_thr=0.05,
    kernel='gaussian',  # gaussian/linear
    sigma=2.0,
    max_per_img=100)
# dataset settings
dataset_type = 'pig_data'  # name of your dataset class
data_root = '/home/uc/SOLO/configs/solo/data/coco/'  # folder containing your dataset
img_norm_cfg = dict(
    mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=True)
train_pipeline = [
    dict(type='LoadImageFromFile'),
    dict(type='LoadAnnotations', with_bbox=True, with_mask=True),
    dict(type='Resize',
         img_scale=[(1333, 800), (1333, 768), (1333, 736),  # training image sizes can be changed here
                    (1333, 704), (1333, 672), (1333, 640)],
         multiscale_mode='value',
         keep_ratio=True),
    dict(type='RandomFlip', flip_ratio=0.5),
    dict(type='Normalize', **img_norm_cfg),
    dict(type='Pad', size_divisor=32),
    dict(type='DefaultFormatBundle'),
    dict(type='Collect', keys=['img', 'gt_bboxes', 'gt_labels', 'gt_masks']),
]
test_pipeline = [
    dict(type='LoadImageFromFile'),
    dict(
        type='MultiScaleFlipAug',
        img_scale=(1333, 800),
        flip=False,
        transforms=[
            dict(type='Resize', keep_ratio=True),
            dict(type='RandomFlip'),
            dict(type='Normalize', **img_norm_cfg),
            dict(type='Pad', size_divisor=32),
            dict(type='ImageToTensor', keys=['img']),
            dict(type='Collect', keys=['img']),
        ])
]
data = dict(
    imgs_per_gpu=1,
    workers_per_gpu=1,
    train=dict(
        type=dataset_type,
        ann_file=data_root + 'annotations/instances_train2017.json',  # training annotations
        img_prefix=data_root + 'train2017/',
        pipeline=train_pipeline),
    val=dict(
        type=dataset_type,
        ann_file=data_root + 'annotations/instances_val2017.json',  # validation annotations
        img_prefix=data_root + 'val2017/',
        pipeline=test_pipeline),
    test=dict(
        type=dataset_type,
        ann_file=data_root + 'annotations/instances_val2017.json',
        img_prefix=data_root + 'val2017/',
        pipeline=test_pipeline))
# optimizer
optimizer = dict(type='SGD', lr=0.01, momentum=0.9, weight_decay=0.0001)
optimizer_config = dict(grad_clip=dict(max_norm=35, norm_type=2))
# learning policy
lr_config = dict(
    policy='step',
    warmup='linear',
    warmup_iters=500,
    warmup_ratio=1.0 / 3,
    step=[27, 33])
checkpoint_config = dict(interval=1)
# yapf:disable
log_config = dict(
    interval=50,
    hooks=[
        dict(type='TextLoggerHook'),
        # dict(type='TensorboardLoggerHook')
    ])
# yapf:enable
# runtime settings
total_epochs = 36
device_ids = range(8)
dist_params = dict(backend='nccl')
log_level = 'INFO'
work_dir = './work_dirs/solo_release_r50_fpn_8gpu_3x'  # where checkpoints and logs are saved
load_from = None
resume_from = None
workflow = [('train', 1)]
```
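The `optimizer` and `lr_config` settings above can be worked through numerically. The sketch below is my reading of mmcv's step policy with linear warmup, not mmcv code. It computes the learning rate at a given epoch and iteration, assuming mmcv's default step factor `gamma=0.1`. `scaled_lr` applies the linear scaling rule. The "8gpu" in the config name suggests `lr=0.01` was tuned for a larger total batch; assuming the upstream 2 images per GPU (batch 16), training with `imgs_per_gpu=1` on one GPU may call for a smaller rate. Both function names are hypothetical.

```python
def scaled_lr(base_lr, base_batch, num_gpus, imgs_per_gpu):
    """Linear scaling rule: scale the learning rate with the total batch size."""
    return base_lr * (num_gpus * imgs_per_gpu) / base_batch


def lr_at(epoch, cur_iter, base_lr=0.01, steps=(27, 33), gamma=0.1,
          warmup_iters=500, warmup_ratio=1.0 / 3):
    """Learning rate under a step policy with linear warmup.

    epoch: 0-indexed epoch; lr is multiplied by gamma once per step passed.
    cur_iter: 0-indexed global iteration; warmup covers the first warmup_iters.
    """
    exp = sum(1 for s in steps if epoch >= s)
    regular_lr = base_lr * gamma ** exp
    if cur_iter < warmup_iters:
        # Ramps linearly from regular_lr * warmup_ratio up to regular_lr
        k = (1 - cur_iter / warmup_iters) * (1 - warmup_ratio)
        return regular_lr * (1 - k)
    return regular_lr


# Assumed upstream setting: 8 GPUs x 2 images = batch 16 at lr 0.01.
lr_1gpu = scaled_lr(0.01, base_batch=16, num_gpus=1, imgs_per_gpu=1)
print('scaled lr for 1 GPU x 1 image:', lr_1gpu)
for epoch, it in [(0, 0), (0, 250), (1, 1000), (27, 50000), (33, 60000)]:
    print(epoch, it, lr_at(epoch, it, base_lr=lr_1gpu))
```

With `step=[27, 33]` and `total_epochs = 36`, the rate drops tenfold at the start of the 28th and the 34th epochs.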

That is all it takes to train SOLO on your own dataset. In my tests the segmentation results were very good, with fairly clean mask edges.