XGBoost Parameter Tuning

import pandas as pd

import numpy as np

from sklearn.metrics import mean_absolute_error,make_scorer

import xgboost as xgb

from sklearn.model_selection import GridSearchCV

import warnings

warnings.filterwarnings('ignore')

train_path=r'C:\Users\10991\Desktop\kaggle\baoxian_train.csv'
test_path=r'C:\Users\10991\Desktop\kaggle\baoxian_test.csv'
train=pd.read_csv(train_path)

train['log_loss']=np.log(train['loss'])

features=[x for x in train.columns if x not in ['loss','id','log_loss']]

cat_features=[x for x in train.select_dtypes(include=['object']).columns if x not in ['loss','id','log_loss']]

num_features=[x for x in train.select_dtypes(exclude=['object']).columns if x not in ['loss','id','log_loss']]

print(len(cat_features))

print(len(num_features))

ntrain=train.shape[0]

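train_x=train[features].copy() # copy so the in-place encoding below does not write into a view of train
train_y=train['log_loss']
# Encode each categorical column as integer category codes
for col in cat_features:
    train_x[col]=train_x[col].astype('category').cat.codes
print(train_x.shape)
print(train_y.shape)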
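
The encoding step above can be illustrated on a tiny frame. This is a minimal sketch on made-up data (the column names `cat1` and `cont1` are hypothetical), showing that `astype('category').cat.codes` numbers the categories in sorted order within the data it is given.

```python
import pandas as pd

# Hypothetical miniature of the training frame: one categorical, one numeric column.
df = pd.DataFrame({"cat1": ["B", "A", "C", "A"], "cont1": [0.1, 0.5, 0.3, 0.9]})

encoded = df.copy()
for col in ["cat1"]:
    # Categories are sorted (A, B, C), so A -> 0, B -> 1, C -> 2.
    encoded[col] = encoded[col].astype("category").cat.codes

print(encoded)
```

Because the codes depend on which categories are present, a test set encoded separately may receive different codes for the same value. Encoding train and test together, or reusing the training categories, avoids that mismatch.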

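def xg_eval_mae(yhat,dtrain): # custom metric: mean absolute error on the original loss scale
    y=dtrain.get_label()
    # labels and predictions are log(loss), so undo the log before measuring the error
    return 'mae',mean_absolute_error(np.exp(y),np.exp(yhat))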
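
To see why the metric applies `np.exp` before computing the error, here is a small sketch with made-up loss values (none of these numbers come from the dataset). It compares the MAE in log units with the MAE in the original units.

```python
import numpy as np

def mae(y_true, y_pred):
    """Mean absolute error of two equal-length arrays."""
    return float(np.mean(np.abs(np.asarray(y_true) - np.asarray(y_pred))))

# Hypothetical true losses and predictions on the original scale.
loss_true = np.array([100.0, 1000.0, 10000.0])
loss_pred = np.array([110.0, 900.0, 12000.0])

# The model is trained on log(loss), so its raw outputs live on the log scale.
log_true = np.log(loss_true)
log_pred = np.log(loss_pred)

mae_log = mae(log_true, log_pred)                       # error in log units
mae_original = mae(np.exp(log_true), np.exp(log_pred))  # error in loss units
print(mae_log, mae_original)
```

The log-scale error is small and hard to interpret, while the back-transformed error is in the same units as `loss`, which is what the competition metric measures.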

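class XGBoostRegressor():
    # Thin wrapper around xgb.train / xgb.cv that exposes the get_params / set_params interface GridSearchCV relies on
    def __init__(self,**kwargs):
        self.params=kwargs
        # fall back to 10 rounds (the xgb.train default) when num_boost_round is not passed
        self.num_boost_round=self.params.get('num_boost_round',10)
        # 'reg:squarederror' and 'verbosity' are the current names of the older 'reg:linear' and 'silent' settings
        self.params.update({'verbosity':0,'objective':'reg:squarederror','seed':0})

    def fit(self,x_train,y_train):
        dtrain=xgb.DMatrix(x_train,y_train)
        # XGBoost 1.6+ deprecates feval in favor of custom_metric; switch the keyword if your version rejects feval
        self.bst=xgb.train(params=self.params,dtrain=dtrain,num_boost_round=self.num_boost_round,feval=xg_eval_mae,maximize=False)
        return self

    def predict(self,x_pred):
        dpred=xgb.DMatrix(x_pred)
        return self.bst.predict(dpred)

    def kfold(self,x_train,y_train,nfold=5):
        dtrain=xgb.DMatrix(x_train,y_train)
        cv_round=xgb.cv(params=self.params,dtrain=dtrain,num_boost_round=self.num_boost_round,nfold=nfold,feval=xg_eval_mae,maximize=False,early_stopping_rounds=10)
        # the last row holds the train/test metrics of the final boosting round
        return cv_round.iloc[-1,:]

    def plot_feature_importance(self):
        feat_imp = pd.Series(self.bst.get_fscore()).sort_values(ascending=False)
        feat_imp.plot(title='Feature importances')

    def get_params(self,deep=True):
        return self.params

    def set_params(self,**params):
        self.params.update(params)
        # keep the round count in sync when a search changes num_boost_round
        self.num_boost_round=self.params.get('num_boost_round',self.num_boost_round)
        return self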

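def mae_score(y_true,y_pred): # mean absolute error on the original loss scale
    return mean_absolute_error(np.exp(y_true),np.exp(y_pred))

# greater_is_better=False makes the scorer return the negated MAE, so GridSearchCV can still maximize it
mae_score=make_scorer(mae_score,greater_is_better=False)

# Baseline model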

bst=XGBoostRegressor(eta=0.1,colsample_bytree=0.5,subsample=0.5,max_depth=5,min_child_weight=3,num_boost_round=50)

print(bst.kfold(train_x,train_y,nfold=5))

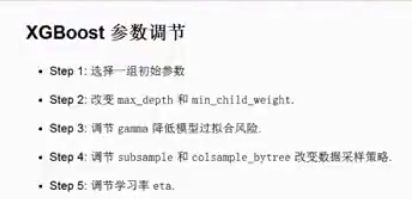

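# Tree depth (max_depth) and minimum child weight (min_child_weight)
# Note: range(4,5) contains only 4, so this grid tries max_depth=4 with min_child_weight 1 and 3; widen both lists for a fuller search
xgb_param_grid={'max_depth':list(range(4,5)),'min_child_weight':[1,3]}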

grid=GridSearchCV(XGBoostRegressor(eta=0.1,colsample_bytree=0.5,subsample=0.5,num_boost_round=50),param_grid=xgb_param_grid,cv=2,scoring=mae_score)

grid.fit(train_x,train_y.values)

print(grid.scorer_)

print(grid.best_params_)

print(grid.best_score_)
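
Since the scorer was built with `greater_is_better=False`, `grid.best_score_` is reported as a negative number. The following sketch shows the sign flip on toy data, using scikit-learn's `DummyRegressor` as a stand-in model (an assumption for illustration, not the model tuned here).

```python
import numpy as np
from sklearn.dummy import DummyRegressor
from sklearn.metrics import make_scorer, mean_absolute_error

# Toy data: the dummy model always predicts the mean of y, which is 3.0.
X = np.zeros((4, 1))
y = np.array([1.0, 2.0, 3.0, 6.0])
model = DummyRegressor(strategy="mean").fit(X, y)

scorer = make_scorer(mean_absolute_error, greater_is_better=False)
score = scorer(model, X, y)                         # negated MAE
raw_mae = mean_absolute_error(y, model.predict(X))  # plain MAE
print(score, raw_mae)
```

Reading the result as `-grid.best_score_` recovers the MAE, and the best parameter set is the one whose score is closest to zero.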

# gamma: the minimum loss reduction required before a node is split further

xgb_param_grid={'gamma':[0.1*i for i in range(0,5)]}

grid=GridSearchCV(XGBoostRegressor(eta=0.1,colsample_bytree=0.5,subsample=0.5,max_depth=8,min_child_weight=6,num_boost_round=50),param_grid=xgb_param_grid,cv=2,scoring=mae_score)

grid.fit(train_x,train_y.values)

print(grid.scorer_)

print(grid.best_params_)

print(grid.best_score_)

# Tune the sampling ratios: subsample (rows) and colsample_bytree (columns)

xgb_param_grid={'subsample':[0.1*i for i in range(6,9)],'colsample_bytree':[0.1*i for i in range(6,9)]}

grid=GridSearchCV(XGBoostRegressor(eta=0.1,gamma=0.2,max_depth=8,min_child_weight=6,num_boost_round=50),param_grid=xgb_param_grid,cv=2,scoring=mae_score)

grid.fit(train_x,train_y.values)

print(grid.scorer_)

print(grid.best_params_)

print(grid.best_score_)

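# Lower the learning rate and increase the number of trees (results differ considerably)
# Note: num_boost_round stays at 50 in this grid, so only eta varies here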

xgb_param_grid={'eta':[0.5,0.3,0.1,0.05,0.03,0.01]}

grid=GridSearchCV(XGBoostRegressor(gamma=0.2,max_depth=8,min_child_weight=6,num_boost_round=50,colsample_bytree=0.5,subsample=0.5),param_grid=xgb_param_grid,cv=2,scoring=mae_score)

grid.fit(train_x,train_y.values)

print(grid.scorer_)

print(grid.best_params_)

print(grid.best_score_)

Code for inspecting the data:

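import matplotlib.pyplot as plt
import seaborn as sns
from scipy import stats

print(train.shape)
print(train.describe()) # summary statistics; check whether the features are already standardized
print(pd.isnull(train).values.any()) # check for missing values
train.info() # column dtypes and non-null counts (info() prints directly and returns None)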

cat_features=list(train.select_dtypes(include=['object']).columns)

cont_features=[cont for cont in list(train.select_dtypes(include=['float64','int64']).columns) if cont not in ['loss','id']]

id_col=list(train.select_dtypes(include=['int64']).columns)

print("Categorical features: {}".format(len(cat_features)))
print("Continuous features: {}".format(len(cont_features)))
print("ID column: {}".format(id_col))

cat_uniques=[] # number of distinct values in each categorical feature
for cat in cat_features:
    cat_uniques.append(len(train[cat].unique()))
# DataFrame.from_items was removed in pandas 1.0; a dict of columns builds the same table
uniq_value_in_categories=pd.DataFrame({'cat_names':cat_features,'unique_value':cat_uniques})
print(uniq_value_in_categories.head(5))
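
The same per-column count can also be obtained with `DataFrame.nunique()`. The sketch below uses a made-up two-column frame (the names `cat1` and `cat2` are hypothetical) and produces a table with the same `cat_names` and `unique_value` columns.

```python
import pandas as pd

# Hypothetical categorical columns standing in for train[cat_features].
df = pd.DataFrame({"cat1": ["A", "B", "A"], "cat2": ["X", "Y", "Z"]})

# nunique() counts distinct values per column; reset_index turns the result into a two-column table.
summary = df.nunique().rename_axis("cat_names").reset_index(name="unique_value")
print(summary)
```

This avoids the explicit loop and keeps the column names attached to their counts.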

plt.figure(figsize=(16,8)) # target values plotted against id

plt.plot(train['id'],train['loss'])

plt.show()

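print(stats.mstats.skew(train['loss']).data) # skewness of the target; a value above 1 means the distribution is strongly skewed, which hurts modeling
print(stats.mstats.skew(np.log(train['loss'])).data) # after the log transform the skewness drops and the distribution is more symmetric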
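
The effect of the log transform on skewness can be reproduced without the dataset. This sketch draws synthetic log-normal values as a stand-in for `loss` (the distribution and its parameters are assumptions chosen only for illustration) and compares the skewness before and after taking the log.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(0)

# Synthetic right-skewed stand-in for the loss column (log-normal by construction).
fake_loss = rng.lognormal(mean=7.0, sigma=1.0, size=10_000)

skew_raw = stats.skew(fake_loss)          # heavy right tail, large positive skew
skew_log = stats.skew(np.log(fake_loss))  # log of log-normal data is normal, skew near 0
print(skew_raw, skew_log)
```

On real data the log-transformed skewness will usually not be this close to zero, but a clear drop is the sign that the transform helps.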

fig,(ax1,ax2)=plt.subplots(1,2) # plot the target distribution before and after the log transform

fig.set_size_inches(16,5)

ax1.hist(train['loss'],bins=50)

ax1.set_title("Train loss target histogram")

ax2.hist(np.log(train['loss']),bins=50,color='g')

ax2.set_title("Train log loss target histogram")

plt.show()

a=train[cont_features].hist(bins=50,figsize=(16,12)) # distribution of each continuous feature

plt.subplots(figsize=(16,9)) # correlation between features, used to spot highly similar ones
correlation=train[cont_features].corr()
sns.heatmap(correlation,annot=True)
plt.show()
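
Beyond reading the heatmap by eye, the highly correlated pairs can be listed programmatically. This is a minimal sketch on synthetic columns (the names `cont_a`, `cont_b`, `cont_c` and the 0.9 threshold are hypothetical choices, not values from the dataset).

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
a = rng.normal(size=500)
df = pd.DataFrame({
    "cont_a": a,
    "cont_b": a + rng.normal(scale=0.05, size=500),  # nearly a copy of cont_a
    "cont_c": rng.normal(size=500),                   # independent of the others
})

corr = df.corr().abs()
# Keep only the upper triangle so each pair appears once and the diagonal is dropped.
upper = corr.where(np.triu(np.ones(corr.shape, dtype=bool), k=1))

threshold = 0.9  # hypothetical cut-off for "highly similar"
pairs = [
    (row, col, float(upper.loc[row, col]))
    for row in upper.index
    for col in upper.columns
    if upper.loc[row, col] > threshold  # NaN entries compare as False and are skipped
]
print(pairs)
```

With the real data, `df` would be `train[cont_features]`, and one feature from each listed pair becomes a candidate for removal.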