Chapter 5 Deep Learning and Keras Engineering Practice

5.3 Using Keras

5.3.2 Two Ways to Stack Layers into a Neural Network in Keras

1. Sequential model (a linear stack of layers)

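```python
import numpy as np
from keras.models import Sequential
from keras.layers import Dense
from keras.utils import to_categorical

# Hypothetical random data, only so the example runs: 100 features, 5 classes
x_train = np.random.random((1000, 100))
y_train = to_categorical(np.random.randint(5, size=(1000,)), num_classes=5)
x_test = np.random.random((200, 100))

model = Sequential()
# Only the first layer needs the input size; later layers infer it
model.add(Dense(units=4, activation='relu', input_dim=100))
model.add(Dense(units=5, activation='softmax'))

model.compile(loss='categorical_crossentropy',
              optimizer='sgd',
              metrics=['accuracy'])
model.fit(x_train, y_train, epochs=5, batch_size=32)
# Returns class probabilities with shape (200, 5)
classes = model.predict(x_test, batch_size=128)
```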
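The parameter count of this Sequential stack follows directly from the layer widths: a `Dense` layer with `n` inputs and `units` outputs has an `n * units` kernel plus `units` bias terms. The pure-Python sketch below does that arithmetic for the 100 → 4 → 5 stack. The helper names `dense_params` and `sequential_params` are hypothetical and not part of Keras; the total should agree with the "Total params" line that `model.summary()` prints.

```python
def dense_params(input_dim, units, use_bias=True):
    """Trainable parameters of one fully connected (Dense) layer."""
    # Kernel has shape (input_dim, units); bias has shape (units,)
    return input_dim * units + (units if use_bias else 0)


def sequential_params(input_dim, layer_units):
    """Per-layer parameter counts for a plain stack of Dense layers."""
    counts = []
    for units in layer_units:
        counts.append(dense_params(input_dim, units))
        # This layer's output width is the next layer's input width
        input_dim = units
    return counts


# Same stack as the Sequential example: 100 -> Dense(4) -> Dense(5)
per_layer = sequential_params(100, [4, 5])
print(per_layer, sum(per_layer))  # [404, 25] 429
```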

2. Functional API

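```python
import numpy as np
from keras.layers import Input, Dense
from keras.models import Model
from keras.utils import to_categorical

# Hypothetical random data, only so the example runs: 100 features, 5 classes
data = np.random.random((1000, 100))
labels = to_categorical(np.random.randint(5, size=(1000,)), num_classes=5)

inputs = Input(shape=(100,))
# Each layer is called on a tensor and returns a new tensor
x = Dense(4, activation='relu')(inputs)
predictions = Dense(5, activation='softmax')(x)

# The model is defined by its input and output tensors
model = Model(inputs=inputs, outputs=predictions)
model.compile(loss='categorical_crossentropy',
              optimizer='sgd',
              metrics=['accuracy'])
model.fit(data, labels)
```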
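What the functional graph computes can be spelled out without Keras. The NumPy sketch below chains two hand-written layers the same way `Dense(4, activation='relu')` and `Dense(5, activation='softmax')` are chained on `inputs`, so each output row is a probability distribution over 5 classes. It is only an illustration under stated assumptions: the `dense` helper is hypothetical, and its weights come from a small normal distribution rather than Keras's default Glorot-uniform initializer.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def softmax(z):
    # Subtract the row maximum for numerical stability before exponentiating
    e = np.exp(z - z.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)


def dense(units, activation, input_dim, rng):
    """Hypothetical helper: a callable layer y = activation(x @ W + b)."""
    W = rng.normal(scale=0.1, size=(input_dim, units))
    b = np.zeros(units)
    return lambda x: activation(x @ W + b)


rng = np.random.default_rng(0)
# Mirrors the functional example: (100,) -> Dense(4, relu) -> Dense(5, softmax)
hidden_layer = dense(4, relu, input_dim=100, rng=rng)
output_layer = dense(5, softmax, input_dim=4, rng=rng)

x = rng.random((3, 100))  # a batch of 3 samples
predictions = output_layer(hidden_layer(x))
print(predictions.shape)        # (3, 5)
print(predictions.sum(axis=1))  # each row sums to 1, up to rounding
```

Calling a layer on the previous layer's output is exactly the composition that `Model(inputs=inputs, outputs=predictions)` records as a graph.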

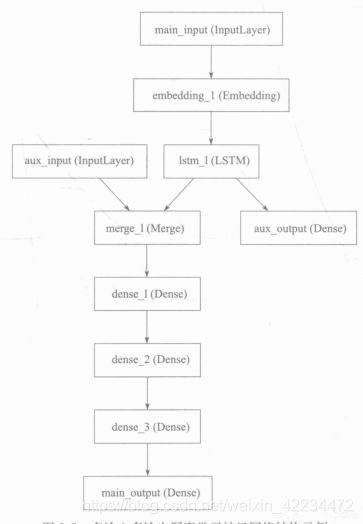

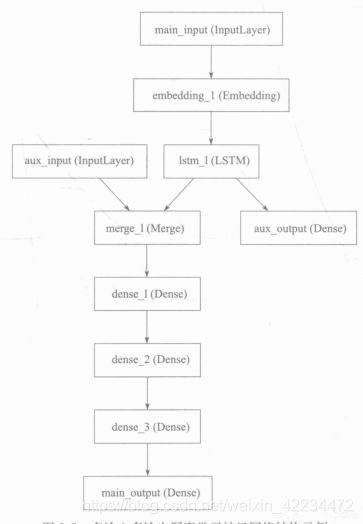

Example: a complex network with multiple inputs and outputs

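```python
import numpy as np
from keras.layers import Input, Dense, Embedding, LSTM, concatenate
from keras.models import Model

# Main input: sequences of 100 integer word indices in the range [0, 10000)
main_input = Input(shape=(100,), dtype='int32', name='main_input')
# Embed each index as a 512-dimensional vector (sequence length comes from Input)
x = Embedding(output_dim=512, input_dim=10000)(main_input)
# The LSTM encodes the whole sequence into one 32-dimensional vector
lstm_out = LSTM(32)(x)
# Auxiliary output attached directly to the LSTM output
auxiliary_output = Dense(1, activation='sigmoid', name='aux_output')(lstm_out)

# Auxiliary input with 5 extra features, joined with the LSTM output
auxiliary_input = Input(shape=(5,), name='aux_input')
x = concatenate([lstm_out, auxiliary_input])

# Stack of fully connected layers on top
x = Dense(64, activation='relu')(x)
x = Dense(64, activation='relu')(x)
x = Dense(64, activation='relu')(x)
main_output = Dense(1, activation='sigmoid', name='main_output')(x)

model = Model(inputs=[main_input, auxiliary_input],
              outputs=[main_output, auxiliary_output])
# The auxiliary loss counts 0.2 relative to the main loss
model.compile(optimizer='rmsprop',
              loss='binary_crossentropy',
              loss_weights=[1, 0.2])

# Hypothetical random data, only so the example runs
headline_data = np.random.randint(10000, size=(1000, 100))
additional_data = np.random.random((1000, 5))
labels = np.random.randint(2, size=(1000, 1))
model.fit([headline_data, additional_data],
          [labels, labels],
          epochs=50,
          batch_size=32)
```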
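With `loss_weights=[1, 0.2]`, Keras minimizes a weighted sum of the per-output losses: 1 times the main output's loss plus 0.2 times the auxiliary output's loss, both binary cross-entropy here. The pure-Python sketch below illustrates only that combination. The helper functions and prediction values are hypothetical, and the cross-entropy is a simplified version whose clipping details may differ slightly from Keras's own implementation.

```python
import math

EPSILON = 1e-7  # clip probabilities so log(0) never occurs


def binary_crossentropy(y_true, y_pred):
    """Simplified mean binary cross-entropy over 0/1 labels and probabilities."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, EPSILON), 1.0 - EPSILON)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)


def weighted_total_loss(losses, loss_weights):
    """Weighted sum of per-output losses, as loss_weights describes."""
    return sum(w * l for w, l in zip(loss_weights, losses))


labels = [1, 0, 1, 0]
main_pred = [0.9, 0.2, 0.8, 0.1]  # hypothetical main_output probabilities
aux_pred = [0.6, 0.5, 0.5, 0.4]   # hypothetical aux_output probabilities

main_loss = binary_crossentropy(labels, main_pred)
aux_loss = binary_crossentropy(labels, aux_pred)
total = weighted_total_loss([main_loss, aux_loss], [1, 0.2])
print(round(main_loss, 4), round(aux_loss, 4), round(total, 4))
```

Down-weighting the auxiliary head lets it act as a regularizing signal for the LSTM without dominating training of the main output.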