Audio/Video Development Task (2): Recording Audio with AudioRecord, Converting It, and Playing It Back

1. Some basics of audio and video files

Reference: https://blog.csdn.net/leixiaohua1020/article/details/18893769

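- Playback pipeline: a media file is first demuxed (its container is unpacked) and then decoded (audio decoding and video decoding). The resulting raw audio and video data is handed to the output devices for playback.
- Raw audio/video data:
  - Raw audio data: the samples an audio input device produces by sampling, e.g. PCM.
  - Raw video data: the pixel data of the frames, mainly in RGB or YUV.
- Encoding (codec) formats: compress raw audio/video data into audio and video streams to reduce the data size.
  - Audio encoding formats: AAC, MP3, etc. (WAV is often listed here, but strictly it is a container that usually holds uncompressed PCM.)
  - Video encoding formats: H.264, H.265, MPEG-4, etc.
- Container formats: pack already-encoded video and audio streams together in a defined layout. One container format usually supports several encoding formats.
  - Mainstream container formats: AVI, RMVB, MP4, FLV, etc.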

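- Key concepts (reference: https://blog.51cto.com/ticktick/1748506):
  - Sample rate: converting an analog audio signal into a digital one requires sampling. The higher the sampling frequency, the more data is needed to record a given stretch of audio, and the higher the audio quality. Human hearing covers roughly 20 Hz to 20 kHz. By the Nyquist sampling theorem the sampling rate must exceed twice the highest frequency to be captured, so it should be above 40 kHz to avoid distortion. Common sample rates: 8 kHz, 11.025 kHz, 16 kHz, 22.05 kHz, 37.8 kHz, 44.1 kHz, 48 kHz, 96 kHz, 192 kHz.
  - Quantization precision (bit depth): each sample is stored as a number whose size can be 4, 8, 16, or 32 bits, etc. More bits give a finer representation but a larger amount of data.
  - Channels: mono and stereo are the most common.
  - Audio frame: for convenience in processing and transmission, a chunk of 2.5 ms to 60 ms of data is conventionally treated as one audio frame. For example, with a sample rate of 8 kHz, 16-bit samples, 20 ms frames and 2 channels, one frame is 8000 × 16 bit × 0.02 s × 2 = 5120 bit = 640 bytes.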
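
The frame-size arithmetic above, and the data rate of the format recorded later in this article, can be checked with a few lines of Java. This is a minimal sketch: `PcmMath` and its methods are hypothetical helpers, not part of any Android API, and it assumes interleaved PCM whose bit depth is a multiple of 8.

```java
/** Hypothetical helper: size calculations for raw (uncompressed) PCM audio. */
public class PcmMath {

    /** Bytes per second = sampleRate * channels * bitsPerSample / 8. */
    public static int byteRate(int sampleRate, int channels, int bitsPerSample) {
        return sampleRate * channels * bitsPerSample / 8;
    }

    /** Bytes in one frame lasting frameMillis milliseconds. */
    public static int frameBytes(int sampleRate, int channels, int bitsPerSample, int frameMillis) {
        // Multiply before dividing so integer division does not truncate early
        return byteRate(sampleRate, channels, bitsPerSample) * frameMillis / 1000;
    }

    public static void main(String[] args) {
        // The example from the text: 8 kHz, 16-bit, stereo, 20 ms frames
        System.out.println(frameBytes(8000, 2, 16, 20)); // 640
        // The recording format used below: 44.1 kHz, mono, 16-bit
        System.out.println(byteRate(44100, 1, 16));      // 88200
    }
}
```

At 88,200 bytes per second, one minute of 44.1 kHz mono 16-bit PCM is about 5.3 MB, which is the size problem the encoding formats above address.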

2. Using the AudioRecord API

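- Android provides two classes for recording audio: AudioRecord and MediaRecorder. MediaRecorder is a higher-level wrapper that directly produces encoded audio, while AudioRecord delivers raw (PCM) audio.
- Steps for recording with AudioRecord:
  - Construct an AudioRecord object and configure it.
  - Create a buffer to hold the data read from the AudioRecord.
  - Write the data in the buffer to a file.

The code is as follows: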

```java
// Minimum internal buffer size for AudioRecord; the value depends on the device.
// Parameters: sample rate, channel configuration (CHANNEL_IN_MONO or CHANNEL_IN_STEREO), sample format
recordBuffsize = AudioRecord.getMinBufferSize(44100, AudioFormat.CHANNEL_IN_MONO, AudioFormat.ENCODING_PCM_16BIT);
// Create the AudioRecord. The first argument is the capture source, usually DEFAULT or MIC.
// The RECORD_AUDIO permission must be granted.
audioRecord = new AudioRecord(MediaRecorder.AudioSource.MIC, 44100, AudioFormat.CHANNEL_IN_MONO,
        AudioFormat.ENCODING_PCM_16BIT, recordBuffsize);
// Check whether initialization succeeded; stop here if it did not
if (audioRecord.getState() != AudioRecord.STATE_INITIALIZED) {
    audioRecord.release();
    audioRecord = null;
    recordBuffsize = 0;
    Log.i(TAG, "audiorecord init fail");
    return;
}
// Read buffer and target PCM file
final byte[] data = new byte[recordBuffsize];
final File file = new File(getExternalFilesDir(Environment.DIRECTORY_MUSIC), "test.pcm");
audioRecord.startRecording();
isRecording = true; // declare this field volatile, since the worker thread reads it
// Read from the AudioRecord buffer into the file on a separate thread.
// To stop: set isRecording = false, then call audioRecord.stop() and audioRecord.release().
new Thread(new Runnable() {
    @Override
    public void run() {
        // FileOutputStream overwrites any existing file; try-with-resources closes it
        try (FileOutputStream os = new FileOutputStream(file)) {
            while (isRecording) {
                // read() returns the number of bytes read, or a negative error code
                int read = audioRecord.read(data, 0, recordBuffsize);
                if (read > 0) {
                    os.write(data, 0, read); // write only the bytes actually read
                }
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
        Log.i(TAG, "run: close file output stream !");
    }
}).start();
```
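
Because test.pcm has no header, its contents can only be interpreted together with the recording parameters. The sketch below, with hypothetical names, computes how long a PCM recording is from its byte count and decodes raw bytes into 16-bit samples. It assumes little-endian byte order, which is the native order on common Android hardware and the order WAV uses.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

/** Hypothetical helper for inspecting a headerless PCM recording such as test.pcm. */
public class PcmInspect {

    /** Duration in milliseconds of pcmBytes bytes of interleaved PCM data. */
    public static long durationMillis(long pcmBytes, int sampleRate, int channels, int bitsPerSample) {
        long bytesPerSecond = (long) sampleRate * channels * bitsPerSample / 8;
        return pcmBytes * 1000 / bytesPerSecond;
    }

    /** Decodes the first length bytes as 16-bit little-endian samples; a trailing odd byte is ignored. */
    public static short[] toSamples(byte[] data, int length) {
        ByteBuffer buf = ByteBuffer.wrap(data, 0, length).order(ByteOrder.LITTLE_ENDIAN);
        short[] samples = new short[length / 2];
        buf.asShortBuffer().get(samples); // the view inherits the little-endian order
        return samples;
    }

    /** Largest absolute sample value, e.g. to check that the microphone picked anything up. */
    public static int peak(short[] samples) {
        int max = 0;
        for (short s : samples) {
            max = Math.max(max, Math.abs((int) s));
        }
        return max;
    }

    public static void main(String[] args) {
        System.out.println(durationMillis(88200, 44100, 1, 16)); // 1000
        byte[] bytes = {0x01, 0x00, (byte) 0xFF, (byte) 0xFF, 0x00, (byte) 0x80};
        short[] s = toSamples(bytes, bytes.length);
        System.out.println(s[0] + " " + s[1] + " " + s[2]);     // 1 -1 -32768
        System.out.println(peak(s));                            // 32768
    }
}
```

Passing the count returned by `audioRecord.read()` as `length` ensures that only freshly read bytes are decoded.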

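Playing the PCM file with AudioTrack follows steps similar to recording:

- Initialize an AudioTrack object.
- Create a buffer for the PCM data read from the file.
- Write the data in the buffer to the AudioTrack for playback.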

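The code is as follows:

```java
public void streamPlayModeStart() {
    // Stop any existing playback thread
    //closeThread();
    Log.i(TAG, "streamPlayModeStart");
    if (audioTrack == null) {
        trackBuffersize = AudioTrack.getMinBufferSize(44100, AudioFormat.CHANNEL_OUT_MONO, AudioFormat.ENCODING_PCM_16BIT);
        Log.i(TAG, "trackBuffersize:" + trackBuffersize);
        /*
         * Playback mode: MODE_STATIC or MODE_STREAM
         * MODE_STATIC: all audio data is written to the AudioTrack once, before playback starts;
         *              suited to short sounds that fit in memory.
         * MODE_STREAM: data is written continuously with write() while playing,
         *              so the whole file never has to be loaded into memory.
         */
        audioTrack = new AudioTrack(AudioManager.STREAM_MUSIC, 44100, AudioFormat.CHANNEL_OUT_MONO,
                AudioFormat.ENCODING_PCM_16BIT, trackBuffersize, AudioTrack.MODE_STREAM);
        audioTrack.play();
        // Start the playback thread
        isPlaying = true;
        startPlayThread = new Thread(playStartRunnable);
        startPlayThread.start();
    }
}

Runnable playStartRunnable = new Runnable() {
    @Override
    public void run() {
        File file = new File(path); // path of the recorded test.pcm
        if (file.exists()) {
            // try-with-resources closes the input stream when playback ends
            try (FileInputStream fileInputStream = new FileInputStream(file)) {
                byte[] tempBuffer = new byte[trackBuffersize];
                int readCount;
                // read() returns -1 at end of file
                while (isPlaying && (readCount = fileInputStream.read(tempBuffer)) != -1) {
                    Log.i(TAG, "readcount: " + readCount);
                    if (audioTrack != null && readCount > 0) {
                        // In MODE_STREAM this call blocks until the data has been queued
                        audioTrack.write(tempBuffer, 0, readCount);
                    }
                }
            } catch (FileNotFoundException e) {
                Log.i(TAG, "FileNotFoundException");
                e.printStackTrace();
            } catch (IOException e) {
                Log.i(TAG, "IOException");
                e.printStackTrace();
            }
        }
        isPlaying = false; // playback finished or was stopped
    }
};
```

Note: AudioTrack has two playback modes, MODE_STATIC and MODE_STREAM; see the comments in the code. The stream-type constructor used here has been deprecated since API level 26 in favor of AudioTrack.Builder, but it still works. Once playback is finished, call audioTrack.stop() and audioTrack.release() and set the field back to null. Otherwise the `audioTrack == null` check prevents the file from being played a second time.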
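
The core of MODE_STREAM playback is a loop that reads a chunk from the file and hands exactly the bytes read to the player. The sketch below isolates that loop in plain Java so it can be run off-device. `PcmSink` is a hypothetical interface standing in for `AudioTrack.write(byte[], int, int)`, and the `BooleanSupplier` plays the role of the `isPlaying` flag.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.util.ArrayList;
import java.util.List;
import java.util.function.BooleanSupplier;

public class StreamPump {

    /** Hypothetical stand-in for AudioTrack.write(byte[], int, int). */
    public interface PcmSink {
        void write(byte[] buffer, int offset, int size);
    }

    /** Copies the stream to the sink in bufferSize chunks while keepGoing is true; returns bytes written. */
    public static long pump(InputStream in, PcmSink sink, int bufferSize, BooleanSupplier keepGoing)
            throws IOException {
        byte[] buffer = new byte[bufferSize];
        long total = 0;
        int read;
        // read() returns -1 at end of stream; only the first `read` bytes of the buffer are valid
        while (keepGoing.getAsBoolean() && (read = in.read(buffer)) != -1) {
            if (read > 0) {
                sink.write(buffer, 0, read);
                total += read;
            }
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        List<Integer> chunkSizes = new ArrayList<>();
        long total = pump(new ByteArrayInputStream(new byte[10]),
                (buf, off, size) -> chunkSizes.add(size), 4, () -> true);
        System.out.println(total + " " + chunkSizes); // 10 [4, 4, 2]
    }
}
```

The last chunk is shorter than the buffer, which is why the size passed to the sink must be the read count rather than the buffer length. Stopping works as with `isPlaying`: once the flag turns false, the loop exits after the current chunk.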

3. Converting the PCM file to a WAV file

-

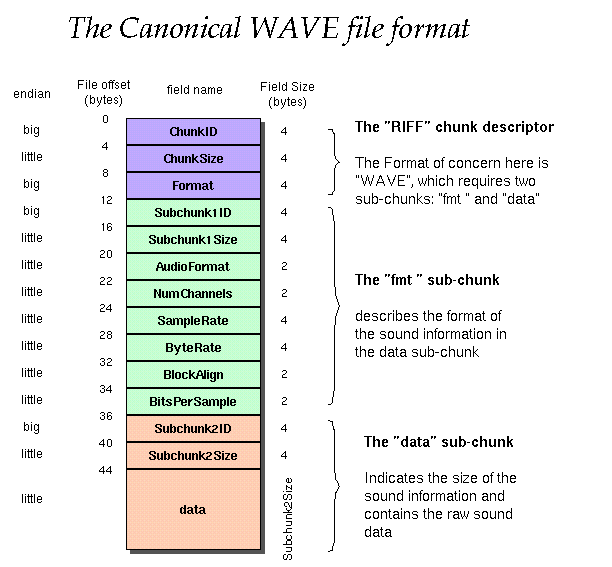

WAV文件的格式:参考:https://www.cnblogs.com/ranson7zop/p/7657874.html

-

As the format shows, a WAV file is just PCM data with a WAV header in front of it, so the conversion is plain file I/O. My approach: first reserve 44 bytes at the start of the output file for the header and write the audio data after them, then use RandomAccessFile to move the file pointer back to the beginning and write the header, whose size fields are known once all the data has been copied. (Without the reserved space, the header would overwrite the first 44 bytes of audio instead of being prepended.) The code is as follows:

```java
public void convertPcm2Wav() {
    WavFileHeader header = new WavFileHeader(44100, 16, 1);
    File file = new File(path);
    File wavfile = new File(getExternalFilesDir(Environment.DIRECTORY_MUSIC), "test.wav");
    if (!file.exists()) {
        Log.e(TAG, "PCM file does not exist");
        return;
    }
    // Write the data chunk; FileOutputStream overwrites any existing test.wav
    byte[] tempBuffer = new byte[1024];
    int size = 0;
    int once;
    try (FileInputStream inputStream = new FileInputStream(file);
         FileOutputStream outputStream = new FileOutputStream(wavfile)) {
        // Reserve room for the 44-byte header, filled in later by writeToFile()
        outputStream.write(new byte[WavFileHeader.HEADER_SIZE]);
        while ((once = inputStream.read(tempBuffer)) != -1) {
            outputStream.write(tempBuffer, 0, once);
            size += once;
        }
    } catch (FileNotFoundException e) {
        Log.e(TAG, "PCM file not found");
        e.printStackTrace();
        return;
    } catch (IOException e) {
        Log.e(TAG, "Error reading or writing file");
        e.printStackTrace();
        return;
    }
    header.mAudiodata = size;
    header.mChunkSize = 36 + size; // "WAVE" (4) + fmt chunk (24) + data chunk header (8) = 36 bytes
    header.writeToFile(wavfile);
}
```

WavFileHeader.java:

```java
import java.io.File;
import java.io.IOException;
import java.io.RandomAccessFile;
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

public class WavFileHeader {
    public static final int HEADER_SIZE = 44; // size of the canonical PCM WAV header in bytes

    //------------------------- RIFF chunk -------------------------
    public byte[] mChunkID = {'R', 'I', 'F', 'F'};
    public int mChunkSize = 0;        // size from the next byte to the end of the file: file size = mChunkSize + 8
    public byte[] mFormat = {'W', 'A', 'V', 'E'};
    //------------------------- fmt chunk --------------------------
    public byte[] mSubChunk1ID = {'f', 'm', 't', ' '};
    public int mSubChunk1Size = 16;   // fmt chunk body size, not counting its ID and size fields
    public short mAudioFormat = 1;    // format tag: 1 = PCM
    public short mNumChannel = 1;     // number of channels
    public int mSampleRate = 44100;   // sample rate
    public int mByteRate = 0;         // bytes per second = SampleRate * NumChannels * BitsPerSample / 8
    public short mBlockAlign = 0;     // bytes per sample frame = NumChannels * BitsPerSample / 8
    public short mBitsPerSample = 16; // bits per sample: 8, 16, etc.
    //------------------------- data chunk header ------------------
    public byte[] mSubChunk2ID = {'d', 'a', 't', 'a'};
    public int mAudiodata = 0;        // size of the audio data in bytes

    public WavFileHeader() {
        this(44100, 16, 1); // defaults, so mByteRate and mBlockAlign are not left at 0
    }

    public WavFileHeader(int sampleRateInHz, int bitsPerSample, int channels) {
        mSampleRate = sampleRateInHz;
        mBitsPerSample = (short) bitsPerSample;
        mNumChannel = (short) channels;
        mByteRate = mSampleRate * mNumChannel * mBitsPerSample / 8;
        mBlockAlign = (short) (mNumChannel * mBitsPerSample / 8);
    }

    /** Moves to the start of the file and overwrites the 44 reserved bytes with the header. */
    public void writeToFile(File wavFile) {
        try (RandomAccessFile raf = new RandomAccessFile(wavFile, "rw")) {
            raf.seek(0);
            raf.write(mChunkID);
            raf.write(intToByteArray(mChunkSize));
            raf.write(mFormat);
            raf.write(mSubChunk1ID);
            raf.write(intToByteArray(mSubChunk1Size));
            raf.write(shortToByteArray(mAudioFormat));
            raf.write(shortToByteArray(mNumChannel));
            raf.write(intToByteArray(mSampleRate));
            raf.write(intToByteArray(mByteRate));
            raf.write(shortToByteArray(mBlockAlign));
            raf.write(shortToByteArray(mBitsPerSample));
            raf.write(mSubChunk2ID);
            raf.write(intToByteArray(mAudiodata));
        } catch (IOException e) { // also covers FileNotFoundException
            e.printStackTrace();
        }
    }

    // WAV stores all multi-byte fields in little-endian order
    private static byte[] intToByteArray(int data) {
        return ByteBuffer.allocate(4).order(ByteOrder.LITTLE_ENDIAN).putInt(data).array();
    }

    private static byte[] shortToByteArray(short data) {
        return ByteBuffer.allocate(2).order(ByteOrder.LITTLE_ENDIAN).putShort(data).array();
    }

    private static short byteArrayToShort(byte[] b) {
        return ByteBuffer.wrap(b).order(ByteOrder.LITTLE_ENDIAN).getShort();
    }

    private static int byteArrayToInt(byte[] b) {
        return ByteBuffer.wrap(b).order(ByteOrder.LITTLE_ENDIAN).getInt();
    }
}
```
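
Since the PCM file's length is already known before conversion (via `file.length()`), a different technique also works: build the header up front and write it before the data, with no seek-back step. The sketch below shows that variant for the same canonical 44-byte PCM layout. The class and method names are hypothetical, and a single little-endian `ByteBuffer` replaces the per-field helper calls.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;

/** Hypothetical alternative: builds the canonical 44-byte PCM WAV header in memory. */
public class WavHeaderBuilder {

    public static byte[] build(int dataSize, int sampleRate, int channels, int bitsPerSample) {
        int blockAlign = channels * bitsPerSample / 8;
        int byteRate = sampleRate * blockAlign;
        ByteBuffer buf = ByteBuffer.allocate(44).order(ByteOrder.LITTLE_ENDIAN);
        buf.put("RIFF".getBytes(StandardCharsets.US_ASCII));
        buf.putInt(36 + dataSize);            // RIFF chunk size: total file size minus 8
        buf.put("WAVE".getBytes(StandardCharsets.US_ASCII));
        buf.put("fmt ".getBytes(StandardCharsets.US_ASCII));
        buf.putInt(16);                       // fmt chunk body size for PCM
        buf.putShort((short) 1);              // audio format: 1 = PCM
        buf.putShort((short) channels);
        buf.putInt(sampleRate);
        buf.putInt(byteRate);
        buf.putShort((short) blockAlign);
        buf.putShort((short) bitsPerSample);
        buf.put("data".getBytes(StandardCharsets.US_ASCII));
        buf.putInt(dataSize);                 // size of the PCM data that follows
        return buf.array();
    }

    public static void main(String[] args) {
        byte[] header = build(88200, 44100, 1, 16); // one second of 44.1 kHz mono 16-bit audio
        ByteBuffer view = ByteBuffer.wrap(header).order(ByteOrder.LITTLE_ENDIAN);
        System.out.println(header.length);   // 44
        System.out.println(view.getInt(4));  // 88236
        System.out.println(view.getInt(40)); // 88200
    }
}
```

In a converter, these 44 bytes would be written to the output stream first, followed by the PCM bytes copied as before. Because the size fields are Java `int`s, this sketch is limited to data sizes below 2 GB.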