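[OpenCV] Stereo Vision Testing with OpenCV

First of all, my write-up may be somewhat verbose, so please read it through carefully before you start.

The code is based on Zou Yuhua's stereo vision posts and on the "Camera calibration With OpenCV" and "Camera Calibration and 3D Reconstruction" sections of the OpenCV documentation, modified to fit my own setup.

If you just want to get something working quickly for an engineering project, this post should be enough. If you want to learn the subject systematically, please start with a textbook and use Zou Yuhua's blog as a supplement.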

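Preparing the Environment

Since this post is about stereo vision experiments, you need two cameras; for single-camera calibration, see my previous post. Below are the camera options I tried, and I will explain every point that needs special attention.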

Preparing the stereo camera

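- Buy two ordinary USB cameras. This was the first approach I used, but I ran into quite a few pitfalls.
  - Avoid wide-angle lenses: they have large distortion, which causes serious problems in the matching stage later.
  - Mount the two cameras as parallel as possible. Rectification can correct for misalignment, but it is still better if they are physically parallel to begin with.
  - Also pay attention to the baseline (the distance between the two cameras): neither too far apart nor too close. 5-10 cm is a reasonable range.
  - The resolution can be higher, but in my experiments I limited it to 320*240. In fact, you can calibrate at a high resolution and do the matching at a lower one.
- Buy an integrated stereo camera on Taobao.
  - Again, check whether it is wide-angle. This was a bit of a trap for me. I don't want this to read like an advertisement: the vendor's integration was very good, but the widest-angle model seemed to have problems, so I switched to a less wide one.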
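Calibrating at a high resolution and matching at a lower one only works if the camera matrix is rescaled to the new image size. Below is a minimal sketch in plain C++; the `Intrinsics` type and `scaleIntrinsics` function are hypothetical names. It assumes the low-resolution frame is a pure resize of the full frame (same field of view, no cropping), which is not true of every webcam. Under that assumption fx and cx scale with the width ratio, fy and cy with the height ratio, and the distortion coefficients stay unchanged because they act on normalized coordinates.

```cpp
#include <cassert>

// Pinhole intrinsics in pixels: the fx, fy, cx, cy entries of the 3x3 camera matrix.
// Hypothetical helper type for this sketch.
struct Intrinsics {
    double fx, fy, cx, cy;
};

// Rescale intrinsics calibrated at srcW x srcH for use at dstW x dstH.
// Assumes the smaller image is a plain resize of the full frame (no cropping);
// the half-pixel center offset is ignored. Distortion coefficients stay the same.
Intrinsics scaleIntrinsics(const Intrinsics& k, int srcW, int srcH, int dstW, int dstH) {
    assert(srcW > 0 && srcH > 0 && dstW > 0 && dstH > 0);
    const double sx = static_cast<double>(dstW) / srcW;
    const double sy = static_cast<double>(dstH) / srcH;
    return {k.fx * sx, k.fy * sy, k.cx * sx, k.cy * sy};
}
```

For example, intrinsics calibrated at 640*480 with fx = 600 and cx = 320 become fx = 300 and cx = 160 at 320*240. Both cameras would be scaled the same way, and the rectification would then be computed with the scaled matrices and the low-resolution image size.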

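Preparing the calibration board

- Buy a calibration board on Taobao. In a word: expensive. If money is no object, go ahead.
- Print one yourself, following the method in my previous post. Make sure to note exactly how many by how many it is.

The "how many by how many" is counted by the inner corners where black and white squares meet, not by the squares themselves. For example, the board used here is 9*6; write this down.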
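Because the size counts inner corners, a board with 9*6 inner corners has 10*7 squares. If you print your own board, a small plain C++ sketch like the following can render one as a binary PGM image, a simple grayscale format that most image viewers (and OpenCV's imread) can open. The function names, the file name and the pixels-per-square value are illustrative assumptions; what matters for calibration is the physical square size you measure after printing.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <fstream>
#include <string>
#include <vector>

// Render a chessboard with cornersX x cornersY inner corners.
// Inner corners = squares - 1 in each direction, so 9x6 corners -> 10x7 squares.
// Returns a row-major 8-bit grayscale image (0 = black, 255 = white).
std::vector<std::uint8_t> makeChessboard(int cornersX, int cornersY, int squarePx,
                                         int& width, int& height) {
    assert(cornersX > 0 && cornersY > 0 && squarePx > 0);
    const int squaresX = cornersX + 1;
    const int squaresY = cornersY + 1;
    width = squaresX * squarePx;
    height = squaresY * squarePx;
    std::vector<std::uint8_t> img(static_cast<std::size_t>(width) * height);
    for (int y = 0; y < height; ++y)
        for (int x = 0; x < width; ++x) {
            const bool black = ((x / squarePx) + (y / squarePx)) % 2 == 0;
            img[static_cast<std::size_t>(y) * width + x] = black ? 0 : 255;
        }
    return img;
}

// Write the image as a binary PGM (P5) file; returns false on I/O failure.
bool writePgm(const std::string& path, const std::vector<std::uint8_t>& img,
              int width, int height) {
    std::ofstream out(path, std::ios::binary);
    if (!out) return false;
    out << "P5\n" << width << ' ' << height << "\n255\n";
    out.write(reinterpret_cast<const char*>(img.data()),
              static_cast<std::streamsize>(img.size()));
    return static_cast<bool>(out);
}
```

For example, `makeChessboard(9, 6, 100, w, h)` followed by `writePgm("board.pgm", img, w, h)` gives a 1000*700 image. Leave a white margin around the board when printing: OpenCV's documentation for findChessboardCorners notes that it needs white space around the board.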

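Stereo calibration

The full source code for all the experiments is at the end. It is a bit messy, so I will go through it piece by piece. The goal of stereo calibration is to obtain the intrinsic parameters of each camera and the extrinsic parameters between the two (the rotation and translation from one camera to the other).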
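As a reminder of what the extrinsic parameters mean: in OpenCV's stereoCalibrate, the rotation R and translation T map a point from the first (left) camera's coordinate system into the second (right) camera's, P_r = R * P_l + T, and the length of T is the baseline, in the same unit as the square size. The plain C++ sketch below just applies that relation; the `Vec3`/`Mat3` aliases and both function names are hypothetical.

```cpp
#include <array>
#include <cmath>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;

// Map a 3D point from the left camera frame to the right camera frame: P_r = R * P_l + T.
Vec3 leftToRight(const Mat3& R, const Vec3& T, const Vec3& Pl) {
    Vec3 Pr{};
    for (int i = 0; i < 3; ++i) {
        Pr[i] = T[i];
        for (int j = 0; j < 3; ++j) Pr[i] += R[i][j] * Pl[j];
    }
    return Pr;
}

// Baseline = distance between the two optical centers = |T|.
double baseline(const Vec3& T) {
    return std::sqrt(T[0] * T[0] + T[1] * T[1] + T[2] * T[2]);
}
```

For an ideal horizontal rig with the right camera B mm to the right of the left one, R is the identity and T is about (-B, 0, 0), so a point 1 m straight ahead of the left camera sits at x = -B in the right camera's frame.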

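Reading from the stereo cameras

Here is the code for reading from both cameras. I changed the resolution of both cameras; whether this works depends on what the camera supports. Some cameras do not allow it, and changing the setting has no effect.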

```cpp
#include <iostream>
#include "opencv2/opencv.hpp"

using namespace std;
using namespace cv;

int main() {
    // Camera indices depend on your system: here 1 is the left camera and 0 the right one
    VideoCapture camera_l(1);
    VideoCapture camera_r(0);
    if (!camera_l.isOpened()) { cout << "No left camera!" << endl; return -1; }
    if (!camera_r.isOpened()) { cout << "No right camera!" << endl; return -1; }

    // Request 320x240; some cameras silently ignore this
    camera_l.set(CAP_PROP_FRAME_WIDTH, 320);
    camera_l.set(CAP_PROP_FRAME_HEIGHT, 240);
    camera_r.set(CAP_PROP_FRAME_WIDTH, 320);
    camera_r.set(CAP_PROP_FRAME_HEIGHT, 240);

    Mat frame_l, frame_r;
    while (true) {
        camera_l >> frame_l;
        camera_r >> frame_r;
        if (frame_l.empty() || frame_r.empty())  // skip failed grabs
            continue;
        imshow("Left Camera", frame_l);
        imshow("Right Camera", frame_r);
        char key = (char)waitKey(1);
        if (key == 27 || key == 'q' || key == 'Q')  // ESC or q quits
            break;
    }
    return 0;
}
```

Reading from a stereo camera would normally need no further comment, but while doing other experiments later I found that the cameras sometimes could not be read properly and failed to initialize. If you have confirmed that each camera can be read on its own and they only fail when opened together, there are two solutions:

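- Zou Yuhua's solution.
- Once each camera works on its own, change the initialization code by replacing the two if checks with the following two lines:

```cpp
// Keep retrying until each camera opens (note: this never exits if a camera is missing)
while (!camera_l.isOpened()) { camera_l.open(1); }
while (!camera_r.isOpened()) { camera_r.open(0); }
```

Notice that the right camera's image is slightly "shifted to the left" relative to the left camera's image. Think about why this happens. It is important: if this is not the case, all the work that follows will be wasted, because the matching algorithms rely on exactly this "left shift".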
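The reason for the "left shift": after rectification, a scene point at column x_l in the left image appears at column x_r = x_l - d in the right image, where the disparity d is non-negative and grows as the point gets closer. StereoBM and StereoSGBM with minDisparity = 0 (as in the matching code later) therefore search only to the left in the right image; if the left and right cameras are swapped, the true disparities become negative and matching fails, so swapping the two camera indices is the first thing to try. The toy plain C++ sketch below (the function `matchRow` is hypothetical, not OpenCV's implementation) does sum-of-absolute-differences (SAD) block matching along one rectified row to show that search direction.

```cpp
#include <cassert>
#include <climits>
#include <cstdlib>
#include <vector>

// For pixel x in the left row, find the disparity d in [0, maxDisp] that minimizes
// the SAD between a window around left[x] and a window around right[x - d].
// Both rows are assumed to come from a rectified pair (same image row).
int matchRow(const std::vector<int>& left, const std::vector<int>& right,
             int x, int maxDisp, int halfWin) {
    assert(left.size() == right.size());
    const int n = static_cast<int>(left.size());
    int bestD = -1;
    long bestCost = LONG_MAX;
    for (int d = 0; d <= maxDisp; ++d) {
        if (x - d - halfWin < 0 || x + halfWin >= n) continue;  // window out of bounds
        long cost = 0;
        for (int k = -halfWin; k <= halfWin; ++k)
            cost += std::abs(left[x + k] - right[x - d + k]);
        if (cost < bestCost) { bestCost = cost; bestD = d; }
    }
    return bestD;  // -1 if no window fits
}
```

If the right row is the left row shifted 3 pixels to the left, the best match for the peak in the left row is found at d = 3.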

Calibration

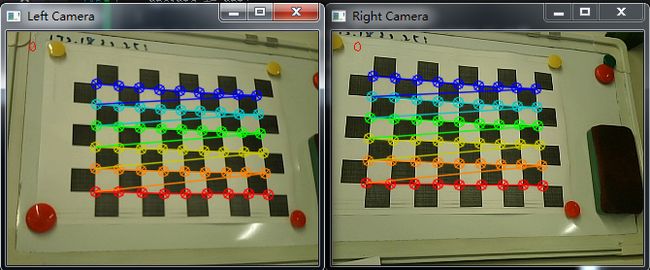

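The basic flow is: read from the left and right cameras and detect the chessboard corners in each image. When the complete set of corners is detected in both images at the same time, refine them to get more accurate (sub-pixel) corner positions and store them. After collecting enough pairs (20-30), compute the parameters and save them. Again, here is the code, followed by notes on some implementation details.

Take your time reading it.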

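The ChessboardStable function at the very beginning checks whether the chessboard is stable. In my experiments I held the stereo camera by hand, so there was always some shaking. If we only checked whether the corners are present, we might end up using blurry or unsteady images, which introduces large errors; checking stability over a queue of recent frames avoids this. To keep things quick and simple, I used a vector in place of a queue.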
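The original ChessboardStable is not reproduced in this section, so the following is only a sketch of the idea under my own assumptions: keep the corner sets of the last few frames in a std::deque (a real queue instead of a vector), and treat the board as stable when every corner moved at most a threshold number of pixels between consecutive frames. The class name `ChessboardStability`, the `Pt` stand-in for cv::Point2f, the window length and the threshold are all hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <deque>
#include <vector>

struct Pt { float x, y; };  // stand-in for cv::Point2f

// Keeps the corner sets of the last `window` frames and reports whether they are
// stable: each corner moved at most `maxShiftPx` between consecutive frames.
class ChessboardStability {
public:
    ChessboardStability(std::size_t window, float maxShiftPx)
        : window_(window), maxShift_(maxShiftPx) { assert(window >= 2); }

    // Push the corners detected in the newest frame; returns true once stable.
    bool update(const std::vector<Pt>& corners) {
        history_.push_back(corners);
        if (history_.size() > window_) history_.pop_front();
        if (history_.size() < window_) return false;  // not enough frames yet
        for (std::size_t f = 1; f < history_.size(); ++f) {
            const auto& prev = history_[f - 1];
            const auto& cur = history_[f];
            if (prev.size() != cur.size()) return false;
            for (std::size_t i = 0; i < cur.size(); ++i)
                if (std::hypot(cur[i].x - prev[i].x, cur[i].y - prev[i].y) > maxShift_)
                    return false;
        }
        return true;
    }

    void reset() { history_.clear(); }  // call when the corners are lost

private:
    std::size_t window_;
    float maxShift_;
    std::deque<std::vector<Pt>> history_;
};
```

In a stereo setup you would keep one instance per camera and accept a pair only when both report stable.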

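In the rest of the code, note that boardSize and squareSize must be set to match your calibration board. I simply printed one on A4 paper; each square measured 26 mm. Set these values according to your own board.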
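boardSize and squareSize are used to build the 3D coordinates of the corners on the board plane (Z = 0), which calibration pairs with the detected 2D corners; the unit of squareSize (mm here) becomes the unit of the translation T and of any depth computed later. A minimal plain C++ sketch follows; in OpenCV the same loop is usually written with cv::Point3f, and the names `Pt3` and `boardObjectPoints` are hypothetical. The row-by-row, left-to-right order assumes the corner order that findChessboardCorners documents.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Pt3 { float x, y, z; };  // stand-in for cv::Point3f

// 3D corner positions on the board plane (Z = 0), row by row, left to right,
// for a board with cols x rows inner corners and square side squareSize.
std::vector<Pt3> boardObjectPoints(int cols, int rows, float squareSize) {
    assert(cols > 0 && rows > 0 && squareSize > 0.f);
    std::vector<Pt3> pts;
    pts.reserve(static_cast<std::size_t>(cols) * rows);
    for (int i = 0; i < rows; ++i)
        for (int j = 0; j < cols; ++j)
            pts.push_back({j * squareSize, i * squareSize, 0.f});
    return pts;
}
```

With a 9*6 board and 26 mm squares this gives 54 points, the last one at (208, 130, 0); the same vector is reused for every stored image pair.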

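When calibrating, make sure to cover all orientations and sizes: tilt the board in different directions and hold it at different distances so that it appears at different scales in the image.

Once 30 pairs have been collected, the program moves on to the calibration step.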

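Stereo matching

Here is the complete code. Someone asked me earlier how to remove the black borders after rectification; pay attention to this function in the code:

```cpp
initUndistortRectifyMap(cameraMatrix[0], distCoeffs[0], R1, P1, imageSize, CV_16SC2, rmap[0][0], rmap[0][1]);
```

It computes the rectification maps. After that, you can compute the inscribed rectangle of the rectified image, i.e. the part of the rectified image without black borders; the edges of the original image are lost in the process.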
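In the matching code, the two valid-pixel rectangles (validRoi) are scaled by the display factor sf and intersected with Rect's & operator, and the disparity is computed only on that common region. The plain C++ sketch below mirrors that arithmetic with a hypothetical `Box` type so the logic can be checked in isolation; cv::Rect's operator& likewise returns an empty rectangle when there is no overlap. std::lround stands in for cvRound here (they can differ only on exact .5 ties). In OpenCV's stereo_calib sample these rectangles are the validPixROI1/validPixROI2 outputs of stereoRectify.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Box { int x, y, width, height; };  // stand-in for cv::Rect

// Scale a rectangle by the display factor sf (like the cvRound(... * sf) calls).
Box scaleBox(const Box& r, double sf) {
    assert(sf > 0.0);
    return {static_cast<int>(std::lround(r.x * sf)), static_cast<int>(std::lround(r.y * sf)),
            static_cast<int>(std::lround(r.width * sf)),
            static_cast<int>(std::lround(r.height * sf))};
}

// Intersection of two rectangles; all zeros if they do not overlap.
Box intersect(const Box& a, const Box& b) {
    const int x1 = std::max(a.x, b.x);
    const int y1 = std::max(a.y, b.y);
    const int x2 = std::min(a.x + a.width, b.x + b.width);
    const int y2 = std::min(a.y + a.height, b.y + b.height);
    if (x2 <= x1 || y2 <= y1) return {0, 0, 0, 0};
    return {x1, y1, x2 - x1, y2 - y1};
}
```

Cropping both rectified images to the same intersection keeps their rows aligned, which the matchers rely on.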

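Also, um, my own results this time were not very good; they were much better back when I was working in the lab than now while writing this post, so I hope you will share your own results. Judging from the horizontal lines in the figure below, the calibration still does not seem very good. Treat this post as an introduction to the workflow.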
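The green horizontal lines are a visual check: after good rectification, the same scene point lies on the same row in both images. To put a number on "not calibrated very well", one option (my suggestion, not part of the original code) is to take matched points, for example chessboard corners detected in both rectified images, and measure their mean absolute row difference. As a rule of thumb, values well under a pixel indicate good rectification. OpenCV's stereo_calib sample reports a related average epipolar error using computeCorrespondEpilines. The `Pt` type and `meanRowError` function are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Pt { float x, y; };  // stand-in for cv::Point2f

// Mean absolute vertical offset between corresponding points of a rectified pair.
// left[i] and right[i] must be the same physical point.
double meanRowError(const std::vector<Pt>& left, const std::vector<Pt>& right) {
    assert(left.size() == right.size() && !left.empty());
    double sum = 0.0;
    for (std::size_t i = 0; i < left.size(); ++i)
        sum += std::fabs(static_cast<double>(left[i].y) - right[i].y);
    return sum / static_cast<double>(left.size());
}
```

Two corners that are off by 1 and 3 rows, for instance, give a mean row error of 2 pixels, which would be clearly visible against the guide lines.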

```cpp
// Fragment of the matching program. camera_l/camera_r, frame_l/frame_r, rmap, validRoi,
// canvas, w, h, sf, isVerticalStereo, imgLeft/imgRight, ndisparities and SADWindowSize
// are set up earlier in the full code, which is not reproduced here.
Ptr<StereoBM> sbm = StereoBM::create(ndisparities, SADWindowSize);
sbm->setMinDisparity(0);
//sbm->setNumDisparities(64);
sbm->setTextureThreshold(10);
sbm->setDisp12MaxDiff(-1);
sbm->setPreFilterCap(31);
sbm->setUniquenessRatio(25);
sbm->setSpeckleRange(32);
sbm->setSpeckleWindowSize(100);

// minDisparity=0, numDisparities=64, blockSize=7, P1, P2, disp12MaxDiff=1,
// preFilterCap=63, uniquenessRatio=10, speckleWindowSize=100, speckleRange=32
Ptr<StereoSGBM> sgbm = StereoSGBM::create(0, 64, 7,
    10 * 7 * 7,
    40 * 7 * 7,
    1, 63, 10, 100, 32, StereoSGBM::MODE_SGBM);

Mat rimg, cimg;
Mat Mask;
while (1)
{
    camera_l >> frame_l;
    camera_r >> frame_r;
    if (frame_l.empty() || frame_r.empty())
        continue;

    // Rectify the left image and draw it into the left (or top) part of the canvas
    remap(frame_l, rimg, rmap[0][0], rmap[0][1], INTER_LINEAR);
    rimg.copyTo(cimg);
    Mat canvasPart1 = !isVerticalStereo ? canvas(Rect(w * 0, 0, w, h)) : canvas(Rect(0, h * 0, w, h));
    resize(cimg, canvasPart1, canvasPart1.size(), 0, 0, INTER_AREA);
    Rect vroi1(cvRound(validRoi[0].x*sf), cvRound(validRoi[0].y*sf),
        cvRound(validRoi[0].width*sf), cvRound(validRoi[0].height*sf));

    // Same for the right image
    remap(frame_r, rimg, rmap[1][0], rmap[1][1], INTER_LINEAR);
    rimg.copyTo(cimg);
    Mat canvasPart2 = !isVerticalStereo ? canvas(Rect(w * 1, 0, w, h)) : canvas(Rect(0, h * 1, w, h));
    resize(cimg, canvasPart2, canvasPart2.size(), 0, 0, INTER_AREA);
    Rect vroi2 = Rect(cvRound(validRoi[1].x*sf), cvRound(validRoi[1].y*sf),
        cvRound(validRoi[1].width*sf), cvRound(validRoi[1].height*sf));

    // Crop both images to the common valid region (this removes the black borders)
    Rect vroi = vroi1 & vroi2;
    imgLeft = canvasPart1(vroi).clone();
    imgRight = canvasPart2(vroi).clone();
    if (imgLeft.empty() || imgRight.empty())  // check before cvtColor, which throws on empty input
    {
        std::cout << " --(!) Error reading images " << std::endl; return -1;
    }

    rectangle(canvasPart1, vroi1, Scalar(0, 0, 255), 3, 8);
    rectangle(canvasPart2, vroi2, Scalar(0, 0, 255), 3, 8);
    // Guide lines for checking the rectification visually
    if (!isVerticalStereo)
        for (int j = 0; j < canvas.rows; j += 32)
            line(canvas, Point(0, j), Point(canvas.cols, j), Scalar(0, 255, 0), 1, 8);
    else
        for (int j = 0; j < canvas.cols; j += 32)
            line(canvas, Point(j, 0), Point(j, canvas.rows), Scalar(0, 255, 0), 1, 8);

    // Both matchers need 8-bit single-channel input (COLOR_BGR2GRAY replaces the old CV_BGR2GRAY)
    cvtColor(imgLeft, imgLeft, COLOR_BGR2GRAY);
    cvtColor(imgRight, imgRight, COLOR_BGR2GRAY);

    //-- Disparity images: CV_16S holding disparity * 16; compute() allocates them
    Mat imgDisparity16S, imgDisparity8U, sgbmDisp16S, sgbmDisp8U;

    sbm->compute(imgLeft, imgRight, imgDisparity16S);
    imgDisparity16S.convertTo(imgDisparity8U, CV_8UC1, 255.0 / 1000.0);
    cv::compare(imgDisparity16S, 0, Mask, CMP_GE);  // invalid disparities are negative
    applyColorMap(imgDisparity8U, imgDisparity8U, COLORMAP_HSV);
    Mat disparityShow;
    imgDisparity8U.copyTo(disparityShow, Mask);

    sgbm->compute(imgLeft, imgRight, sgbmDisp16S);
    sgbmDisp16S.convertTo(sgbmDisp8U, CV_8UC1, 255.0 / 1000.0);
    cv::compare(sgbmDisp16S, 0, Mask, CMP_GE);
    applyColorMap(sgbmDisp8U, sgbmDisp8U, COLORMAP_HSV);
    Mat sgbmDisparityShow;
    sgbmDisp8U.copyTo(sgbmDisparityShow, Mask);

    imshow("bmDisparity", disparityShow);
    imshow("sgbmDisparity", sgbmDisparityShow);
    imshow("rectified", canvas);
    char c = (char)waitKey(1);
    if (c == 27 || c == 'q' || c == 'Q')
        break;
}
return 0;
}  // end of main()
```

In addition, you will probably also need the code that loads the saved parameters and performs stereo matching; I have put it at the end as well.

After all, you can't recalibrate every time you use the cameras.
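Once the disparity is available, depth follows from Z = f * B / d, where f is the rectified focal length in pixels, B is the baseline (|T|, in the unit of squareSize) and d is the disparity in pixels. StereoBM and StereoSGBM return CV_16S disparities with 4 fractional bits, so the raw values must be divided by 16 first; with numDisparities = 64 the raw values reach roughly 64 * 16, which is why the display code scales by 255/1000. The two helper names below are hypothetical; the OpenCV route is reprojectImageTo3D with the Q matrix from stereoRectify, whose documentation likewise asks for the 16-bit disparity to be divided by 16 first.

```cpp
#include <cassert>
#include <cstdint>
#include <limits>

// Convert a raw StereoBM/StereoSGBM disparity (CV_16S, 4 fractional bits) to pixels.
double disparityPx(std::int16_t raw) { return raw / 16.0; }

// Depth along the optical axis: Z = f * B / d.
// f: rectified focal length in pixels, B: baseline in mm, d: disparity in pixels.
// Returns +infinity for non-positive (invalid or zero) disparities.
double depthFromDisparity(double f, double B, double d) {
    assert(f > 0.0 && B > 0.0);
    if (d <= 0.0) return std::numeric_limits<double>::infinity();
    return f * B / d;
}
```

With f = 300 px and B = 60 mm, a raw value of 160 (10 px) corresponds to 1800 mm and 20 px to 900 mm. Depth resolution gets coarser as distance grows, and a larger baseline gives more disparity per meter.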

Thank you for your patience in reading this post to the end.