Mask R-CNN demo source code walkthrough (TensorFlow version)

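- Running the demo

- Importing libraries and setting paths

- Configuration

- Creating the model and loading the trained weights

- Class names

- Running detection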

Paper link

Code link

Paper walkthrough

Reference blog

Reference blog

Reference blog

Running the demo

The code runs in a Jupyter notebook: demo.ipynb in /Mask_RCNN/samples.

Importing libraries and setting paths

import os
import sys
import random
import math
import numpy as np
import skimage.io
import matplotlib
import matplotlib.pyplot as plt

# Root directory of the project
ROOT_DIR = os.path.abspath("../")

# Add the project root to the path so the local mrcnn package is found
sys.path.append(ROOT_DIR)
from mrcnn import utils  # needed below for download_trained_weights()
import mrcnn.model as modellib
from mrcnn import visualize

# Make the local COCO config (samples/coco/coco.py) importable
sys.path.append(os.path.join(ROOT_DIR, "samples/coco/"))
import coco

# Draw figures inline in the Jupyter notebook
get_ipython().run_line_magic('matplotlib', 'inline')

# Directory to save logs and trained models
MODEL_DIR = os.path.join(ROOT_DIR, "logs")

# Path to the trained weights file
COCO_MODEL_PATH = os.path.join(ROOT_DIR, "mask_rcnn_coco.h5")

# Download the COCO-trained weights if the file does not exist yet
if not os.path.exists(COCO_MODEL_PATH):
    utils.download_trained_weights(COCO_MODEL_PATH)

# Directory of the test images
IMAGE_DIR = os.path.join(ROOT_DIR, "images")

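This is the first cell of the Jupyter notebook. If it raises an error, the cause is most often a wrong file path.

It imports the required libraries and sets up the paths; what each step does is explained in the code comments.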
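Because most errors in this cell come from wrong paths, it can help to check the expected layout before importing anything. The helper below is a hypothetical sketch (find_missing_paths is not part of the repository); the directory names are assumptions taken from the paths used in the cell above, and mask_rcnn_coco.h5 is left out because the cell downloads it automatically.

```python
import os


def find_missing_paths(root_dir):
    """Return the names of expected demo paths under root_dir that do not exist.

    The layout is an assumption based on the paths used in the first notebook
    cell; adjust it if your checkout differs.
    """
    expected = {
        "mrcnn package": os.path.join(root_dir, "mrcnn"),
        "COCO sample": os.path.join(root_dir, "samples", "coco", "coco.py"),
        "test images": os.path.join(root_dir, "images"),
    }
    # dicts keep insertion order, so the result order is stable
    return [name for name, path in expected.items() if not os.path.exists(path)]
```

In the notebook, find_missing_paths(ROOT_DIR) should return an empty list; any name it returns points to the path that needs fixing.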

Configuration

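class InferenceConfig(coco.CocoConfig):
    # Reuse the configuration of the COCO-trained model, but run inference
    # on one image at a time: batch size = GPU_COUNT * IMAGES_PER_GPU = 1
    GPU_COUNT = 1
    IMAGES_PER_GPU = 1

config = InferenceConfig()
config.display()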

The demo uses a model trained on COCO. Its configuration is defined in /Mask_RCNN/samples/coco/coco.py and /Mask_RCNN/mrcnn/config.py.
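The configuration classes only override class attributes; the derived values (BATCH_SIZE, IMAGE_SHAPE, IMAGE_META_SIZE) are computed in Config.__init__ in config.py. The sketch below uses simplified, hypothetical stand-in classes (MiniConfig and its subclasses are not part of mrcnn) with the same override chain as Config, CocoConfig and InferenceConfig, to show what the demo ends up with.

```python
class MiniConfig:
    """Hypothetical stand-in for mrcnn.config.Config with only a few attributes."""
    NAME = None
    GPU_COUNT = 1
    IMAGES_PER_GPU = 2
    NUM_CLASSES = 1
    IMAGE_RESIZE_MODE = "square"
    IMAGE_MIN_DIM = 800
    IMAGE_MAX_DIM = 1024
    IMAGE_CHANNEL_COUNT = 3

    def __init__(self):
        # Same computed attributes as Config.__init__
        self.BATCH_SIZE = self.IMAGES_PER_GPU * self.GPU_COUNT
        if self.IMAGE_RESIZE_MODE == "crop":
            side = self.IMAGE_MIN_DIM
        else:
            side = self.IMAGE_MAX_DIM
        self.IMAGE_SHAPE = (side, side, self.IMAGE_CHANNEL_COUNT)
        # id + original shape (3) + resized shape (3) + window (4) + scale + class flags
        self.IMAGE_META_SIZE = 1 + 3 + 3 + 4 + 1 + self.NUM_CLASSES


class MiniCocoConfig(MiniConfig):
    NAME = "coco"
    IMAGES_PER_GPU = 2
    NUM_CLASSES = 1 + 80  # background + 80 COCO classes


class MiniInferenceConfig(MiniCocoConfig):
    GPU_COUNT = 1
    IMAGES_PER_GPU = 1
```

For the demo this gives a batch size of 1 and an image meta vector of 1 + 3 + 3 + 4 + 1 + 81 = 93 values. Since the derived values are computed at construction time, override class attributes in a subclass rather than setting them on an instance after it has been created.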

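from mrcnn.config import Config


class CocoConfig(Config):
    # Give the configuration a recognizable name
    NAME = "coco"

    # A GPU with 12 GB of memory can handle 2 images; lower this on a GPU with less memory.
    IMAGES_PER_GPU = 2

    # Uncomment to train on 8 GPUs (the default is 1).
    # This setting is used later in the post when training on a custom dataset.
    # GPU_COUNT = 8

    # Number of classes: the 80 COCO classes + background
    NUM_CLASSES = 1 + 80

Although the COCO configuration is used here, the core settings live in /Mask_RCNN/mrcnn/config.py, which is analyzed below.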

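import numpy as np


class Config(object):
    """Base configuration class. For custom configurations, create a
    sub-class that inherits from this one and override the properties
    that need to be changed.
    """
    # Name the configuration, e.g. "COCO"
    NAME = None  # Override in sub-classes

    # Number of GPUs to use
    GPU_COUNT = 1

    # Number of images to train with on each GPU. A 12 GB GPU can typically
    # handle 2 images of 1024x1024 px.
    # Adjust based on your GPU memory and image sizes.
    IMAGES_PER_GPU = 2

    # Number of training steps per epoch. This does not need to match the size
    # of the training set. TensorBoard updates are saved at the end of each
    # epoch, so a smaller number means more frequent TensorBoard updates.
    # Validation stats are also computed at the end of each epoch and may take
    # a while, so don't set this too small, or a lot of time will be spent on
    # validation stats.
    STEPS_PER_EPOCH = 1000

    # Number of validation steps to run at the end of every training epoch.
    # A bigger number improves the accuracy of the validation stats but slows
    # down training.
    VALIDATION_STEPS = 50

    # Backbone network architecture. Supported values: resnet50, resnet101.
    # You can also pass a callable with the same signature as
    # model.resnet_graph. If you do, you must also supply a callable to
    # COMPUTE_BACKBONE_SHAPE.
    BACKBONE = "resnet101"

    # Only useful if you supply a callable to BACKBONE. Should compute the
    # shape of each layer of the FPN pyramid.
    # See model.compute_backbone_shapes in /Mask_RCNN/mrcnn/model.py
    COMPUTE_BACKBONE_SHAPE = None

    # The strides of each layer of the FPN pyramid. These values are based
    # on a ResNet-101 backbone.
    BACKBONE_STRIDES = [4, 8, 16, 32, 64]

    # Size of the fully-connected layers in the classification graph
    FPN_CLASSIF_FC_LAYERS_SIZE = 1024

    # Size of the top-down layers used to build the feature pyramid
    TOP_DOWN_PYRAMID_SIZE = 256

    # Number of classification classes (including background)
    NUM_CLASSES = 1  # Override in sub-classes

    # Length of the side of a square anchor, in pixels
    RPN_ANCHOR_SCALES = (32, 64, 128, 256, 512)

    # Ratios of anchors at each cell (width/height).
    # 1 is a square anchor; 0.5 is a tall anchor (width half the height).
    RPN_ANCHOR_RATIOS = [0.5, 1, 2]

    # Anchor stride
    # If 1, anchors are created for each cell in the backbone feature map.
    # If 2, anchors are created for every other cell, and so on.
    RPN_ANCHOR_STRIDE = 1

    # Non-max suppression threshold used to filter RPN proposals.
    # You can increase this during training to generate more proposals.
    RPN_NMS_THRESHOLD = 0.7

    # How many anchors per image to use for RPN training
    RPN_TRAIN_ANCHORS_PER_IMAGE = 256

    # ROIs kept after tf.nn.top_k and before non-maximum suppression
    PRE_NMS_LIMIT = 6000

    # ROIs kept after non-maximum suppression (training and inference)
    POST_NMS_ROIS_TRAINING = 2000
    POST_NMS_ROIS_INFERENCE = 1000

    # If enabled, resizes instance masks to a smaller size to reduce
    # memory load. Recommended when using high-resolution images.
    USE_MINI_MASK = True
    MINI_MASK_SHAPE = (56, 56)  # (height, width) of the mini-mask

    # Input image resizing
    # Generally, use the "square" resizing mode for training and predicting;
    # it works well in most cases. In this mode, images are scaled up so that
    # the short side = IMAGE_MIN_DIM, while making sure the scaling does not
    # make the long side > IMAGE_MAX_DIM. The image is then padded with zeros
    # to make it square, so that multiple images can be put in one batch.
    # Available resizing modes:
    # none:   No resizing or padding. Return the image unchanged.
    # square: Resize and pad with zeros to get a square image
    #         of size [max_dim, max_dim].
    # pad64:  Pad width and height with zeros to make them multiples of 64.
    #         If IMAGE_MIN_DIM or IMAGE_MIN_SCALE is not None, the image is
    #         scaled up before padding. IMAGE_MAX_DIM is ignored in this mode.
    #         The multiple of 64 ensures feature maps scale up and down
    #         smoothly across the 6 levels of the FPN pyramid (2**6 = 64).
    # crop:   Pick random crops from the image. First, scale the image based
    #         on IMAGE_MIN_DIM and IMAGE_MIN_SCALE, then pick a random crop of
    #         size IMAGE_MIN_DIM x IMAGE_MIN_DIM. Can be used in training only.
    #         IMAGE_MAX_DIM is not used in this mode.
    IMAGE_RESIZE_MODE = "square"
    IMAGE_MIN_DIM = 800
    IMAGE_MAX_DIM = 1024

    # Minimum scaling ratio. Checked after IMAGE_MIN_DIM and can force further
    # up-scaling. For example, if set to 2, images are scaled up to double the
    # width and height, or more, even if IMAGE_MIN_DIM does not require it.
    # However, in "square" mode, it can be overruled by IMAGE_MAX_DIM.
    IMAGE_MIN_SCALE = 0

    # Number of color channels per image. RGB = 3, grayscale = 1, RGB-D = 4
    # Changing this requires other changes in the code. See the wiki for
    # details: https://github.com/matterport/Mask_RCNN/wiki
    IMAGE_CHANNEL_COUNT = 3

    # Image mean (RGB)
    MEAN_PIXEL = np.array([123.7, 116.8, 103.9])

    # Number of ROIs per image to feed to the classifier/mask heads.
    # The Mask R-CNN paper uses 512, but often the RPN does not generate
    # enough positive proposals to fill this while keeping a 1:3
    # positive:negative ratio. You can increase the number of proposals by
    # adjusting the RPN NMS threshold.
    TRAIN_ROIS_PER_IMAGE = 200

    # Fraction of positive ROIs used to train the classifier/mask heads
    ROI_POSITIVE_RATIO = 0.33

    # Pooled ROIs
    POOL_SIZE = 7
    MASK_POOL_SIZE = 14

    # Shape of the output mask.
    # To change this you also need to change the mask branch of the network.
    MASK_SHAPE = [28, 28]

    # Maximum number of ground truth instances to use in one image
    MAX_GT_INSTANCES = 100

    # Bounding box refinement standard deviation for RPN and final detections.
    RPN_BBOX_STD_DEV = np.array([0.1, 0.1, 0.2, 0.2])
    BBOX_STD_DEV = np.array([0.1, 0.1, 0.2, 0.2])

    # Maximum number of final detections
    DETECTION_MAX_INSTANCES = 100

    # Minimum probability value to accept a detected instance.
    # ROIs below this threshold are skipped.
    DETECTION_MIN_CONFIDENCE = 0.7

    # Non-maximum suppression threshold for detection
    DETECTION_NMS_THRESHOLD = 0.3

    # Learning rate and momentum
    # The Mask R-CNN paper uses lr=0.02, but on TensorFlow it causes the
    # weights to explode, probably due to differences in the optimizer
    # implementation.
    LEARNING_RATE = 0.001
    LEARNING_MOMENTUM = 0.9

    # Weight decay regularization
    WEIGHT_DECAY = 0.0001

    # Loss weights for more precise optimization.
    # Can be used for an R-CNN training setup.
    LOSS_WEIGHTS = {
        "rpn_class_loss": 1.,
        "rpn_bbox_loss": 1.,
        "mrcnn_class_loss": 1.,
        "mrcnn_bbox_loss": 1.,
        "mrcnn_mask_loss": 1.
    }

    # Use RPN ROIs or externally generated ROIs for training.
    # Keep this True in most situations. Set it to False to train the head
    # branches on ROIs generated by code rather than by the RPN, for example
    # to debug the classifier head without having to train the RPN.
    USE_RPN_ROIS = True

    # Train or freeze batch normalization layers
    #     None: Train BN layers. This is the normal mode
    #     False: Freeze BN layers. Good when using a small batch size
    #     True: (don't use). Set layer in training mode even when predicting
    TRAIN_BN = False  # Defaults to False since the batch size is often small

    # Gradient norm clipping
    GRADIENT_CLIP_NORM = 5.0

    def __init__(self):
        """Set values of computed attributes."""
        # Effective batch size
        self.BATCH_SIZE = self.IMAGES_PER_GPU * self.GPU_COUNT

        # Input image size
        if self.IMAGE_RESIZE_MODE == "crop":
            self.IMAGE_SHAPE = np.array([self.IMAGE_MIN_DIM, self.IMAGE_MIN_DIM,
                                         self.IMAGE_CHANNEL_COUNT])
        else:
            self.IMAGE_SHAPE = np.array([self.IMAGE_MAX_DIM, self.IMAGE_MAX_DIM,
                                         self.IMAGE_CHANNEL_COUNT])

        # Image meta data length
        # See compose_image_meta() for details
        self.IMAGE_META_SIZE = 1 + 3 + 3 + 4 + 1 + self.NUM_CLASSES

    def display(self):
        """Display configuration values."""
        print("\nConfigurations:")
        for a in dir(self):
            if not a.startswith("__") and not callable(getattr(self, a)):
                print("{:30} {}".format(a, getattr(self, a)))
        print("\n")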
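The "square" resizing rules described in the comments above can be worked through by hand. The function below is a sketch of that arithmetic under the defaults IMAGE_MIN_DIM = 800 and IMAGE_MAX_DIM = 1024; the name square_resize_params is hypothetical, and the library's actual resizing code (utils.resize_image, as far as I know) also resamples the pixels, which is omitted here.

```python
def square_resize_params(h, w, min_dim=800, max_dim=1024, min_scale=0):
    """Scale factor, resized size and window for the "square" resize mode.

    Scale the short side up to min_dim, cap the long side at max_dim, then
    zero-pad to a max_dim x max_dim square. Returns
    (scale, (new_h, new_w), window) where window = (y1, x1, y2, x2) is the
    area of the padded image that holds real pixels.
    """
    scale = max(1, min_dim / min(h, w))   # scale up only, never down here
    if min_scale and scale < min_scale:
        scale = min_scale
    if round(max(h, w) * scale) > max_dim:  # long side would be too large
        scale = max_dim / max(h, w)
    new_h, new_w = round(h * scale), round(w * scale)
    top = (max_dim - new_h) // 2           # split the padding evenly
    left = (max_dim - new_w) // 2
    return scale, (new_h, new_w), (top, left, top + new_h, left + new_w)
```

For a 480x640 photo, scaling the short side to 800 would push the long side to about 1067 px, so the scale is capped at 1024 / 640 = 1.6; the resized 768x1024 image is then padded by 128 px at the top and at the bottom.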

For non-maximum suppression (NMS), see this article.
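Non-maximum suppression appears twice in this code: RPN_NMS_THRESHOLD = 0.7 for proposals and DETECTION_NMS_THRESHOLD = 0.3 for final detections. The snippet below is a plain-Python sketch of greedy NMS in the spirit of tf.image.non_max_suppression: sort by score, keep the best box, and discard later boxes whose IoU with a kept box exceeds the threshold. The helper names are hypothetical and the code favors clarity over speed.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (y1, x1, y2, x2)."""
    y1, x1 = max(a[0], b[0]), max(a[1], b[1])
    y2, x2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, y2 - y1) * max(0.0, x2 - x1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, scores, iou_threshold, max_output):
    """Indices of the boxes that survive NMS, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if len(keep) == max_output:
            break
        # Keep box i only if it does not overlap any kept box too much
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep
```

Two boxes with IoU of about 0.68 both survive the RPN threshold of 0.7 but not the detection threshold of 0.3, which is why the final detections are much sparser than the proposals.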

On learning rates and optimizers

Creating the model and loading the trained weights

# Create the model object in inference mode
model = modellib.MaskRCNN(mode="inference", model_dir=MODEL_DIR, config=config)

# Load the COCO-trained weights
model.load_weights(COCO_MODEL_PATH, by_name=True)

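We now come to the heart of Mask R-CNN: building the model. Some of its parameters were already covered in detail in the previous section; below we go through the construction of the network step by step, following its architecture.

File path: /Mask_RCNN/mrcnn/model.py

For background on Keras, see the following articles (this post also adds some comments of its own):

Keras study notes (1): the Keras Lambda layer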

# Module-level imports at the top of model.py used by the snippets below
import numpy as np
import tensorflow as tf
import keras.backend as K
import keras.layers as KL
import keras.engine as KE
import keras.models as KM
from mrcnn import utils


class MaskRCNN():
    def __init__(self, mode, config, model_dir):
        """
        mode: Either "training" or "inference"
        config: A sub-class of the Config class described above
        model_dir: Directory to save training logs and trained weights
        """
        assert mode in ['training', 'inference']
        self.mode = mode
        self.config = config
        self.model_dir = model_dir
        self.set_log_dir()
        self.keras_model = self.build(mode=mode, config=config)

    def build(self, mode, config):
        """Build the Mask R-CNN architecture.
            input_shape: The shape of the input image.
            mode: Either "training" or "inference". The inputs and
                outputs of the model differ accordingly.
        """
        assert mode in ['training', 'inference']

        # Check that the image size is divisible by 2 at least 6 times
        # (i.e. by 2**6 = 64) to avoid fractions when downscaling and upscaling.
        # For example, use 256, 320, 384, 448, 512, ... etc.
        h, w = config.IMAGE_SHAPE[:2]
        if h / 2**6 != int(h / 2**6) or w / 2**6 != int(w / 2**6):
            raise Exception("Image size must be dividable by 2 at least 6 times "
                            "to avoid fractions when downscaling and upscaling. "
                            "For example, use 256, 320, 384, 448, 512, ... etc. ")

        # Inputs: Keras input tensors for the image and its metadata
        input_image = KL.Input(
            shape=[None, None, config.IMAGE_SHAPE[2]], name="input_image")
        input_image_meta = KL.Input(shape=[config.IMAGE_META_SIZE],
                                    name="input_image_meta")
        # Extra inputs used only in training mode
        if mode == "training":
            # RPN GT
            input_rpn_match = KL.Input(
                shape=[None, 1], name="input_rpn_match", dtype=tf.int32)
            input_rpn_bbox = KL.Input(
                shape=[None, 4], name="input_rpn_bbox", dtype=tf.float32)

            # Detection GT (class IDs, bounding boxes, and masks)
            # 1. GT Class IDs (zero padded)
            input_gt_class_ids = KL.Input(
                shape=[None], name="input_gt_class_ids", dtype=tf.int32)
            # 2. GT Boxes in pixels (zero padded)
            # [batch, MAX_GT_INSTANCES, (y1, x1, y2, x2)] in image coordinates
            input_gt_boxes = KL.Input(
                shape=[None, 4], name="input_gt_boxes", dtype=tf.float32)
            # Normalize coordinates
            gt_boxes = KL.Lambda(lambda x: norm_boxes_graph(
                x, K.shape(input_image)[1:3]))(input_gt_boxes)
            # 3. GT Masks (zero padded)
            # [batch, height, width, MAX_GT_INSTANCES]
            if config.USE_MINI_MASK:
                input_gt_masks = KL.Input(
                    shape=[config.MINI_MASK_SHAPE[0],
                           config.MINI_MASK_SHAPE[1], None],
                    name="input_gt_masks", dtype=bool)
            else:
                input_gt_masks = KL.Input(
                    shape=[config.IMAGE_SHAPE[0], config.IMAGE_SHAPE[1], None],
                    name="input_gt_masks", dtype=bool)
        elif mode == "inference":
            # Anchors in normalized coordinates
            input_anchors = KL.Input(shape=[None, 4], name="input_anchors")

        # Build the shared convolutional layers.
        # Bottom-up layers
        # Returns a list of the last layer of each stage, 5 in total.
        # With stage5=True all five stages are built; C1 is discarded.
        if callable(config.BACKBONE):
            _, C2, C3, C4, C5 = config.BACKBONE(input_image, stage5=True,
                                                train_bn=config.TRAIN_BN)
        else:
            _, C2, C3, C4, C5 = resnet_graph(input_image, config.BACKBONE,
                                             stage5=True, train_bn=config.TRAIN_BN)
        # Top-down layers
        # TODO: add assert to verify feature map sizes match what's in config
        P5 = KL.Conv2D(config.TOP_DOWN_PYRAMID_SIZE, (1, 1), name='fpn_c5p5')(C5)
        P4 = KL.Add(name="fpn_p4add")([
            KL.UpSampling2D(size=(2, 2), name="fpn_p5upsampled")(P5),
            KL.Conv2D(config.TOP_DOWN_PYRAMID_SIZE, (1, 1), name='fpn_c4p4')(C4)])
        P3 = KL.Add(name="fpn_p3add")([
            KL.UpSampling2D(size=(2, 2), name="fpn_p4upsampled")(P4),
            KL.Conv2D(config.TOP_DOWN_PYRAMID_SIZE, (1, 1), name='fpn_c3p3')(C3)])
        P2 = KL.Add(name="fpn_p2add")([
            KL.UpSampling2D(size=(2, 2), name="fpn_p3upsampled")(P3),
            KL.Conv2D(config.TOP_DOWN_PYRAMID_SIZE, (1, 1), name='fpn_c2p2')(C2)])
        # Attach 3x3 conv to all P layers to get the final feature maps.
        P2 = KL.Conv2D(config.TOP_DOWN_PYRAMID_SIZE, (3, 3), padding="SAME", name="fpn_p2")(P2)
        P3 = KL.Conv2D(config.TOP_DOWN_PYRAMID_SIZE, (3, 3), padding="SAME", name="fpn_p3")(P3)
        P4 = KL.Conv2D(config.TOP_DOWN_PYRAMID_SIZE, (3, 3), padding="SAME", name="fpn_p4")(P4)
        P5 = KL.Conv2D(config.TOP_DOWN_PYRAMID_SIZE, (3, 3), padding="SAME", name="fpn_p5")(P5)
        # P6 is used for the 5th anchor scale in RPN. Generated by
        # subsampling from P5 with stride of 2.
        P6 = KL.MaxPooling2D(pool_size=(1, 1), strides=2, name="fpn_p6")(P5)

        # Note that P6 is used in RPN, but not in the classifier heads.
        rpn_feature_maps = [P2, P3, P4, P5, P6]
        mrcnn_feature_maps = [P2, P3, P4, P5]

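The code above handles initialization and builds the first convolutional layers (the ResNet backbone and the FPN); this part is fairly simple.

Sharing convolutional features across stages is a core idea of Fast R-CNN.

What follows is the RPN, which is easiest to understand with Faster R-CNN in mind.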
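Each top-down step above upsamples by 2 and adds the result to the next finer level, so the feature-map sizes must halve exactly from P2 to P6. That is why build() requires the image size to be divisible by 2**6 = 64. The sketch below computes the pyramid sizes for the default BACKBONE_STRIDES; it assumes the same ceil-based rule that compute_backbone_shapes in model.py uses, and the function names are hypothetical.

```python
import math

BACKBONE_STRIDES = [4, 8, 16, 32, 64]  # strides of P2..P6, as in Config


def image_size_ok(h, w):
    """Same condition as the check in MaskRCNN.build(): divisible by 2**6."""
    return h % 2**6 == 0 and w % 2**6 == 0


def pyramid_shapes(h, w, strides=BACKBONE_STRIDES):
    """(height, width) of each pyramid level P2..P6 for an h x w input."""
    return [(math.ceil(h / s), math.ceil(w / s)) for s in strides]


def upsampling_consistent(h, w):
    """True if every level is exactly twice the size of the next coarser one,
    so UpSampling2D(size=(2, 2)) followed by Add lines up without cropping."""
    shapes = pyramid_shapes(h, w)
    return all(fine == (2 * coarse[0], 2 * coarse[1])
               for fine, coarse in zip(shapes, shapes[1:]))
```

A 1024x1024 input gives levels of 256, 128, 64, 32 and 16 pixels per side. With 1000x1000 the levels would be 250, 125, 63, ..., and upsampling P4 (63) would give 126, which cannot be added to P3 (125).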

# Anchors
if mode == "training":
    anchors = self.get_anchors(config.IMAGE_SHAPE)
    # Duplicate across the batch dimension because Keras requires it
    # TODO: can this be optimized to avoid duplicating the anchors?
    anchors = np.broadcast_to(anchors, (config.BATCH_SIZE,) + anchors.shape)
    # A hack to get around Keras's bad support for constants
    anchors = KL.Lambda(lambda x: tf.Variable(anchors), name="anchors")(input_image)
else:
    anchors = input_anchors

# RPN Model
# Build the RPN. Its arguments are the anchor stride, the number of anchors
# per location (one per aspect ratio) and the depth of the input feature maps.
rpn = build_rpn_model(config.RPN_ANCHOR_STRIDE,
                      len(config.RPN_ANCHOR_RATIOS), config.TOP_DOWN_PYRAMID_SIZE)

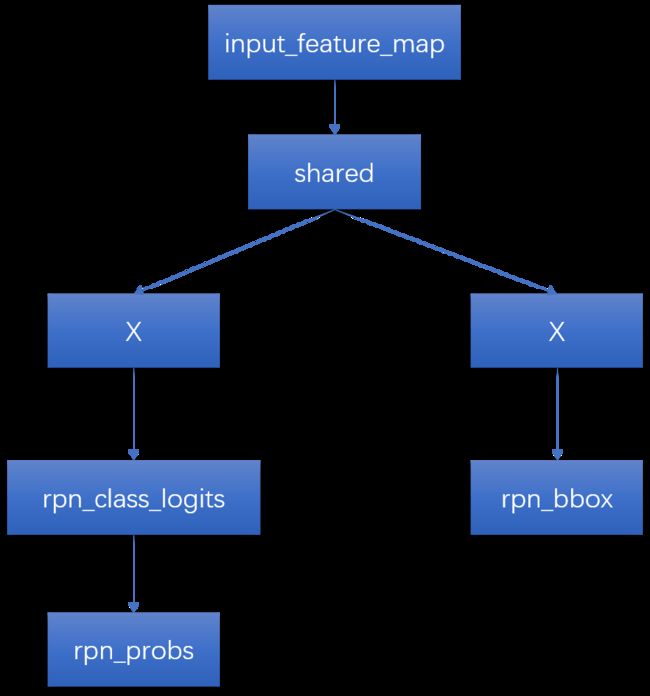
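The RPN predicts one score pair and one set of deltas per anchor, so it helps to see where the anchors come from and how many there are. The sketch below generates anchors the way the configuration describes them: one scale per level (RPN_ANCHOR_SCALES), three ratios (RPN_ANCHOR_RATIOS, width/height), a center every feature_stride pixels, ordered by row, then column, then ratio. The function names are hypothetical; the library's own version is in mrcnn/utils.py (generate_anchors and generate_pyramid_anchors) and is vectorized with NumPy.

```python
import math

SCALES = (32, 64, 128, 256, 512)  # RPN_ANCHOR_SCALES, one scale per level P2..P6
RATIOS = [0.5, 1, 2]              # RPN_ANCHOR_RATIOS, width / height
STRIDES = [4, 8, 16, 32, 64]      # BACKBONE_STRIDES


def level_anchors(scale, ratios, fm_h, fm_w, feature_stride, anchor_stride=1):
    """Anchors (y1, x1, y2, x2) in image pixels for one pyramid level."""
    anchors = []
    for y in range(0, fm_h, anchor_stride):          # rows of the feature map
        for x in range(0, fm_w, anchor_stride):      # then columns
            cy, cx = y * feature_stride, x * feature_stride  # center in pixels
            for r in ratios:                         # then ratios
                h = scale / math.sqrt(r)  # h * w == scale ** 2 for every ratio
                w = scale * math.sqrt(r)
                anchors.append((cy - h / 2, cx - w / 2, cy + h / 2, cx + w / 2))
    return anchors


def pyramid_anchors(image_size=1024, anchor_stride=1):
    """Anchors for all levels of a square image_size x image_size input."""
    anchors = []
    for scale, stride in zip(SCALES, STRIDES):
        side = math.ceil(image_size / stride)
        anchors += level_anchors(scale, RATIOS, side, side, stride, anchor_stride)
    return anchors
```

For a 1024x1024 input this gives 3 × (256² + 128² + 64² + 32² + 16²) = 261,888 anchors, and RPN_ANCHOR_STRIDE = 2 would cut that by a factor of 4. With ratio = width / height, an anchor with ratio 0.5 is taller than it is wide.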

The RPN itself is built by build_rpn_model, at line 874 of this file:

def build_rpn_model(anchor_stride, anchors_per_location, depth):
    """Builds a Keras model of the Region Proposal Network (RPN).
    It wraps the RPN graph so it can be used multiple times with shared
    weights.

    anchors_per_location: number of anchors per pixel in the feature map
    anchor_stride: Controls the density of anchors. Typically 1 (anchors for
                   every pixel in the feature map), or 2 (every other pixel).
    depth: Depth of the backbone feature map.

    Returns a Keras Model object. The model outputs, when called, are:
    rpn_class_logits: [batch, H * W * anchors_per_location, 2] Anchor classifier logits (before softmax)
    rpn_probs: [batch, H * W * anchors_per_location, 2] Anchor classifier probabilities.
    rpn_bbox: [batch, H * W * anchors_per_location, (dy, dx, log(dh), log(dw))] Deltas to be
              applied to anchors.
    """
    input_feature_map = KL.Input(shape=[None, None, depth],
                                 name="input_rpn_feature_map")
    outputs = rpn_graph(input_feature_map, anchors_per_location, anchor_stride)
    return KM.Model([input_feature_map], outputs, name="rpn_model")

rpn_graph, which it calls, is at line 830 of the same file:

# Inputs: the feature map, the number of anchors per location and the anchor stride
def rpn_graph(feature_map, anchors_per_location, anchor_stride):
    """Builds the computation graph of the Region Proposal Network.

    feature_map: backbone features [batch, height, width, depth]
    anchors_per_location: number of anchors per pixel in the feature map
    anchor_stride: Controls the density of anchors. Typically 1 (anchors for
                   every pixel in the feature map), or 2 (every other pixel).

    Returns:
        rpn_class_logits: [batch, H * W * anchors_per_location, 2] Anchor classifier logits (before softmax)
        rpn_probs: [batch, H * W * anchors_per_location, 2] Anchor classifier probabilities.
        rpn_bbox: [batch, H * W * anchors_per_location, (dy, dx, log(dh), log(dw))] Deltas to be
                  applied to anchors.
    """
    """
    keras.layers.convolutional.Conv2D(filters, kernel_size, strides=(1, 1), padding='valid',
        data_format=None, dilation_rate=(1, 1), activation=None, use_bias=True,
        kernel_initializer='glorot_uniform', bias_initializer='zeros', kernel_regularizer=None,
        bias_regularizer=None, activity_regularizer=None, kernel_constraint=None, bias_constraint=None)

    Parameters
    filters: number of convolution kernels (i.e. the output dimension).
    kernel_size: an integer or a list/tuple of 2 integers, the height and width of the kernel.
        A single integer means the same length in every spatial dimension.
    strides: an integer or a list/tuple of 2 integers, the stride of the convolution.
        A single integer means the same stride in every spatial dimension.
        Any strides value != 1 is incompatible with any dilation_rate value != 1.
    padding: zero-padding strategy, "valid" or "same". "valid" performs only valid
        convolutions, i.e. border positions where the kernel does not fit are dropped.
        "same" pads the input so the border positions are kept; with stride 1 the
        output shape equals the input shape.
    activation: activation function, the name of a predefined activation or an
        element-wise (Theano) function. If not specified, no activation is applied
        (i.e. linear activation a(x) = x).
    dilation_rate: an integer or a list/tuple of 2 integers, the dilation rate for
        dilated convolution. Any dilation_rate value != 1 is incompatible with any
        strides value != 1.
    data_format: a string, "channels_first" or "channels_last", the position of the
        channel dimension. It replaces image_dim_ordering from Keras 1.x:
        "channels_last" corresponds to the old "tf" and "channels_first" to the old "th".
        For a 128x128 RGB image, "channels_first" organizes the data as (3, 128, 128)
        and "channels_last" as (128, 128, 3). The default is the value set in
        ~/.keras/keras.json, or "channels_last" if it was never set.
    use_bias: boolean, whether to use a bias term.
    kernel_initializer: initializer for the kernel weights, the name of a predefined
        initializer or an initializer object. See initializers.
    bias_initializer: initializer for the bias vector, the name of a predefined
        initializer or an initializer object. See initializers.
    kernel_regularizer: regularizer applied to the kernel weights, a Regularizer object.
    bias_regularizer: regularizer applied to the bias vector, a Regularizer object.
    activity_regularizer: regularizer applied to the output, a Regularizer object.
    kernel_constraint: constraint applied to the kernel weights, a Constraints object.
    bias_constraint: constraint applied to the bias, a Constraints object.
    """
    # TODO: check if a stride of 2 causes alignment issues if the feature map is not even.
    # Shared convolutional base of the RPN
    shared = KL.Conv2D(512, (3, 3), padding='same', activation='relu',
                       strides=anchor_stride,
                       name='rpn_conv_shared')(feature_map)

    # Anchor Score. [batch, height, width, anchors per location * 2].
    x = KL.Conv2D(2 * anchors_per_location, (1, 1), padding='valid',
                  activation='linear', name='rpn_class_raw')(shared)

    # Reshape to [batch, anchors, 2]
    rpn_class_logits = KL.Lambda(
        lambda t: tf.reshape(t, [tf.shape(t)[0], -1, 2]))(x)

    # Softmax on last dimension of BG/FG.
    rpn_probs = KL.Activation(
        "softmax", name="rpn_class_xxx")(rpn_class_logits)

    # Bounding box refinement. [batch, H, W, anchors per location * depth]
    # where depth is (dy, dx, log(dh), log(dw))
    x = KL.Conv2D(anchors_per_location * 4, (1, 1), padding="valid",
                  activation='linear', name='rpn_bbox_pred')(shared)

    # Reshape to [batch, anchors, 4]
    rpn_bbox = KL.Lambda(lambda t: tf.reshape(t, [tf.shape(t)[0], -1, 4]))(x)

    return [rpn_class_logits, rpn_probs, rpn_bbox]

That is how the RPN is built in this code.

It is easier to follow together with the figure below:

# Run the RPN on each pyramid level
layer_outputs = []  # list of lists
for p in rpn_feature_maps:
    layer_outputs.append(rpn([p]))
# Concatenate layer outputs
# Convert from a list of per-level output lists to a list of
# per-output lists across levels.
# e.g. [[a1, b1, c1], [a2, b2, c2]] => [[a1, a2], [b1, b2], [c1, c2]]
output_names = ["rpn_class_logits", "rpn_class", "rpn_bbox"]
# zip() takes iterables, packs their corresponding elements into tuples and
# returns an iterator over those tuples (turned into a list here).
outputs = list(zip(*layer_outputs))
# Concatenate is a common operation for joining features, e.g. fusing the
# features of several extractors or merging output-layer information.
# Here it joins the per-level outputs along the anchor axis (axis=1).
outputs = [KL.Concatenate(axis=1, name=n)(list(o))
           for o, n in zip(outputs, output_names)]

rpn_class_logits, rpn_class, rpn_bbox = outputs
# Everything above is only a format conversion

# Generate proposals
# Proposals are [batch, N, (y1, x1, y2, x2)] in normalized coordinates
# and zero padded.
# Number of proposals to keep
proposal_count = config.POST_NMS_ROIS_TRAINING if mode == "training"\
    else config.POST_NMS_ROIS_INFERENCE

Next comes the set-up of the deduplication (non-maximum suppression) layer:

rpn_rois = ProposalLayer(
    proposal_count=proposal_count,
    nms_threshold=config.RPN_NMS_THRESHOLD,
    name="ROI",
    config=config)([rpn_class, rpn_bbox, anchors])
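The per-level RPN outputs above are flattened with tf.reshape(t, [batch, -1, 2]) and concatenated along axis 1. The plain-Python sketch below (one image, no batch dimension, hypothetical helper names) shows the output size of a padding='same' convolution and the row-major flattening. The flattened order is cell by cell and, within a cell, anchor by anchor, so anchors have to be generated in the same row, column, ratio order for predictions and anchors to line up.

```python
import math


def same_conv_output(size, stride):
    """Spatial output size of a Keras Conv2D with padding='same'."""
    return math.ceil(size / stride)


def flatten_rpn_output(fmap, values_per_anchor):
    """One image: [H][W][A * k] -> [H * W * A][k], row-major like tf.reshape."""
    flat = [v for row in fmap for cell in row for v in cell]
    return [flat[i:i + values_per_anchor]
            for i in range(0, len(flat), values_per_anchor)]


def anchor_index(y, x, k, width, anchors_per_location):
    """Position of anchor k of cell (y, x) in the flattened output."""
    return (y * width + x) * anchors_per_location + k


def softmax(logits):
    """Softmax over one (background, foreground) logit pair."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]
```

Concatenating the flattened levels along axis 1 simply places all P3 anchors after all P2 anchors, and so on up to P6.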

The deduplication is done by the following class, at line 255 of this file:

class ProposalLayer(KE.Layer):
    """Receives anchor scores and selects a subset to pass as proposals
    to the second stage. Filtering is done based on anchor scores and
    non-max suppression to remove overlaps. It also applies bounding
    box refinement deltas to anchors.

    Inputs:
        rpn_probs: [batch, num_anchors, (bg prob, fg prob)]
        rpn_bbox: [batch, num_anchors, (dy, dx, log(dh), log(dw))]
        anchors: [batch, num_anchors, (y1, x1, y2, x2)] anchors in normalized coordinates

    Returns:
        Proposals in normalized coordinates [batch, rois, (y1, x1, y2, x2)]
    """

    def __init__(self, proposal_count, nms_threshold, config=None, **kwargs):
        super(ProposalLayer, self).__init__(**kwargs)
        self.config = config
        self.proposal_count = proposal_count
        self.nms_threshold = nms_threshold

    def call(self, inputs):
        # Box Scores. Use the foreground class confidence. [Batch, num_rois, 1]
        scores = inputs[0][:, :, 1]
        # Box deltas [batch, num_rois, 4]
        deltas = inputs[1]
        deltas = deltas * np.reshape(self.config.RPN_BBOX_STD_DEV, [1, 1, 4])
        # Anchors
        anchors = inputs[2]

        # Improve performance by trimming to top anchors by score
        # and doing the rest on the smaller subset.
        pre_nms_limit = tf.minimum(self.config.PRE_NMS_LIMIT, tf.shape(anchors)[1])
        ix = tf.nn.top_k(scores, pre_nms_limit, sorted=True,
                         name="top_anchors").indices
        scores = utils.batch_slice([scores, ix], lambda x, y: tf.gather(x, y),
                                   self.config.IMAGES_PER_GPU)
        deltas = utils.batch_slice([deltas, ix], lambda x, y: tf.gather(x, y),
                                   self.config.IMAGES_PER_GPU)
        pre_nms_anchors = utils.batch_slice([anchors, ix], lambda a, x: tf.gather(a, x),
                                            self.config.IMAGES_PER_GPU,
                                            names=["pre_nms_anchors"])

        # Apply deltas to anchors to get refined anchors.
        # [batch, N, (y1, x1, y2, x2)]
        boxes = utils.batch_slice([pre_nms_anchors, deltas],
                                  lambda x, y: apply_box_deltas_graph(x, y),
                                  self.config.IMAGES_PER_GPU,
                                  names=["refined_anchors"])

        # Clip to image boundaries. Since we're in normalized coordinates,
        # clip to 0..1 range. [batch, N, (y1, x1, y2, x2)]
        window = np.array([0, 0, 1, 1], dtype=np.float32)
        boxes = utils.batch_slice(boxes,
                                  lambda x: clip_boxes_graph(x, window),
                                  self.config.IMAGES_PER_GPU,
                                  names=["refined_anchors_clipped"])

        # Filter out small boxes
        # According to Xinlei Chen's paper, this reduces detection accuracy
        # for small objects, so we're skipping it.

        # Non-max suppression
        def nms(boxes, scores):
            indices = tf.image.non_max_suppression(
                boxes, scores, self.proposal_count,
                self.nms_threshold, name="rpn_non_max_suppression")
            proposals = tf.gather(boxes, indices)
            # Pad if needed
            padding = tf.maximum(self.proposal_count - tf.shape(proposals)[0], 0)
            proposals = tf.pad(proposals, [(0, padding), (0, 0)])
            return proposals
        proposals = utils.batch_slice([boxes, scores], nms,
                                      self.config.IMAGES_PER_GPU)
        return proposals

    def compute_output_shape(self, input_shape):
        return (None, self.proposal_count, 4)
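The two geometric steps inside ProposalLayer, applying the deltas and clipping, are easy to check by hand. This sketch follows the (dy, dx, log(dh), log(dw)) parameterization from the docstrings and the multiplication by RPN_BBOX_STD_DEV in call(); the helper names are hypothetical plain-Python stand-ins for apply_box_deltas_graph and clip_boxes_graph, working on a single box.

```python
import math

RPN_BBOX_STD_DEV = [0.1, 0.1, 0.2, 0.2]


def apply_box_deltas(box, delta):
    """box = (y1, x1, y2, x2), delta = (dy, dx, log(dh), log(dw))."""
    height = box[2] - box[0]
    width = box[3] - box[1]
    center_y = box[0] + 0.5 * height
    center_x = box[1] + 0.5 * width
    # Shift the center proportionally to the box size, then rescale the box
    center_y += delta[0] * height
    center_x += delta[1] * width
    height *= math.exp(delta[2])
    width *= math.exp(delta[3])
    return (center_y - 0.5 * height, center_x - 0.5 * width,
            center_y + 0.5 * height, center_x + 0.5 * width)


def clip_box(box, window=(0.0, 0.0, 1.0, 1.0)):
    """Clip a box to the window; (0, 0, 1, 1) is the whole image in normalized coords."""
    wy1, wx1, wy2, wx2 = window
    y1, x1, y2, x2 = box
    return (min(max(y1, wy1), wy2), min(max(x1, wx1), wx2),
            min(max(y2, wy1), wy2), min(max(x2, wx1), wx2))


def refine(anchor, raw_delta):
    """Undo the std-dev normalization, apply the deltas, clip to [0, 1]."""
    delta = [d * s for d, s in zip(raw_delta, RPN_BBOX_STD_DEV)]
    return clip_box(apply_box_deltas(anchor, delta))
```

A raw RPN output of dy = 1.0 therefore moves the anchor by only 0.1 of its height, which is why the predicted deltas are scaled by RPN_BBOX_STD_DEV before use.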

if mode == "training":
    # Class ID mask to mark class IDs supported by the dataset the image
    # came from.
    active_class_ids = KL.Lambda(
        lambda x: parse_image_meta_graph(x)["active_class_ids"]
        )(input_image_meta)

    if not config.USE_RPN_ROIS:
        # Ignore predicted ROIs and use ROIs provided as an input.
        input_rois = KL.Input(shape=[config.POST_NMS_ROIS_TRAINING, 4],
                              name="input_roi", dtype=np.int32)
        # Normalize coordinates
        target_rois = KL.Lambda(lambda x: norm_boxes_graph(
            x, K.shape(input_image)[1:3]))(input_rois)
    else:
        target_rois = rpn_rois

    # Generate detection targets
    # Subsamples proposals and generates target outputs for training
    # Note that proposal class IDs, gt_boxes, and gt_masks are zero
    # padded. Equally, returned rois and targets are zero padded.
    rois, target_class_ids, target_bbox, target_mask =\
        DetectionTargetLayer(config, name="proposal_targets")([
            target_rois, input_gt_class_ids, gt_boxes, input_gt_masks])

    # Network Heads
    # TODO: verify that this handles zero padded ROIs
    mrcnn_class_logits, mrcnn_class, mrcnn_bbox =\
        fpn_classifier_graph(rois, mrcnn_feature_maps, input_image_meta,
                             config.POOL_SIZE, config.NUM_CLASSES,
                             train_bn=config.TRAIN_BN,
                             fc_layers_size=config.FPN_CLASSIF_FC_LAYERS_SIZE)

    mrcnn_mask = build_fpn_mask_graph(rois, mrcnn_feature_maps,
                                      input_image_meta,
                                      config.MASK_POOL_SIZE,
                                      config.NUM_CLASSES,
                                      train_bn=config.TRAIN_BN)

    # TODO: clean up (use tf.identity if necessary)
    output_rois = KL.Lambda(lambda x: x * 1, name="output_rois")(rois)

    # Losses
    rpn_class_loss = KL.Lambda(lambda x: rpn_class_loss_graph(*x), name="rpn_class_loss")(
        [input_rpn_match, rpn_class_logits])
    rpn_bbox_loss = KL.Lambda(lambda x: rpn_bbox_loss_graph(config, *x), name="rpn_bbox_loss")(
        [input_rpn_bbox, input_rpn_match, rpn_bbox])
    class_loss = KL.Lambda(lambda x: mrcnn_class_loss_graph(*x), name="mrcnn_class_loss")(
        [target_class_ids, mrcnn_class_logits, active_class_ids])
    bbox_loss = KL.Lambda(lambda x: mrcnn_bbox_loss_graph(*x), name="mrcnn_bbox_loss")(
        [target_bbox, target_class_ids, mrcnn_bbox])
    mask_loss = KL.Lambda(lambda x: mrcnn_mask_loss_graph(*x), name="mrcnn_mask_loss")(
        [target_mask, target_class_ids, mrcnn_mask])

    # Model
    inputs = [input_image, input_image_meta,
              input_rpn_match, input_rpn_bbox, input_gt_class_ids, input_gt_boxes, input_gt_masks]
    if not config.USE_RPN_ROIS:
        inputs.append(input_rois)
    outputs = [rpn_class_logits, rpn_class, rpn_bbox,
               mrcnn_class_logits, mrcnn_class, mrcnn_bbox, mrcnn_mask,
               rpn_rois, output_rois,
               rpn_class_loss, rpn_bbox_loss, class_loss, bbox_loss, mask_loss]
    model = KM.Model(inputs, outputs, name='mask_rcnn')
else:
    # Network Heads
    # Proposal classifier and BBox regressor heads
    mrcnn_class_logits, mrcnn_class, mrcnn_bbox =\
        fpn_classifier_graph(rpn_rois, mrcnn_feature_maps, input_image_meta,
                             config.POOL_SIZE, config.NUM_CLASSES,
                             train_bn=config.TRAIN_BN,
                             fc_layers_size=config.FPN_CLASSIF_FC_LAYERS_SIZE)

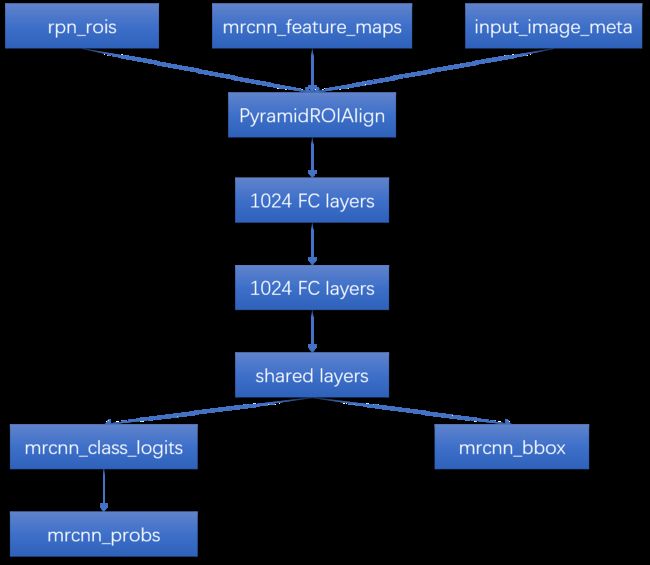

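fpn_classifier_graph is defined at line 900.

This function takes the proposals generated by the RPN, pools their features from the Mask R-CNN feature maps (P2 to P5) with ROIAlign, and predicts a class and a class-specific box refinement for each proposal. Duplicate boxes are removed later, by non-maximum suppression in DetectionLayer.

The code is as follows: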

def fpn_classifier_graph(rois, feature_maps, image_meta,
                         pool_size, num_classes, train_bn=True,
                         fc_layers_size=1024):
    """Builds the computation graph of the feature pyramid network
    classifier and regressor heads.

    Inputs and outputs:
    rois: [batch, num_rois, (y1, x1, y2, x2)] Proposal boxes in normalized
          coordinates.
    feature_maps: List of feature maps from different layers of the pyramid,
                  [P2, P3, P4, P5]. Each has a different resolution.
    image_meta: [batch, (meta data)] Image details. See compose_image_meta()
    pool_size: The width of the square feature map generated from ROI Pooling.
    num_classes: number of classes, which determines the depth of the results
    train_bn: Boolean. Train or freeze Batch Norm layers
    fc_layers_size: Size of the 2 FC layers

    Returns:
        logits: [batch, num_rois, NUM_CLASSES] classifier logits (before softmax)
        probs: [batch, num_rois, NUM_CLASSES] classifier probabilities
        bbox_deltas: [batch, num_rois, NUM_CLASSES, (dy, dx, log(dh), log(dw))] Deltas to apply to
                     proposal boxes
    """
    # ROI Pooling
    # Shape: [batch, num_rois, POOL_SIZE, POOL_SIZE, channels]
    x = PyramidROIAlign([pool_size, pool_size],
                        name="roi_align_classifier")([rois, image_meta] + feature_maps)
    # Two 1024 FC layers (implemented with Conv2D for consistency)
    x = KL.TimeDistributed(KL.Conv2D(fc_layers_size, (pool_size, pool_size), padding="valid"),
                           name="mrcnn_class_conv1")(x)
    x = KL.TimeDistributed(BatchNorm(), name='mrcnn_class_bn1')(x, training=train_bn)
    x = KL.Activation('relu')(x)
    x = KL.TimeDistributed(KL.Conv2D(fc_layers_size, (1, 1)),
                           name="mrcnn_class_conv2")(x)
    x = KL.TimeDistributed(BatchNorm(), name='mrcnn_class_bn2')(x, training=train_bn)
    x = KL.Activation('relu')(x)

    shared = KL.Lambda(lambda x: K.squeeze(K.squeeze(x, 3), 2),
                       name="pool_squeeze")(x)

    # Classifier head
    mrcnn_class_logits = KL.TimeDistributed(KL.Dense(num_classes),
                                            name='mrcnn_class_logits')(shared)
    mrcnn_probs = KL.TimeDistributed(KL.Activation("softmax"),
                                     name="mrcnn_class")(mrcnn_class_logits)

    # BBox head
    # [batch, num_rois, NUM_CLASSES * (dy, dx, log(dh), log(dw))]
    x = KL.TimeDistributed(KL.Dense(num_classes * 4, activation='linear'),
                           name='mrcnn_bbox_fc')(shared)
    # Reshape to [batch, num_rois, NUM_CLASSES, (dy, dx, log(dh), log(dw))]
    s = K.int_shape(x)
    mrcnn_bbox = KL.Reshape((s[1], num_classes, 4), name="mrcnn_bbox")(x)

    return mrcnn_class_logits, mrcnn_probs, mrcnn_bbox
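The comment "Two 1024 FC layers (implemented with Conv2D for consistency)" relies on a simple fact: a 'valid' convolution whose kernel is exactly as large as its input (pool_size x pool_size) has a single output position per filter, and that output is the same weighted sum a fully connected layer computes on the flattened input. The small check below illustrates this in plain Python, without bias terms and with hypothetical helper names.

```python
import random


def conv_valid_full(x, kernel):
    """'valid' Conv2D whose kernel covers the whole input, without bias.
    x: [H][W][C], kernel: [H][W][C][F] -> [F] (one 1x1 output position)."""
    H, W, C = len(x), len(x[0]), len(x[0][0])
    F = len(kernel[0][0][0])
    return [sum(x[i][j][c] * kernel[i][j][c][f]
                for i in range(H) for j in range(W) for c in range(C))
            for f in range(F)]


def flatten_hwc(t):
    """Row-major flatten over the first three axes (H, W, C)."""
    return [t[i][j][c] for i in range(len(t))
            for j in range(len(t[0])) for c in range(len(t[0][0]))]


def dense(v, weights):
    """Fully connected layer without bias: v [N], weights [N][F] -> [F]."""
    return [sum(v[n] * weights[n][f] for n in range(len(v)))
            for f in range(len(weights[0]))]


def random_tensor(shape, rng):
    """Nested lists of small random integers with the given shape."""
    if len(shape) == 1:
        return [rng.randint(-3, 3) for _ in range(shape[0])]
    return [random_tensor(shape[1:], rng) for _ in range(shape[0])]


rng = random.Random(0)
x = random_tensor([2, 2, 3], rng)          # a 2x2 "pooled ROI" with 3 channels
kernel = random_tensor([2, 2, 3, 4], rng)  # kernel size == pool size, 4 filters
```

Writing the layers as convolutions lets them be wrapped in TimeDistributed and followed by TimeDistributed(BatchNorm()) exactly like the other per-ROI layers; the two squeeze calls then drop the 1x1 spatial dimensions.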

The key piece here is PyramidROIAlign.

It is at line 344, shown below:

class PyramidROIAlign(KE.Layer):
    """Implements ROI Pooling on multiple levels of the feature pyramid.

    Params:
    - pool_shape: [pool_height, pool_width] of the output pooled regions. Usually [7, 7]

    Inputs:
    - boxes: [batch, num_boxes, (y1, x1, y2, x2)] in normalized
             coordinates. Possibly padded with zeros if not enough
             boxes to fill the array.
    - image_meta: [batch, (meta data)] Image details. See compose_image_meta()
    - feature_maps: List of feature maps from different levels of the pyramid.
                    Each is [batch, height, width, channels]

    Output:
    Pooled regions in the shape: [batch, num_boxes, pool_height, pool_width, channels].
    The width and height are those specific in the pool_shape in the layer
    constructor.
    """

    def __init__(self, pool_shape, **kwargs):
        super(PyramidROIAlign, self).__init__(**kwargs)
        self.pool_shape = tuple(pool_shape)

    def call(self, inputs):
        # Crop boxes [batch, num_boxes, (y1, x1, y2, x2)] in normalized coords
        boxes = inputs[0]

        # Image meta
        # Holds details about the image. See compose_image_meta()
        image_meta = inputs[1]

        # Feature Maps. List of feature maps from different level of the
        # feature pyramid. Each is [batch, height, width, channels]
        feature_maps = inputs[2:]

        # Assign each ROI to a level in the pyramid based on the ROI area.
        y1, x1, y2, x2 = tf.split(boxes, 4, axis=2)
        h = y2 - y1
        w = x2 - x1
        # Use shape of first image. Images in a batch must have the same size.
        image_shape = parse_image_meta_graph(image_meta)['image_shape'][0]
        # Equation 1 in the Feature Pyramid Networks paper. Account for
        # the fact that our coordinates are normalized here.
        # e.g. a 224x224 ROI (in pixels) maps to P4
        image_area = tf.cast(image_shape[0] * image_shape[1], tf.float32)
        roi_level = log2_graph(tf.sqrt(h * w) / (224.0 / tf.sqrt(image_area)))
        roi_level = tf.minimum(5, tf.maximum(
            2, 4 + tf.cast(tf.round(roi_level), tf.int32)))
        roi_level = tf.squeeze(roi_level, 2)

        # Loop through levels and apply ROI pooling to each. P2 to P5.
        pooled = []
        box_to_level = []
        for i, level in enumerate(range(2, 6)):
            ix = tf.where(tf.equal(roi_level, level))
            level_boxes = tf.gather_nd(boxes, ix)

            # Box indices for crop_and_resize.
            box_indices = tf.cast(ix[:, 0], tf.int32)

            # Keep track of which box is mapped to which level
            box_to_level.append(ix)

            # Stop gradient propagation to ROI proposals
            level_boxes = tf.stop_gradient(level_boxes)
            box_indices = tf.stop_gradient(box_indices)

            # Crop and Resize
            # From Mask R-CNN paper: "We sample four regular locations, so
            # that we can evaluate either max or average pooling. In fact,
            # interpolating only a single value at each bin center (without
            # pooling) is nearly as effective."
            #
            # Here we use the simplified approach of a single value per bin,
            # which is how it's done in tf.crop_and_resize()
            # Result: [batch * num_boxes, pool_height, pool_width, channels]
            pooled.append(tf.image.crop_and_resize(
                feature_maps[i], level_boxes, box_indices, self.pool_shape,
                method="bilinear"))

        # Pack pooled features into one tensor
        pooled = tf.concat(pooled, axis=0)

        # Pack box_to_level mapping into one array and add another
        # column representing the order of pooled boxes
        box_to_level = tf.concat(box_to_level, axis=0)
        box_range = tf.expand_dims(tf.range(tf.shape(box_to_level)[0]), 1)
        box_to_level = tf.concat([tf.cast(box_to_level, tf.int32), box_range],
                                 axis=1)

        # Rearrange pooled features to match the order of the original boxes
        # Sort box_to_level by batch then box index
        # TF doesn't have a way to sort by two columns, so merge them and sort.
        sorting_tensor = box_to_level[:, 0] * 100000 + box_to_level[:, 1]
        ix = tf.nn.top_k(sorting_tensor, k=tf.shape(
            box_to_level)[0]).indices[::-1]
        ix = tf.gather(box_to_level[:, 2], ix)
        pooled = tf.gather(pooled, ix)

        # Re-add the batch dimension
        shape = tf.concat([tf.shape(boxes)[:2], tf.shape(pooled)[1:]], axis=0)
        pooled = tf.reshape(pooled, shape)
        return pooled

    def compute_output_shape(self, input_shape):
        return input_shape[0][:2] + self.pool_shape + (input_shape[2][-1], )
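Two steps in PyramidROIAlign can be reproduced in plain Python: choosing the pyramid level of each ROI (Equation 1 of the FPN paper, adapted to normalized coordinates) and sampling one bilinear value per output bin, as tf.image.crop_and_resize does. The sketch below is single-channel and handles one box at a time; the function names are hypothetical, and crop_and_resize assumes the box lies inside the map and that both crop sizes are larger than 1.

```python
import math


def roi_level(h, w, image_h, image_w):
    """Pyramid level (2..5) for an ROI of normalized height h and width w.

    Follows PyramidROIAlign: 4 + round(log2(sqrt(h * w) / (224 / sqrt(area)))),
    clamped to [2, 5]. Python's round() rounds halves to even, like tf.round.
    """
    k = math.log2(math.sqrt(h * w) / (224.0 / math.sqrt(image_h * image_w)))
    return min(5, max(2, 4 + int(round(k))))


def bilinear(fmap, y, x):
    """Bilinear interpolation of a 2-D map at fractional pixel (y, x) >= 0."""
    H, W = len(fmap), len(fmap[0])
    y0, x0 = int(y), int(x)  # floor, since y and x are non-negative
    y1, x1 = min(y0 + 1, H - 1), min(x0 + 1, W - 1)
    dy, dx = y - y0, x - x0
    top = fmap[y0][x0] * (1 - dx) + fmap[y0][x1] * dx
    bottom = fmap[y1][x0] * (1 - dx) + fmap[y1][x1] * dx
    return top * (1 - dy) + bottom * dy


def crop_and_resize(fmap, box, crop_h, crop_w):
    """One box, one channel: a crop_h x crop_w grid of bilinear samples.

    box = (y1, x1, y2, x2) normalized. Sample positions follow the convention
    documented for tf.image.crop_and_resize:
    y = y1 * (H - 1) + i * (y2 - y1) * (H - 1) / (crop_h - 1).
    """
    H, W = len(fmap), len(fmap[0])
    by1, bx1, by2, bx2 = box
    out = []
    for i in range(crop_h):
        y = by1 * (H - 1) + i * (by2 - by1) * (H - 1) / (crop_h - 1)
        out.append([bilinear(fmap, y, bx1 * (W - 1) + j * (bx2 - bx1) * (W - 1) / (crop_w - 1))
                    for j in range(crop_w)])
    return out
```

On a 1024x1024 image, a 224x224 px ROI maps to P4, a 112 px ROI to P3 and a 448 px ROI to P5; ROIs smaller than about 79 px end up on P2 and ROIs larger than about 317 px on P5.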

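To recap, this is the last stage of the detection path of Mask R-CNN (classification and box regression); its flow is shown in the figure below.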

    # Detections
    # output is [batch, num_detections, (y1, x1, y2, x2, class_id, score)] in
    # normalized coordinates
    detections = DetectionLayer(config, name="mrcnn_detection")(
        [rpn_rois, mrcnn_class, mrcnn_bbox, input_image_meta])

DetectionLayer is defined at line 782:

class DetectionLayer(KE.Layer):
    """Takes classified proposal boxes and their bounding box deltas and
    returns the final detection boxes.

    Returns:
    [batch, num_detections, (y1, x1, y2, x2, class_id, class_score)] where
    coordinates are normalized.
    """

    def __init__(self, config=None, **kwargs):
        super(DetectionLayer, self).__init__(**kwargs)
        self.config = config

    def call(self, inputs):
        rois = inputs[0]
        mrcnn_class = inputs[1]
        mrcnn_bbox = inputs[2]
        image_meta = inputs[3]

        # Get windows of images in normalized coordinates. Windows are the area
        # in the image that excludes the padding.
        # Use the shape of the first image in the batch to normalize the window
        # because we know that all images get resized to the same size.
        m = parse_image_meta_graph(image_meta)
        image_shape = m['image_shape'][0]
        window = norm_boxes_graph(m['window'], image_shape[:2])

        # Run detection refinement graph on each item in the batch
        detections_batch = utils.batch_slice(
            [rois, mrcnn_class, mrcnn_bbox, window],
            lambda x, y, w, z: refine_detections_graph(x, y, w, z, self.config),
            self.config.IMAGES_PER_GPU)

        # Reshape output
        # [batch, num_detections, (y1, x1, y2, x2, class_id, class_score)] in
        # normalized coordinates
        return tf.reshape(
            detections_batch,
            [self.config.BATCH_SIZE, self.config.DETECTION_MAX_INSTANCES, 6])

    def compute_output_shape(self, input_shape):
        return (None, self.config.DETECTION_MAX_INSTANCES, 6)
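DetectionLayer delegates the real work to refine_detections_graph, which is not shown in this post. The plain-Python sketch below summarizes, as an assumption about what that function does, the steps involved: pick the highest-scoring class of each ROI, apply that class's deltas (scaled by BBOX_STD_DEV), clip to the image window, drop background and ROIs below DETECTION_MIN_CONFIDENCE, run per-class NMS with DETECTION_NMS_THRESHOLD and pad the result to DETECTION_MAX_INSTANCES rows. All names are hypothetical; this is a simplified sketch, not the library code.

```python
import math

BBOX_STD_DEV = [0.1, 0.1, 0.2, 0.2]
MIN_CONFIDENCE = 0.7   # DETECTION_MIN_CONFIDENCE
NMS_THRESHOLD = 0.3    # DETECTION_NMS_THRESHOLD
MAX_INSTANCES = 100    # DETECTION_MAX_INSTANCES


def _iou(a, b):
    inter = (max(0.0, min(a[2], b[2]) - max(a[0], b[0])) *
             max(0.0, min(a[3], b[3]) - max(a[1], b[1])))
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def _apply(box, d):
    h, w = box[2] - box[0], box[3] - box[1]
    cy, cx = box[0] + 0.5 * h + d[0] * h, box[1] + 0.5 * w + d[1] * w
    h, w = h * math.exp(d[2]), w * math.exp(d[3])
    return (cy - 0.5 * h, cx - 0.5 * w, cy + 0.5 * h, cx + 0.5 * w)


def refine_detections(rois, probs, deltas, window=(0.0, 0.0, 1.0, 1.0)):
    """rois: [N][4], probs: [N][num_classes], deltas: [N][num_classes][4].
    Returns MAX_INSTANCES rows of (y1, x1, y2, x2, class_id, score), zero padded."""
    candidates = []
    for roi, p, d in zip(rois, probs, deltas):
        class_id = max(range(len(p)), key=lambda c: p[c])  # best class per ROI
        score = p[class_id]
        if class_id == 0 or score < MIN_CONFIDENCE:         # background / low score
            continue
        delta = [v * s for v, s in zip(d[class_id], BBOX_STD_DEV)]  # class-specific
        y1, x1, y2, x2 = _apply(roi, delta)
        wy1, wx1, wy2, wx2 = window                           # clip to the window
        box = (min(max(y1, wy1), wy2), min(max(x1, wx1), wx2),
               min(max(y2, wy1), wy2), min(max(x2, wx1), wx2))
        candidates.append((box, class_id, score))
    # Per-class NMS: only boxes of the same class suppress each other
    kept = []
    for box, cid, score in sorted(candidates, key=lambda t: t[2], reverse=True):
        if all(k[1] != cid or _iou(box, k[0]) <= NMS_THRESHOLD for k in kept):
            kept.append((box, cid, score))
    rows = [(*b, float(c), s) for b, c, s in kept[:MAX_INSTANCES]]
    return rows + [(0.0,) * 6] * (MAX_INSTANCES - len(rows))
```

The zero padding is why DetectionLayer can reshape its output to the fixed shape [BATCH_SIZE, DETECTION_MAX_INSTANCES, 6].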

    # Create masks for detections
    detection_boxes = KL.Lambda(lambda x: x[..., :4])(detections)
    mrcnn_mask = build_fpn_mask_graph(detection_boxes, mrcnn_feature_maps,
                                      input_image_meta,
                                      config.MASK_POOL_SIZE,
                                      config.NUM_CLASSES,
                                      train_bn=config.TRAIN_BN)

build_fpn_mask_graph is defined at line 956:

def build_fpn_mask_graph(rois, feature_maps, image_meta,
                         pool_size, num_classes, train_bn=True):
    """Builds the computation graph of the mask head of the Feature Pyramid Network.

    rois: [batch, num_rois, (y1, x1, y2, x2)] Proposal boxes in normalized
          coordinates.
    feature_maps: List of feature maps from different layers of the pyramid,
                  [P2, P3, P4, P5]. Each has a different resolution.
    image_meta: [batch, (meta data)] Image details. See compose_image_meta()
    pool_size: The width of the square feature map generated from ROI Pooling.
    num_classes: number of classes, which determines the depth of the results
    train_bn: Boolean. Train or freeze Batch Norm layers

    Returns: Masks [batch, num_rois, 2*MASK_POOL_SIZE, 2*MASK_POOL_SIZE, NUM_CLASSES]
             (the stride-2 deconvolution doubles the pooled size)
    """
    # ROI Pooling
    # Shape: [batch, num_rois, MASK_POOL_SIZE, MASK_POOL_SIZE, channels]
    x = PyramidROIAlign([pool_size, pool_size],
                        name="roi_align_mask")([rois, image_meta] + feature_maps)

    # Conv layers
    x = KL.TimeDistributed(KL.Conv2D(256, (3, 3), padding="same"),
                           name="mrcnn_mask_conv1")(x)
    x = KL.TimeDistributed(BatchNorm(),
                           name='mrcnn_mask_bn1')(x, training=train_bn)
    x = KL.Activation('relu')(x)

    x = KL.TimeDistributed(KL.Conv2D(256, (3, 3), padding="same"),
                           name="mrcnn_mask_conv2")(x)
    x = KL.TimeDistributed(BatchNorm(),
                           name='mrcnn_mask_bn2')(x, training=train_bn)
    x = KL.Activation('relu')(x)

    x = KL.TimeDistributed(KL.Conv2D(256, (3, 3), padding="same"),
                           name="mrcnn_mask_conv3")(x)
    x = KL.TimeDistributed(BatchNorm(),
                           name='mrcnn_mask_bn3')(x, training=train_bn)
    x = KL.Activation('relu')(x)

    x = KL.TimeDistributed(KL.Conv2D(256, (3, 3), padding="same"),
                           name="mrcnn_mask_conv4")(x)
    x = KL.TimeDistributed(BatchNorm(),
                           name='mrcnn_mask_bn4')(x, training=train_bn)
    x = KL.Activation('relu')(x)

    x = KL.TimeDistributed(KL.Conv2DTranspose(256, (2, 2), strides=2, activation="relu"),
                           name="mrcnn_mask_deconv")(x)
    x = KL.TimeDistributed(KL.Conv2D(num_classes, (1, 1), strides=1, activation="sigmoid"),
                           name="mrcnn_mask")(x)
    return x
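The shape flow through this head can be traced with plain arithmetic. The sketch below is illustrative only and not part of the repository: the helper names are hypothetical, and it assumes 256-channel FPN feature maps, the default MASK_POOL_SIZE = 14 and 100 detections per image (DETECTION_MAX_INSTANCES). It shows why the final masks come out at 28x28 rather than MASK_POOL_SIZE x MASK_POOL_SIZE.

```python
import math

def conv_same(size, stride=1):
    """Spatial size after a 'same'-padded convolution: ceil(size / stride)."""
    return math.ceil(size / stride)

def conv_transpose_valid(size, kernel=2, stride=2):
    """Spatial size after a 'valid'-padded transposed convolution (Keras default padding)."""
    return (size - 1) * stride + kernel

def mask_head_shapes(batch, num_rois, pool_size, num_classes, channels=256):
    """Hypothetical helper: trace tensor shapes through the layers of build_fpn_mask_graph."""
    s = pool_size
    shapes = [("roi_align_mask", (batch, num_rois, s, s, channels))]
    for i in range(1, 5):
        # 3x3 convs with padding="same" and stride 1 keep the spatial size.
        s = conv_same(s)
        shapes.append((f"mrcnn_mask_conv{i}", (batch, num_rois, s, s, 256)))
    s = conv_transpose_valid(s)  # 2x2 kernel, stride 2: doubles the size
    shapes.append(("mrcnn_mask_deconv", (batch, num_rois, s, s, 256)))
    # 1x1 conv with sigmoid: one mask channel per class.
    shapes.append(("mrcnn_mask", (batch, num_rois, s, s, num_classes)))
    return shapes

for name, shape in mask_head_shapes(1, 100, 14, 81):
    print(f"{name:20s} {shape}")
```

Only the transposed convolution changes the spatial size; the four 3x3 convolutions refine features at the pooled resolution.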

The BatchNorm class used above is defined as follows:

class BatchNorm(KL.BatchNormalization):
    """Extends the Keras BatchNormalization class to allow a central place
    to make changes if needed.

    Batch normalization has a negative effect on training if batches are small
    so this layer is often frozen (via setting in Config class) and functions
    as linear layer.
    """
    def call(self, inputs, training=None):
        """
        Note about training values:
            None: Train BN layers. This is the normal mode
            False: Freeze BN layers. Good when batch size is small
            True: (don't use). Set layer in training mode even when making inferences
        """
        return super(self.__class__, self).call(inputs, training=training)
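The docstring's claim that a frozen BN layer "functions as a linear layer" can be checked numerically. The NumPy sketch below is illustrative and assumes the standard batch-normalization inference formula with Keras' default epsilon of 1e-3; the function names are hypothetical. When frozen, the layer normalizes with its stored moving mean and variance instead of batch statistics, so it collapses to a per-channel scale and shift.

```python
import numpy as np

def frozen_batchnorm(x, gamma, beta, moving_mean, moving_var, eps=1e-3):
    """Batch norm in frozen/inference mode: uses stored statistics, not batch statistics."""
    return gamma * (x - moving_mean) / np.sqrt(moving_var + eps) + beta

def bn_as_affine(gamma, beta, moving_mean, moving_var, eps=1e-3):
    """Fold the frozen BN parameters into an equivalent per-channel y = scale * x + shift."""
    scale = gamma / np.sqrt(moving_var + eps)
    shift = beta - scale * moving_mean
    return scale, shift

rng = np.random.default_rng(0)
channels = 3
x = rng.normal(size=(4, channels))  # 4 samples, channels on the last axis as in Keras
gamma, beta = rng.normal(size=channels), rng.normal(size=channels)
moving_mean = rng.normal(size=channels)
moving_var = rng.uniform(0.5, 2.0, size=channels)

scale, shift = bn_as_affine(gamma, beta, moving_mean, moving_var)
print(np.allclose(frozen_batchnorm(x, gamma, beta, moving_mean, moving_var),
                  scale * x + shift))
```

Because the frozen output no longer depends on the other samples in the batch, small batches do not destabilize it, which is the motivation for the config.TRAIN_BN switch passed into the heads.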

Back in MaskRCNN.build(), the inference-mode outputs are then wrapped into a Keras model:

model = KM.Model([input_image, input_image_meta, input_anchors],
                 [detections, mrcnn_class, mrcnn_bbox,
                  mrcnn_mask, rpn_rois, rpn_class, rpn_bbox],
                 name='mask_rcnn')

# Add multi-GPU support.
if config.GPU_COUNT > 1:
    from mrcnn.parallel_model import ParallelModel
    model = ParallelModel(model, config.GPU_COUNT)

return model

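The code above is the last branch of Mask R-CNN, the mask branch.

Its flow is summarized in the figure below.

With that, the Mask R-CNN model is fully built.

The overall architecture is as follows: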

Number of classes

# COCO Class names
# Index of the class in the list is its ID. For example, to get ID of
# the teddy bear class, use: class_names.index('teddy bear')
class_names = ['BG', 'person', 'bicycle', 'car', 'motorcycle', 'airplane',
               'bus', 'train', 'truck', 'boat', 'traffic light',
               'fire hydrant', 'stop sign', 'parking meter', 'bench', 'bird',
               'cat', 'dog', 'horse', 'sheep', 'cow', 'elephant', 'bear',
               'zebra', 'giraffe', 'backpack', 'umbrella', 'handbag', 'tie',
               'suitcase', 'frisbee', 'skis', 'snowboard', 'sports ball',
               'kite', 'baseball bat', 'baseball glove', 'skateboard',
               'surfboard', 'tennis racket', 'bottle', 'wine glass', 'cup',
               'fork', 'knife', 'spoon', 'bowl', 'banana', 'apple',
               'sandwich', 'orange', 'broccoli', 'carrot', 'hot dog', 'pizza',
               'donut', 'cake', 'chair', 'couch', 'potted plant', 'bed',
               'dining table', 'toilet', 'tv', 'laptop', 'mouse', 'remote',
               'keyboard', 'cell phone', 'microwave', 'oven', 'toaster',
               'sink', 'refrigerator', 'book', 'clock', 'vase', 'scissors',
               'teddy bear', 'hair drier', 'toothbrush']
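Since a class's ID is simply its index in class_names, the list must have exactly NUM_CLASSES entries (1 background + 80 COCO classes = 81 in CocoConfig). The sketch below uses a short excerpt of the list to show lookups in both directions; name_to_id and ids_to_names are hypothetical names introduced for illustration.

```python
# Excerpt of the COCO list above (first five entries only, for illustration).
class_names = ['BG', 'person', 'bicycle', 'car', 'motorcycle']

# ID -> name is plain indexing; name -> ID can be precomputed once instead of
# calling class_names.index() repeatedly (each call scans the list).
name_to_id = {name: i for i, name in enumerate(class_names)}

def ids_to_names(class_ids, names):
    """Hypothetical helper: map integer class IDs (e.g. r['class_ids']) to labels."""
    return [names[int(i)] for i in class_ids]

# With the full list, len(class_names) must match config.NUM_CLASSES (1 + 80 for COCO).
NUM_CLASSES = 1 + 4  # matches this five-entry excerpt
assert len(class_names) == NUM_CLASSES

print(name_to_id['car'])                  # 3
print(ids_to_names([1, 4], class_names))  # ['person', 'motorcycle']
```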

Run detection

# Load a random image from the images folder
file_names = next(os.walk(IMAGE_DIR))[2]
image = skimage.io.imread(os.path.join(IMAGE_DIR, random.choice(file_names)))

# Run detection
results = model.detect([image], verbose=1)

# Visualize results
r = results[0]
visualize.display_instances(image, r['rois'], r['masks'], r['class_ids'],
                            class_names, r['scores'])
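Each element of results is a dict with the keys rois, class_ids, scores and masks described in the detect() docstring. The sketch below shows one way to turn such a dict into readable lines without plotting. It builds a synthetic r by hand (the arrays are made up, not real model output), and the helper summarize_detections with its min_score filter is hypothetical.

```python
import numpy as np

def summarize_detections(r, class_names, min_score=0.0):
    """Hypothetical helper: list (label, score, box, mask_area) for one result dict."""
    rows = []
    for i in range(len(r['class_ids'])):
        if r['scores'][i] < min_score:
            continue
        y1, x1, y2, x2 = (int(v) for v in r['rois'][i])
        # masks is [H, W, N]: slice the last axis to get instance i's binary mask.
        area = int(r['masks'][:, :, i].sum())
        rows.append((class_names[r['class_ids'][i]], float(r['scores'][i]),
                     (y1, x1, y2, x2), area))
    return rows

# Synthetic result for a 10x10 image with two detections (made-up values).
masks = np.zeros((10, 10, 2), dtype=bool)
masks[2:5, 2:6, 0] = True  # 3 x 4 = 12 pixels
masks[6:9, 1:3, 1] = True  # 3 x 2 = 6 pixels
r = {
    'rois': np.array([[2, 2, 5, 6], [6, 1, 9, 3]], dtype=np.int32),
    'class_ids': np.array([1, 17], dtype=np.int32),
    'scores': np.array([0.98, 0.42], dtype=np.float32),
    'masks': masks,
}
names = ['BG', 'person'] + ['other'] * 15 + ['dog']  # index 17 is 'dog', as in the COCO list

for row in summarize_detections(r, names, min_score=0.5):
    print(row)
```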

The detect() method that performs the detection is shown below:

def detect(self, images, verbose=0):
    """Runs the detection pipeline.

    images: List of images, potentially of different sizes.

    Returns a list of dicts, one dict per image. The dict contains:
    rois: [N, (y1, x1, y2, x2)] detection bounding boxes
    class_ids: [N] int class IDs
    scores: [N] float probability scores for the class IDs
    masks: [H, W, N] instance binary masks
    """
    assert self.mode == "inference", "Create model in inference mode."
    assert len(
        images) == self.config.BATCH_SIZE, "len(images) must be equal to BATCH_SIZE"

    if verbose:
        log("Processing {} images".format(len(images)))
        for image in images:
            log("image", image)

    # Mold inputs to format expected by the neural network
    molded_images, image_metas, windows = self.mold_inputs(images)

    # Validate image sizes
    # All images in a batch MUST be of the same size
    image_shape = molded_images[0].shape
    for g in molded_images[1:]:
        assert g.shape == image_shape,\
            "After resizing, all images must have the same size. Check IMAGE_RESIZE_MODE and image sizes."

    # Anchors
    anchors = self.get_anchors(image_shape)
    # Duplicate across the batch dimension because Keras requires it
    # TODO: can this be optimized to avoid duplicating the anchors?
    anchors = np.broadcast_to(anchors, (self.config.BATCH_SIZE,) + anchors.shape)

    if verbose:
        log("molded_images", molded_images)
        log("image_metas", image_metas)
        log("anchors", anchors)

    # Run object detection
    detections, _, _, mrcnn_mask, _, _, _ =\
        self.keras_model.predict([molded_images, image_metas, anchors], verbose=0)

    # Process detections
    results = []
    for i, image in enumerate(images):
        final_rois, final_class_ids, final_scores, final_masks =\
            self.unmold_detections(detections[i], mrcnn_mask[i],
                                   image.shape, molded_images[i].shape,
                                   windows[i])
        results.append({
            "rois": final_rois,
            "class_ids": final_class_ids,
            "scores": final_scores,
            "masks": final_masks,
        })
    return results
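Two details of detect() are easy to miss: the list passed in must contain exactly BATCH_SIZE images (Config computes BATCH_SIZE = IMAGES_PER_GPU * GPU_COUNT, which is why the demo's InferenceConfig sets both to 1), and the anchors are repeated across the batch with np.broadcast_to. The NumPy sketch below uses a made-up three-anchor array and assumes CocoConfig's IMAGES_PER_GPU = 2 on one GPU; it shows that broadcasting adds the batch axis as a read-only view of the original anchors rather than a copy.

```python
import numpy as np

# Assumed values: CocoConfig's IMAGES_PER_GPU = 2 on a single GPU.
GPU_COUNT, IMAGES_PER_GPU = 1, 2
BATCH_SIZE = GPU_COUNT * IMAGES_PER_GPU

# Made-up anchors: 3 boxes as (y1, x1, y2, x2) in normalized coordinates.
anchors = np.array([[0.00, 0.00, 0.50, 0.50],
                    [0.25, 0.25, 0.75, 0.75],
                    [0.50, 0.50, 1.00, 1.00]], dtype=np.float32)

# Same call as in detect(): prepend a batch axis of length BATCH_SIZE.
batched = np.broadcast_to(anchors, (BATCH_SIZE,) + anchors.shape)

print(batched.shape)                       # (2, 3, 4)
print(np.shares_memory(batched, anchors))  # True: no data was copied
print(batched.flags.writeable)             # False: the view is read-only
```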

The log function is defined at line 38 of mrcnn/model.py:

def log(text, array=None):
    """Prints a message. If a NumPy array is provided, also prints its
    shape, min and max values.
    """
    if array is not None:
        text = text.ljust(25)
        text += ("shape: {:20} ".format(str(array.shape)))
        if array.size:
            text += ("min: {:10.5f} max: {:10.5f}".format(array.min(), array.max()))
        else:
            text += ("min: {:10} max: {:10}".format("", ""))
        text += " {}".format(array.dtype)
    print(text)

The get_anchors method is defined at line 2598 of mrcnn/model.py:

def get_anchors(self, image_shape):
    """Returns anchor pyramid for the given image size."""
    backbone_shapes = compute_backbone_shapes(self.config, image_shape)
    # Cache anchors and reuse if image shape is the same
    if not hasattr(self, "_anchor_cache"):
        self._anchor_cache = {}
    if tuple(image_shape) not in self._anchor_cache:
        # Generate Anchors
        a = utils.generate_pyramid_anchors(
            self.config.RPN_ANCHOR_SCALES,
            self.config.RPN_ANCHOR_RATIOS,
            backbone_shapes,
            self.config.BACKBONE_STRIDES,
            self.config.RPN_ANCHOR_STRIDE)
        # Keep a copy of the latest anchors in pixel coordinates because
        # it's used in inspect_model notebooks.
        # TODO: Remove this after the notebook are refactored to not use it
        self.anchors = a
        # Normalize coordinates
        self._anchor_cache[tuple(image_shape)] = utils.norm_boxes(a, image_shape[:2])
    return self._anchor_cache[tuple(image_shape)]
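get_anchors() depends only on the image shape, which is why the result can be cached per shape. To get a feel for the size of what is cached, the sketch below counts anchors using the same rule as compute_backbone_shapes (feature-map size = ceil(image size / stride)). It assumes the Config defaults BACKBONE_STRIDES = [4, 8, 16, 32, 64], RPN_ANCHOR_RATIOS = [0.5, 1, 2] and RPN_ANCHOR_STRIDE = 1, with one RPN_ANCHOR_SCALES entry per pyramid level; the function names are hypothetical.

```python
import math

def backbone_shapes(image_shape, strides=(4, 8, 16, 32, 64)):
    """Feature-map size of each pyramid level: ceil(image size / stride)."""
    return [(math.ceil(image_shape[0] / s), math.ceil(image_shape[1] / s)) for s in strides]

def count_anchors(image_shape, strides=(4, 8, 16, 32, 64), ratios=(0.5, 1, 2), anchor_stride=1):
    """Total anchors: one scale per level, len(ratios) anchors at each sampled position."""
    total = 0
    for h, w in backbone_shapes(image_shape, strides):
        positions = math.ceil(h / anchor_stride) * math.ceil(w / anchor_stride)
        total += positions * len(ratios)
    return total

print(backbone_shapes((1024, 1024, 3)))  # [(256, 256), (128, 128), (64, 64), (32, 32), (16, 16)]
print(count_anchors((1024, 1024, 3)))    # 261888
```

With roughly 260k boxes per 1024x1024 input, regenerating them on every call would be wasteful, so keying the cache on tuple(image_shape) means the work is done once per distinct input shape.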

The unmold_detections method is defined at line 2417 of mrcnn/model.py:

def unmold_detections(self, detections, mrcnn_mask, original_image_shape,
                      image_shape, window):
    """Reformats the detections of one image from the format of the neural
    network output to a format suitable for use in the rest of the application.

    detections: [N, (y1, x1, y2, x2, class_id, score)] in normalized coordinates
    mrcnn_mask: [N, height, width, num_classes]
    original_image_shape: [H, W, C] Original image shape before resizing
    image_shape: [H, W, C] Shape of the image after resizing and padding
    window: [y1, x1, y2, x2] Pixel coordinates of box in the image where the real
            image is excluding the padding.

    Returns:
    boxes: [N, (y1, x1, y2, x2)] Bounding boxes in pixels
    class_ids: [N] Integer class IDs for each bounding box
    scores: [N] Float probability scores of the class_id
    masks: [height, width, num_instances] Instance masks
    """
    # How many detections do we have?
    # Detections array is padded with zeros. Find the first class_id == 0.
    zero_ix = np.where(detections[:, 4] == 0)[0]
    N = zero_ix[0] if zero_ix.shape[0] > 0 else detections.shape[0]

    # Extract boxes, class_ids, scores, and class-specific masks
    boxes = detections[:N, :4]
    class_ids = detections[:N, 4].astype(np.int32)
    scores = detections[:N, 5]
    masks = mrcnn_mask[np.arange(N), :, :, class_ids]

    # Translate normalized coordinates in the resized image to pixel
    # coordinates in the original image before resizing
    window = utils.norm_boxes(window, image_shape[:2])
    wy1, wx1, wy2, wx2 = window
    shift = np.array([wy1, wx1, wy1, wx1])
    wh = wy2 - wy1  # window height
    ww = wx2 - wx1  # window width
    scale = np.array([wh, ww, wh, ww])
    # Convert boxes to normalized coordinates on the window
    boxes = np.divide(boxes - shift, scale)
    # Convert boxes to pixel coordinates on the original image
    boxes = utils.denorm_boxes(boxes, original_image_shape[:2])

    # Filter out detections with zero area. Happens in early training when
    # network weights are still random
    exclude_ix = np.where(
        (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1]) <= 0)[0]
    if exclude_ix.shape[0] > 0:
        boxes = np.delete(boxes, exclude_ix, axis=0)
        class_ids = np.delete(class_ids, exclude_ix, axis=0)
        scores = np.delete(scores, exclude_ix, axis=0)
        masks = np.delete(masks, exclude_ix, axis=0)
        N = class_ids.shape[0]

    # Resize masks to original image size and set boundary threshold.
    full_masks = []
    for i in range(N):
        # Convert neural network mask to full size mask
        full_mask = utils.unmold_mask(masks[i], boxes[i], original_image_shape)
        full_masks.append(full_mask)
    full_masks = np.stack(full_masks, axis=-1)\
        if full_masks else np.empty(original_image_shape[:2] + (0,))

    return boxes, class_ids, scores, full_masks
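The coordinate handling in unmold_detections undoes the resizing and padding that mold_inputs applied (the windows it returns mark where the real image sits). The NumPy sketch below checks that round trip. Its norm_boxes/denorm_boxes are assumed to mirror utils.norm_boxes and utils.denorm_boxes (normalize by (h - 1, w - 1) and treat y2/x2 as exclusive), original_to_window is a hypothetical forward helper used only for checking, and the numbers (a 500x1000 image scaled by 1.024 into a 1024x1024 canvas with 256 px of padding on top) are illustrative.

```python
import numpy as np

def norm_boxes(boxes, shape):
    """Pixel -> normalized coordinates (assumed to mirror utils.norm_boxes)."""
    h, w = shape
    scale = np.array([h - 1, w - 1, h - 1, w - 1])
    shift = np.array([0, 0, 1, 1])
    return (boxes - shift) / scale

def denorm_boxes(boxes, shape):
    """Normalized -> pixel coordinates (assumed to mirror utils.denorm_boxes)."""
    h, w = shape
    scale = np.array([h - 1, w - 1, h - 1, w - 1])
    shift = np.array([0, 0, 1, 1])
    return np.around(boxes * scale + shift).astype(np.int32)

def count_detections(detections):
    """Rows from the first class_id == 0 onward are zero padding, as in unmold_detections."""
    zero_ix = np.where(detections[:, 4] == 0)[0]
    return zero_ix[0] if zero_ix.shape[0] > 0 else detections.shape[0]

def window_scale_shift(window, image_shape):
    wy1, wx1, wy2, wx2 = norm_boxes(np.array(window), image_shape)
    shift = np.array([wy1, wx1, wy1, wx1])
    scale = np.array([wy2 - wy1, wx2 - wx1, wy2 - wy1, wx2 - wx1])
    return scale, shift

def window_to_original(boxes, window, image_shape, original_shape):
    """Same steps as unmold_detections: remove the padding offset, rescale, denormalize."""
    scale, shift = window_scale_shift(window, image_shape)
    return denorm_boxes((boxes - shift) / scale, original_shape)

def original_to_window(boxes_px, window, image_shape, original_shape):
    """Hypothetical forward direction, for checking only."""
    scale, shift = window_scale_shift(window, image_shape)
    return norm_boxes(boxes_px, original_shape) * scale + shift

original_shape, image_shape = (500, 1000), (1024, 1024)
window = (256, 0, 768, 1024)  # rows 256..767 hold the resized image, the rest is padding
boxes_px = np.array([[100, 200, 300, 600]])

# Fake network output: one detection (box, class_id, score) followed by zero padding.
detections = np.zeros((3, 6))
detections[0, :4] = original_to_window(boxes_px, window, image_shape, original_shape)[0]
detections[0, 4:] = [1, 0.9]

N = count_detections(detections)
print(N)  # 1
print(window_to_original(detections[:N, :4], window, image_shape, original_shape))  # [[100 200 300 600]]
```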