Zookeeper (Part 2: Distributed Cluster Installation and Command-Line Operations)

Original article: Zookeeper (Part 2: Distributed Cluster Installation and Command-Line Operations).

1. Prerequisites

A Hadoop cluster has been installed

Link: Hadoop (Part 2: Installing a Hadoop-3.2.1 Cluster).

ZooKeeper will be deployed on the three nodes hadoop100, hadoop101 and hadoop102.

The version used is ZooKeeper 3.6.1.

Download link: https://pan.baidu.com/s/1PKpjntrFyT-yiF20EdlL_Q

Extraction code: c0ol

2. Extract and install

- Upload the archive to the hadoop100 machine (CentOS 7)

# Upload the archive with the rz command

[root@hadoop100 ~]# cd /opt/tar.gz/

[root@hadoop100 tar.gz]# rz

- Extract the ZooKeeper archive into the /opt/software/ directory

[root@hadoop100 tar.gz]# ls

apache-zookeeper-3.6.1-bin.tar.gz hadoop-3.2.1.tar.gz jdk-8u251-linux-x64.tar.gz

[root@hadoop100 tar.gz]# tar -zxvf apache-zookeeper-3.6.1-bin.tar.gz -C /opt/software/

[root@hadoop100 tar.gz]# cd /opt/software/

[root@hadoop100 software]# ls

apache-zookeeper-3.6.1-bin hadoop-3.2.1 jdk1.8.0_251

- Sync the **/opt/software/apache-zookeeper-3.6.1-bin** directory to hadoop101 and hadoop102

[root@hadoop100 software]# xsync apache-zookeeper-3.6.1-bin/

[root@hadoop100 software]# ssh hadoop101

Last login: Fri Jun 19 00:29:21 2020 from hadoop100

[root@hadoop101 ~]# cd /opt/software/

[root@hadoop101 software]# ls

apache-zookeeper-3.6.1-bin hadoop-3.2.1 jdk1.8.0_251

[root@hadoop101 software]# ssh hadoop102

Last login: Fri Jun 19 00:29:30 2020 from hadoop101

[root@hadoop102 ~]# cd /opt/software/

[root@hadoop102 software]# ls

apache-zookeeper-3.6.1-bin hadoop-3.2.1 jdk1.8.0_251

[root@hadoop102 software]#
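`xsync` is not a standard Linux command; it appears to be the custom distribution script set up in the Hadoop cluster article referenced in the prerequisites, and its definition is not shown here. As a hedged sketch only, assuming passwordless SSH and rsync on every node, a minimal stand-in could copy each argument to the same absolute location on the other hosts. `xsync_sketch`, `XSYNC_HOSTS` and `RSYNC` are hypothetical names, not part of the original setup.

```bash
#!/usr/bin/env bash
# Hypothetical stand-in for the author's xsync script (not the original).
# Copies each given file or directory to the same absolute location on
# every host in XSYNC_HOSTS. Assumes passwordless SSH and rsync everywhere.

XSYNC_HOSTS=${XSYNC_HOSTS:-"hadoop101 hadoop102"}
RSYNC=${RSYNC:-rsync}   # set RSYNC=echo for a dry run

xsync_sketch() {
  local path dir name host
  if [ $# -eq 0 ]; then
    echo "usage: xsync_sketch <file-or-dir>..." >&2
    return 2
  fi
  for path in "$@"; do
    if [ ! -e "$path" ]; then
      echo "not found: $path" >&2
      return 1
    fi
    # Resolve the parent directory to an absolute, symlink-free path
    dir=$(cd -P "$(dirname "$path")" && pwd)
    name=$(basename "$path")
    for host in $XSYNC_HOSTS; do
      # No trailing slash on the source, so rsync copies the item itself into $dir/
      $RSYNC -a "$dir/$name" "$host:$dir/"
    done
  done
}
```

Run from /opt/software on hadoop100, `xsync_sketch apache-zookeeper-3.6.1-bin/` would mirror the directory to hadoop101 and hadoop102. Reusing the absolute path on the remote side is what keeps the install location identical on all nodes.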

3. Configure the server ID

- Create a **zkData** directory under **/opt/software/apache-zookeeper-3.6.1-bin**, and create a file named myid inside it

[root@hadoop102 software]# ssh hadoop100

[root@hadoop100 ~]# cd /opt/software/apache-zookeeper-3.6.1-bin/

[root@hadoop100 apache-zookeeper-3.6.1-bin]# mkdir zkData

[root@hadoop100 apache-zookeeper-3.6.1-bin]# ls

bin conf docs lib LICENSE.txt NOTICE.txt README.md README_packaging.md zkData

[root@hadoop100 apache-zookeeper-3.6.1-bin]# cd zkData/

[root@hadoop100 zkData]# touch myid

[root@hadoop100 zkData]# ls

myid

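- Edit the myid file and write the number of the corresponding server into it. The number must match the A in that host's server.A entry in zoo.cfg (see 4.1).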

[root@hadoop100 zkData]# cd ..

[root@hadoop100 apache-zookeeper-3.6.1-bin]# xsync zkData/ # distribute the directory to hadoop101 and hadoop102

[root@hadoop100 apache-zookeeper-3.6.1-bin]# cd zkData/

[root@hadoop100 zkData]# vi myid

100

:wq

[root@hadoop100 zkData]# ssh hadoop101

Last login: Tue Jun 23 23:15:35 2020 from hadoop100

[root@hadoop101 ~]# cd /opt/software/apache-zookeeper-3.6.1-bin/zkData/

[root@hadoop101 zkData]# vi myid

101

:wq

[root@hadoop101 zkData]# ssh hadoop102

Last login: Tue Jun 23 23:15:55 2020 from hadoop101

[root@hadoop102 ~]# cd /opt/software/apache-zookeeper-3.6.1-bin/zkData/

[root@hadoop102 zkData]# vi myid

102

:wq
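The per-host `vi myid` edits above can also be scripted. A minimal sketch, assuming the same install path on every node and that each server ID is the numeric suffix of the short hostname (true for hadoop100/101/102 here, but an assumption in general). The 1..255 range check follows the ZooKeeper Administrator's Guide, which says the id should have a value between 1 and 255. `write_myid`, `id_from_hostname` and `ZK_DATA` are hypothetical names.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for writing ZooKeeper's myid file.
# Assumption: the server ID is the trailing number of the hostname
# (hadoop100 -> 100), matching the server.A entries in zoo.cfg.

ZK_DATA=${ZK_DATA:-/opt/software/apache-zookeeper-3.6.1-bin/zkData}

# Print the trailing digits of a hostname, or fail if there are none.
id_from_hostname() {
  local id=${1##*[!0-9]}   # strip everything up to the last non-digit
  if [ -z "$id" ]; then
    echo "no numeric suffix in: $1" >&2
    return 1
  fi
  printf '%s\n' "$id"
}

# Write <id> into <dir>/myid after validating it.
write_myid() {
  local dir=$1 id=$2
  case $id in
    ''|*[!0-9]*) echo "invalid id: '$id'" >&2; return 1 ;;
  esac
  if [ "$id" -lt 1 ] || [ "$id" -gt 255 ]; then
    echo "id out of range 1..255: $id" >&2
    return 1
  fi
  mkdir -p "$dir" && printf '%s\n' "$id" > "$dir/myid"
}
```

On each node this would be invoked as `write_myid "$ZK_DATA" "$(id_from_hostname "$(hostname -s)")"`, which produces the same 100/101/102 files as the manual edits.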

4. Configuration

4.1 Configuration parameters explained

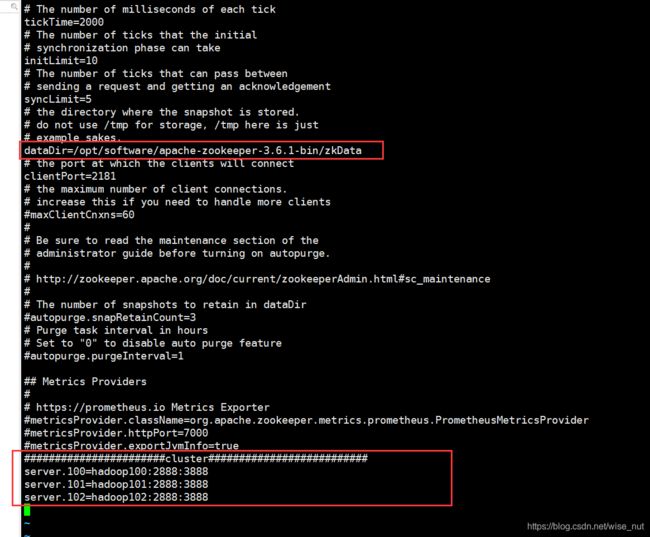

The parameters in ZooKeeper's configuration file zoo.cfg mean the following:

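- tickTime=2000: heartbeat interval between ZooKeeper servers and clients, in milliseconds

The basic time unit used by ZooKeeper: the interval at which heartbeats are exchanged between servers, or between clients and servers, i.e. one heartbeat is sent every tickTime milliseconds.

It drives the heartbeat mechanism and also sets the minimum session timeout to twice the heartbeat interval (minimum session timeout = 2 * tickTime).

- initLimit=10: Leader-Follower initial connection limit

The maximum number of heartbeats (multiples of tickTime) tolerated while a Follower server initially connects to the Leader server. It bounds how long a ZooKeeper server in the cluster may take to connect to the Leader.

- syncLimit=5: Leader-Follower synchronization limit

The maximum response time between the Leader and a Follower, in ticks. If a response takes longer than syncLimit * tickTime, the Leader considers the Follower dead and removes it from the server list.

- dataDir: data directory, the path where data is persisted

Mainly used to store ZooKeeper's data (snapshots and, by default, the transaction log).

- clientPort=2181: client connection port

The port on which the server listens for client connections.

- server.A=B:C:D:

A is a number identifying which server this is.

In cluster mode, a file named myid is placed in the dataDir directory. It contains a single value, the value of A. When ZooKeeper starts, it reads this file and compares the value with the configuration in zoo.cfg to determine which server it is.

B is the server's address (IP or hostname);

C is the port this server uses to exchange information with the cluster's Leader;

D is the port used for leader election: if the Leader fails, the servers communicate with each other through this port to elect a new Leader.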
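To make the tick-based limits concrete, the sketch below converts the sample values (tickTime=2000, initLimit=10, syncLimit=5) into milliseconds. The session bounds of 2 × tickTime and 20 × tickTime are the documented defaults of minSessionTimeout and maxSessionTimeout in the ZooKeeper Administrator's Guide. `zk_timeouts` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: turn zoo.cfg tick settings into milliseconds.
# Usage: zk_timeouts <tickTime> <initLimit> <syncLimit>
zk_timeouts() {
  local tick=$1 init=$2 sync=$3
  echo "initLimit:  $((init * tick)) ms"   # follower connect + initial sync
  echo "syncLimit:  $((sync * tick)) ms"   # max lag before a follower is dropped
  echo "minSession: $((2 * tick)) ms"      # default minSessionTimeout
  echo "maxSession: $((20 * tick)) ms"     # default maxSessionTimeout
}

zk_timeouts 2000 10 5
```

With the sample file, a follower therefore has 20 seconds to connect to the leader and is dropped after 10 seconds without a timely response.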

4.2 Configure the zoo.cfg file

- Rename **zoo_sample.cfg** in the **/opt/software/apache-zookeeper-3.6.1-bin/conf** directory to **zoo.cfg**

[root@hadoop100 ~]# cd /opt/software/apache-zookeeper-3.6.1-bin/conf/

[root@hadoop100 conf]# ls

configuration.xsl log4j.properties zoo_sample.cfg

[root@hadoop100 conf]# mv zoo_sample.cfg zoo.cfg

[root@hadoop100 conf]# ls

configuration.xsl log4j.properties zoo.cfg

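- Open zoo.cfg and change the data storage path (dataDir) to the zkData directory created above. For a cluster, the server.A=B:C:D entries described in 4.1 must also be added, one per node. Then distribute the file to the other nodes.

[root@hadoop100 conf]# vi zoo.cfg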

[root@hadoop100 conf]# xsync zoo.cfg
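The edited contents of zoo.cfg are not shown in this post. As a sketch under stated assumptions (not the author's exact file): the tick, limit and port values are those of zoo_sample.cfg, dataDir points at the zkData directory from step 3, the server IDs equal the myid values 100/101/102, the hostnames resolve on every node (presumably via /etc/hosts from the Hadoop setup), and 2888/3888 are assumed for the C and D ports, as in the ZooKeeper documentation's examples. `render_zoo_cfg` is a hypothetical helper that prints such a file.

```bash
#!/usr/bin/env bash
# Hypothetical generator for a 3-node zoo.cfg (a sketch, not the author's file).
# Usage: render_zoo_cfg <dataDir> <host:id>...
render_zoo_cfg() {
  if [ $# -lt 1 ]; then
    echo "usage: render_zoo_cfg <dataDir> <host:id>..." >&2
    return 2
  fi
  local data_dir=$1 entry host id
  shift
  cat <<EOF
tickTime=2000
initLimit=10
syncLimit=5
dataDir=$data_dir
clientPort=2181
EOF
  # One server.A=B:C:D line per node; A must equal that node's myid.
  # C=2888 (follower <-> leader) and D=3888 (leader election) are assumed ports.
  for entry in "$@"; do
    host=${entry%%:*}
    id=${entry##*:}
    echo "server.$id=$host:2888:3888"
  done
}

# Example (on hadoop100, in the conf directory):
# render_zoo_cfg /opt/software/apache-zookeeper-3.6.1-bin/zkData \
#   hadoop100:100 hadoop101:101 hadoop102:102 > zoo.cfg
```

Generating the server lines from the same host:id pairs used for myid is one way to keep the two in agreement, which is what lets each server identify itself at startup.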

5. Cluster operations

- Start ZooKeeper on each node

[root@hadoop100 apache-zookeeper-3.6.1-bin]# bin/zkServer.sh start

[root@hadoop101 apache-zookeeper-3.6.1-bin]# bin/zkServer.sh start

[root@hadoop102 apache-zookeeper-3.6.1-bin]# bin/zkServer.sh start

- Check the status

[root@hadoop100 bin]# ./zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /opt/software/apache-zookeeper-3.6.1-bin/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost.

Mode: follower

[root@hadoop101 bin]# ./zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /opt/software/apache-zookeeper-3.6.1-bin/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost.

Mode: leader

[root@hadoop102 apache-zookeeper-3.6.1-bin]# bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /opt/software/apache-zookeeper-3.6.1-bin/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost.

Mode: follower

hadoop100 and hadoop102 are followers and hadoop101 is the leader, which shows that ZooKeeper's leader election is working properly.
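Starting and checking the three nodes by hand gets repetitive. The sketch below wraps it in hypothetical helpers (`zk_cluster`, `quorum_size`, `summarize_modes`; the `ZK_HOSTS` and `SSH` variables are assumptions too), assuming passwordless SSH and the same install path on every node. Sourcing /etc/profile in the remote command is also an assumption: a non-interactive SSH session does not read it, so JAVA_HOME might otherwise be missing. The helpers also encode why the result above is healthy: with three voting servers a quorum is a strict majority of 2, so the ensemble survives one failed node, and exactly one node should report `Mode: leader`.

```bash
#!/usr/bin/env bash
# Hypothetical cluster helpers (a sketch, not part of ZooKeeper).
# Assumes passwordless SSH and the same install path on every node.
ZK_HOME=${ZK_HOME:-/opt/software/apache-zookeeper-3.6.1-bin}
ZK_HOSTS=${ZK_HOSTS:-"hadoop100 hadoop101 hadoop102"}
SSH=${SSH:-ssh}   # set SSH=echo to print the commands instead of running them

# Run zkServer.sh <action> on every node.
zk_cluster() {
  local action=$1 host
  case $action in
    start|stop|status) ;;
    *) echo "usage: zk_cluster start|stop|status" >&2; return 2 ;;
  esac
  for host in $ZK_HOSTS; do
    echo "---- $host ----"
    # Non-interactive ssh does not read /etc/profile; load it for JAVA_HOME (assumption)
    $SSH "$host" "source /etc/profile; $ZK_HOME/bin/zkServer.sh $action"
  done
}

# Majority needed for a quorum of n voting servers, and failures tolerated.
quorum_size() {
  local n=$1 q=$(( $1 / 2 + 1 ))
  echo "quorum=$q tolerated_failures=$(( n - q ))"
}

# Count the "Mode:" lines in combined status output read from stdin;
# succeeds only if exactly one leader was reported.
summarize_modes() {
  awk '/^Mode:/ { n[$2]++ }
       END {
         printf "leader=%d follower=%d\n", n["leader"], n["follower"]
         exit (n["leader"] == 1 ? 0 : 1)
       }'
}
```

Run on hadoop100, `zk_cluster start` replaces the three separate start commands, and `zk_cluster status 2>&1 | summarize_modes` condenses the status output into one line, exiting non-zero unless exactly one leader was found.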

For ZooKeeper basics and internals, see my previous article:

Link: Zookeeper (Part 1: ZooKeeper Basics and Principles for Big Data).

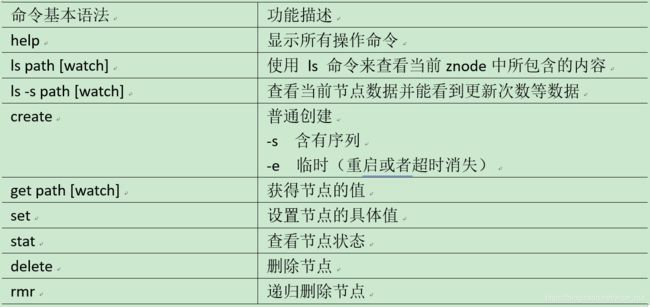

6. Client command-line operations

6.1 Start the client

[root@hadoop100 apache-zookeeper-3.6.1-bin]# bin/zkCli.sh

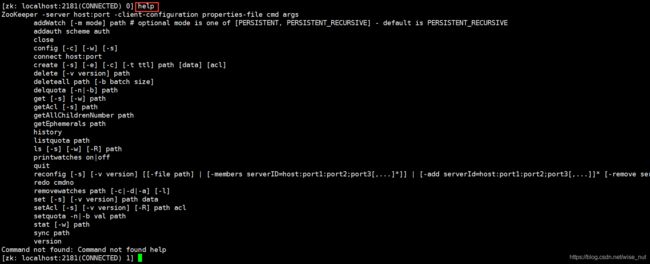

6.2 Display all available commands

[zk: localhost:2181(CONNECTED) 0] help

6.3 List what the current znode contains

[zk: localhost:2181(CONNECTED) 2] ls /

[zookeeper]

6.4 View the detailed data of the current node

[zk: localhost:2181(CONNECTED) 4] ls -s /

[zookeeper]

cZxid = 0x0

ctime = Thu Jan 01 08:00:00 CST 1970

mZxid = 0x0

mtime = Thu Jan 01 08:00:00 CST 1970

pZxid = 0x0

cversion = -1

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 0

numChildren = 1

6.5 Create two ordinary nodes (persistent by default)

[zk: localhost:2181(CONNECTED) 1] create /sanguo "jinlian"

Created /sanguo

[zk: localhost:2181(CONNECTED) 2] create /sanguo/shuguo "liubei"

Created /sanguo/shuguo

[zk: localhost:2181(CONNECTED) 3] ls /

[sanguo, zookeeper]

[zk: localhost:2181(CONNECTED) 4] ls /sanguo

[shuguo]

6.6 Get a node's value

[zk: localhost:2181(CONNECTED) 5] get /sanguo

jinlian

[zk: localhost:2181(CONNECTED) 6] get /sanguo/shuguo

liubei

[zk: localhost:2181(CONNECTED) 7] get -s /sanguo

jinlian

cZxid = 0x100000004

ctime = Wed Jun 24 00:04:51 CST 2020

mZxid = 0x100000004

mtime = Wed Jun 24 00:04:51 CST 2020

pZxid = 0x100000005

cversion = 1

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 7

numChildren = 1

[zk: localhost:2181(CONNECTED) 8] get -s /sanguo/shuguo

liubei

cZxid = 0x100000005

ctime = Wed Jun 24 00:05:10 CST 2020

mZxid = 0x100000005

mtime = Wed Jun 24 00:05:10 CST 2020

pZxid = 0x100000005

cversion = 0

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 6

numChildren = 0
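The zxid fields (cZxid, mZxid, pZxid) are 64-bit transaction IDs: the high 32 bits hold the leader epoch and the low 32 bits a counter within that epoch. Decoding the values above with shell arithmetic shows that /sanguo (0x100000004) and /sanguo/shuguo (0x100000005) were created in epoch 1 as transactions 4 and 5. `decode_zxid` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: split a ZooKeeper zxid into epoch and counter.
decode_zxid() {
  local zxid=$(( $1 ))   # accepts hex such as 0x100000004
  printf 'epoch=%d counter=%d\n' $(( zxid >> 32 )) $(( zxid & 0xffffffff ))
}

decode_zxid 0x100000004   # prints: epoch=1 counter=4
decode_zxid 0x100000005   # prints: epoch=1 counter=5
```

Because the epoch occupies the high bits, zxids from a newer leader always compare greater than those from an older one.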

6.7 Create an ephemeral node

[zk: localhost:2181(CONNECTED) 9] create -e /sanguo/wuguo

Created /sanguo/wuguo

[zk: localhost:2181(CONNECTED) 10] ls /sanguo

[shuguo, wuguo]

[zk: localhost:2181(CONNECTED) 10] quit

[root@hadoop100 apache-zookeeper-3.6.1-bin]# bin/zkCli.sh

[zk: localhost:2181(CONNECTED) 1] ls /sanguo

[shuguo]

After the client exits, the ephemeral node /sanguo/wuguo created just before has been deleted.
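This is also visible in a node's stat: `ephemeralOwner` is 0x0 for persistent nodes (as in the `get -s` output above) and holds the owning session's ID for ephemeral nodes, which is why the node goes away when that session ends. A hedged sketch of helpers that parse zkCli's `key = value` stat lines from stdin; `stat_field` and `is_ephemeral` are hypothetical names, and the parsing assumes the output format shown in this post.

```bash
#!/usr/bin/env bash
# Hypothetical helpers that parse the "key = value" lines of zkCli stat output.

# Print the value of one stat field, e.g. stat_field ephemeralOwner.
# Fails if the field is not present.
stat_field() {
  awk -v key="$1" -F' = ' '$1 == key { print $2; found = 1 } END { exit !found }'
}

# Succeed if the stat output on stdin describes an ephemeral node.
is_ephemeral() {
  local owner
  owner=$(stat_field ephemeralOwner) || return 2
  [ "$owner" != "0x0" ]
}
```

For example, feeding it the output of `get -s /sanguo/wuguo` while the creating client is still connected should make `is_ephemeral` succeed, whereas the output for /sanguo/shuguo should not.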

6.8 Create sequential nodes

A. First create an ordinary node /sanguo/weiguo

[zk: localhost:2181(CONNECTED) 0] create /sanguo/weiguo

Created /sanguo/weiguo

[zk: localhost:2181(CONNECTED) 1] ls /sanguo

[shuguo, weiguo]

B. Create sequential nodes

[zk: localhost:2181(CONNECTED) 2] create -s /sanguo/weiguo "caocao"

Created /sanguo/weiguo0000000003

[zk: localhost:2181(CONNECTED) 3] create -s /sanguo/weiguo "caocao"

Created /sanguo/weiguo0000000004

[zk: localhost:2181(CONNECTED) 4] create -s /sanguo/weiguo "caocao"

Created /sanguo/weiguo0000000005

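The sequence number comes from a counter maintained by the parent node. For a parent that has never had children it starts at 0 and increases by one with each new node. It is neither reset nor simply equal to the current number of children, because earlier child operations also advance it: here /sanguo has 2 children, yet numbering starts at 3.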
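The suffix is the parent's counter written as a 10-digit, zero-padded decimal number (the ZooKeeper Programmer's Guide describes the format as `%010d`). A sketch of the naming rule and its inverse; `seq_name` and `seq_of` are hypothetical helpers that do not talk to ZooKeeper.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for ZooKeeper's sequential-node naming rule.

# Build the name given the requested path and the parent's current counter.
seq_name() {
  printf '%s%010d\n' "$1" "$2"
}

# Recover the counter from a sequential node name (its last 10 characters).
seq_of() {
  local digits
  digits=$(printf '%s' "$1" | tail -c 10 | sed 's/^0*//')
  echo "${digits:-0}"
}

seq_name /sanguo/weiguo 3                # prints: /sanguo/weiguo0000000003
seq_of /sanguo/weiguo0000000005          # prints: 5
```

The fixed width means the names sort lexicographically in the same order as their counters, which is what recipes such as distributed locks rely on.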

6.9 Modify a node's data

[zk: localhost:2181(CONNECTED) 8] set /sanguo/shuguo "diaocan"

[zk: localhost:2181(CONNECTED) 9] get -s /sanguo/shuguo

diaocan

cZxid = 0x100000005

ctime = Wed Jun 24 00:05:10 CST 2020

mZxid = 0x100000011

mtime = Wed Jun 24 00:22:34 CST 2020

pZxid = 0x100000005

cversion = 0

dataVersion = 1

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 7

numChildren = 0

[zk: localhost:2181(CONNECTED) 10] set /sanguo/shuguo "lvbu"

[zk: localhost:2181(CONNECTED) 11] get -s /sanguo/shuguo

lvbu

cZxid = 0x100000005

ctime = Wed Jun 24 00:05:10 CST 2020

mZxid = 0x100000012

mtime = Wed Jun 24 00:23:26 CST 2020

pZxid = 0x100000005

cversion = 0

dataVersion = 2

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 4

numChildren = 0

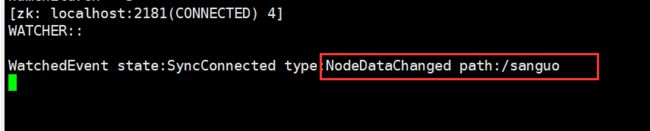

6.10 Watch a node for value changes

On hadoop102, register a watch for data changes on the /sanguo node

[zk: localhost:2181(CONNECTED) 3] get -s -w /sanguo

jinlian

cZxid = 0x100000004

ctime = Wed Jun 24 00:04:51 CST 2020

mZxid = 0x100000004

mtime = Wed Jun 24 00:04:51 CST 2020

pZxid = 0x10000000f

cversion = 7

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 7

numChildren = 5

At this point the value is still the original **"jinlian"**.

On hadoop100, modify the data of the **/sanguo** node

[zk: localhost:2181(CONNECTED) 13] set /sanguo "guanyu"

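Back on hadoop102, a notification of the data change is printed. However, this watch fires only once: further modifications will not trigger another notification. (In a ZooKeeper client program, the watch can be re-registered in code to keep listening.)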

6.11 Delete nodes

[zk: localhost:2181(CONNECTED) 18] ls /sanguo

[shuguo, weiguo, weiguo0000000003, weiguo0000000004, weiguo0000000005]

[zk: localhost:2181(CONNECTED) 19] delete /sanguo/weiguo0000000003

[zk: localhost:2181(CONNECTED) 20] ls /sanguo

[shuguo, weiguo, weiguo0000000004, weiguo0000000005]

[zk: localhost:2181(CONNECTED) 21] deleteall /sanguo

[zk: localhost:2181(CONNECTED) 22] ls /

[zookeeper]

6.12 View node status

[zk: localhost:2181(CONNECTED) 25] stat /zookeeper

cZxid = 0x0

ctime = Thu Jan 01 08:00:00 CST 1970

mZxid = 0x0

mtime = Thu Jan 01 08:00:00 CST 1970

pZxid = 0x0

cversion = -2

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 0

numChildren = 2

References:

Link: 尚硅谷 (Atguigu) Zookeeper tutorial (ZooKeeper framework explained in depth).