pytorch CNN完成minst分类 (GPU版本)

需要pytorch1.4.0 注意代码是GPU版本

最近刚去实习,需要完成CNN对经典minst数据集进行分类,作为熟悉torch的上手工作,记录如下:

ps:下载不了数据集的可以通过百度网盘下载,在data的文件夹下面

完整项目(包含数据集)

https://pan.baidu.com/s/1hyq-AYnlIyq3x7GCxLI33Q

密码:qfsa

完整代码如下:

#!/usr/bin/env python

#-*- coding:utf-8 -*-

# datetime:2020-03-06 14:30

# software: PyCharm

import torch

import torch.nn as nn

from torch.autograd import Variable

from torchvision import datasets,transforms

from torch.utils.data import DataLoader

import numpy as np

import matplotlib.pyplot as plt

transform = transforms.Compose([transforms.ToTensor(),transforms.Lambda(lambda x:x.repeat(3,1,1)),transforms.Normalize(mean=[0.5,0.5,0.5],std=[0.5,0.5,0.5])])

data_train = datasets.MNIST(root = './data',transform=transform,train = True,download = True)

data_test = datasets.MNIST(root = './data',transform=transform,train = False)

data_loader_train = DataLoader(dataset=data_train,batch_size=64,shuffle=True)

data_loader_test = DataLoader(dataset=data_test,batch_size=64,shuffle=False)

class CNN(nn.Module):

def __init__(self):

super(CNN,self).__init__()

self.conv1 = nn.Sequential(

nn.Conv2d(in_channels = 3,out_channels = 64,kernel_size = 3,stride = 1,padding = 1),

nn.ReLU(),

nn.Conv2d(in_channels = 64,out_channels = 128,kernel_size = 3,stride = 1,padding = 1),

nn.ReLU(),

nn.MaxPool2d(stride = 2,kernel_size = 2)

)

self.dense = nn.Sequential(

nn.Linear(14*14*128,1024),

nn.ReLU(),

nn.Dropout(p=0.5),

)

self.out = nn.Linear(1024,10)

def forward(self, x):

x = self.conv1(x)

x = x.view(-1,14*14*128) #对参数进行扁平化

x = self.dense(x)

x = self.out(x)

return x

model = CNN()

if torch.cuda.is_available():

print("gpu start")

model.cuda()

#损失函数,交叉熵作为损失函数

cost = nn.CrossEntropyLoss()

#优化器

optimzer = torch.optim.Adam(model.parameters())

#print(model) 打印看看模型

#训练模型

n_epochs = 5

for epoch in range(n_epochs) :

running_loss = 0.0

running_correct = 0

print("Epoch{}/{}".format(epoch, n_epochs))

print("-" * 10)

for data in data_loader_train :

# print("train ing")

X_train, y_train = data

# 有GPU加下面这行,没有不用加

X_train, y_train = X_train.cuda(), y_train.cuda()

X_train, y_train = Variable(X_train), Variable(y_train)

outputs = model(X_train)

_, pred = torch.max(outputs.data, 1)

optimzer.zero_grad()

loss = cost(outputs, y_train)

loss.backward()

optimzer.step()

running_loss += loss.item()

running_correct += torch.sum(pred == y_train.data)

testing_correct = 0

for data in data_loader_test :

X_test, y_test = data

# 有GPU加下面这行,没有不用加

X_test, y_test = X_test.cuda(), y_test.cuda()

X_test, y_test = Variable(X_test), Variable(y_test)

outputs = model(X_test)

_, pred = torch.max(outputs, 1)

testing_correct += torch.sum(pred == y_test.data)

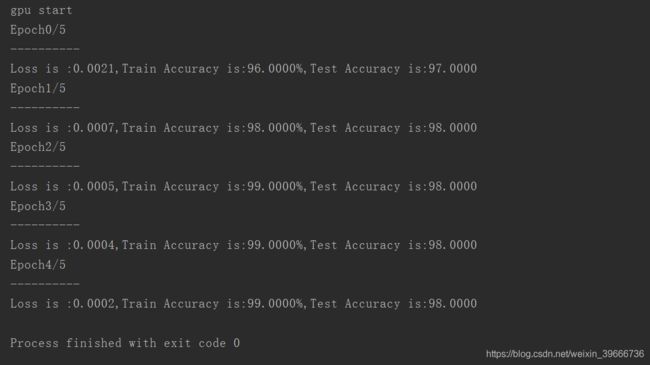

print("Loss is :{:.4f},Train Accuracy is:{:.4f}%,Test Accuracy is:{:.4f}".format(running_loss / len(data_train),

100 * running_correct / len(

data_train),

100 * testing_correct / len(

data_test)))